Introduction

In this article, we will discuss how to configure logging in the Delete activity in Azure Data Factory.

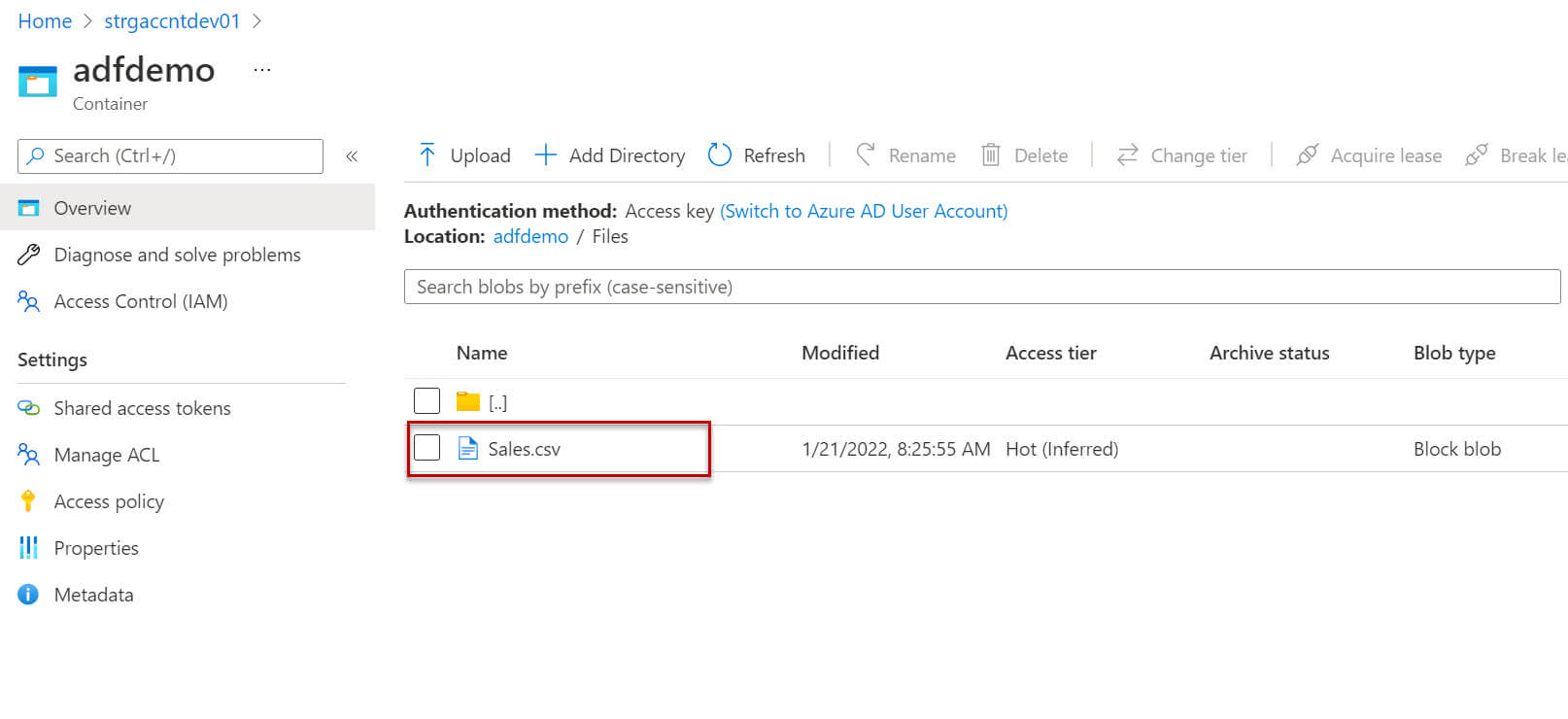

The Source File

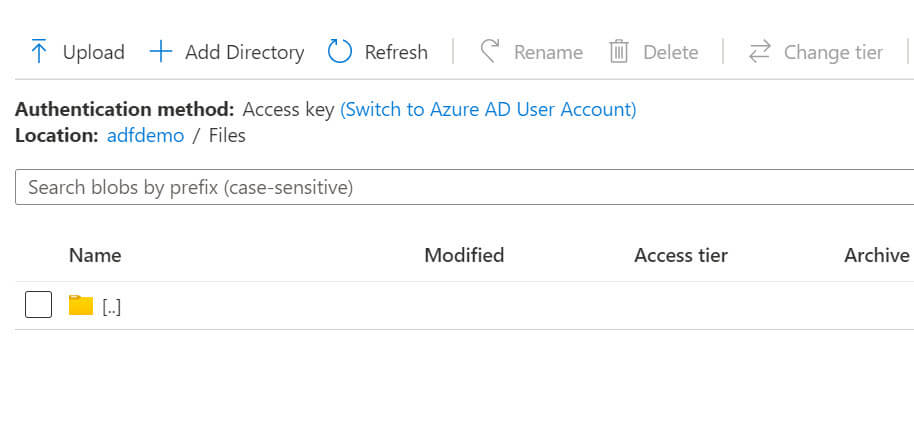

I placed a sample file, Sales.csv, in the adfdemo/Files folder.

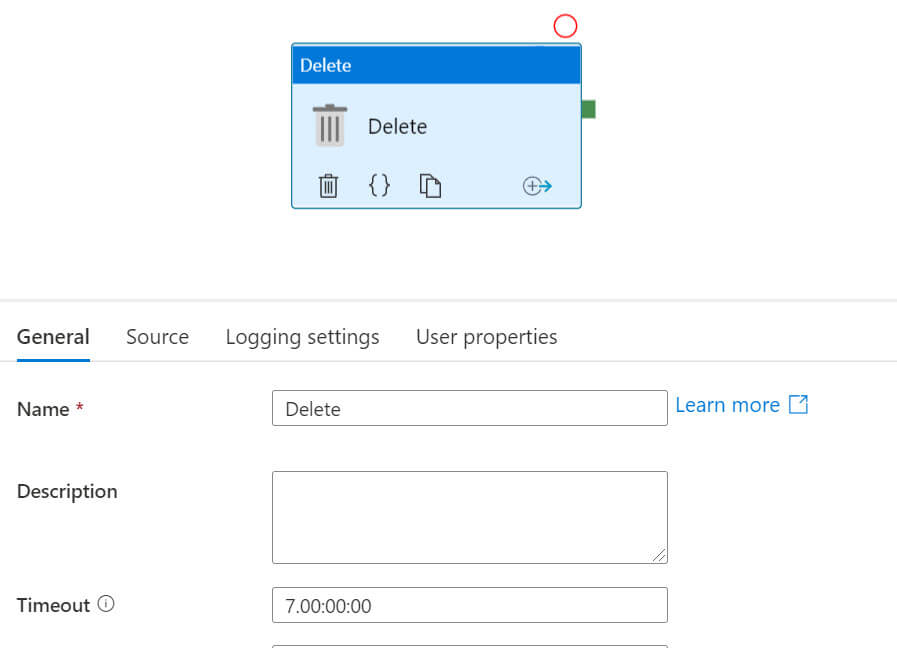

We will use the logging settings in the Delete activity:

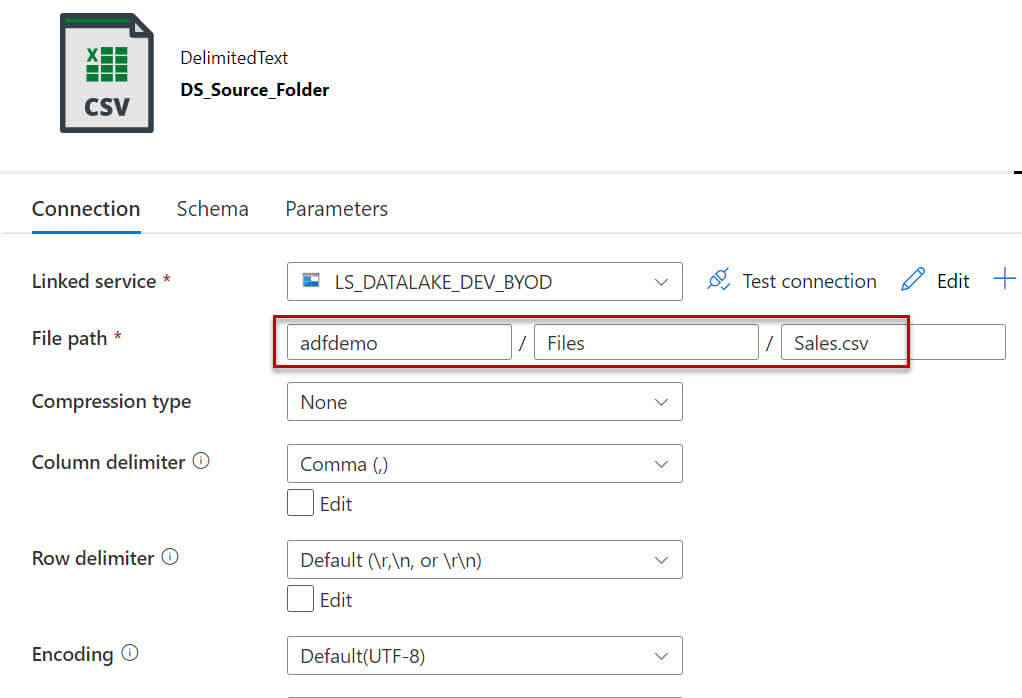

Let's configure the source dataset. I added the linked service for Azure Blob Storage, then set the source file path to adfdemo/Files/Sales.csv.

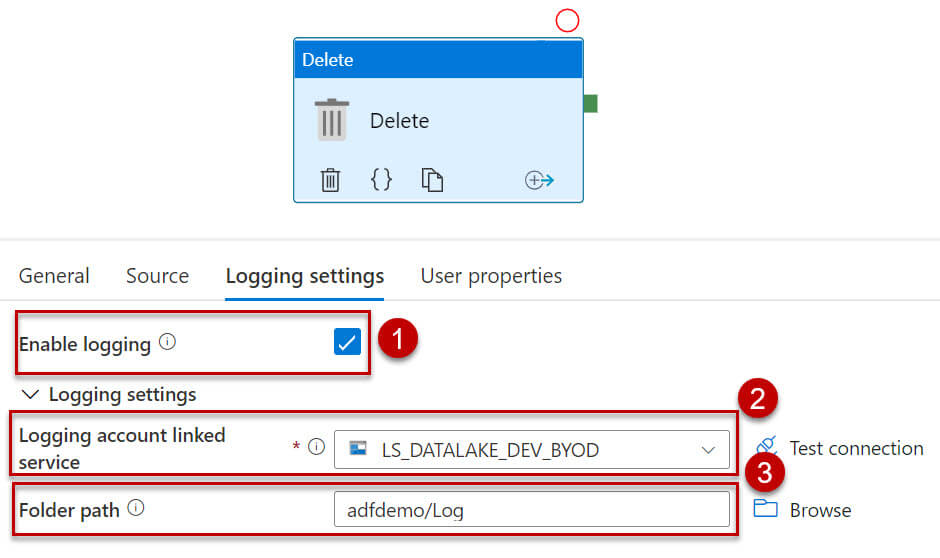
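For readers who prefer to see the dataset as JSON rather than in the designer, here is a minimal sketch in Python that builds a comparable dataset definition. It assumes that adfdemo is the container and Files is the folder, and that the dataset type is Binary (the article does not say which type was used). The names `SourceSalesFile` and `AzureBlobStorageLS` and the helper `blob_location` are hypothetical.

```python
import json


def blob_location(path: str) -> dict:
    """Split 'container/folder/file' into the parts of a blob dataset location."""
    container, _, rest = path.partition("/")
    folder, _, file_name = rest.rpartition("/")
    location = {"type": "AzureBlobStorageLocation", "container": container}
    if folder:
        location["folderPath"] = folder
    if file_name:
        location["fileName"] = file_name
    return location


source_dataset = {
    "name": "SourceSalesFile",  # hypothetical dataset name
    "properties": {
        "type": "Binary",  # assumption: the article does not state the dataset type
        "linkedServiceName": {
            "referenceName": "AzureBlobStorageLS",  # hypothetical linked service name
            "type": "LinkedServiceReference",
        },
        "typeProperties": {"location": blob_location("adfdemo/Files/Sales.csv")},
    },
}

print(json.dumps(source_dataset, indent=2))
```

The printed JSON can be compared with the code view of the dataset in the designer to see how the path is split.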

Now I will configure the log settings as below:

- Enable logging: Must be enabled for the activity to write a log file.

- Logging account linked service: The linked service for the storage account where the log is written.

- Folder path: The folder path where the log file is created.

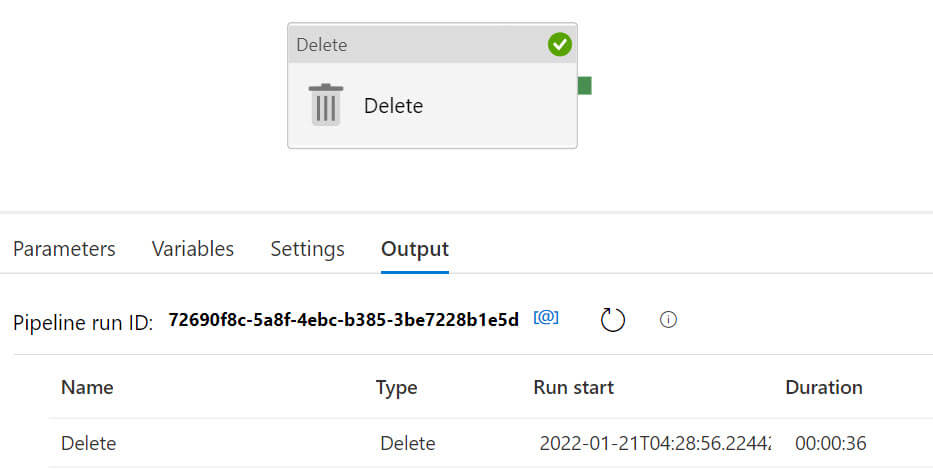
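The three settings above correspond to the `enableLogging` and `logStorageSettings` properties in the Delete activity's JSON definition. The sketch below builds such a definition as a Python dictionary so the structure is easy to inspect. The activity, dataset, and linked service names and the log path `adfdemo/Logs` are hypothetical, and the `storeSettings` type assumes an Azure Blob Storage source as used in this article.

```python
import json

delete_activity = {
    "name": "DeleteSalesFile",  # hypothetical activity name
    "type": "Delete",
    "typeProperties": {
        "dataset": {
            "referenceName": "SourceSalesFile",  # hypothetical dataset name
            "type": "DatasetReference",
        },
        "storeSettings": {
            "type": "AzureBlobStorageReadSettings",  # assumes a Blob Storage source
            "recursive": False,
        },
        # "Enable logging" in the designer
        "enableLogging": True,
        "logStorageSettings": {
            # "Logging account linked service" in the designer
            "linkedServiceName": {
                "referenceName": "LoggingStorageLS",  # hypothetical linked service name
                "type": "LinkedServiceReference",
            },
            # "Folder path" in the designer
            "path": "adfdemo/Logs",  # hypothetical log folder
        },
    },
}

print(json.dumps(delete_activity, indent=2))
```

This is only an illustration of the shape of the definition; the code view of the pipeline in the designer shows the actual JSON for your own activity.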

Let's run the pipeline:

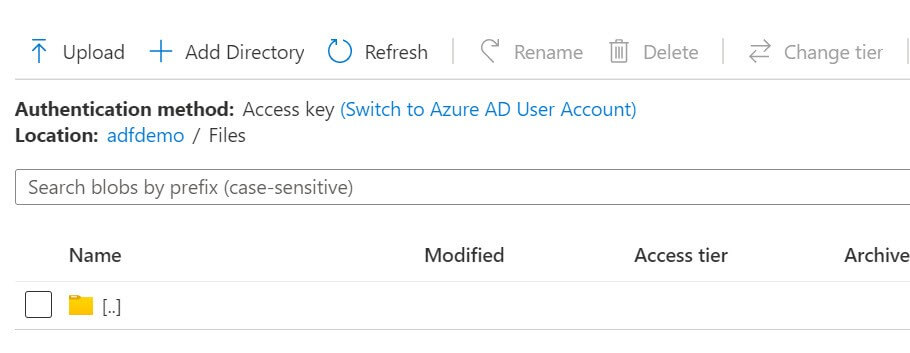

The source file is deleted from the source folder:

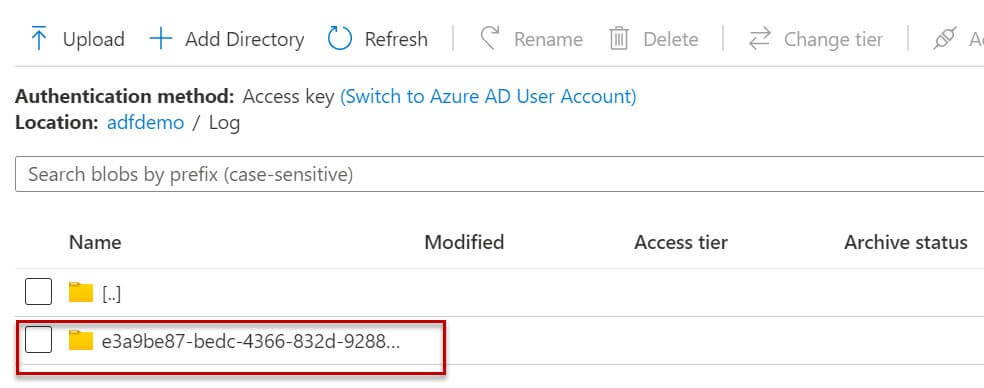

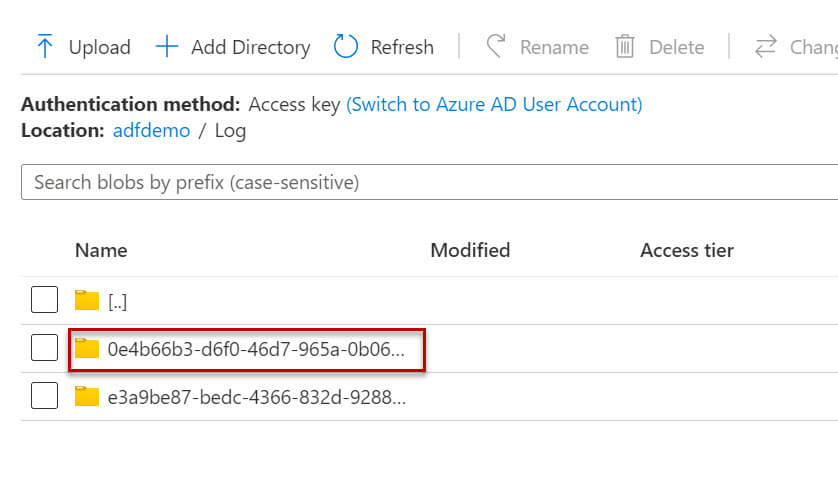

A new folder is created in the log folder:

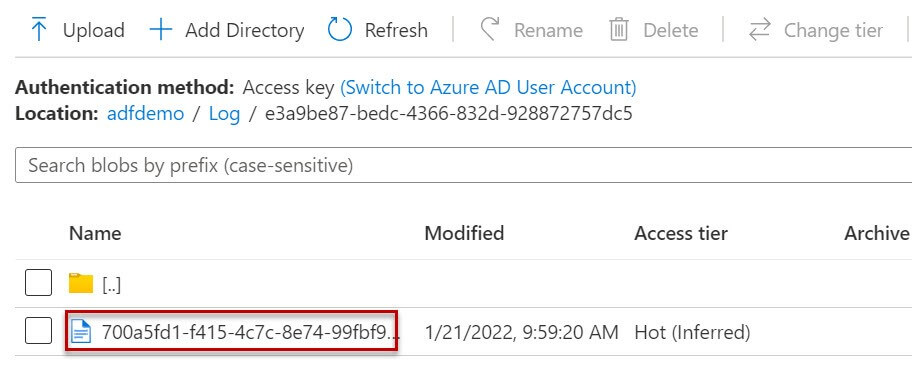

A new log file is created inside that folder:

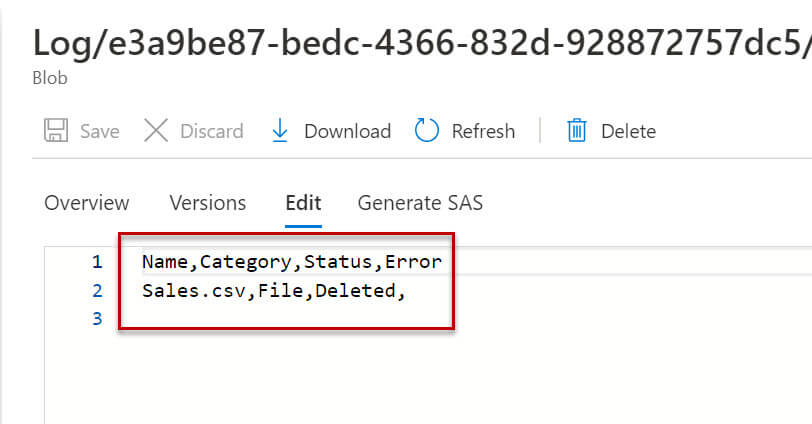

Now I open the log file. It has four columns, as below:

- Name - Name of the deleted file or folder.

- Category - Specifies whether it is a file or a folder.

- Status - Delete status.

- Error - Error message if any.

Every time the Delete activity runs, it creates a new log file inside a new folder under the log folder.

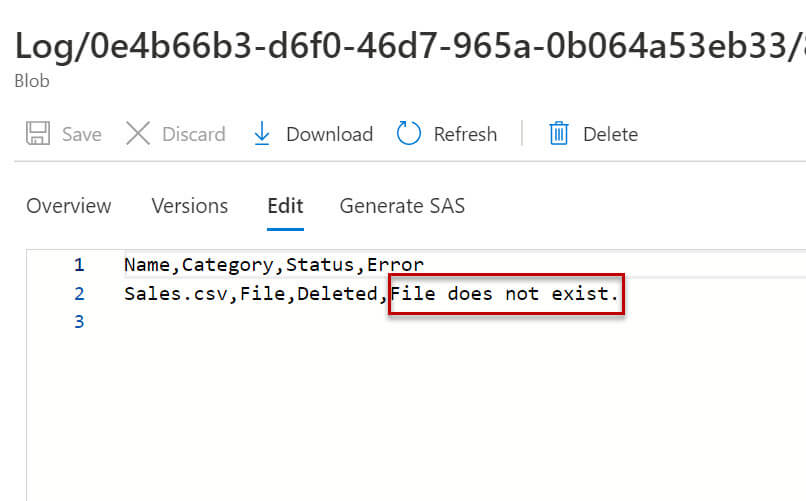
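Because the log is a plain CSV file with the four columns described above, it is easy to check programmatically once downloaded. The following is a minimal sketch using Python's standard `csv` module. The sample row, including the `Deleted` status value and the file name, is an assumed illustration rather than the actual content of the log shown in the screenshot, and the helper names are hypothetical.

```python
import csv
import io

# Assumed sample content with the four documented columns.
SAMPLE_LOG = """Name,Category,Status,Error
Files/Sales.csv,File,Deleted,
"""


def read_delete_log(text: str) -> list:
    """Parse the log text into a list of dictionaries keyed by column name."""
    return list(csv.DictReader(io.StringIO(text)))


def rows_with_errors(rows: list) -> list:
    """Return only the rows whose Error column is not empty."""
    return [row for row in rows if (row.get("Error") or "").strip()]


rows = read_delete_log(SAMPLE_LOG)
for row in rows:
    print(row["Name"], row["Category"], row["Status"])

failed = rows_with_errors(rows)
print("Rows with errors:", len(failed))
```

Filtering on the Error column rather than on a specific Status value avoids depending on the exact status strings the service writes.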

Now we will run the pipeline once again, this time with no file in the source folder, since Sales.csv has already been deleted:

The log file is created:

I opened the log file, and the Error column shows the message "File does not exist" because there was no source file in the folder.

Conclusion

In this article, we discussed how to use the log file in the Delete activity. The log file is very useful for checking the delete status of the specified files or folders.