SQL 2022 is here! Lets back up our databases directly to GCS!

This new release of SQL Server has added functionality to allow you to back up databases directly to S3-compatible object storage. You can read more details about it by following this link.

Be sure to understand the limitations of this feature, and the risk of being a daring, cutting-edge, no-helmet wearing, implementer of it.

Many DBAs who host self-managed SQL Server instances in GCP will likely have stumbled across documentation suggesting they back up their databases to local drives and then copy them into GCS for long-term storage. This pattern will work but it brings about some risk and additional cost. Wouldn't it be nice to just back the DB up directly into GCS? Don't worry! SQL 2022 has you covered!

Steps to backup SQL DBs directly to GCS

To get started, we need to set up the GCP Cloud Console Configuration as follows.

- First you'll need to create a destination GCS bucket. For this example, I created a multi-region standard GCS bucket in GCP called my_company_name__gcs_sql_backup_bucket_test.

- Take note of your GSUtil URL as you'll need it later: gs://my_company_name__gcs_sql_backup_bucket_test

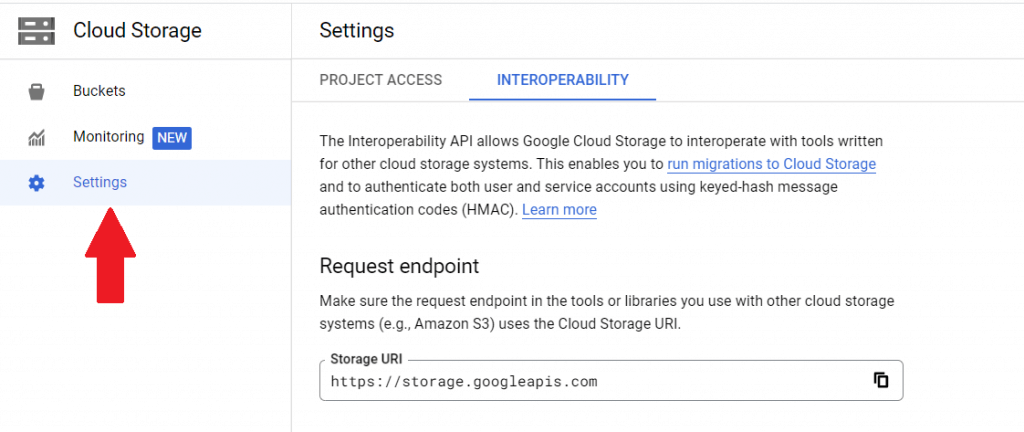

- Next you'll want to navigate in the cloud console to the Cloud Storage, Interoperability settings tab for your GCS bucket.

Take note of the Storage URI (you'll need it later)

Take note of the Storage URI (you'll need it later) - Next you'll scroll down and create an HMAC key for authentication. I used one tied to my personal account for testing, in production it would make sense to use one tied to a service account. Creating this HMAC key will give you an Access Key and a Secret. You'll need both of them later so be sure to save them.

Crafting the S3 URL

To get started we will translate the "GCS URL" into a "S3 URL" that we can point the backup to. The Microsoft documentation tells you that you'll need a URL that looks like one of these

- Path style example:

s3://<endpoint>:<port>/<bucket> - Virtual host example:

s3://<bucket>.<domain>

It's simple to translate a GCS bucket URL to an S3 URL. All you need is to combine the the Interoperability Storage URI, "https://storage.googleapis.com", and the bucket name or GSUtil URL: "gs://my_company_name__gcs_sql_backup_bucket_test"

Like So,

Path Style:

s3://storage.googleapis.com/my_company_name__gcs_sql_backup_bucket_testVirtual Host:

s3://my_company_name__gcs_sql_backup_bucket_test.storage.googleapis.com

Creating a Credential

For us to successfully use a credential to connect to the S3 bucket we'll need the HMAC Access Key and Secret we created above. The Credential URL has to be created using the same format (Path Style or Virtual Host style) that your backup statement will use. I do not see any harm in creating it both ways to enable either syntax to be used aside from having to maintain it twice.

Per the MS documentation the IDENTITY line must be specified exactly as I have supplied below.

-- Path Style CREATE CREDENTIAL [s3://storage.googleapis.com/my_company_name__gcs_sql_backup_bucket_test] WITH IDENTITY = 'S3 Access Key', SECRET = '<AccessKey>:<Secret>'; -- Virtual Host Style CREATE CREDENTIAL [s3://my_company_name__gcs_sql_backup_bucket_test.storage.googleapis.com] WITH IDENTITY = 'S3 Access Key', SECRET = '<AccessKey>:<Secret>';

Backing UP to a GCS Bucket!

All we need now is to use one of our S3 URLs and we should be able to write our first backup directly to GCS.

-- Path Style BACKUP DATABASE [master] TO URL = 's3://my_company_name__gcs_sql_backup_bucket_test.storage.googleapis.com/master.bak' WITH COMPRESSION, STATS = 10 -- Virtual Host Style -- With cert based Encryption BACKUP DATABASE [master] TO URL = 's3://storage.googleapis.com/my_company_name__gcs_sql_backup_bucket_test/master2.bak' WITH COMPRESSION, stats= 10 ENCRYPTION (ALGORITHM = AES_256, SERVER CERTIFICATE = My_Encryption_Cert) -- For Large DBs be sure to read docs about Multi URL, Increased Transfer Size BACKUP DATABASE [userdb] TO URL = 's3://storage.googleapis.com/my_company_name__gcs_sql_backup_bucket_test/userdb_0.bak' , URL = 's3://storage.googleapis.com/my_company_name__gcs_sql_backup_bucket_test/userdb_1.bak' , URL = 's3://storage.googleapis.com/my_company_name__gcs_sql_backup_bucket_test/userdb_2.bak' WITH FORMAT, MAXTRANSFERSIZE = 20971520, COMPRESSION

If you didn't test a RESTORE you didn't do your job!

-- Yes, I can still get a file list RESTORE FILELISTONLY FROM URL = 's3://storage.googleapis.com/my_company_name__gcs_sql_backup_bucket_test/master2.bak' -- Yes, I can still restore the DB RESTORE DATABASE master_restored FROM URL = 's3://storage.googleapis.com/my_company_name__gcs_sql_backup_bucket_test/master2.bak' WITH MOVE 'master' TO 'S:\MSSQL$Data\MSSQL15.MSSQLSERVER\MSSQL\DATA\master2.mdf' , MOVE 'mastlog' TO'S:\MSSQL$Data\MSSQL15.MSSQLSERVER\MSSQL\DATA\mastlog2.ldf' -- Yes, I can still accidentally, or purposefully overwrite a DB /* RESTORE DATABASE master_restored FROM URL = 's3://storage.googleapis.com/my_company_name__gcs_sql_backup_bucket_test/master2.bak' WITH REPLACE */

You're not done yet!

Test restores to a different instance, maybe in a different region (DR)? Yep, you're going to need those credentials over there and any Backup encryption certs!!

I have learned over the year's it is always best to prepare for a worst case scenario. For example what would you do if your laptop and your entire DC just got ransomware encrypted. Understand that just having backups written to a file share is not enough if that share can get accidentally or maliciously deleted or encrypted. It's always a good idea to have some Airlocked copies of backups and encryption certificates to prevent a complete disaster.

I hope this helps kickstart any others who are taking a look at this great new feature!

Thanks for reading!

-Michael Abair

Take note of the Storage URI (you'll need it later)

Take note of the Storage URI (you'll need it later)