One of the great things about SQL Server is that the platform has grown to include a number of configuration options and switches that allow a DBA to get the most performance from their instance that is possible. One of the downsides of the platform, however, is that any sysadmin can make changes (or db_owner for database configuration) without an easy way to audit the changes. There is an entry in the error log, but parsing this data is neither easy, nor something that lends itself to easy alerting and reporting for DBAs.

This article explores how one might go about tracking changes to an instance or database and producing a report that a DBA can easily view. We also look at how alerting might take place in a third party monitoring solution, using Redgate's SQL Monitor.

Disclosure: I work for Redgate Software

Detecting Changes

Not to be too esoteric, but detecting change means that we need to know what a value is or should be. In other words, what is our baseline for a particular value of our configuration?

For any particular instance (or database), we need to determine the values that work best for that environment. There are good, and sometimes best, practices, or there may be standards for our organization. However, those might be overloaded or changed for a particular environment. That means that our tests for changes or deviations need to be adjustable as needed.

To detect change, we really need a few things.

- A storage location for our baseline (table)

- A method to load this storage with our baseline (procedure)

- A method to detect if the current configuration is different from the baseline (procedure)

- A method to update our baseline with new values

I would also argue that we might want to audit this over time, or track changes, so we ought to include a date in our storage location. Our procedure to update the baseline also might want to store previously changed values so that we have an audit trail. This allows us the ability to see what's changed.

Capturing the Baseline

Let's start by storing the data we want to check for configuration changes. This article will start with instance configuration, which is stored in master.sys.configurations. If we check this table, we find there are a number of columns, which correspond to the output of the sp_configure stored procedure. We see these columns:

- configuration_id

- name

- value

- minimum

- maximum

- value_in_use

- description

- is_dynamic

- is_advanced

Of these columns, we are really interested if the "value" changes for any particular setting. Whether this is actually in use or not isn't important. If it is, then the value and value_in_use columns are the same. If only value is changed, potentially this is a change that will be implemented on the server restart or the execution of the RECONFIGURE command. Either of those could result in a deviation in instance performance, so we want to alert someone when there is a change.

I will create a table in a DBA database to store the values I care about. In particular, I add a surrogate PK for the table (always a good idea), a date field for capturing when we are storing the information, and a flag for an audit record. My table looks like this:

CREATE DATABASE DBAAdmin GO USE DBAAdmin GO CREATE TABLE dbo.ConfigValue ( ConfigValueID INT NOT NULL IDENTITY(1,1) CONSTRAINT ConfigValuePK PRIMARY KEY , ConfigValueDate DATETIME2(3) , Configuration_ID SMALLINT , ConfigName VARCHAR(50) , ConfigValue INT , ConfigValueInUse INT , AuditRecord INT DEFAULT 0 ) GO CREATE INDEX ConfigValue_IDX_Date ON dbo.ConfigValue (ConfigValueDate) GO

I also add an index, since I know there will be queries by date. To load this table, I can easily insert from sys.configurations, but since I may update the baseline regularly, let's create a procedure that we can easily call to repeat this process. Here is the procedure.

CREATE PROCEDURE LoadCurrentConfigValue AS BEGIN INSERT dbo.ConfigValue ( ConfigValueDate, Configuration_ID, ConfigName, ConfigValue, ConfigValueInUse, AuditRecord ) SELECT GETDATE(), configuration_id, [name], CAST( [value] AS INT), CAST( value_in_use AS INT), 0 FROM master.sys.configurations END GO

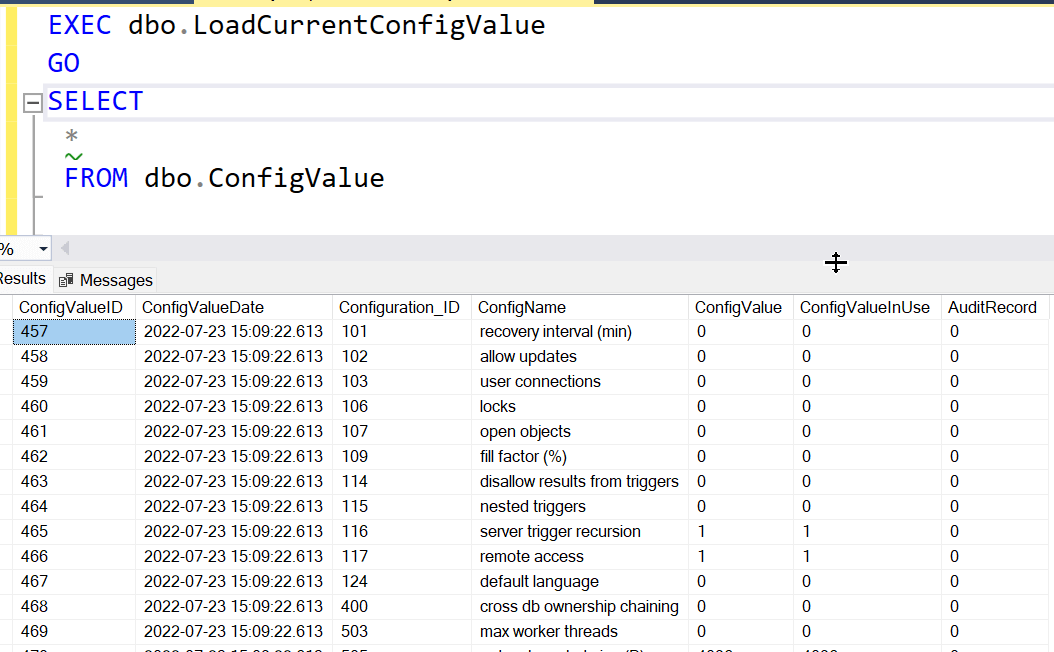

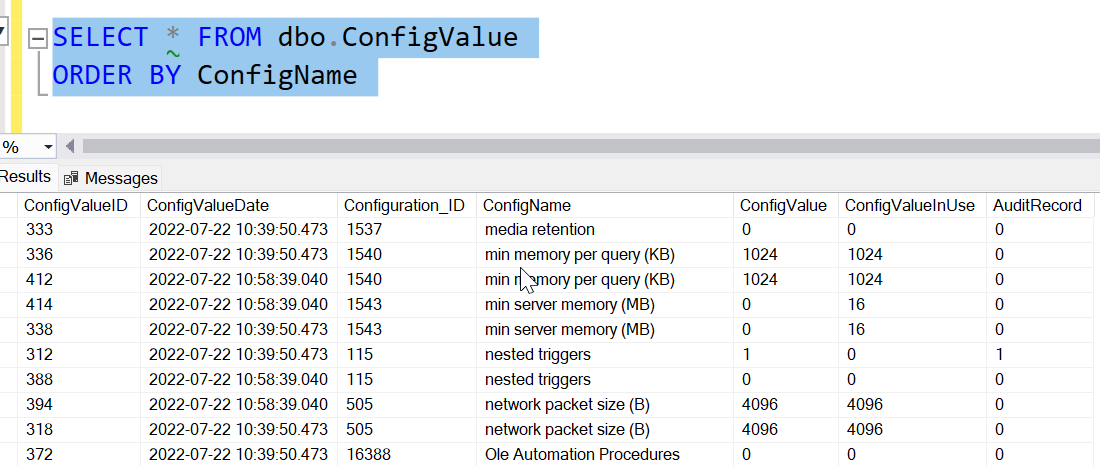

If I execute this procedure on SQL Server 2016, I will get 76 rows inserted into my table. These represent the current configuration values on my instance, as of the execution time, captured by the ConfigValueDate column. You can see the result of me querying the table below.

[image]

Checking for Changes

I will use a procedure to check for changes, which I can easily run at any time. This procedure will compare the current values to what is stored in the table and then return a count of differences. Many alerting or monitoring programs do better with checking for counts of changes and use those for alerting, rather than worrying about capturing and formatting data needed. Whether I use a bespoke or third party system, I can always get more details of changes and send those in an alert if needed.

My procedure needs to do the following:

- find the latest ConfigValueDate in my table and store that

- join my ConfigValue table with sys.configurations

- count the differences based on the name matching in both tables, the latest date matching and the config values being different

I can easily write this as follows. First, get the most recent date in the table. Since we might have multiple baselines loaded, we want the most recent one. Now, join with the DMV on the ID. This is set for a particular version of SQL Server. We also match in the date we found at the beginning of the procedure, and we filter if the values are different.

CREATE PROCEDURE dbo.CheckConfigValue AS BEGIN -- Get latest date of config values DECLARE @dt DATETIME2(3) select @dt = Max(ConfigValueDate) FROM dbo.ConfigValue -- return different records SELECT COUNT(*) 'configdiff' FROM dbo.ConfigValue c INNER JOIN master.sys.configurations mc ON c.Configuration_ID = mc.configuration_id AND c.ConfigValueDate = @dt WHERE c.ConfigValue <> mc.[value] END GO

However, I also want to note that any changes are really audit records that I want to keep. Therefore, I will also use this same stucture to add one more specification to my procedure. I need to do the following:

- find the latest ConfigValueDate in my table and store that

- join my ConfigValue table with sys.configurations

- count the differences based on the name matching in both tables, the latest date matching and the config values being different

- mark rows with differences as audit records

This changes my procedure code as shown here. Note, I've done the "work" of marking audit records first adn then returning the result set at the end. I find many other developers will look at the end of a procedure first for results, so I'll leave those there. In this case, I use an UDPATE statement with the same criteria to set the auditrecord column to 1.

CREATE PROCEDURE dbo.CheckConfigValue AS BEGIN -- Get latest date of config values DECLARE @dt DATETIME2(3) select @dt = Max(ConfigValueDate) FROM dbo.ConfigValue -- capture audit record of diff values UPDATE c SET AuditRecord = 1 FROM dbo.ConfigValue c INNER JOIN master.sys.configurations mc ON c.Configuration_ID = mc.configuration_id AND c.ConfigValueDate = @dt WHERE c.ConfigValue <> mc.[value] -- return different records SELECT COUNT(*) 'configdiff' FROM dbo.ConfigValue c INNER JOIN master.sys.configurations mc ON c.Configuration_ID = mc.configuration_id AND c.ConfigValueDate = @dt WHERE c.ConfigValue <> mc.[value] END GO

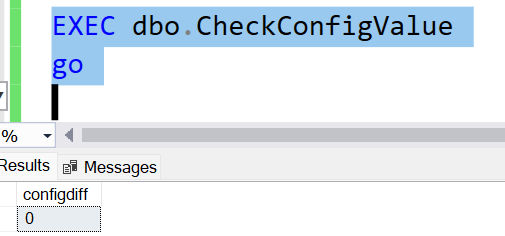

If I run this, I get a 0, because the bvalues in my table match the instance. Let's do a little testing on if this works. I'll run this code:

EXEC sys.sp_configure @configname = 'nested triggers', -- varchar(35) @configvalue = 1

When I run this, I've changed a value. If I run EXEC dbo.CheckConfigValue, the result is a 1. This shows me that I've actually detected a change. You can see a screenshow of this working below.

Note: I did not need to run RECONFIGURE to detect the change.

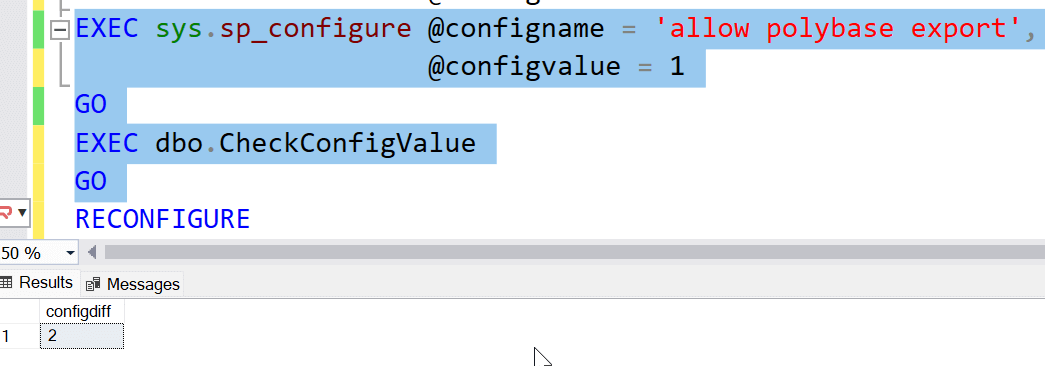

If I make another change, I'll see another value. Let's change another setting. You can see in the screenshow below that a second change is detected. This is good.

Updating the Baseline

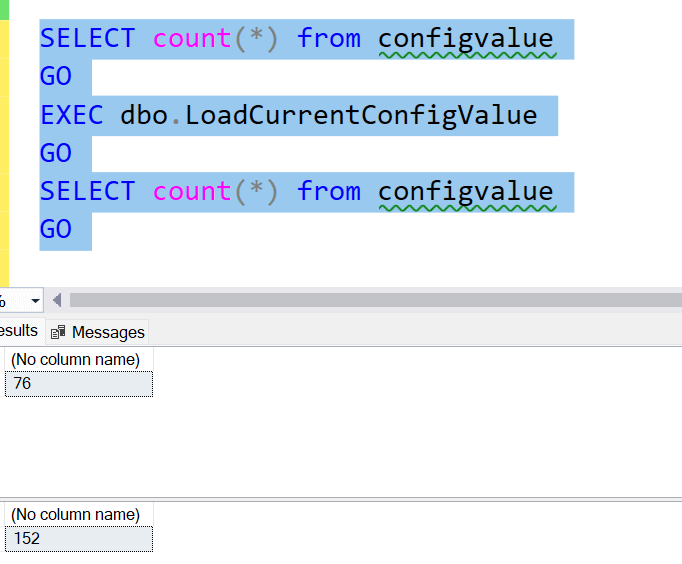

Let's assume that I think these changes actually make sense. I now want to update the baseline. To do this, I can re-run my load procedure, which will load the latest values into my table. I'll show this by counting rows before and after.

Now I have the latest values, plus the previous ones. If I now run a check, I should see 0 changes. This is what I see.

Data Management

One of the things I think is important is managing data over time. Many third party monitoring products do this well, removing data after some time, or even avoiding repeating values to limit data storage. We want to do the same thing here, with a mini-cleanup process.

If we look at the table, we see a complete set of duplicate values for the various settings. I've ordered things by the name, so you can see this below:

When we look at this sample of data, you can see there are two rows for each setting that are duplicate except for the PK and the datetime value. However, for the setting we changed (and detected the change), one record has a audit marking and one does not. These records marked as audit records are really the only ones we want to keep. The rest of the data, which is unchanged settings from the baseline on the earlier date, are not needed.

Let's remove them. To do this, we create a third procedure that is designed to help us remove unnecessary date. This procedure will look for the max date in the table, which is the most recent baseline. Everything before this can be removed IF it is not marked as an audit record. We use that criteria in the procedure DML statement.

There is one filter at the beginning of the procedure ensures we have more than one baseline date. If there is only one, we don't want to remove the current one, so we do not delete anything.

CREATE PROCEDURE RemoveOldConfigValue

AS

BEGIN

IF

(SELECT COUNT(DISTINCT ConfigValueDate)FROM dbo.ConfigValue

) > 1

BEGIN

DECLARE @dt DATETIME2(3);

SELECT @dt = MAX(ConfigValueDate)

FROM dbo.ConfigValue;

DELETE dbo.ConfigValue

WHERE ConfigValueDate < @dt

AND AuditRecord = 0;

END;

END;If we run this, then our count of records drops to 78. This is 76 rows for the current baseline and two rows for the audit records.

As I continue to load, check, and remove records with my procedure, I'll keep a history of configuration changes. If we don't ever change anything for this set of configuration values, I'll never have more than 78 rows.

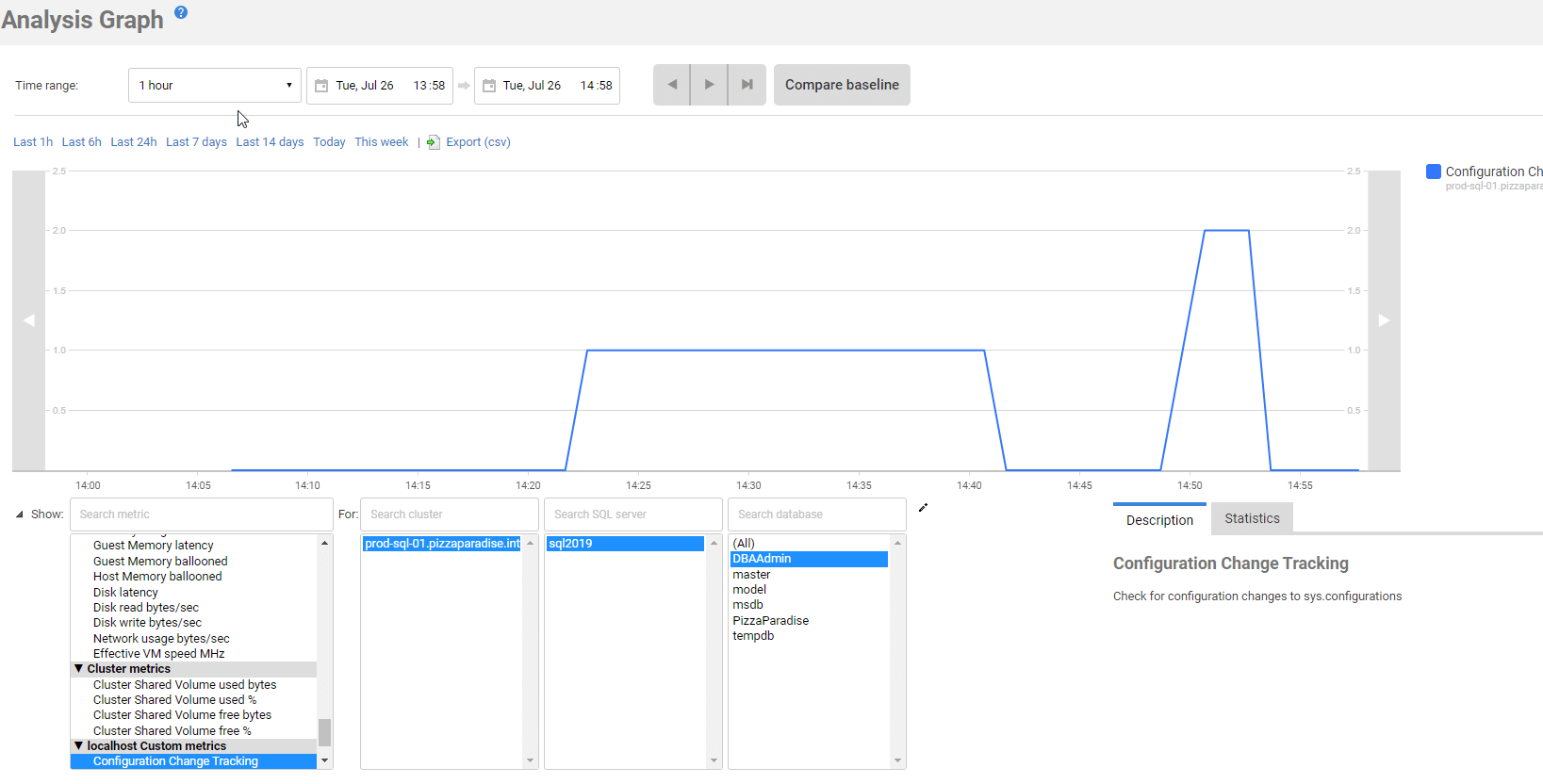

Alerting on Changes with SQL Monitor

One of the tasks you might want to implement as a part of detecting configuration changes is to have an alert that lets a sysadmin or DBA know that something has changed. Many monitoring systems have a facility for alerting sysadmins, but they might not accurately track this particular type of change. I'll show how we can add alerting to this configuration tracking using SQL Monitor from Redgate Software.

Disclosure: I work for Redgate Software

SQL Monitor include a facility for adding custom metrics that the software will track. To add alerting, we will add a custom metric to SQL Monitor that looks at the results from our EXEC dbo.CheckConfigValue procedure.

To do this, we will go to the custom metric section in SQL Monitor.

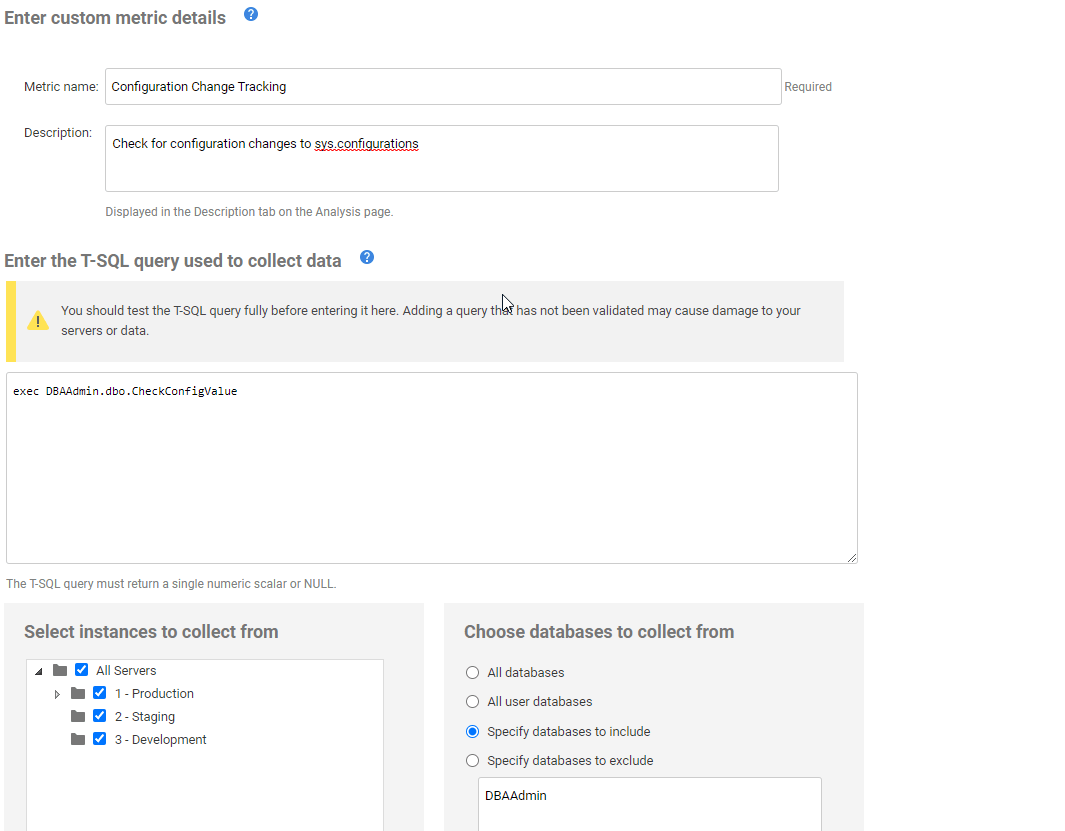

We add a new metric, which is designed to run our check procedure. The load/remove are processes that we run manually when we decide we've changed something and need to reset a baseline. Here are the values I'd set for the custom metric. I give it a name and specify the stored procedure to query. I also limit this to the DBAAdmin database, as that's where I run this from.

Note: I'd want this same database to be on every instance.

The important part of this process is to not check the calculated measure. We want to know when this is different from the baseline, not the change in the value. Really, any value above zero is an issue for us. If we like a change, we should reset the baseline.

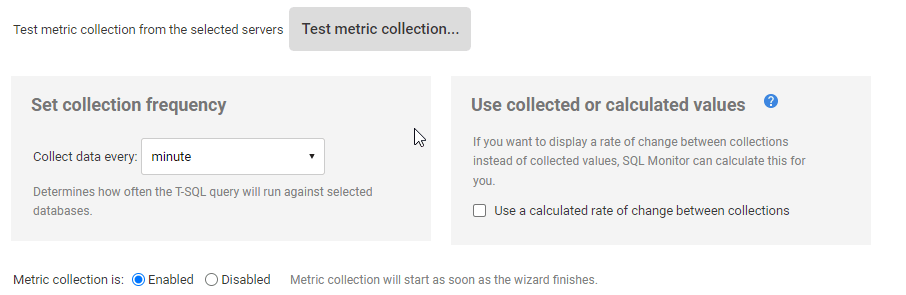

I also set this for every minute. I expect rare changes, and really once a day usually is good enough for me, but you can decide what matters to you. Perhaps you only set this to check once a day.

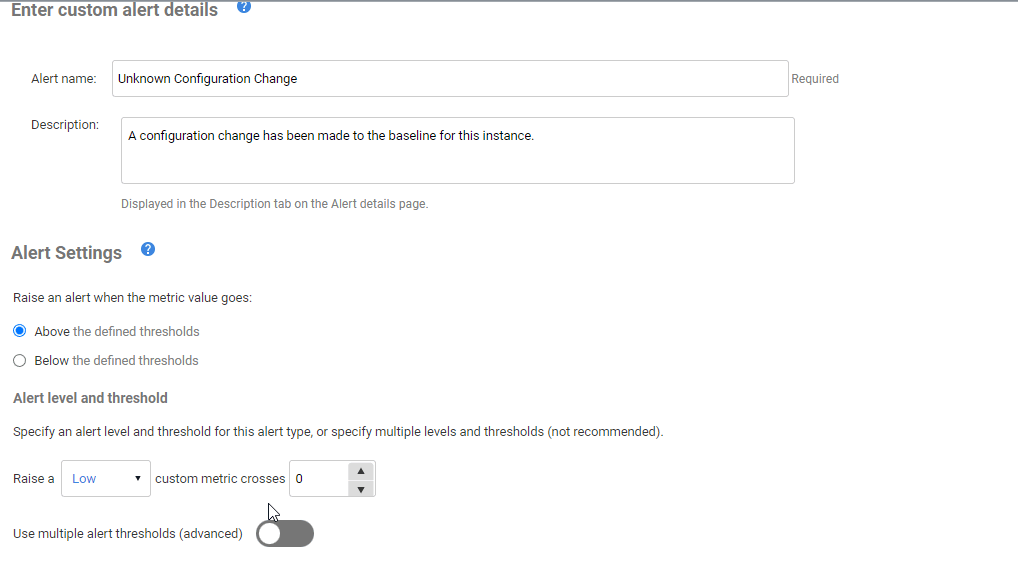

In the alerting section, we alert if there is any change. I give the alert a name and description, noting this is a change to the baseline. I then have an alert fire when this changes for any value. I set this as a low priority alert, but you might choose to make this a higher level. Depending on the instance, I might consider this a medium alert. Since many config changes are unlikely to cause an outage, I don't know that I'd set this as a high alert.

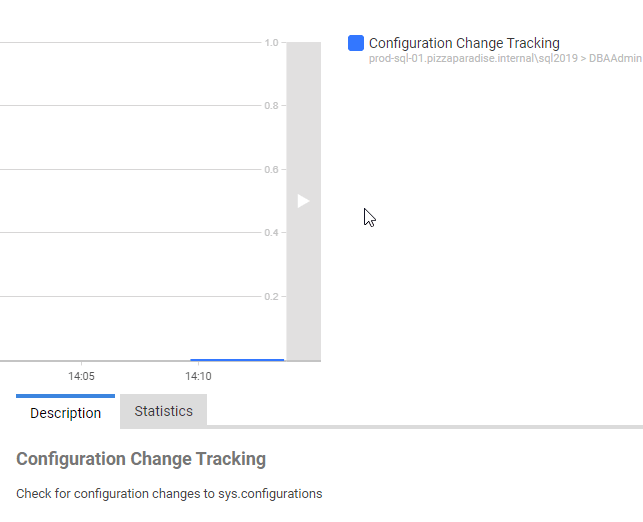

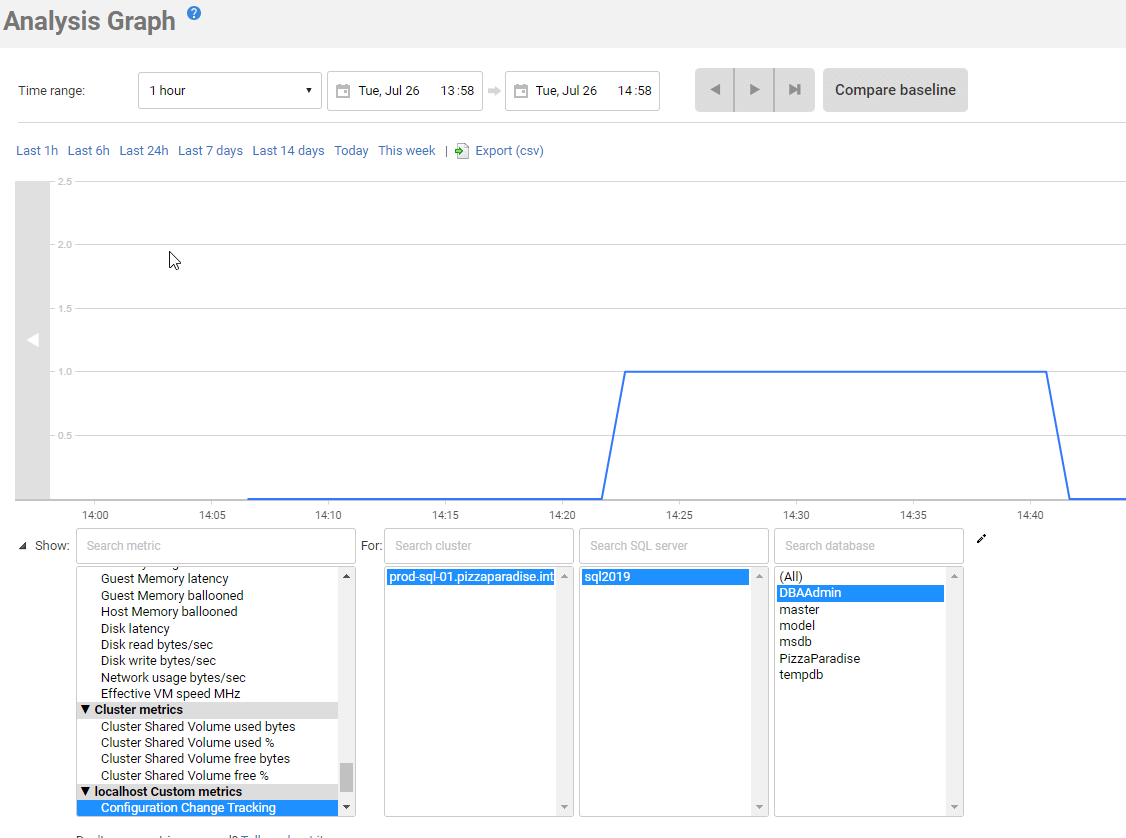

Now, I can let this run and then go check the Analysis graph at some point. I should see some data, which is all zeros for the time period before any changes are made.

Now I'll make a change. As shown earlier in the article, I'll change nested triggers. When I do that, I will see a change in the graph. If I reset the value back, it matches the baseline again, and the graph notes this.

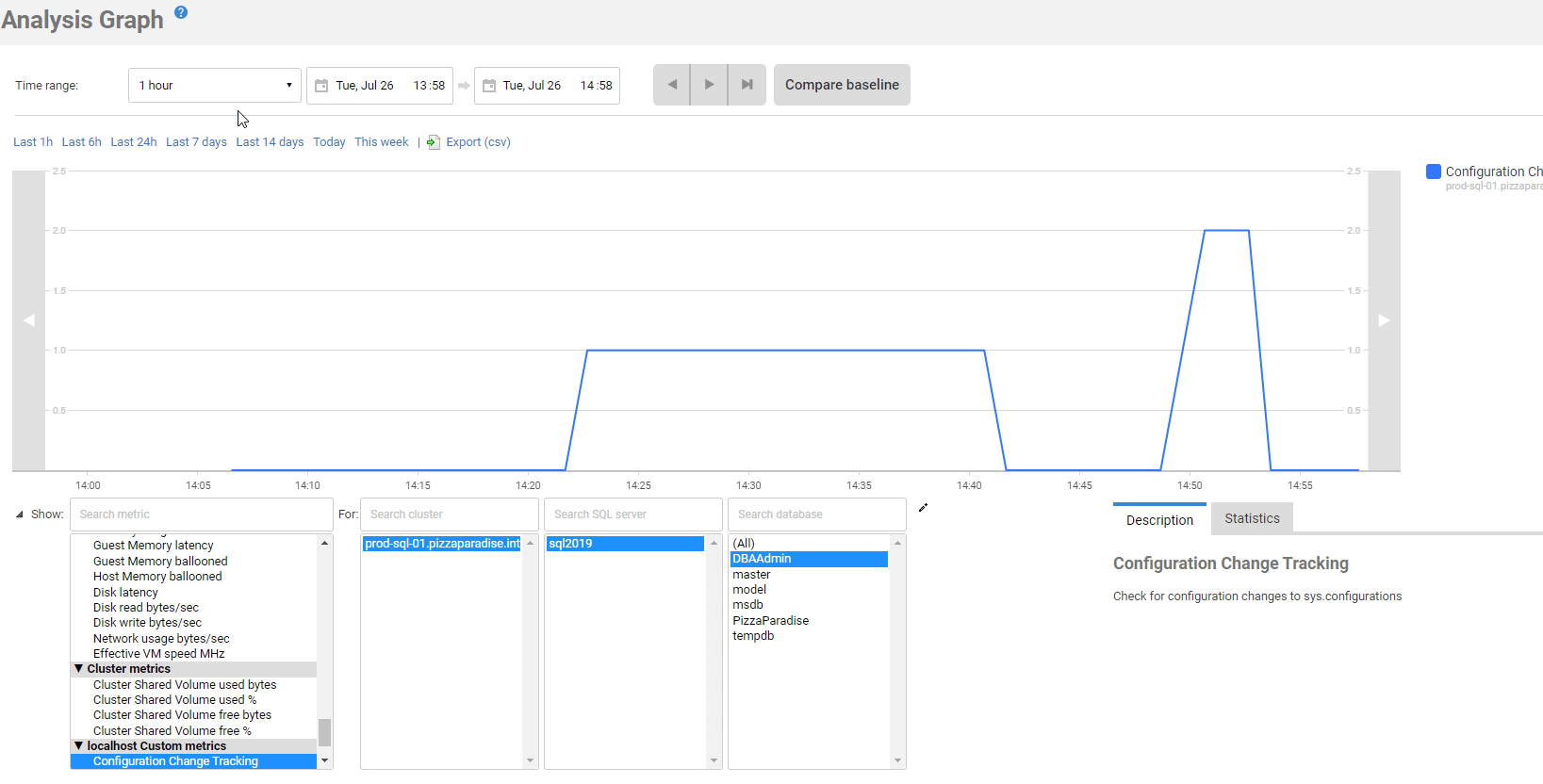

If I make a second change, I'll see another marker in the graph. Here I've re-made my nested trigger change and added another update to "allow polybase export". We see two updates in the graph.

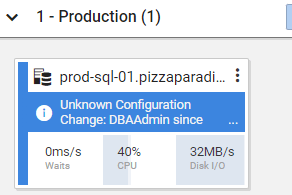

My front tile in the Global Dashboard also shows there is an alert (low) that has fired.

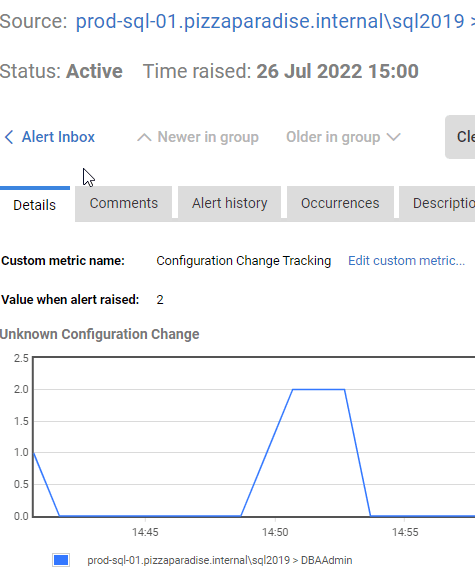

The detail of the alert also show my graph for me. This gives me some view into the changes that have taken place and I can confirm my testing of various settings here.

With this information, I can then investigate, check the error log, and more to determine if this change should be allowed.

Summary

This article presents a way that you can capture and detect changes to configuration settings without parsing the error log. This also allows you to capture the audit data over time of changes made to your configuration settings. This also minimizes the data captured by removing all rows that are not needed as audit records. This also avoids the repetition of data by showing many changes made by a DBA that might experiment with settings.

This is not a complete solution for tracking configuration changes, nor is it the best way to implement this monitoring solution. This article shows the name of the setting being tracked, but that's unnecessary. The configuration_id is sufficient for these purposes, and you can join back to sys.configurations for the name if needed. This article also doesn't cover other configuration settings, such as trace flags, database scoped configuration, and other values. However, you can easily duplicate this process for other tables/DMVs as needed.

Third party monitoring systems are valuable, and while they may let you know a change was made, they often may not help you determine what the baseline is, especially if a DBA makes a few changes to test things across a short period. Not all settings are as simple as a 0 or 1 setting, so having a baseline value is important. This article has shown how to integrate SQL Monitor with this tracking, but other systems can likely read this data in a similar manner.