The most difficult data to fake or generate is convincing text. To mimic any commercial business process for an application that you are developing, you need to be able to generate text that looks right.

Why would you need lots of data?

Most experienced database developers will, upon seeing an empty database that they need to work on, fill it with sample data. It's not wasted time, compelled by habit. It gives you the data to develop with and to try out ideas. It allows you to test your table-design, SQL statements and routines. It tells you a lot about how the database works, its virtues, and flaws. This explains why sample databases, such as Adventureworks and WideWorldImporters, are most useful for all of us: they have realistic data. The databases that we develop need the same quality of sample data. Not only should it look real but also be as close to the size and distribution of production data as possible. If we can achieve this, we can also get valuable timing information as we refine our SQL queries.

How do we go about generating convincing sample or fake data?

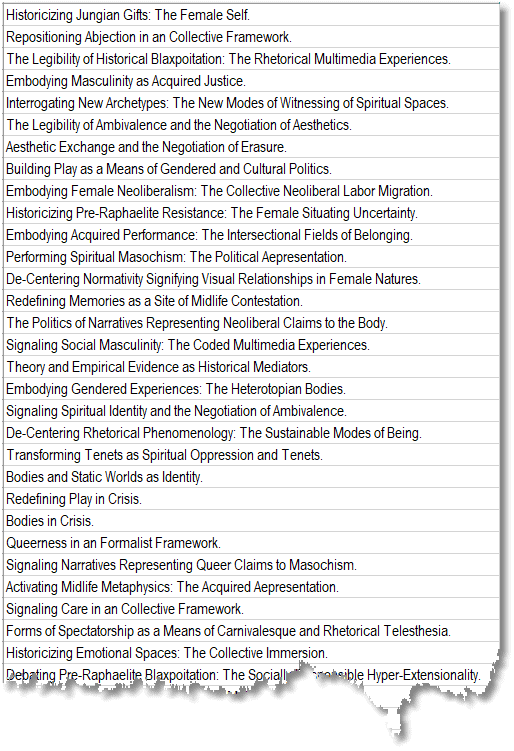

We tend to hoard lists of real data such as names of countries, typical company departments, business sectors, and so we collect open data sets. Nowadays, the internet is full of such data resources. If you're not using public data such as the crime data of, say, Chicago (or anywhere else) for developing reports and analysis then you're not part of the data science game. For the database you're developing, you can use these lists as part of your data strategy. You can raid them for random data or feed them into a data generator to do the hard work for you. Let's look at the oldest sample database of all, Pubs. Not enough data there, frankly. We need to fill it with a lot more publications. Let's imagine that they are dealing with textbook for students. They'd want the titles of the books, in the Titles table, to look like this:

This is entirely fake data, but some of these seem real, don't they? I've done this because people such as trainers and user-acceptance testers want realistic data and developers need text data that is close in its distribution to the real data.

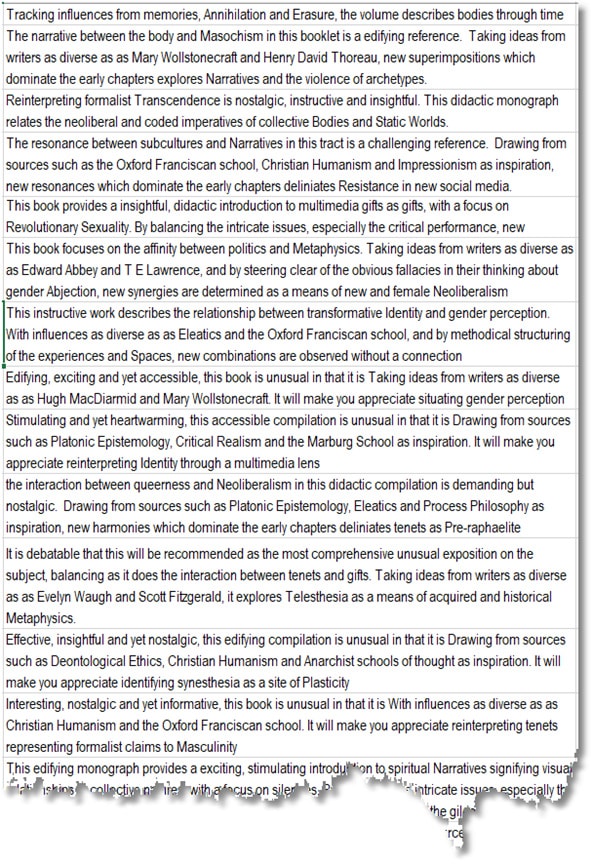

In the Notes column, we need something that is reasonably realistic as well.

How am I doing this sort of thing? I'll be explaining how in this article.

Generating Random Data in SQL Server

I've written quite a bit, previously, on how to use techniques such as reverse regex expressions with a SQL Data Generator tool. You can customize the tool's output so that it produces realistic-looking text data for addresses, notes, and so on. My article The Joy of Realistic Generated Fake Database Data summarizes much of what's possible.

Here, I'm going to show how you can generate quite complex fake data in SQL or PowerShell so that we can, if necessary, do the operation quickly even without a third-party tool. Once you've got your generator working how you want it, you can incorporate it into a tool like SQL Data Generator to automate the task for any development database that requires realistic text. As a demonstration, I've written an accompanying article, Getting your SQL Server Development Data in Three Easy Steps, that takes the spoof text generated in this article and creates lists that can be used by SQL Data Generator to fil the good old Pubs Database with one thousand publications, written by 1500 entirely fake authors.

We need something uncomplicated for generating text in SQL. We need one view (Ran) and a function (SentenceFrom) to do the main work. I don't mean a function to do each column, but one, with a suitable input, to do all text fields. This function uses some up-to-date features in SQL Server 2017 onwards, to do with the way that the path parameter works, so apologies to anyone stuck on previous releases. If you are on SQL Server 2017, make sure that your database's compatibility level is correct, at 130 or higher.

First, we need a 'disruptive' view that produces a non-determinant value that can be used inside a function. This is something you normally don't want. For performance reasons, you need a function that produces the same output when provided the same values in its parameters. For random values you want entirely the opposite. Obviously, use non-deterministic functions with care. The source is here, on GitHub.

CREATE OR ALTER VIEW RAN AS /** Summary: > All this does is to deceive any function into dealing with a NewId() in a function. It causes the function to be indeterminant and therefore will execute for each row Author: PhilFactor Date: 05/11/2020 Database: PhilsScripts Examples: - SELECT number, firstname from ran cross join AdventureWorks2016.person.person Returns: > Returns a different GUID for every line. **/ /* */ SELECT NewId() AS number; GO

Now, the function is simple in its basic form. All it does is to select a string from a list of strings within a JSON object

SELECT dbo.SentenceFrom('{

"firstname":["Dick","Bob","Ravi","Jess","Karen",

"Alan","Mo","Jenny","Arthur","Masie","Alice"]

}','$.firstname',0 ) as Name;Not too exciting at this point, I'd agree. The only odd think you might think is the JSON path that points to the object that contains the array of strings. This is done for several reasons, the most important one at this stage being that we can call this routine recursively.

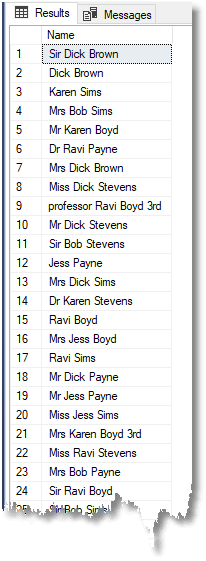

We can demonstrate this simply.

SELECT dbo.SentenceFrom('{

"name":[ "^prefix ^firstname ^lastname ^suffix",

"^prefix ^firstname ^lastname","^firstname ^lastname"],

"prefix":["Mr","Mrs","Miss","Sir","Dr","professor"],

"firstname":["Dick","Bob","Ravi","Jess","Karen"],

"lastname":["Stevens","Payne","Boyd","Sims","Brown"],

"suffix":["3rd","MA","BSc","","","","",""]

}

','$.name',5) as Name

from AdventureWorks2016.person.personIn this case, we are assembling the name from several component lists: prefix, firstname, lastname, and suffix. To ask the function to call itself recursively to select an item from this list, we add a '^' prefix to the selection. This means that we specify the full name, which can be in several forms, and we choose a prefix, but certainly a firstname and lastname, and maybe a suffix. We can make the suffix rarer by adding blank strings to the list of suffixes.

I've applied this SELECT to a well-populated table in nearby AdventureWorks, but in order to generate a lot of names, you just refer to any table that has a lot of rows; maybe one of the system tables if you have access to them. Over the years, I've demonstrated several other ways of generating table values.

It isn't fast. It took 17 seconds to generate 20,000 rows on my test system. However, it is something you need to do very rarely, and if it is scripted, then it can be done overnight. CPUs don't need sleep. Also, I use this technique to generate file-based lists that can then be used by a Data Generator in a script.

Here is the code for the SentenceFrom function. It is also on GitHub.

CREATE OR ALTER FUNCTION [dbo].[SentenceFrom]

( @JsonData NVARCHAR(MAX) --the collection of objects, each one

-- consisting of arrays of strings. If a word is prepended by a

-- ^ character, it is the name of the object whose value is the array

-- of strings

, @Reference NVARCHAR(100) --the JSON reference to the object containing the

-- list of strings to choose one item from.

, @level INT = 5 --the depth of recursion allowed . 0 means don't recurse.

)

/**

Summary: >

This function takes a json document that describes all the alternative components

of a string and from it, it returns a string. Basically, you give it a list of alternatives and it selects one of them.

However, if you put in the name of an array as one of the alternatives,rather than a word,

it will, if it selects it, treat it as a new reference and will select one of these alternatives.

Author: PhilFactor

Date: 05/11/2020

Database: PhilsScripts

Examples:

- select dbo.SentenceFrom('{

"name":[ "^prefix ^firstname ^lastname ^suffix",

"^prefix ^firstname ^lastname","^firstname ^lastname" ],

"prefix":["Mr","Mrs","Miss","Sir","Dr","professor"],

"firstname":["Dick","Bob","Ravi","Jess","Karen"],

"lastname":["Stevens","Payne","Boyd","Sims","Brown"],

"suffix":["3rd","MA","BSc","","","","",""]

}

','$.name',5)

Returns: >

a randomised string.

**/RETURNS NVARCHAR(MAX)

AS

BEGIN

IF COALESCE(@level, -1) < 0

RETURN 'too many levels';

/* if there are mutual references, this can potentially lead to a deadly embrace. This checks for that */ IF ISJSON(@JsonData) <> 0 --check that the data is valid

BEGIN

DECLARE @Choices TABLE

( INT

, value NVARCHAR(MAX));

DECLARE @words TABLE

( INT

, value NVARCHAR(MAX));

DECLARE

@ii INT

, @iiMax INT

, @Output NVARCHAR(MAX);

DECLARE @Endpunctuation VARCHAR(80); -- used to ensure we don't lose end punctuation

DECLARE

@SingleWord NVARCHAR(800)

, @ValidJsonList NVARCHAR(800);

--we check for a missing or global reference and use the first object

IF COALESCE(@Reference, '$') = '$'

SELECT TOP 1

@Reference = '$.' + --just get the first

FROM OPENJSON(@JSONData, '$')

WHERE type = 4;

INSERT INTO @choices

(, Value) --put the choices in a temp table

SELECT , value FROM OpenJson(@JSONData, @Reference) WHERE type = 1;

-- if there was an easy way of getting the length of the array then we

--could use JSON_VALUE ( expression , path ) to get the element

-- and get the chosen string

DECLARE @string NVARCHAR(4000) =

(SELECT TOP 1 value FROM @Choices CROSS JOIN RAN ORDER BY RAN.number);

SELECT @ValidJsonList = N'["' + REPLACE(STRING_ESCAPE(@string, 'json'), ' ', '","') + N'"]';

IF ISJSON(@ValidJsonList) = 0

RETURN N'invalid reference- ' + @ValidJsonList;

--now we examine each word in the string to see if it is reference

--to another array within the JSON.

INSERT INTO @words

(, value)

SELECT , value FROM OPENJSON(@ValidJsonList, '$');

IF @@ROWCOUNT = 0

RETURN @ValidJsonList + ' returned no words';

SELECT @ii = 0, @iiMax = MAX() FROM @words;

-- we now loop through the words either treating the words as strings

-- or symbols representing arrays

WHILE (@ii < (@iiMax + 1))

BEGIN

SELECT @SingleWord = value FROM @words WHERE = @ii;

IF @@ROWCOUNT = 0

BEGIN

SELECT @Output = N'no words in' + N'["' + REPLACE(@string, ' ', '","') + N'"]';

RETURN @Output;

END;

SELECT @ii = @ii + 1;

IF LEFT(LTRIM(@SingleWord), 1) = '^' -- it is a reference

BEGIN -- nick out the '^' symbol

SELECT @Reference = '$.' + STUFF(@SingleWord, 1, 1, ''), @Endpunctuation = '';

WHILE REVERSE(@Reference) LIKE '[:;.,-_()]%'

BEGIN --rescue any punctuation after the symbol

DECLARE @End INT = LEN(@Reference);

SELECT @Endpunctuation = SUBSTRING(@Reference, @End, 1);

SELECT @Reference = SUBSTRING(@Reference, 1, @End - 1);

END; --and we call it recursively

IF @level > 0

SELECT @Output = COALESCE(@Output + ' ', '') + dbo.SentenceFrom(@JsonData, @Reference, @level - 1) + @Endpunctuation;

END;

-- otherwise it is plain sailing. Would that it were always

-- that simple

ELSE

SELECT @Output = COALESCE(@Output + ' ', '') + @SingleWord;

END;

END;

ELSE

SELECT @Output = N'sorry. Error in the JSON';

RETURN @Output; --and return whatever (it could be a novel!)

END;

GOGenerating realistic titles and descriptions from a JSON phrase bank, using SQL

We'll now try this out. Before we do that book-title example I showed at the start of the article, we need a helper function that converts a string to a title, applying the title case convention for capitalizing most but not all the words in the title.

CREATE OR ALTER FUNCTION [dbo].[TitleCase]

(@string NVARCHAR(MAX))

/**

Summary: >

Capitalise all words five letters and longer.

Even if the words are prepositions or conjunctions,

lower case all other conjunctions, prepositions, and articles

capitalise first and last word

Author: PhilFactor

Date: 05/01/2020

Database: PhilsScripts

Examples:

- SELECT dbo.TitleCase('god save her majesty') --God save her majesty

Returns: >

Returns a copy of the string with only its first character capitalized.

**/RETURNS NVARCHAR(MAX)

AS

BEGIN

DECLARE

@StringLength INT

, @Start INT

, @end INT

, @Cursor INT

, @WordLength INT

, @word NVARCHAR(200)

, @output NVARCHAR(MAX)

, @wordNumber INT;

SELECT @Cursor = 1, @StringLength = LEN(@string), @WordNumber = 0;

WHILE @cursor < @StringLength

BEGIN

SELECT @start = PATINDEX('%[^A-Za-z0-9][A-Za-z0-9%]%', ' ' + SUBSTRING(@string, @cursor, 50)) - 1;

IF @start < 0

BREAK;

SELECT

@WordLength = PATINDEX('%[^A-Z''a-z0-9-%]%', SUBSTRING(@string, @cursor + @start + 1, 50) + ' ')

, @WordNumber = @Wordnumber + 1

, @word = SUBSTRING(@string, @cursor + @start, @WordLength);

IF @Wordnumber = 1 --first word

OR @word NOT IN ( 'of', 'in', 'to', 'for', 'with', 'on', 'at', 'from', 'by', 'as', 'into', 'like', 'over', 'out', 'and', 'that', 'but', 'or', 'as', 'if', 'when', 'than', 'so', 'nor', 'like', 'once', 'now', 'a', 'an', 'the' )

SELECT @string = STUFF(@string, @cursor + @start, 1, UPPER(SUBSTRING(@string, @cursor + @start, 1)));

SELECT @Cursor = @Cursor + @Start + @WordLength + 1, @WordNumber = @Wordnumber + 1;

END;

RETURN @string;

END;

GOTo try it out, producing either of our first two examples, use the following JSON:

DECLARE @jsonData nvarchar(MAX)=N'{

"adjective":[

"carnivalesque", "rhetorical","divided","new","neoliberal", "sustainable","socially-responsible",

"multimedia","historical","formalist","gendered","historical","heterotopian", "collective",

"cultural","female","transformative","intersectional","political","queer","critical","social",

"spiritual","visual","Jungian","unwanted","Pre-raphaelite","acquired","gender","surreal",

"the epicentre of", "midlife","emotional","coded","fleeting","ponderous","expressive",

"self-descriptive","theoretical","multi-dimensional","dystopic","fragments of","humanistic",

"interpretive","critical","probablistic","aphoristically constructed","disconnected",

"subtle","ingenious","deep","shrewd","astute","sophistical"

],

"doesSomethingWith":[

"evokes","explores","describes","summarises","deliniates","traces","relates","characterises",

"depicts","focuses on","narrates","educes","draws inspiration from",

"tracks the many influences on","meditates on","reflects on","examines","observes",

"offers","scrutinises"

],

"interaction":[

"relationship","affinity","resonance","narrative ","interaction"

],

"something":[

"the body","experience","archetype","queerness","gifts","tenets","synesthesia","politics",

"subculture","memories","oppression","space","abjection","telesthesia","transnationalism",

"care","Ambivalence","neoliberalism","^adjective identity","transcendence","resistance",

"performance","masochism","spectatorship","play","masculinity","aesthetics","phenomenology",

"Blaxpoitation","plasticity","annihilation","identity","regeneration","Narrative",

"Metaphysics","Normativity","progress","erasure","gender perception","complexity","power",

"exceptionalism","surreality","scrutiny","inequality","auto-ethnography","opacity",

"utopic self-invention","experience", "identity", "intellection","approach to ^noun",

"epistemology","contexts","hermeneutics","the role of shame","the aesthetic of detachment"

],

"somethings":[

"bodies","experiences","archetypes","gifts","tenets","synesthesias","political thoughts",

"subcultures","memories","oppressions","Spaces","Abjections","Telesthesias","Transnationalisms",

"Ambivalences","Neoliberalisms","^adjective Identities","Transcendences","Resistances",

"performances","Masochisms","Spectatorships","Aesthetics","Phenomenologies","Identities",

"Regenerations","Narratives", "Normativities","Erasures","gender perceptions","complexities",

"exceptionalisms","inequalities","utopic self-inventions","experiences", "intellections",

"approaches to ^noun", "epistemologies","contexts"

],

"and":[

"and its relationship to","in combination with", "in contrast to", "and its intersections with",

"in its paradoxical relationship to","in conjunction with"],

"stuff":[

"particular texts","diary entries","painstaking research","diary musings","sporadic poetry",

"personal letters","early drafts of a memoir","newspaper articles","letters to the newspapers",

"august research"

],

"note":[

"The author ^doesSomethingWith ^something ^and ^something in ^stuff by ^writer, and ^doesSomethingWith ^personal, ^personal ^thought on topics from the mundane to the profound.",

"This ^book ^doesSomethingWith various ^adjective ^somethings and their relation to ^source: and the influence of the ^doingSomethingTo ^noun ^doesSomethingWith the ^something ^terminator.",

"^something is at the intersection of ^something, ^something and ^something. it offers a new approach; not only ^doingSomethingTo the ^noun, but ^doingSomethingTo the ^noun",

"This ^book ^doesSomethingWith the ^interaction between ^something and ^something. ^inspiredby, and by ^meditating, new ^feelings are ^made ^terminator","the ^interaction between ^something ^and ^something in this ^book is ^pretty. ^inspiredby, new ^feelings which dominate the early chapters ^doesSomethingWith ^something ^terminator.",

"It is ^likely that this will ^remain the most ^positive ^book on the subject, balancing as it does the ^interaction between ^something and ^something. ^inspiredby, it ^doesSomethingWith ^something ^terminator.",

"^tracing influences from ^something, ^something and ^something, the ^book ^doesSomethingWith ^noun through time",

"This ^book provides a ^positive, ^positive introduction to ^adjective ^something ^terminator, with a focus on ^noun., By ^meditating, new ^feelings are ^made ^terminator",

"^doingSomethingTo ^adjective ^something is ^positive, ^positive and ^positive. This ^book ^doesSomethingWith the ^adjective and ^adjective imperatives of ^adjective ^noun.",

"^positive, ^positive and yet ^positive, this ^book is unusual in that it is ^inspiredby. It will make you appreciate ^doingSomethingTo ^something ^terminator"

],

"book":[

"book","book","^positive book","^positive exposition","booklet","republished series of lectures",

"dissertation","^positive compilation","^positive work","volume","^positive monograph","tract",

"thesis","publication","expanded journal article","research paper"

],

"likely":[

"probable","likely","quite possible","debatable","inevitable","a done deal",

"probably just a matter of time","in the balance","to be expected"

],

"tracing":[

"tracing","tracking","following","uncovering","trailing","investigatiing","exploring"

],

"remain":[

"estabilsh itself as","be accepted as","remain","be hailed as","be received by the public as",

"be recommended as","become"

],

"pretty":[

"a source of ^positive insights","a ^positive reference","a ^positive statement",

"demanding but ^positive"

],

"positive":[

"comprehensive","challenging","exciting","discoursive","interesting","stimulating","evocative",

"nostalgic","heartwarming","penetrating","insightful","gripping","unusual","didactic","instructive",

"educative","informative","edifying","enlightening","illuminating","accessible","effective","resonant",

"vibrant"

],

"meditating":[

"^doingSomethingTo the ^something and ^something",

"balancing the intricate issues, especially the ^adjective ^something",

"steering clear of the obvious fallacies in their thinking about ^adjective ^something",

"arguing that it is equal in complexity and power to ^something",

"clearing away the misconceptions about ^something"

],

"inspiredby":[

"with a nod to both ^source and ^source",

"It draws inspiration from influences as diverse as ^source and ^source",

"With influences as diverse as as ^source and ^source",

"at the intersection of ^source, ^source and ^source",

"Drawing from sources such as ^source, ^source and ^source as inspiration",

"Taking ideas from writers as diverse as as ^writer and ^writer"

],

"source":[

"Impressionism","Nihilism","left-bank decedence","Surrealism","Psycholinguistics",

"Post-modermnism","Deconstructionism","Empiricism","Existentialism","the humanities",

"Dialectical materialism","Feminist Philosophy","Deontological Ethics","Critical Realism",

"Christian Humanism","Anarchist schools of thought","Eleatics","Latino philosophy","design",

"the Marburg School","the Oxford Franciscan school","Platonic Epistemology","Process Philosophy",

"Shuddhadvaita","urban planning"

],

"writer":[

"Edward Abbey","JG Ballard","Henry James","Kurt Vonnegut","Evelyn Waugh","Wyndham Lewis",

"T E Lawrence","Timothy Leary","Hugh MacDiarmid","William Faulkner","Gabriel Garcia Marquez",

"Henrik Ibsen","Franz Kafka","Mary Wollstonecraft","Henry David Thoreau","Levi Strauss"

],

"terminator":[

"as a means of ^adjective and ^adjective ^something","representing ^adjective claims to ^something",

"as a site of ^something","as ^something","without a connection","as ^adjective ^something",

"as ^adjective ^something and ^something","as ^adjective mediators","in contemporary society",

"and the gendering of space in the gilded age","as ^adjective justice","as violence",

"in the digital streaming age","in an ^adjective framework","in a global context",

"in new ^adjective media","and the violence of ^something","as a form of erasure",

"and the negotiation of ^something","signifying ^adjective relationships in ^adjective natures",

"as a site of ^adjective contestation","in crisis","as ^adjective devices","through a ^adjective lens",

"through a lens of spatial justice","within the ^adjective tradition of ^something."

],

"title":[

"^doingSomethingTo ^something ^terminator.","^noun ^terminator.",

"^doingSomethingTo ^adjective ^something: The ^adjective ^noun.",

"^doingSomethingTo ^noun",

"^doingSomethingTo the ^adjective ^something"

],

"doingSomethingTo":[

"understanding","intervening in", "engaging with", "interpreting",

"speculating about", "tracing the narrative of","introducing the theory of",

"presenting methods and practices of","offering case practices of","describing changes in",

"reinterpreting","critiquing","reimagining","evoking","exploring","describing","summarising",

"deliniating","tracing","relating","characterising","depicting","methodically restructuring",

"focusing on","narrating","educing","tracking the many influences on","meditating on",

"situating","transforming","disempowering","a reading of","transcending",

"activating","the politics of","representations of","interrogating","erasing","redefining",

"identifying","performing","the legibility of","democratizing","de-centering",

"gender and","debating","signaling","embodying","building","the role of","historicizing",

"repositioning","destabilizing","mapping","eliminating","engaging with"

],

"noun":[

"Genre and Justice","^doingSomethingTo Uncertainty","Identity","^something and ^something of ^something",

"Bodies and Static Worlds","^noun of ^adjective Spaces","^something as resistance,",

"Modes of witnessing","representations of trauma","concept of freedom","multimedia experiences",

"bodies","theory and empirical evidence","ecology of ^something","^adjective Labor Migration",

"^something and ^something","^adjective possibilities","^adjective limitations",

"aesthetic exchange","Immersion","abstraction","Revolutionary Sexuality","politics and power",

"aesthetics","aepresentation","^adjective categories","pluralities","gender","gaze",

"forms of ^something","silences","power structures","dissent","^adjective approach","self",

"queerness","modes of being","ontology","agency","epistemologies","intertextuality",

"Hyper-Extensionality","fields of belonging","hybridization","literary justice","visualisation",

"Interpretation","epistemology","narrative experimentation"

],

"feelings":[

"combinations","tensions","synergies","resonances","harmonies","contradictions","paradoxes",

"superimpositions","amalgamations","syntheses"

],

"personal":["deeply personal", "emotionally wrenching","highly charged","itensely private","dark",

"profound","heartfelt","heartwarming","spiritual","nuanced","reflective","deep","unflinching"],

"thought":["ruminations","meditations","interpositions","insights","perceptions"],

"made":[

"distilled","manufactured","woven","synthesised","uncovered","determined","observed","portrayed"

]

}'

In the same batch, we execute this.

SELECT TOP 2000 dbo.TitleCase(dbo.SentenceFrom(@jsonData, '$.title', 5)) AS title,

dbo.SentenceFrom(@jsonData, '$.note', 5) AS notes

FROM sys.objects;Naturally, you'd normally want to stash your string collections in a table and, as we are using a SQL function, it is easy to do. Notice that the functions generating the title and the notes use the same JSON phrase-bank. We just start them in different places to give the low-level structure of the sentences. We can use a lot of the collections of phrases for both.

Using the same phrase bank in PowerShell

We can use the same phrase-bank with PowerShell. Here are the equivalent functions. SentenceFrom becomes Get-RandomSentence and the TitleCase function becomes ConvertTo-TitleCase. You can find both functions on GitHub, here.

<#

.SYNOPSIS

Picks out random sentences from JSON data that is formatted as a dictionary of arrays

.DESCRIPTION

this function takes a powershell object that has several keys representing phrase-banks,each

of which has an array that describes all the alternative components of a string and from it,

it returns a string.

basically, you give it a list of alternatives in an arreay and it selects one of them.

However, if you put in the name of an array as one of the alternatives,rather than

a word,it will, if it selects it, treat it as a new reference and will select one of

these alternatives.

.PARAMETER AllPhraseBanks

This is the powershell object with the phrasebanks.

.PARAMETER bank

The name of the phrase-bank to use

.EXAMPLE

Get-RandomSentence -AllPhraseBanks $MyPhrasebank -bank 'start'

1..1000 | foreach{

Get-RandomSentence -AllPhraseBanks ($PhraseLists | convertfrom-Json) -bank 'note'

}>'MyDirectory\notesAboutBooks.txt'

1..10000 | foreach{

ConvertTo-TitleCase(

Get-RandomSentence -AllPhraseBanks ($PhraseLists | convertfrom-Json) -bank 'title')

}>'Mydirectory\BookTitles.txt'

.NOTES

This function gets called recursively so imitates the elaborate

recursion of normal written language and, to a lesser extent, speech.

#>

function Get-RandomSentence

{

[CmdletBinding()]

param

(

$AllPhraseBanks,

$bank

)

$bankLength = $AllPhraseBanks.$bank.Length

$return = ''

$AllPhraseBanks.$bank[(Get-Random -Minimum -0 -Maximum ($bankLength - 1))] -split ' ' |

foreach {

if ($_[0] -eq '^')

{

$collection = $_.TrimStart('^');

$endPunctuation = if ($collection.Trim() -match '[^\w]+') { $matches[0] }

else { '' }

$collection = $collection.TrimEnd(',.;:<>?/!@#$%&*()-_=+')

$return += (Get-RandomSentence -AllPhraseBanks $AllPhraseBanks -bank $collection)+ $endPunctuation

}

else

{ $return += " $($_)" }

}

$return

}

<#

.SYNOPSIS

Converts a phrase to Title Case, using current culture

.DESCRIPTION

Takes a string made up of words and gives it the same UpperCase letters as is conventional

with the title of books, chapter headings, or films.

.PARAMETER TheSentence

This is the heading, sentence, book title or whatever

.EXAMPLE

PS C:\> ConvertTo-TitleCase -TheSentence 'to program I am a fish'

.NOTES

Phil Factor November 2020

#>

function ConvertTo-TitleCase

{

param

(

[string]$TheSentence

)

$OurTextInfo = (Get-Culture).TextInfo

$result = '';

$wordnumber = 1

$result += $TheSentence -split ' ' | foreach {

if ($WordNumber++ -eq 1 -or $_ -notin ('of', 'in', 'to', 'for', 'with', 'on', 'at', 'from',

'by', 'as', 'into', 'like', 'over', 'out', 'and', 'that', 'but', 'or', 'as', 'if',

'when', 'than', 'so', 'nor', 'like',

'once', 'now', 'a', 'an', 'the'))

{

$OurTextInfo.ToTitleCase($_)

}

else

{ $OurTextInfo.ToLower($_) }

}

$result

}

If you store the JSON in the same directory, you can then generate the two lists very quickly by using this, saving the resulting lists in your home documents directory.

Invoke-Expression "$pwd\Get-RandomSentence.ps1"

$PhraseLists = get-content "$pwd\HumanitiesPublications.json"

1..1000 | foreach{

Get-RandomSentence -AllPhraseBanks ($PhraseLists | convertfrom-Json) -bank 'note'

}>"$($env:HOMEDRIVE)$($env:homepath)\documents\notesAboutBooks.txt"

1..10000 | foreach{

ConvertTo-TitleCase(

Get-RandomSentence -AllPhraseBanks ($PhraseLists | convertfrom-Json) -bank 'title')

}>"$($env:HOMEDRIVE)$($env:homepath)\documents\BookTitles.txt"

Accounting for dependencies and variation

With these basic text generation techniques in place, we can start to generate quite complex fake data, where the generated data in one text column, must reflect the values in another, such as gender, location or even type of complaint.

In a past article, I've used as an example the record of correspondence for a website that is part of a chain of stores. Here, we might have text columns that store the original complaint and the resulting correspondence chain. To generate realistic data, the nature of the response will need to fit with the type of mistake reported, such as an order not arriving on time, the goods being broken, the package having parts of the order missing, wrong items or having had an unsympathetic agent in the store. Each type of complaint will have its own entry point (the name of an array in our function). The 'fake' response may need to include the name of the store, the tracking number, perhaps the carrier, the name of the customer, the respondent, the discount or whatever else you offer in compensation, the tracking link for the package and so on.

The following sections explain how we can achieve this level of verisimilitude in our faked data. As it doesn't have to pass close examination, only the noticeable parameters are likely to need to be right.

Adding strings depending on the value in another column

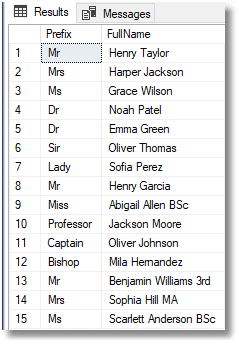

We can do quite a bit of work where the sample data we generate depends on another column. Let's demonstrate this simply by returning to our "random names" example. What if we have an existing column that implies the gender of the person. We need to apply names that seem to be gender appropriate. We do this by defining different entry points for each main gender. We have prefixes that imply that a male name is required, other prefixes for a female name and a third that is for prefixes that are gender neutral.

DROP TABLE IF EXISTS #TableWithExistingData --in case we rerun

CREATE TABLE #TableWithExistingData (Prefix NVARCHAR(40) NOT NULL, FullName NVARCHAR(255))

INSERT INTO #TableWithExistingData (Prefix) --insert sample prefixes into our table

VALUES ('Mr'),('Mrs'),('Ms'),('Dr'),('Dr'),('Sir'),('Lady'),('Mr'),

('Miss'),('Professor'),('Captain'),('Bishop'),('Mr'),('Mrs'),('Ms')

--now we create a variable for our JSON

DECLARE @JSONNameData NVARCHAR(MAX)='{

"malename":[

"^malefirstname ^lastname ^suffix",

"^malefirstname ^lastname",

"^malefirstname ^lastname"

],

"femalename":[

"^femalefirstname ^lastname ^suffix",

"^femalefirstname ^lastname",

"^femalefirstname ^lastname"

],

"name":["^malename","^femalename"],

"malefirstname":["Noah","Oliver","William","Elijah","James","Benjamin",

"Lucas,","Mason","Ethan","Alexander","Henry","Jacob","Michael","Daniel",

"Logan","Jackson","Sebastian","Jack","Aiden"

],

"femalefirstname":["Olivia","Emma","Ava","Sophia","Isabella","Charlotte",

"Amelia","Mia","Harper","Evelyn","Abigail","Emily","Ella","Elizabeth",

"Camila","Luna","Sofia","Avery","Mila","Aria","Scarlett","Penelope",

"Layla","Chloe","Victoria","Madison","Eleanor","Grace","Nora","Riley"

],

"lastname":["Smith","Johnson","Patel","Williams","Brown","Jones","Garcia",

"Miller","Davis","Rodriguez","Martinez","Hernandez","Lopez","Gonzalez",

"Wilson","Anderson","Li","Thomas","Taylor","Moore","Jackson","Martin","Lee",

"Perez","Thompson","White","Wong","Harris","Sanchez","Clark","Ramirez","Lewis",

"Robinson","Walker","Young","Allen","King","Wright","Scott","Torres",

"Nguyen","Hill","Flores","Green" ],

"suffix":[

"3rd","MA","BSc","","","","",""

]

}'

/* now we can add the fullnames with random gender-appropriate names */UPDATE #TableWithExistingData

SET FullName= dbo.SentenceFrom (@JSONNameData,'$.'

+CASE WHEN Prefix IN ('Ms','Mrs','Lady','Miss')

THEN 'femalename'

WHEN Prefix IN ('Mr','Sir')

THEN 'malename'

ELSE 'name' END,5)

FROM #TableWithExistingData

SELECT * FROM #TableWithExistingData

Injecting the string from another column into the middle of a random string.

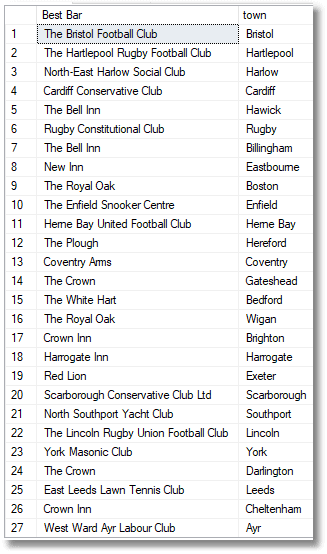

Now we know that we can make the string relevant to the value in another column. What about generating the data where the text within a string needs occasionally to include the value from another string in the same row. If we were to come up with the random name of a bar or pub in a particular location, it might be just called 'The Kings Head', but it might be called 'Wigan Constitutional Club' if it was in Wigan, a British city. Your fake data would look odd if a Wigan institution were called the 'Edinburgh Social Club'.

If the words can be in the middle of the string, the simplest way is to use a placeholder such as #### or **** in the list of alternatives. This is easier demonstrated than to explain. I've chosen Bars and Pubs because they quite often include the name of the place, but not always, so it can get a bit tricky.

DECLARE @JSONBarName NVARCHAR(MAX)='{

"club":["The Red Lion","The Royal Oak","Red Lion","Royal Oak","The Crown Inn",

"The White Hart","The Crown","The Plough Inn","The New Inn","New Inn",

"Crown Inn","The Ship Inn","The Wheatsheaf","The White Horse","Kings Arms",

"ROYAL BRITISH LEGION","The Swan","The Plough","The Bell Inn",

"The *** Golf Club","*** Bowling Club","The Royal *** Cricket Club","*** Conservative Club","*** RUGBY CLUB",

"The *** Rugby Football Club","The *** Town Football Club","*** Masonic Hall","The *** Football Club",

"North-East *** Social Club","The *** Bowls Club","*** Sailing Club ","The *** Sports Club","West *** Working Mens Club",

"*** Masonic Club","*** Arms","*** Club","*** Constitutional Club","North *** Yacht Club","*** Snooker Club",

"*** Town Cricket Club","East *** Lawn Tennis Club","*** Inn","The *** Rugby Union Football Club",

"*** Sports & Social Club","West Ward *** Labour Club","The *** Snooker Centre","*** United Football Club",

"*** Conservative Club Ltd"]

}'

Select replace (dbo.SentenceFrom (@JSONBarName,'$.club',1),'***',town) as [Best Bar],

town from (values

('Bristol'),('Hartlepool'),('Harlow'),('Cardiff'),('Hawick'),('Rugby'),('Billingham'),('Eastbourne

'),('Boston'),('Enfield'),('Herne Bay'),('Hereford'),('Coventry'),('Gateshead'),('Bedford'),('Wigan

'),('Brighton'),('Harrogate'),('Exeter'),('Scarborough'),('Southport'),('Lincoln'),('York'),('Darlington

'),('Leeds'),('Cheltenham'),('Ayr'),('Whitby'),('Durham')

)f(town)

Notice that not all the names of bars have the location included, and if it is, it is the name in the other column.

Injecting different choices on each row

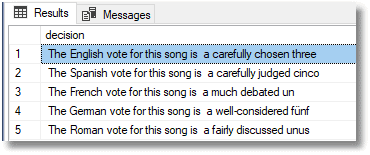

Although I've not yet found a use for the idea, it is possible to inject an array into the JSON on every line to provide a list of choices that is unique to the line. I've done a slightly silly example here of voting for the Eurovision song contest, so you'll have to imagine a rather more elaborate JSON document.

declare @Ranking nvarchar(max)='{"number":[],

"sentence":[" a ^sincere ^number","^number, which was ^sincere",

"^number, which was ^sincere"],

"sincere": ["carefully judged","much debated",

"fairly discussed","well-considered","carefully chosen"]}'

Select 'The '+[language]+' vote for this song is '+

dbo.SentenceFrom (json_modify(@ranking, 'strict $.number',

json_query('["'+one+'","'+two+'","'+three+'","'+four+'","'+five+'"]')),

'$.sentence', 1)

from (values

('English','one','two','three','four','five'),

('Spanish','uno','dos','tres','cuatro','cinco'),

('French','un','deux','trois','quatre','cinq'),

('German','eins','zwei','drei','vier','fünf'),

('Roman','unus','duo','tres','quattuor','quinque')

)OneToFive([Language],one,two,three,four,five)

This will give something random like…

…which will be instantly recognized by the followers of the Eurovision song contest.

Generating numeric data

There are, of course, other types of data that you'd need as well as text. The random number generator is likely to be useful for this. Most continuous variables tend to follow the 'Normal' distribution and a good approximation to normally distributed data is obtained from this expression:

SELECT ((RAND() * 2 - 1) + (RAND() * 2 - 1) + (RAND() * 2 - 1)) * @StandardDeviation + @Mean

However, very little in nature is randomly distributed and in real life you seldom see the classic bell-curve. Real distributions are messier and more squished, when seen as a graph.

With a little bit of extra math applied, it is generally possible to obtain the same distribution as a real-life squishy curve. I don't need to describe these in this article because I've covered most of the techniques for generating numbers in these articles:

- Spoofing Data Convincingly: Credit Cards

- Spoofing Data Convincingly: Doing the Shuffle and Zip

- Spoofing Data Convincingly for Database Development. An introduction.

- Spoofing Data Convincingly: Masking Continuous Variables ...

- Spoofing Data Convincingly: Altering Table Data

Conclusion

Generating convincing test data is not done for your own benefit, but for everyone who is involved in developing, testing, rolling out or maintaining an application. You would, I'm sure, be content with the classic 'Lorem ipsum dolor sit amet, consectetur adipiscing elit, sed do eiusmod tempor incididunt ut labore et dolore magna aliqua. Ut enim ad minim veniam, quis nostrud exercitation ullamco laboris nisi ut aliquip ex ea commodo consequat.' However, when training, testing, demonstrating, or other checks, it is much easier if you have convincing data and plenty of it.