Introduction

Relational databases are designed to track changes introduced to a database by data modification language (DML) commands. The fundamental reason for this construct is to ensure that changes are durable and that they can be rolled back reliably. The typical DML command used in SQL are INSERT, UPDATE and DELETE. When INSERT introduces new rows to a database table, the database engine must persist the activity physically in an efficient manner.

This means the change must be recorded quickly in a log file (log buffer first) while the actual data blocks are still in memory until a checkpoint occurs. This also goes for UPDATE and DELETE operations. Attempting to persist changes made in memory to data files directly would not be efficient. This log file or Transaction Log File is thus very important for the activities of a relational database system such as SQL Server.

In this article we illustrate how the transaction log can consume much more space that the actual data contained in the database. We also demonstrate that this happens because the transaction log captures and stores a history of changes to the database compared to the data files which store the end state after changes are done. Also covered are a few recommendations on how to manage this transaction log growth proactively and reactively.

Experiment: Log Growth vs. Data Growth

The first step is to show an example of log growth compared to data file growth when performing simple DML operations. The database used for the experiment has been designed to make the impact of the steps apparent. The code in Listing 1 creates the database and enables FULL RECOVERY mode.

-- Listing 1: Create Database with Small Filegrowth for Illustration USE [master] GO /****** Object: Database [DB01] Script Date: 14/05/2022 9:38:51 am ******/ CREATE DATABASE [DB01] CONTAINMENT = NONE ON PRIMARY ( NAME = N'DB01', FILENAME = N'C:MSSQLDataDB01.mdf' , SIZE = 4MB , MAXSIZE = UNLIMITED, FILEGROWTH = 1KB ) LOG ON ( NAME = N'DB01_log', FILENAME = N'E:MSSQLLogDB01_log.ldf' , SIZE = 512KB , MAXSIZE = 2048GB , FILEGROWTH = 1KB ) GO ALTER DATABASE DB01 SET RECOVERY FULL; GO

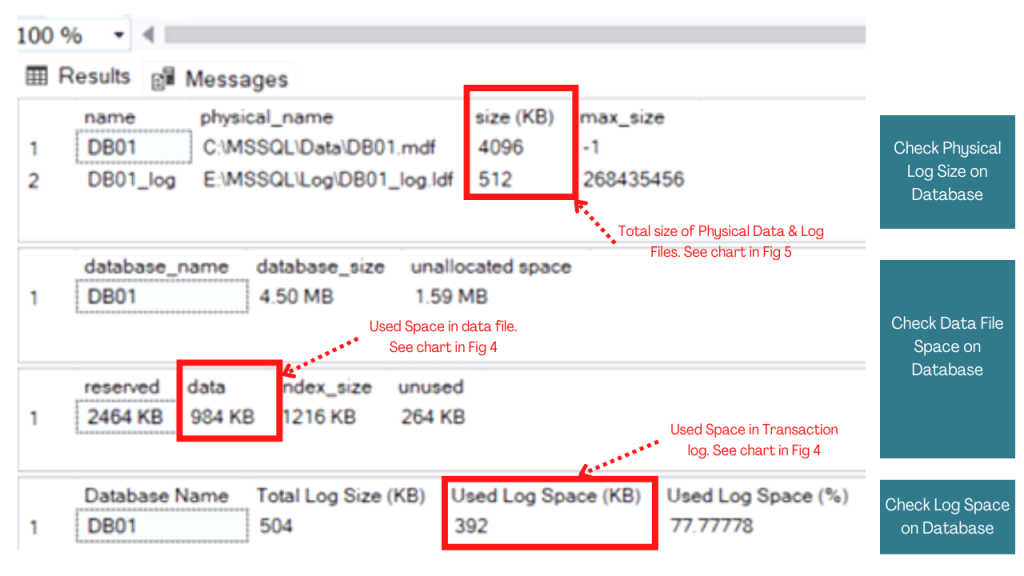

To ensure there is a baseline, we use Listing 2 to check the physical size of the data and log files as well as the space used. Figure 1 shows the result sets obtained from executing Listing 2.

-- Listing 2: Check Physical Log Growth on Database USE DB01 GO SELECT name, physical_name, size*8 , max_size FROM sys.master_files WHERE name like 'DB01'; GO -- Check Data File Space on Database EXEC sp_spaceused; GO -- Check Log Space on Database SELECT DB_NAME(database_id) [Database Name] ,total_log_size_in_bytes/1024 [Total Log Size (KB)] ,used_log_space_in_bytes/1024 [Used Log Space (KB)] ,used_log_space_in_percent [Used Log Space (] FROM sys.dm_db_log_space_usage ; GO

Figure 1: Data & Log File Size, Usage (Baseline)

Figure 1: Data & Log File Size, Usage (Baseline)Pay close attention to the physical file sizes for the data and log files as well as the space used as indicated in Figure 1. The data and log files are 4096KB and 512KB in size respectively. Recall these are the sizes specified in the database creation script. The space used so far are also indicated.

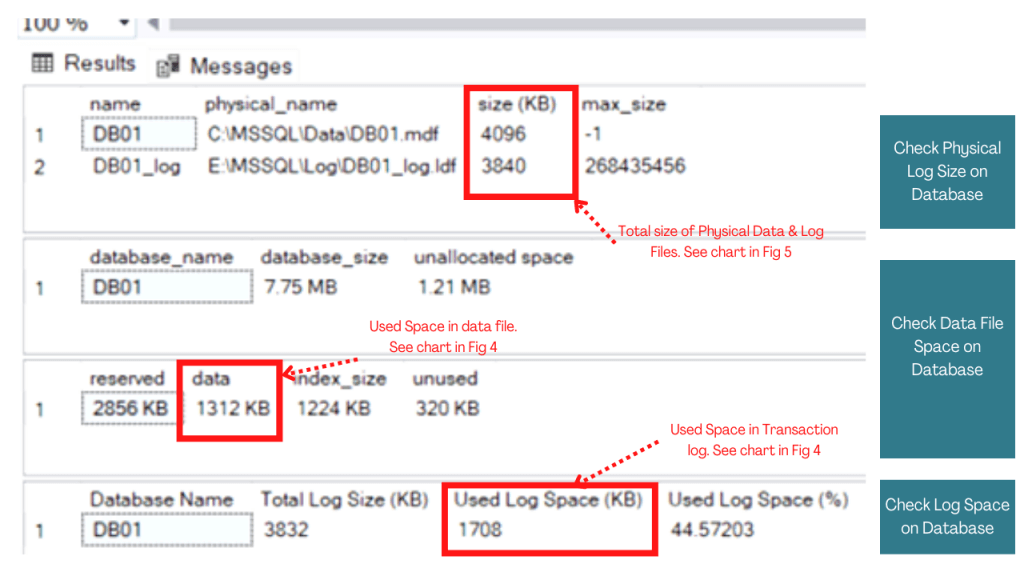

We then create a new table in the database and insert 5000 rows. Following this INSERT activity, we check the file growth again. The result of running Listing 2 again is shown in Figure 2.

-- Listing 3: Statement to Create and Populate table

USE DB01

GO

CREATE TABLE TAB01 (

ID INT IDENTITY (1,1)

,Name CHAR(50));

GO

INSERT INTO TAB01 VALUES ('Kenneth Igiri');

GO 5000

Figure 2: Data & Log File Size, Usage (After Table Creation, Insert)

Figure 2: Data & Log File Size, Usage (After Table Creation, Insert)Again, pay close attention to the sections of the result set indicated by red arrows. The physical data file size remains the same (4096KB) but the Transaction Log has grown to 3840KB. Used space has increased in both cases but the transaction log is much more significant. This is further illustrated in the charts shown in Figure 4 and Figure 5.

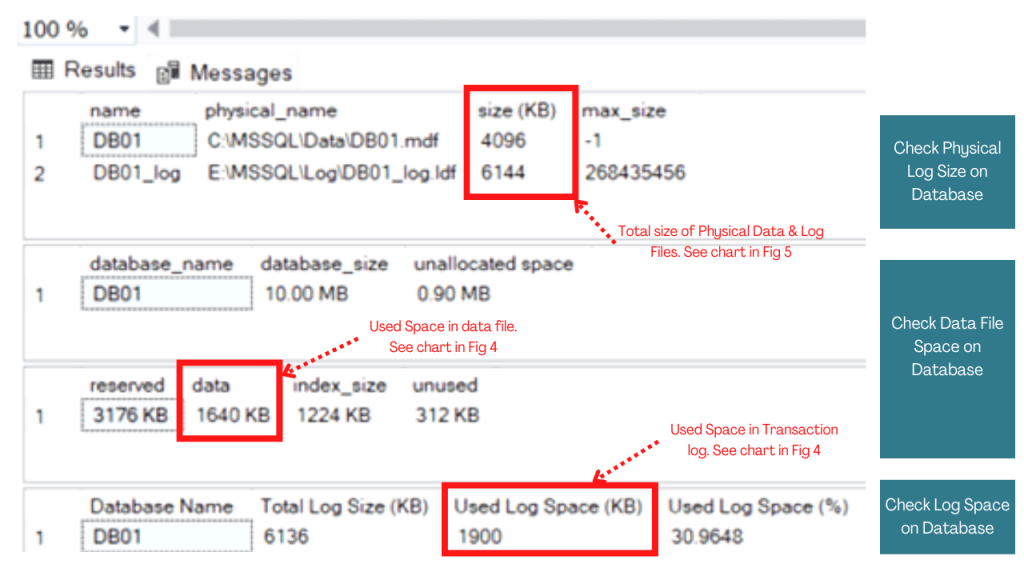

We now run the DML shown in Listing 3, which is a set of INSERT and DELETE statements that leave the database as it was before in terms of row count. However, we observe as shown in Figure 3 that there are changes in the space usage of the data and log files.

-- Listing 4: Check Log Growth on Database

USE DB01

GO

INSERT INTO TAB01 VALUES ('Kenneth Igiri');

GO 5000

DELETE FROM TAB01 WHERE ID>5000;

GO

SELECT COUNT(*) as Computed FROM TAB01;

GO

Figure 3: Data & Log File Size, Usage (After Insert, Delete)

Figure 3: Data & Log File Size, Usage (After Insert, Delete)By now, a significant part of the total database size is due to the transaction log file size.

Notes on Transaction Log Growth

As shown, the interesting thing about the growth of the transaction log in SQL Server is that a transaction log can grow significantly larger than database files depending on database activity. The transaction log records the history of changes in the database while the database file has a store of the results of each change without the history.

Graphical Analysis

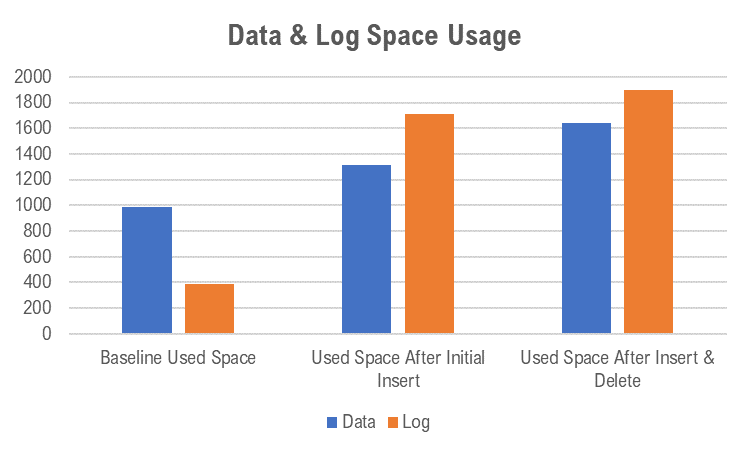

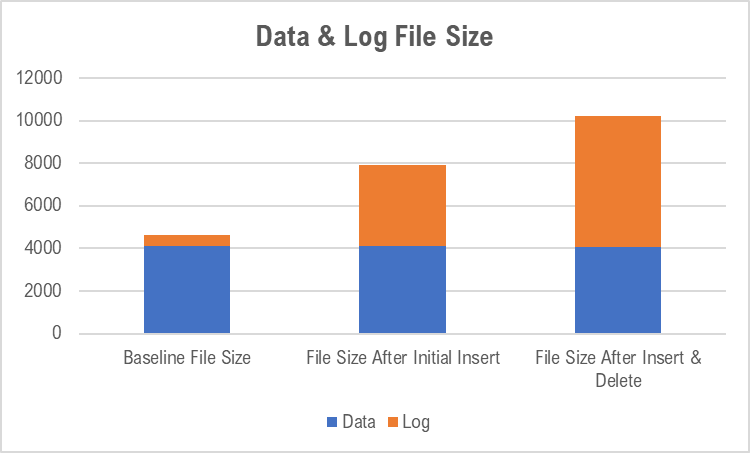

To make things a little clearer, the numbers returned in the preceding section we fed into a spread sheet and represented as bar chart. We indicated the specific numbers used in Figures 1,2 and 3.

Figure 4 shows the change in space used for both data and log files using a bar chart. The gap between the used space growth of the log and that of the data file corroborates the earlier assertion. Figure 5 also shows that the physical log now contributes more to the total database size than the data file itself.

Figure 4: Bar Chart Showing Used Space for Data & Log Files

Figure 4: Bar Chart Showing Used Space for Data & Log FilesA row count of the TAB001 table will show that the overall useful data is still the same because we merely inserted additional 5000 rows and then deleted them. In a perfect world, we are not even supposed to see any change in the data file used space but factors like fragmentation account for some observed growth.

Figure 5: Bar Chart Showing Physical Size for Data & Log Files

Figure 5: Bar Chart Showing Physical Size for Data & Log FilesImplications of Transaction Log Growth vs. Data Growth

Depending on the nature of activities in your database environment it is very possible for your transaction log file to grow significantly larger than your data files. You as the DBA working on-premises will have to make provision for such growth. If you are running the workload in the cloud, you may incur costs associated with storage consumption.

Some DBAs try to take the shortcut of setting production databases in SIMPLE recovery mode to manage log growth. This is tricky because data recovery is compromised in case of a crash in the middle of the day. Also, you still have to address growth in scenarios where long-running transactions still grow the transaction log and leave you needing to shrink the transaction file. It is always a good idea to use FULL REOVERY MODE for production databases.

Recommendations

In order to address log growth you should take the following measures:

- Analyze your database activity and determine the largest size the transaction log can grow to within the busiest hour of your day. Based on this, make provision in the allocated drive so your transaction log never runs out of space in the operating system (Error 9002).

- Schedule a routine transaction log backup as part of your backup strategy. This would likely be the third tier in a strategy that involves a full backup, differential backup, and log backup. The timing for this could be hourly or less depending on hour busy the database is.

- If you are using Transaction Log Shipping for disaster recovery, you may not need transaction log backups. However, you should ensure your disaster recovery setup is working consistently.

- If in the unfortunate event you run out of disk space or exceed your budgeted disk space, you will need to do a log shrink or create an additional log file on a different volume. Whatever you do, a manual log backup of the transaction log is always advised as the first step if possible.

The following sections give a little more detail on the above recommendations.

Analyze Database Activity

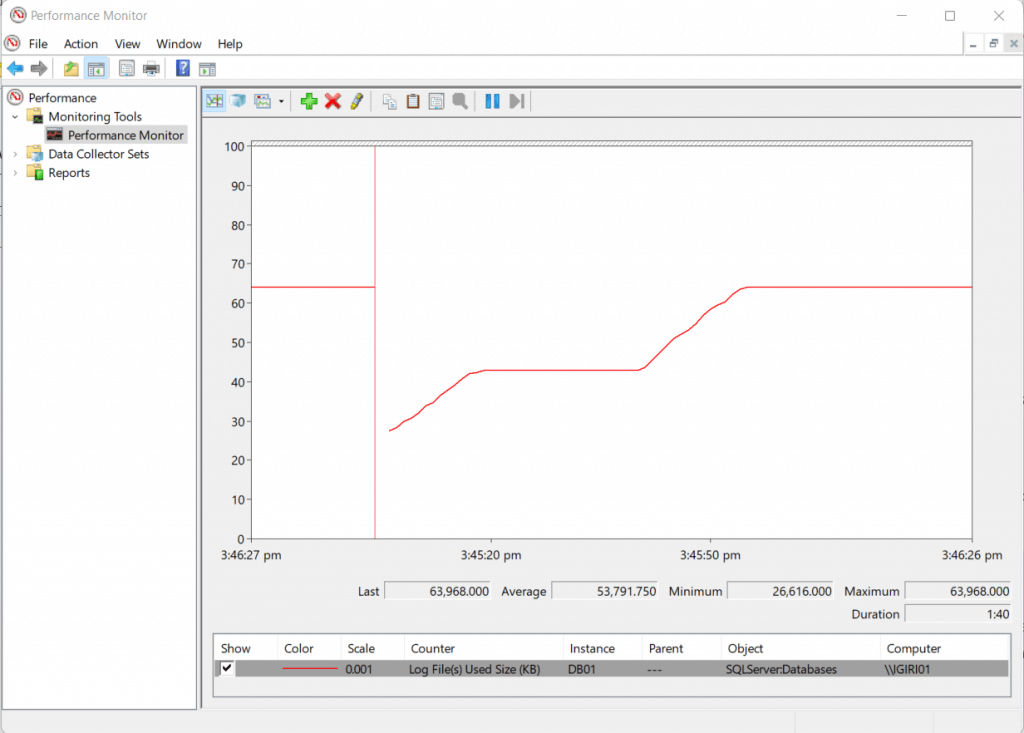

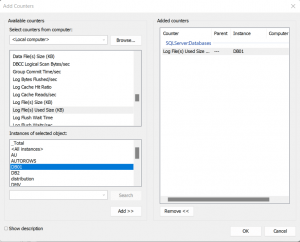

One quick way to track the transaction log file usage on your instance is to use the Log Files Used Size (KB) Performance Counter as shown in Figure 6. This counter is available on Performance Monitor under the SQLServer:Databases group (See Figure 7).

Figure 6: The Log Files Used Performance Counter

Figure 6: The Log Files Used Performance CounterNotice that this counter was enabled for the database of interest alone - DB01. This helps isolate the needs of each database rather than a case where the Total_ for all instances is used.

Figure 7: The Log Files Used Performance Counter

Schedule Routine Transaction Log Backup

Listing 5 is an example of a script which creates an SQL Agent job to backup the transaction log for specified databases at a one-hour interval. The job also sends a notification once complete so you can keep track of successful log backups. It is expected that you will have other jobs to perform full and differential backups assuming you choose this method.

It is possible to achieve this using third party backup tools such as Veritas Netbackup, Devart's SQL Backup Tool and others. This article describes a very specific use case f configuring backups with Veritas Netbackup.

-- Listing 5: Create a Job to Backup the Transaction Log

-- SQL Agent Job Script

-- Be sure to define your backup path. This can be a UNC path too

-- Replace DB1, DB2, DB3 etc. with list of databases in the instance

-- Create an operator "DatabaseAdmin" or use a name of your choice

USE [msdb]

GO

/****** Object: Job [Custom_Log_Backups] Script Date: 12/11/2016 10:07:21 ******/

BEGIN TRANSACTION

DECLARE @ReturnCode INT

SELECT @ReturnCode = 0

/****** Object: JobCategory [[Uncategorized (Local)]]] Script Date: 12/11/2016 10:07:21 ******/

IF NOT EXISTS (SELECT name FROM msdb.dbo.syscategories WHERE name=N'[Uncategorized (Local)]' AND category_class=1)

BEGIN

EXEC @ReturnCode = msdb.dbo.sp_add_category @class=N'JOB', @type=N'LOCAL', @name=N'[Uncategorized (Local)]'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

END

DECLARE @jobId BINARY(16)

EXEC @ReturnCode = msdb.dbo.sp_add_job @job_name=N'Custom_Log_Backups',

@enabled=1,

@notify_level_eventlog=0,

@notify_level_email=3,

@notify_level_netsend=0,

@notify_level_page=0,

@delete_level=0,

@description=N'No description available.',

@category_name=N'[Uncategorized (Local)]',

@notify_email_operator_name=N'DatabaseAdmin', @job_id = @jobId OUTPUT

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

/****** Object: Step [Backup Log] Script Date: 12/11/2016 10:07:22 ******/

EXEC @ReturnCode = msdb.dbo.sp_add_jobstep @job_id=@jobId, @step_name=N'Backup Log',

@step_id=1,

@cmdexec_success_code=0,

@on_success_action=1,

@on_success_step_id=0,

@on_fail_action=2,

@on_fail_step_id=0,

@retry_attempts=0,

@retry_interval=0,

@os_run_priority=0, @subsystem=N'TSQL',

@command=N'exec sp_MSforeachdb @command1=''

DECLARE @backup sysname

set @backup=N''''L:BACKUP?_'''' + convert(nvarchar,getdate(),112)+N''''.trn''''

if ''''?'''' in ("DB1","DB2","DB3")

backup log [?] to disk = @backup with compression''',

@database_name=N'master',

@flags=0

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_update_job @job_id = @jobId, @start_step_id = 1

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobschedule @job_id=@jobId, @name=N'Sch_Backup_Log',

@enabled=1,

@freq_type=4,

@freq_interval=1,

@freq_subday_type=8,

@freq_subday_interval=1,

@freq_relative_interval=0,

@freq_recurrence_factor=0,

@active_start_date=20161211,

@active_end_date=99991231,

@active_start_time=180000,

@active_end_time=235959,

@schedule_uid=N'575f95a5-b353-42b8-9b62-e09e0653c5b6'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobserver @job_id = @jobId, @server_name = N'(local)'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

COMMIT TRANSACTION

GOTO EndSave

QuitWithRollback:

IF (@@TRANCOUNT > 0) ROLLBACK TRANSACTION

EndSave:

GO

Disaster Recovery Configuration

Configuring disaster recovery is a completely different topic all together. The connection to the current topic is that in all disaster recovery methods in SQL Server, Microsoft uses transaction log backups and truncation as part of the solution. After all, one fundamental purpose of the transaction log is recovery. Transaction Log Shipping implements log backups in the background thus there is no real need to configure log backups separately. When using AlwaysOn Availability Groups, log backups are required on at least one replica.

This article describes a step by step configuration of Transaction Log Shipping with special attention to delayed recovery. Review the section Setting Up the Environment which shows that a Log Shipping configuration is essentially composed of three SQL Agent Jobs - a backup job at the primary, a copy job and a restore job (both at the secondary databases).

Backup Log and Shrink

Listing 6 shows a script used to backup the transaction log and shrink it to 2MB. Notice that a full backup is required before a log backup can be performed. Also notice that the size to which the transaction log can be shrunk is dependent on how much free space is available.

-- Listing 6: Truncate Transaction Log

-- Backup the Database (Full Backup)

USE master

GO

BACKUP DATABASE DB01

TO DISK = N'E:BackupDB01.bak';

-- Backup the Transaction Log

BACKUP LOG DB01

TO DISK = N'E:BackupDB01_Log.trn';

-- Confirm Free Space in Transaction Log

USE DB01

GO

SELECT

DB_NAME(database_id) [Database Name]

,total_log_size_in_bytes/1024 [Total Log Size (KB)]

,used_log_space_in_bytes/1024 [Used Log Space (KB)]

,used_log_space_in_percent [Used Log Space (%)]

FROM sys.dm_db_log_space_usage ;

GO

-- Shrink the Transaction Log to the Desired Size Based on Free Space

USE DB01

GO

DBCC SHRINKFILE ('DB01_log',2)

GOTransaction Log Monitoring

Microsoft provides several tools and scripts for monitoring the transaction log some of which are highlighted in the references. Third-party tools, like Redgate's SQL Monitor, dbForge Transaction Log, SQL Monitor (part of Devart's DevOps tools for database), and SQL Transaction Log Reader, help DBAs monitor and manage the transaction log. These tools typically come with visual dashboards which make things easier to see.

Conclusion

Managing transaction Log growth is a critical aspect of SQL Server database administration. This is especially true when you are still hosting instances on-premises or using virtual machines to run workloads in the cloud. Every DBA worth his salt should have proactive, automated ways of doing this.

It is worth noting that asides transactions on user databases, maintenance activities such as index rebuilds can also cause significant growth. This must be factor into maintenance plans.