Introduction

In my last article, Working with Azure IoT Central at SQLServerCentral, I gave an overview of Azure IoT Central. I had connected my mobile phone as a device to Azure IoT Central and collected the telemetry data. In this article, I will export the telemetry data continuously from Azure IoT Central to Azure SQL Database through Azure Event Hubs and Stream Analytics.

Azure Event Hubs

Azure Event Hubs is a fully managed Platform-as-a-Service (PaaS). Azure Event Hubs is a distributed big data streaming platform with low latency and seamless integration and event ingestion service. It can receive and process millions of events per second. Event Hubs provides a unified streaming platform with a time retention buffer, decoupling event producers from event consumers.

Here, the solution is designed with Azure IoT Central as the event producer and Stream Analytics as the event consumer. I will discuss Azure Event Hubs in detail in a separate article.

Azure Stream Analytics

Azure Stream Analytics is a real-time analytics and complex event-processing engine designed to analyze and process high volumes of fast streaming data from multiple sources simultaneously. An Azure Stream Analytics job consists of an input, a query, and output(s). The input may be Azure Event Hubs, Azure IoT Hub, or Azure Blob Storage. The query is based on the SQL language and can be used to filter, sort, aggregate, and join streaming data over a period of time. Each job may have one or more outputs.

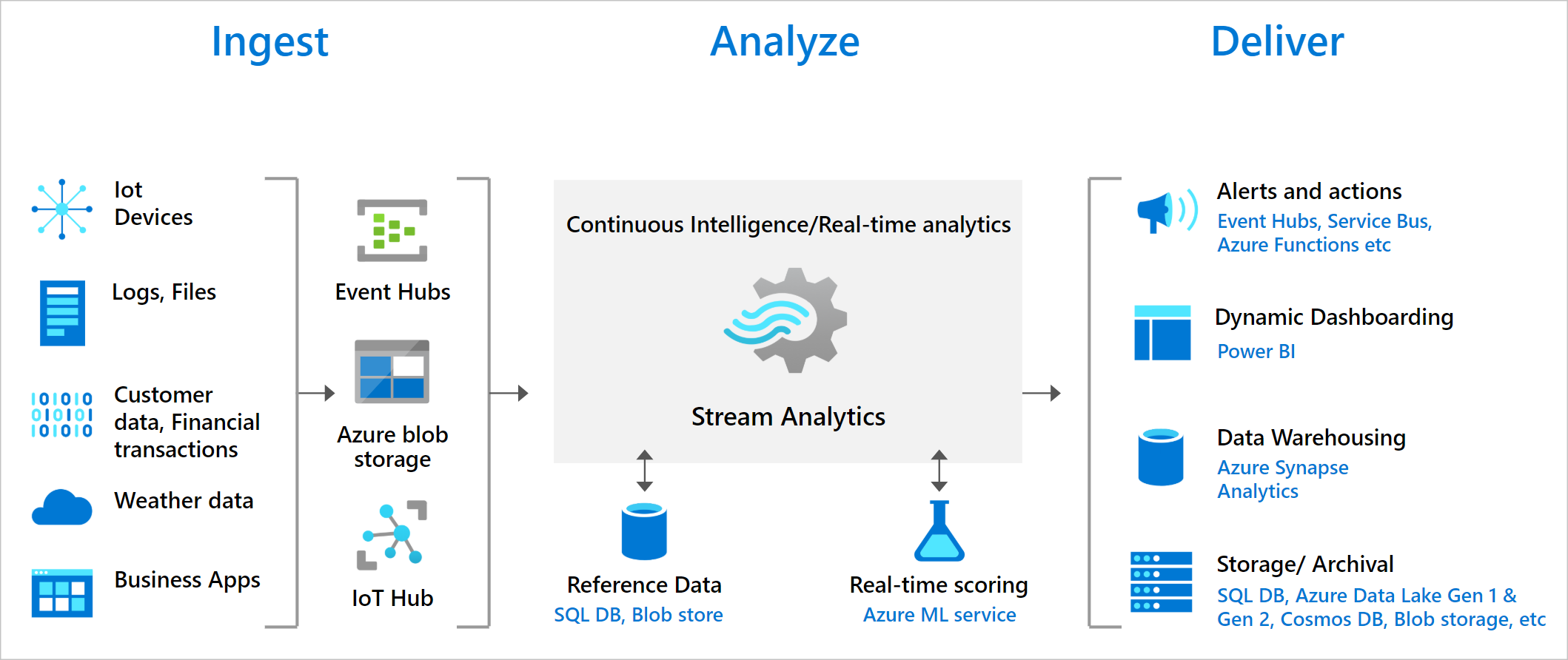

The following image (taken from Microsoft site) shows how the data is sent to Stream Analytics, analyzed, and sent for storage or presentation:

Solution Architecture

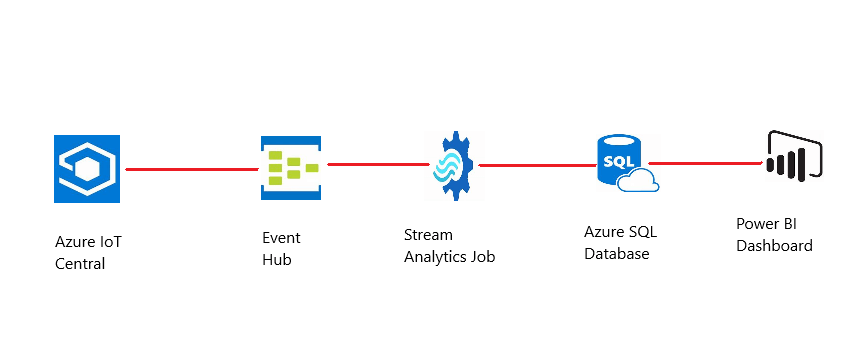

Azure Stream Analytics is best used with other services to create an end-to-end solution. The streaming data can be first populated in a SQL database. Then, I may use Power BI's SQL connector to query the SQL database for the latest data.

If Power BI is directly connected with Stream Analytics, real-time dashboards and alerts can be created. But using SQL database in the solution gives more flexibility. This solution maximizes Power BI's capabilities to further slice and dice the data for reports and provides more visualization options. Other dashboard solutions also can be used to generate reports from the SQL database. The solution with SQL database is optimal for jobs with latency requirements greater than one second.

The maximum throughput to SQL Database from Azure Stream Analytics is currently around 24 MB/s. If the event sources in the solution produce data at a higher rate than 24 MB/s, processing logic is required to be used in Stream Analytics to reduce the output rate to SQL. Techniques such as filtering, windowed aggregates can be used.

Implementation

First, Azure IoT Central setup is done and the mobile phone is connected as a device to collect the telemetry data. The previous article, Working with Azure IoT Central, contains the details about Azure IoT Central. I will create Event Hub, Stream Analytics job, and Azure SQL Database from the Azure Portal. Then, I will make the necessary configurations to transfer the telemetry data from Azure IoT Central to Azure Event Hub, Event Hub to Stream Analytics job and then finally to Azure SQL database.

Create an Event Hubs

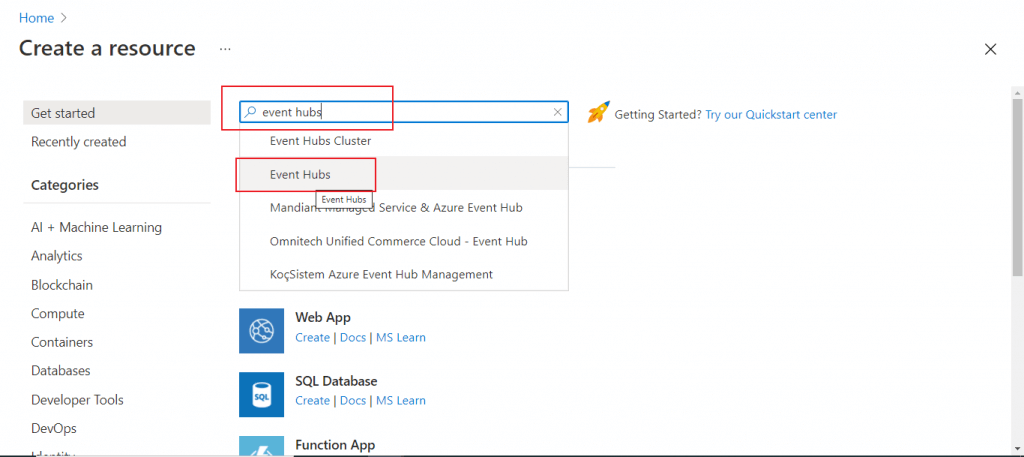

In the Home page of Azure portal, I search for the resource event hubs.

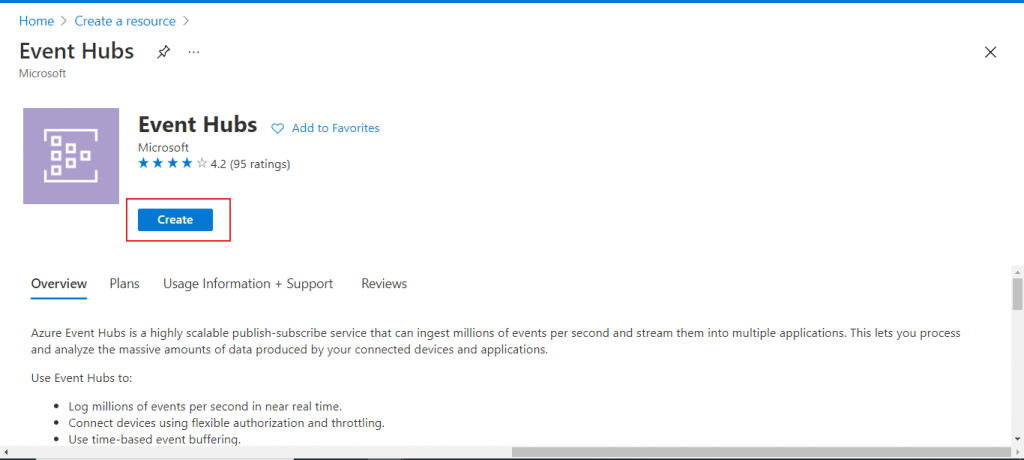

In the next screen, I press the Create button.

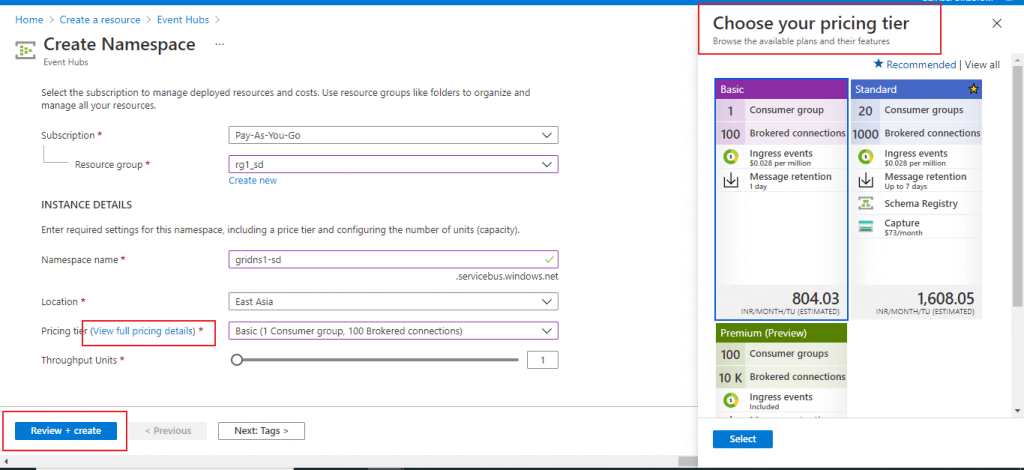

Create a namespace

In the next screen, I have to create an Event Hubs namespace. A Namespace is a management container for event hubs. I provide a name and location for the namespace. Also, I need to select the pricing tier. The pricing tier decides the number of consumer groups and connections allowed for the event hub namespace. I go for the Basic tier. I press the 'Review + Create' button.

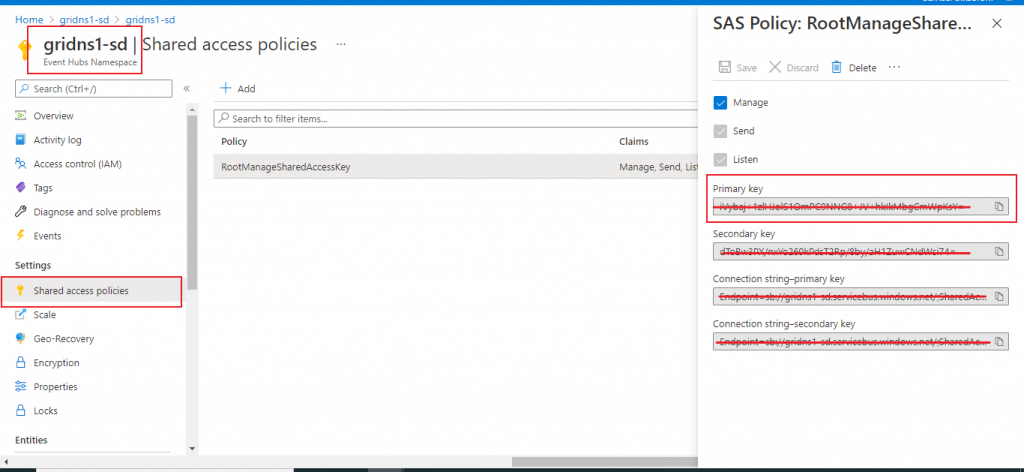

Save the connection string

Once the Event Hubs namespace is created, I go to the 'Shared access policies' tab. I click on the RootManagerSharedAccessKey link and a pop-up window opens. I make a copy of the 'Connection string- primary key' and save that in a document. I will need this key value at a later step.

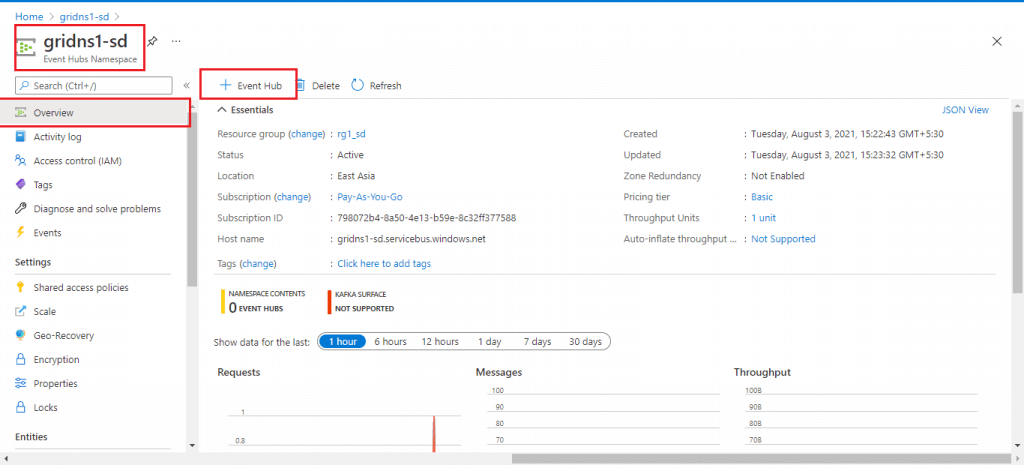

Create an Event Hub

I go to the Overview tab of the Event Hubs namespace and click on the '+ Event Hub' button to create an Event Hub under the namespace.

In the next screen, I need to provide the name and and the partition count for the event hub. I keep the partition count value as 2. Then, I press the Create button.

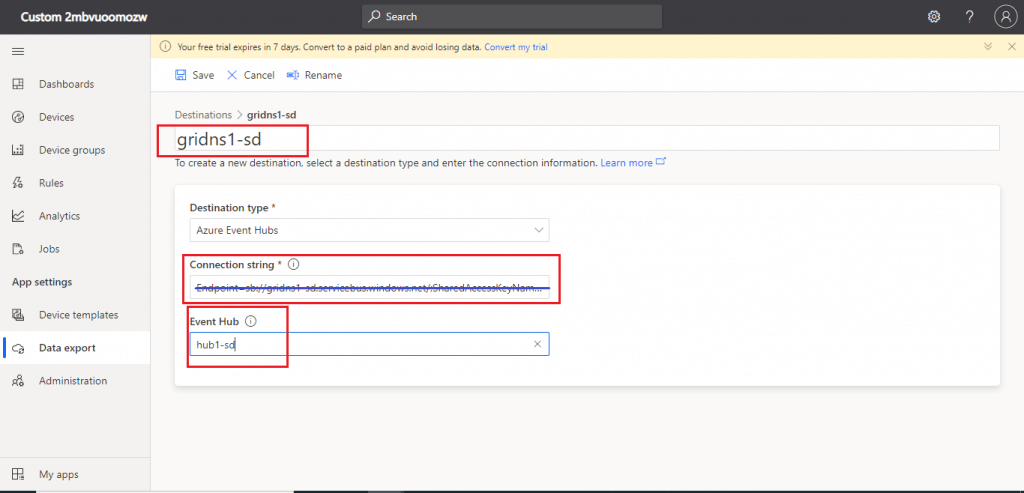

Destination for Data export

Now, I come to the IoT Central Home Page. My mobile phone is connected as a device in IoT Central. I go to the Destination tab of Data Export. I select the Destination Type as Azure Event Hubs. Then, I provide the Connection String of Event Hubs as saved in a document in an earlier step. I select the name of the Event Hubs as created in the last step. I press the Save button.

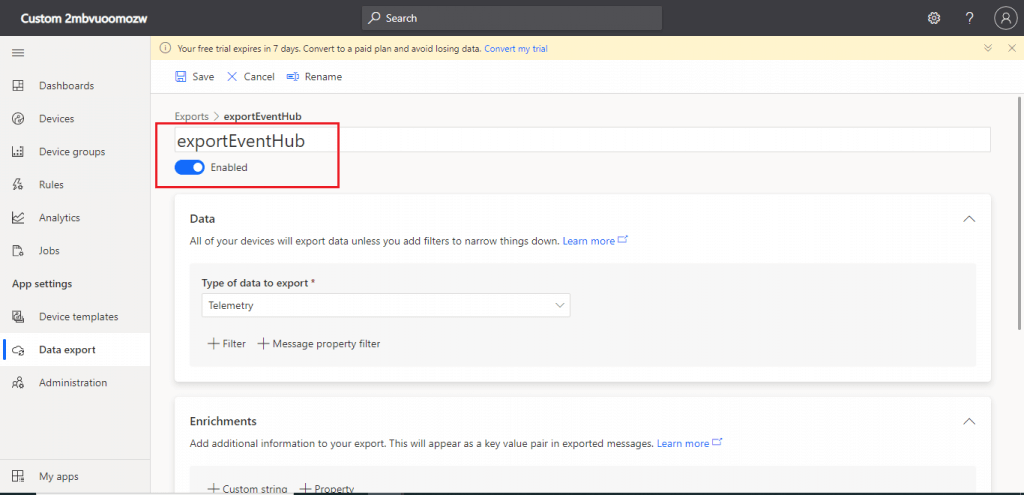

Create the export

I go to the Exports tab of Data Export. I provide a name for the export. Then, I select the type of data as Telemetry. I select the destination already created in the earlier steps. I press the Save button.

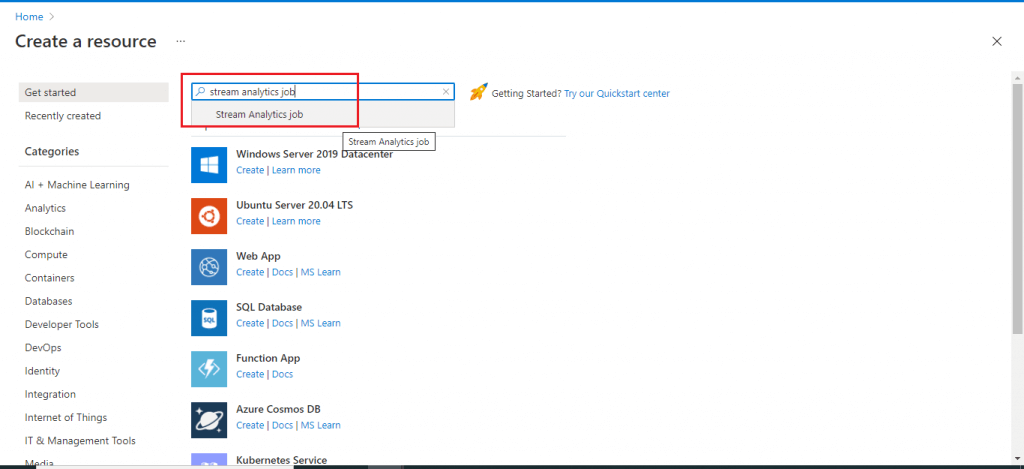

Create a Stream Analytics job

I go back to the Azure Portal again. I search for the resource named stream analytics job and click on it.

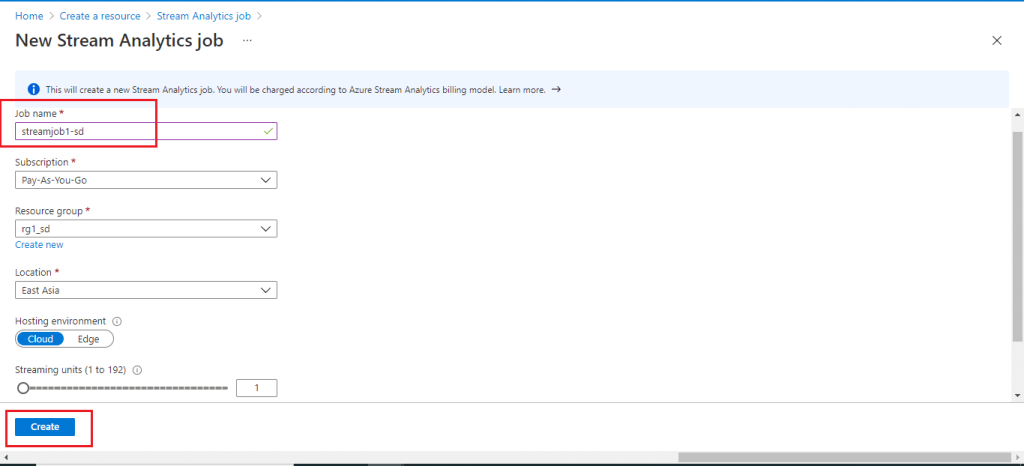

In the next screen, I give a name for the new Stream Analytics job. I need to select the number of streaming units. I keep it with the minimum value 1. Then, I press the Create button.

Streaming Units (SUs) represents the computing resources that are allocated to execute a Stream Analytics job.

Add Stream Input

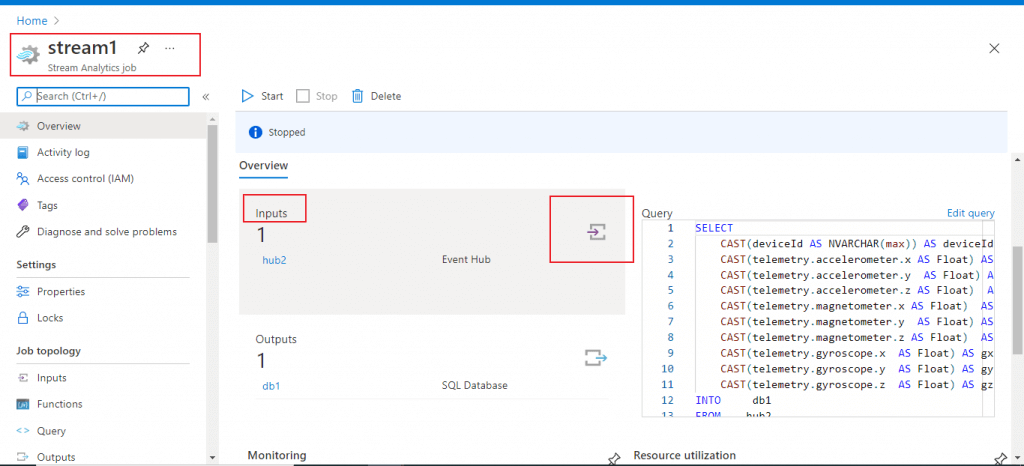

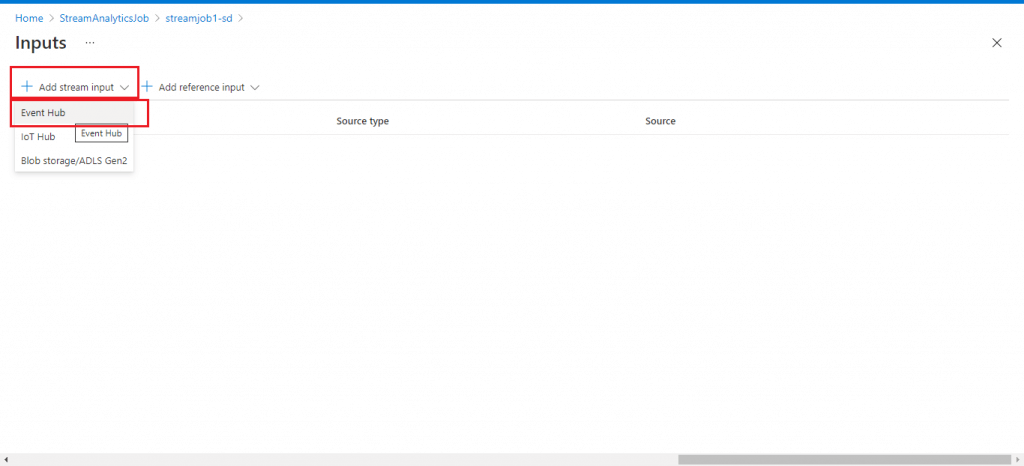

Once the Stream Analytics job is created, I go to the Overview page. I select the arrow link for Inputs.

In the next screen, I need to add the stream input from a drop-down list. The available options are:

- Event Hub

- IoT Hub

- Blob Storage/ADLS Gen2

I select Event Hub.

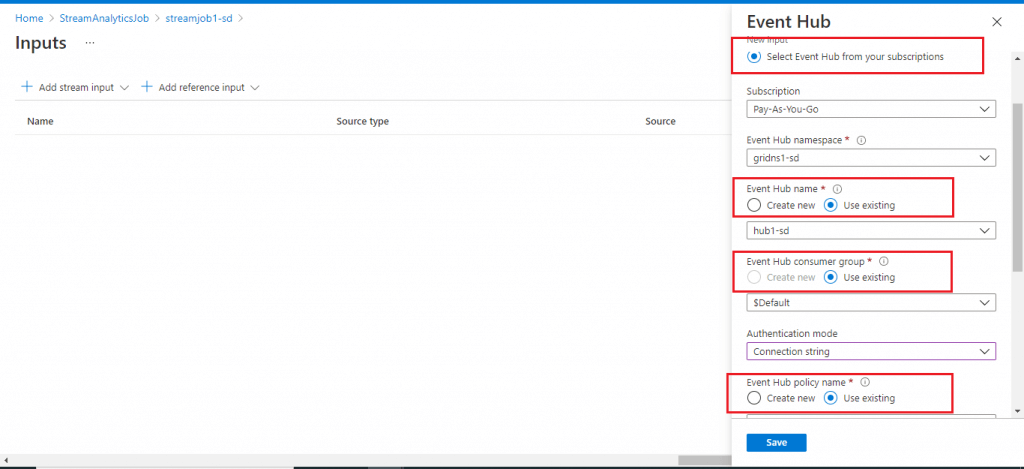

A pop-up window opens where I need to provide the details for the Event Hub. I choose the Event Hub namespace and the Event Hub as created in the earlier steps. I press the Save button. Stream Input is created now.

Create a SQL Database

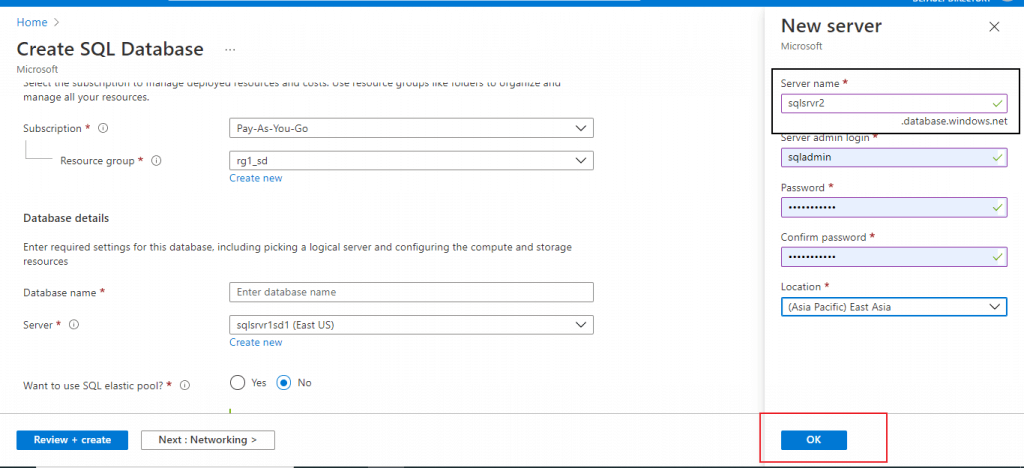

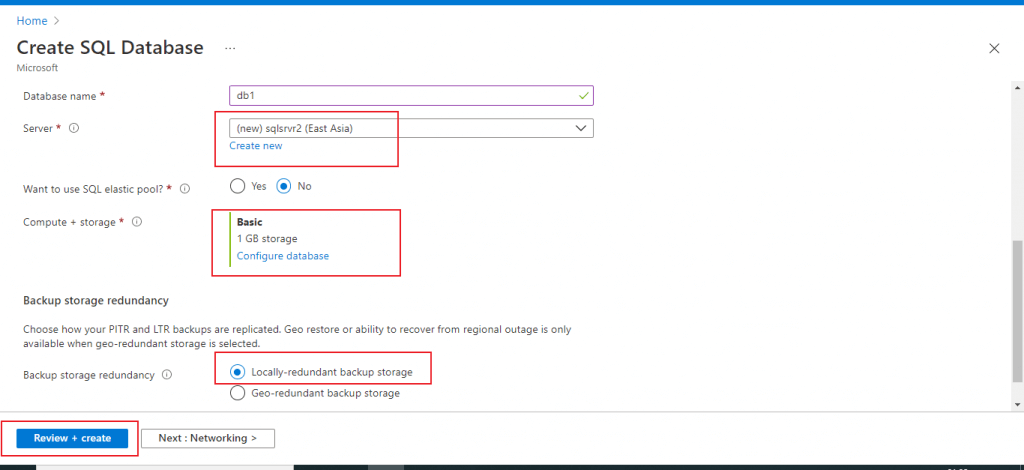

I go back to the Home page of Azure portal and create a SQL database. I will use the SQL database as stream output in the Stream Analytics job. The Stream Analytics job and the logical server for Azure SQL database should be in the same region. Otherwise, there may be some communication required between the two different regions and that may incur some extra cost.

So, I create a logical server in the same region of the Stream Analytics job. Then, I create a new SQL database under this server.

I provide the required details and press the 'Review + create' button. The SQL database is created now.

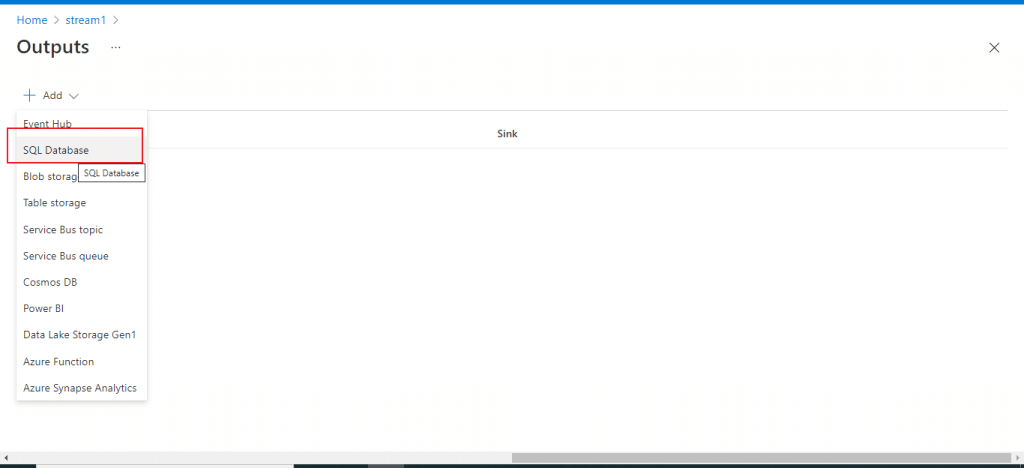

Add Stream Output

I go back to the Stream Analytics job. I go to the Outputs link. I select SQL Database as an output from a drop-down list provided.

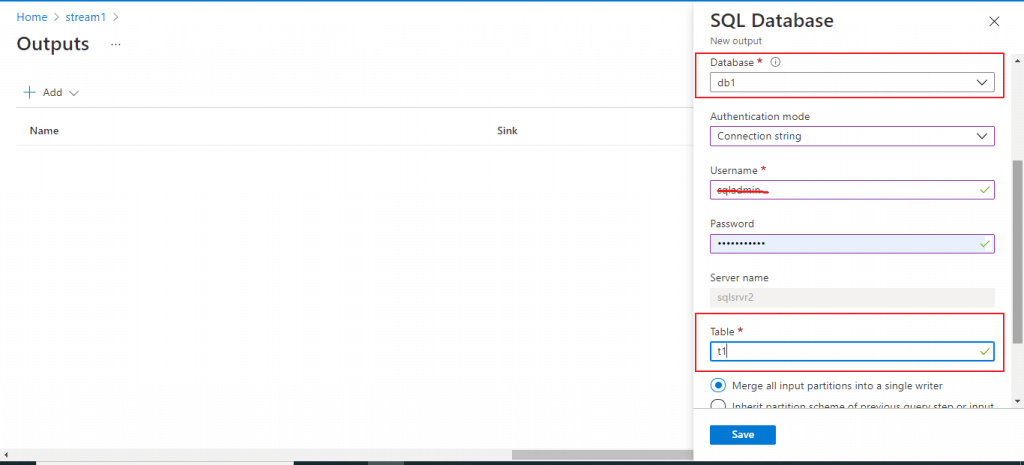

A pop-up window is open. I need to provide the details of the SQL database created. I already added the client IP address of the Stream Analysis job in SQL Server firewall.

I select the database from the drop-down list. I provide the table name t1. This table is already created in Azure SQL database. I press the Save button.

The structure of the table t1 in SQL database is given below.

create table t1 ( deviceId NVARCHAR(max), ax float, ay float, az float, mx float, my float, mz float, gx float, gy float, gz float, )

Edit the Query

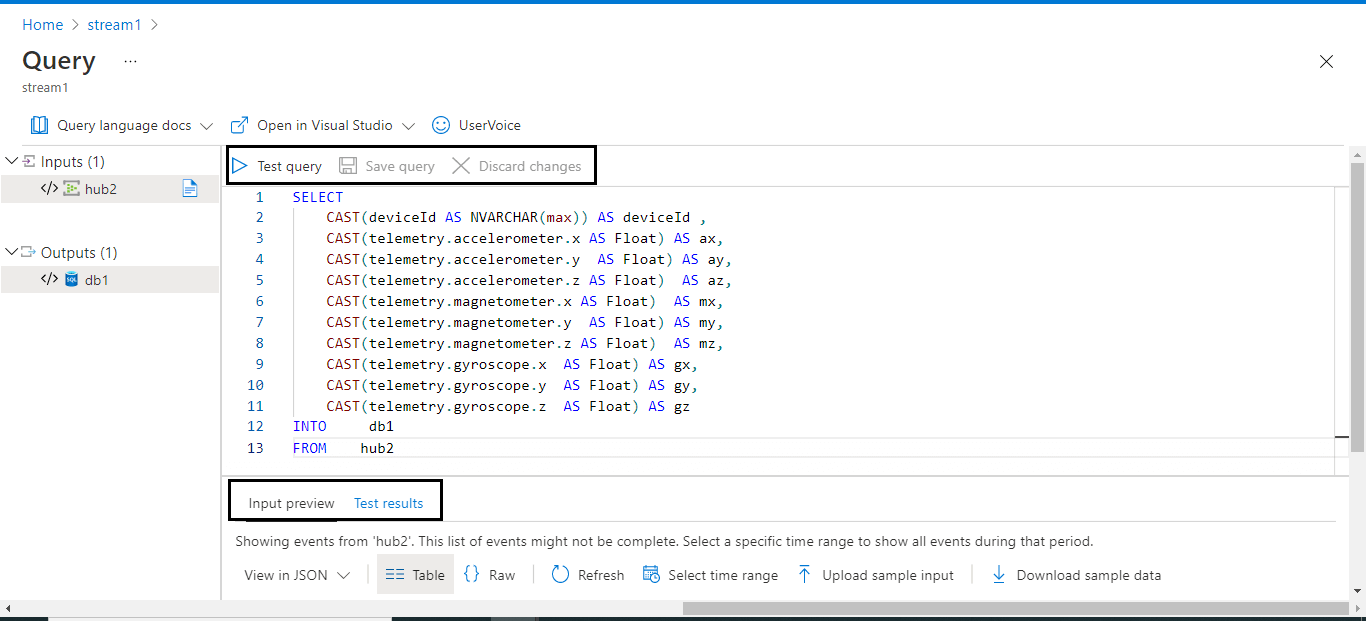

Input and Output are now set for the Stream Analytics job. I press on the Edit Query link at the right.

The Query window is now open. I may modify the query. Then, I can test the query and save the query as well. At the bottom, test results are available for the queries executed. Data preview from the input stream is also available.

I write the following query to retrieve details from the telemetry data as collected from the mobile phone. Data type of each column is explicitly converted to match the table structure created in the Azure SQL database. I check the test results for the query and return to the Stream Analytics job overview page.

SELECT

CAST(deviceId AS NVARCHAR(max)) AS deviceId ,

CAST(telemetry.accelerometer.x AS Float) AS ax,

CAST(telemetry.accelerometer.y AS Float) AS ay,

CAST(telemetry.accelerometer.z AS Float) AS az,

CAST(telemetry.magnetometer.x AS Float) AS mx,

CAST(telemetry.magnetometer.y AS Float) AS my,

CAST(telemetry.magnetometer.z AS Float) AS mz,

CAST(telemetry.gyroscope.x AS Float) AS gx,

CAST(telemetry.gyroscope.y AS Float) AS gy,

CAST(telemetry.gyroscope.z AS Float) AS gz

INTO db1

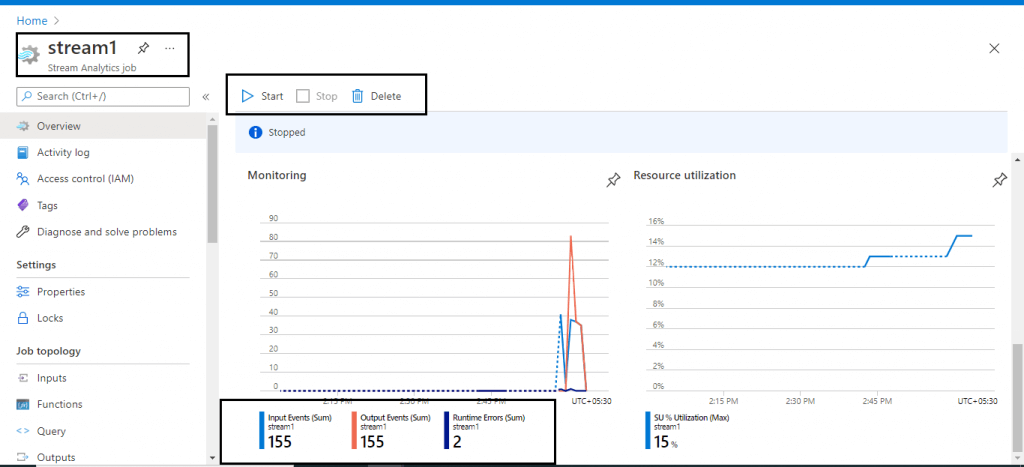

FROM hub2I press the Start button. The Stream Analytics job Monitoring can be checked to understand how data streams are transferred from the input Event Hub to the output Azure SQL Database.

I can check the SQL table as well to check how streams of telemetry data are getting populated in the SQL Database.

SELECT deviceId,ax,ay,az,mx,my,mz,gx,gy,gz FROM t1

The data in Azure SQL database table may be used in querying and reporting purposes. Also, I may connect the data from Power BI Desktop and generate some useful graphical reports.

Conclusion

In this article, I did the step-by-step implementation for populating Azure SQL database with the telemetry data collected from my mobile phone in Azure IoT Central. Data export is done to Azure Event Hub. Then, Azure Stream Analysis job transfers the data from Event Hub to Azure SQL database using query. Data in the SQL table may be used for reporting and analytics purpose. Data from Stream Analytics may be sent to different outputs depending on the purpose. I will discuss different solution approaches for handling IoT in the future articles.