In Part 1 we did an overview of partitioning and related techniques such as

archiving. This time we'll dig deeper into archiving which is perhaps the

easiest technique to use regardless of the platform.

The common archiving strategy is to do so by a date column. To illustrate a

simple archiving strategy we'll use the Person.Contact table in Adventureworks.

Start by creating an archive table with the same structure as Person.Contact and

I like to add two additional columns; ArchiveID which will be an identity and

ArchivedDate which will be a datetime set to a default of GetUTCDate() when the

row is added to the table. You can use the technique of your choice for building

this table but I like the simplicity of select into because I can get a plain

table with no indexes.

select * into person.contactarchive from person.contact where 1=0

I then use the SSMS designer to add ArchiveID and DateAdded, and I make ArchiveID the primary key. This is the final result:

CREATE TABLE [Person].[contactarchive]( [ContactID] [int] NOT NULL, [NameStyle] [dbo].[NameStyle] NOT NULL, [Title] [nvarchar](8) NULL, [FirstName] [dbo].[Name] NOT NULL, [MiddleName] [dbo].[Name] NULL, [LastName] [dbo].[Name] NOT NULL, [Suffix] [nvarchar](10) NULL, [EmailAddress] [nvarchar](50) NULL, [EmailPromotion] [int] NOT NULL, [Phone] [dbo].[Phone] NULL, [PasswordHash] [varchar](128) NOT NULL, [PasswordSalt] [varchar](10) NOT NULL, [rowguid] [uniqueidentifier] NOT NULL, [ModifiedDate] [datetime] NOT NULL, [ArchiveID] [int] IDENTITY(1,1) NOT NULL, [DateAdded] [smalldatetime] NOT NULL CONSTRAINT [DF_contactarchive_DateAdded] DEFAULT (getutcdate()), CONSTRAINT [PK_contactarchive] PRIMARY KEY CLUSTERED ( [ArchiveID] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY] ) ON [PRIMARY]

A few points worth mentioning before we continue. One is that it's worthwhile

to be consistent in your naming convention. I like adding the 'archive' postfix

so that I can tell at a glance if a table has an archive relative in the

database. I also like using archive rather than history as history is often used

for different purposes - auditing being the most common, where you use triggers

to capture some or all changes to a table. I've also

elected to lightly index, which makes sense if you won't be accessing the table

at all, but in many cases you may be hitting the archive table frequently and in

that case you should index to the same degree or more than the base table, since

the only time the indexes will be affected will be during the archive operation.

Another point is that if you follow

my example and use an identity column on the archive table you have to be sure

that all upstream code is using Scope_Identity() rather than @@Identity or bad

things will happen as the incorrect ID is returned to the caller. There

are alternatives to avoid that possibility. One is to just use a

uniqueidentifier, the other is to just continue to use ContactID as the primary

key. The latter works fine if you know you'll be archiving each record once and

only once (which is the scenario we are implementing next and is most common).

Now let's build the archiving code and for this example we'll use

ModifiedDate as our key column.

alter proc usp_PersonContactArchive @ThruDate datetime as set nocount on set xact_abort on begin transaction --first we stabilize the set of records we want to move create table #temp (ContactID int) create unique index ndxMain on #Temp (ContactID) insert into #temp ( ContactID) select ContactId from Person.Contact where ModifiedDatePretty vanilla code, the goal is to make sure that we don't delete records from the source table without them being in the archive table. An alternate, and perhaps slightly more robust, implementation would be to join back to the archive table to positively identify records to delete, like this:

create proc usp_PersonContactArchive2 @ThruDate datetime as set nocount on set xact_abort on begin transaction --put the records in the archive table INSERT Person.contactarchive (ContactID ,NameStyle ,Title ,FirstName ,MiddleName ,LastName ,Suffix ,EmailAddress ,EmailPromotion ,Phone ,PasswordHash ,PasswordSalt ,rowguid ,ModifiedDate ) select C.ContactID ,NameStyle ,Title ,FirstName ,MiddleName ,LastName ,Suffix ,EmailAddress ,EmailPromotion ,Phone ,PasswordHash ,PasswordSalt ,rowguid ,ModifiedDate from Person.Contact C where ModifiedDateAll that remains is to test it and to set up a job to run it. The nice thing about this implementation is that if the job fails, the next run will catch all the records the previous run should have with no extra tracking needed. The job just needs a single step, though in practice I put all my archiving code into a single job with a separate step for each table. Our calling syntax is simple:

declare @ThruDate datetime set @ThruDate = DateAdd(yy, -9, getutcdate()) exec usp_PersonContactArchive @ThruDateBecause we're using Adventureworks and their is no new data being added, I've

elected to archive anything more than a nice odd 9 years old each time it runs.

We can run it at whatever internal makes sense. It's less work to do it daily so

that we move smaller chunks of data, but we might have a business situation

where we want to archive once a year on Jan 1.

This is simple archiving and ignores two possibilities. One is that you'll

have foreign key constraints and the other is that the number of records to be

archived is so large that doing it in a single transaction is prohibitive.

Foreign keys can range from mild pain to severe. Let's look at a not quite so

simple situation where we our target table is part of a foreign key

relationship. I've created a table called TestFKey and populated it with data

from Person.Contact, and then added the foreign key constraint to person.contact.

CREATE TABLE [dbo].[TestFkey]( [contactid] [int] IDENTITY(1,1) NOT NULL, [modifieddate] [datetime] NOT NULL, CONSTRAINT [PK_TestFkey] PRIMARY KEY CLUSTERED ( [contactid] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY] ) ON [PRIMARY]ALTER TABLE [dbo].[TestFkey] WITH CHECK ADD CONSTRAINT [FK_TestFkey_Contact] FOREIGN KEY([contactid]) REFERENCES [Person].[Contact] ([ContactID])To test, I ran this statement:

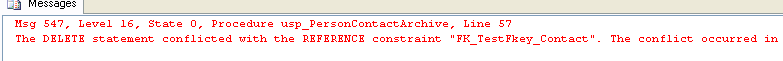

exec usp_PersonContactArchive '12/30/1998'And this is the resulting error:

A check of our archive table shows that we're still transactionally

consistent. When the delete failed our transaction failed along with it. This

will happen if even one foreign key relationship is still valid within our

target set. The only way to resolve is to either only move records without a

foreign key relationship (not incredibly useful), or we have to archive our way

from the bottom up, starting with records in the TestFkey table. With a good

number of foreign keys and add in some business rules that are any more complex

than date based and it gets pretty complex. To resolve this one we would just

mirror our archiving process pointed at the TestFkey table, put that step ahead

of our Person.Contact step and let it run.

Let's revisit our other potential issue which is that we may have too many

records to process in one pass. Only experience can tell you what that number

is, but it's easy enough to implement. First we need to modify our archiving

proc a bit (changes are highlighted):

alter proc usp_PersonContactArchive2 @ThruDate datetime, @ArchiveCount int output as set nocount on set xact_abort on begin transaction --put the records in the archive table INSERT Person.contactarchive (ContactID ,NameStyle ,Title ,FirstName ,MiddleName ,LastName ,Suffix ,EmailAddress ,EmailPromotion ,Phone ,PasswordHash ,PasswordSalt ,rowguid ,ModifiedDate ) select top 1 C.ContactID ,NameStyle ,Title ,FirstName ,MiddleName ,LastName ,Suffix ,EmailAddress ,EmailPromotion ,Phone ,PasswordHash ,PasswordSalt ,rowguid ,ModifiedDate from Person.Contact C where ModifiedDate order by ModifiedDate set @ArchiveCount = @@rowcount --delete from source delete from person.contact where ContactId in (select c.contactid from person.contact c inner join person.contactarchive ca on c.contactid = ca.contactid) commit transactionThen we add a higher level calling proc that will call our original proc

multiple times:

alter proc usp_PersonContactArchiveLoop as set nocount on declare @ThruDate datetime declare @RecCount int declare @MaxLoop int set @ThruDate = DateAdd(yy, -8, getutcdate()) set @maxLoop = 10 while @MaxLoop > 0 begin exec usp_PersonContactArchive2 '1/1/1999', @RecCount output print 'Archived ' + convert(varchar(10), @RecCount) set @MaxLoop = @MaxLoop - 1 if @RecCount = 0 break endI've designed this one to loop up to 10 times and to archive one record on

each pass. In practice we might want to let it run until completion, or have it

break once a certain time is reached so that it doesn't continue to run into

production hours.

Hopefully that will get you started thinking about how you might go about

archiving in your current situation. Archiving can lead to some performance

gains and it can delay having to purchase more expensive disk space if you move

the archive tables to cheaper storage. The downside is that the data is not

quite as easily available as it used to be. Next time we'll dig into partitioned

views!