Intro

In 2008 I wrote an article called “Scope: The drastic caveat with Logon Triggers.” Ever since, we have been using Event Notifications to capture logins on our SQL Server instances. Issues were very rare and usually occurred on servers under extreme load from many connections. In those cases, we stopped the continuous monitoring and only monitored for a couple of hours at random moments each month. This was the case on 3 of our 150+ instances.

The bite in the back

Recently, we had major downtime on one of our Azure Managed Instances because this mechanism had been activated and was not shut down after the monitoring window. Over a weekend it recorded more than 210 million events. The processing of these queues could not keep up with the load, which blew up msdb (+300 GB) as well as our DBA database (+500 GB). Bear in mind that Azure Managed Instances are a PaaS solution, so manipulating system databases (master, msdb, …) is very much restricted.

It took quite a while to get the notification services dropped. After that, we restarted production, cautioning users that it might still be slow because of the extra cleanup actions that were needed.

For regular SQL Server instances, in such a situation, I would restore msdb to a point in time before the incident occurred, but with an Azure Managed Instance this was not an option. Cleaning up took quite a while because we needed to perform an “end conversation” for all messages in the queue. This took more than 12 hours!
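To illustrate what such a cleanup can look like, here is a minimal sketch that ends conversations one by one. It is not the script we ran: the target service name is hypothetical, the use of WITH CLEANUP is an assumption, and the permissions available on a Managed Instance may differ from what this needs.

```sql
-- Run in the database that holds the stuck conversations (msdb in the incident above).
-- Assumption: only conversations towards one target service are ended, so that
-- other Service Broker users in the same database (e.g. Database Mail) are left alone.
DECLARE @TargetService nvarchar(256) = N'DBA_LoginEventNotificationService'; -- hypothetical name
DECLARE @handle uniqueidentifier;
DECLARE @ended  int = 0;

WHILE 1 = 1
BEGIN
    SET @handle = NULL;

    SELECT TOP (1) @handle = ce.conversation_handle
    FROM sys.conversation_endpoints AS ce
    WHERE ce.far_service = @TargetService;

    IF @handle IS NULL BREAK;   -- nothing left to clean up

    -- WITH CLEANUP drops the endpoint and its messages without notifying the far side
    END CONVERSATION @handle WITH CLEANUP;
    SET @ended += 1;

    -- Progress message every 10,000 conversations
    IF @ended % 10000 = 0
        RAISERROR(N'%d conversations ended so far', 0, 1, @ended) WITH NOWAIT;
END;
```

Ending conversations one at a time is slow by nature, which fits with a cleanup of this size taking many hours.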

The main cause of this crisis was failing resource monitoring on the Managed Instance resource.

The nasty taste in the mouth

Even though the main cause was not in our hands, the fact that an actual plant had suffered downtime because we had not shut down this monitoring left us with a bad feeling. We knew Extended Events existed, but we had never felt the need to adopt them, because our current solution worked fine for our needs.

So, we got onto the matter and started figuring out how Extended Events could serve our needs. In the meantime, we mitigated the problem on our (DBA) side by creating a SQL Agent job that monitors sys.sysxmitqueue in msdb. This job halts the XE session whenever there are more than 10,000 messages in this queue and alerts the DBA team. Quick and dirty, we know, but this was only a temporary solution.
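A job step for such a check could look roughly like the sketch below. It is an illustration only: it reads sys.transmission_queue, the documented catalog view, rather than the internal table, and the action to take when the threshold is exceeded is left as a placeholder.

```sql
-- Sketch of a SQL Agent job step (T-SQL) that checks the msdb transmission queue.
DECLARE @Threshold bigint = 10000;
DECLARE @Queued    bigint;

SELECT @Queued = COUNT_BIG(*)
FROM msdb.sys.transmission_queue;

IF @Queued > @Threshold
BEGIN
    -- Placeholder: stop the monitoring here, for example by stopping a session:
    -- ALTER EVENT SESSION [<SessionName>] ON SERVER STATE = STOP;

    -- Severity 16 makes the job step fail, so the job's failure notification
    -- can alert the DBA team.
    RAISERROR(N'msdb transmission queue holds %I64d messages (threshold %I64d).',
              16, 1, @Queued, @Threshold);
END;
```

Counting rows is cheap while the queue is small, which is exactly the state this guard is meant to preserve.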

Building it for our SQL Server instances

We started our quest on our own DBA development SQL Server instance. As we know this engine's behavior quite well, this was our preferred choice. Fairly quickly, our search engine pointed us to a number of posts related to failed logins. As we monitor those, too, we decided to start there. These were two of our results:

- SQLShack's “Using Extended Events to review SQL Server failed logins”

- Steve Jones’ “Tracking Logins with Extended Events”

We checked the syntax of CREATE EVENT SESSION in the Microsoft T-SQL documentation. We set it up on our test instance and looked at how it presented the information, directly in SQL Server Management Studio.

Below is the Extended Events session we set up to capture 18456 errors (i.e., login failed):

CREATE EVENT SESSION [DBA_TrackFailedLogins] ON SERVER
ADD EVENT sqlserver.error_reported(
    ACTION(sqlserver.client_app_name, sqlserver.client_hostname, sqlserver.database_id, sqlserver.nt_username, sqlserver.session_nt_username, sqlserver.username)
    WHERE([severity] = (14) AND [error_number] = (18456) AND [state] > (1)))
ADD TARGET package0.event_file(
    SET filename = N'D:\MSSQL15\MSSQL\Log\DBA_XE_TrackFailedLogins_20211007_'
      , max_file_size = (2)
      , max_rollover_files = (5) )
WITH (MAX_MEMORY = 2048 KB
    , EVENT_RETENTION_MODE = ALLOW_MULTIPLE_EVENT_LOSS
    , MAX_DISPATCH_LATENCY = 3 SECONDS
    , MAX_EVENT_SIZE = 0 KB
    , MEMORY_PARTITION_MODE = NONE
    , TRACK_CAUSALITY = OFF
    , STARTUP_STATE = OFF );
ALTER EVENT SESSION [DBA_TrackFailedLogins] ON SERVER STATE = START;

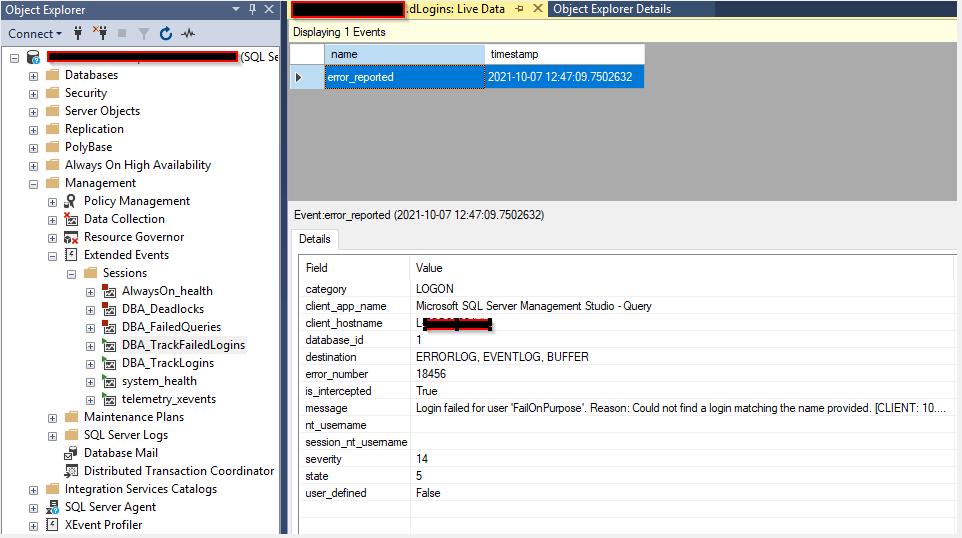

As you can see in the image, the information in the extended event is quite interesting.

We can see who is trying to log on from which client machine, using which application and account, and from what IP address. The IP address is what follows [CLIENT: 10…

As you can see in the event session DDL, we chose to record this information in a file. The parameters max_file_size and max_rollover_files control the size and the number of files kept by this target. SQL Server monitors the active file, rolls over to a new file whenever the current file reaches the given size, and removes the oldest files once the number of files exceeds max_rollover_files.

With this out-of-the-box mechanism, disk space consumption stays within the total maximum size, here 5 files of 2 MB each, or about 10 MB.

As we also want to persist this information in our DBA database, we needed to figure out how to do that. We produced a stored procedure (spc_DBA_Process_XE_FailedConnectionTracker, see attached scripts) that handles it all:

- Keep track of the metadata of its last run

- Import the extended events result files

- Extract the wanted data from the XML attributes

- Store the data into a table
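The import and extract steps in the list above can be sketched as a single query. This is not the attached stored procedure, only a minimal illustration that reuses the file path from the session definition with a wildcard; the column aliases and the chosen data types are assumptions.

```sql
-- Read the event files written by the session and shred the XML into columns.
SELECT
    x.event_xml.value('(/event/@timestamp)[1]', 'datetime2(3)')                               AS event_time_utc,
    x.event_xml.value('(/event/data[@name="error_number"]/value)[1]', 'int')                  AS error_number,
    x.event_xml.value('(/event/data[@name="state"]/value)[1]', 'int')                         AS error_state,
    x.event_xml.value('(/event/data[@name="message"]/value)[1]', 'nvarchar(2048)')            AS error_message,
    x.event_xml.value('(/event/action[@name="client_hostname"]/value)[1]', 'nvarchar(256)')   AS client_hostname,
    x.event_xml.value('(/event/action[@name="client_app_name"]/value)[1]', 'nvarchar(256)')   AS client_app_name,
    x.event_xml.value('(/event/action[@name="username"]/value)[1]', 'nvarchar(256)')          AS username,
    f.file_name,     -- together with file_offset, usable as a "last processed" marker
    f.file_offset
FROM sys.fn_xe_file_target_read_file(
         N'D:\MSSQL15\MSSQL\Log\DBA_XE_TrackFailedLogins_20211007_*.xel',
         NULL, NULL, NULL) AS f
CROSS APPLY (SELECT CAST(f.event_data AS xml) AS event_xml) AS x;
```

Storing the last file_name and file_offset that were processed is one way to implement the "last run" bookkeeping, because the function accepts an initial file name and offset as its third and fourth arguments.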

All that remained was to create a SQL Agent job that executes this stored procedure on a regular basis.

When we notice too many incoming failed logins, all we need to do to stop this data load is disable the SQL Agent job that processes the Extended Events result files. SQL Server itself keeps the Extended Events files within the specified limits.
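Disabling the processing job can be done from SSMS or with a single call, as in this sketch. The job name is hypothetical.

```sql
-- Disable the job that imports the Extended Events files (hypothetical job name).
EXEC msdb.dbo.sp_update_job
     @job_name = N'DBA - Process XE FailedConnectionTracker',
     @enabled  = 0;   -- set back to 1 to resume processing
```

The event session itself keeps running, so nothing is lost beyond what the file rollover discards in the meantime.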

Steve’s article pointed us to the SSMS solution for handling successful logins. We adapted that to store results on the file system and developed a stored procedure to process it all. This time, we record the metadata of successful logins and keep track of activity by updating the tsLastUpdate column at most once a day, when new events for a given row come in. We are not using a MERGE statement for this, but that is another story.
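The "update once a day, insert when new" logic can be written without MERGE as an UPDATE followed by an INSERT. The sketch below only illustrates that pattern; the temporary tables, their columns (other than tsLastUpdate), and the sample rows are invented for the example and do not reflect the attached scripts.

```sql
-- Hypothetical tracker table and a batch of freshly imported login events
CREATE TABLE #ConnectionTracker
(
    LoginName    sysname       NOT NULL,
    HostName     nvarchar(128) NOT NULL,
    AppName      nvarchar(256) NOT NULL,
    tsCreated    datetime2(0)  NOT NULL,
    tsLastUpdate datetime2(0)  NOT NULL
);
CREATE TABLE #NewLogins
(
    LoginName sysname       NOT NULL,
    HostName  nvarchar(128) NOT NULL,
    AppName   nvarchar(256) NOT NULL
);

INSERT INTO #ConnectionTracker VALUES (N'app_user', N'WEB01', N'OrderApp', '2021-10-01', '2021-10-06');
INSERT INTO #NewLogins VALUES (N'app_user', N'WEB01', N'OrderApp'),
                              (N'app_user', N'WEB01', N'OrderApp'),
                              (N'report_user', N'RPT01', N'Reporting');

DECLARE @Now   datetime2(0) = SYSDATETIME();
DECLARE @Today date         = CAST(@Now AS date);

-- 1. Touch known rows at most once a day
UPDATE t
SET    t.tsLastUpdate = @Now
FROM   #ConnectionTracker AS t
WHERE  t.tsLastUpdate < @Today
  AND  EXISTS (SELECT 1 FROM #NewLogins AS n
               WHERE n.LoginName = t.LoginName AND n.HostName = t.HostName AND n.AppName = t.AppName);

-- 2. Add combinations that were not seen before
INSERT INTO #ConnectionTracker (LoginName, HostName, AppName, tsCreated, tsLastUpdate)
SELECT DISTINCT n.LoginName, n.HostName, n.AppName, @Now, @Now
FROM   #NewLogins AS n
WHERE  NOT EXISTS (SELECT 1 FROM #ConnectionTracker AS t
                   WHERE t.LoginName = n.LoginName AND t.HostName = n.HostName AND t.AppName = n.AppName);

SELECT * FROM #ConnectionTracker;

DROP TABLE #NewLogins;
DROP TABLE #ConnectionTracker;
```

Because of the tsLastUpdate < @Today filter, a busy login causes one write per day instead of one write per event batch.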

Keep in mind that we used “STARTUP_STATE = OFF” during our test phase. That means our Extended Events session will not be started after an instance failover or a server reboot! Our production version uses “STARTUP_STATE = ON”.

So much for the solution for our own SQL Server instances.
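For an existing session, the startup behavior can be changed and verified as sketched below. This assumes the session name used earlier in this article; if the engine refuses the change while the session is running, stop the session first.

```sql
-- Make the session start automatically when the instance starts
ALTER EVENT SESSION [DBA_TrackFailedLogins] ON SERVER
WITH (STARTUP_STATE = ON);

-- Verify the setting: startup_state = 1 means the session starts with the instance
SELECT name, startup_state
FROM sys.server_event_sessions
WHERE name = N'DBA_TrackFailedLogins';
```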

Extended Events on Azure Managed Instances

Then we tried to use this same Extended Events DDL to see if that would work on Azure Managed Instances, too:

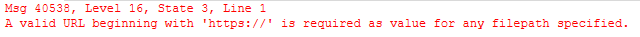

It didn’t!

That made sense, as you are not supposed to have drives (C, D, …) at your disposal with a PaaS solution, right?

Since we already had a credential available for ad hoc backup requests, where backup files are written to Azure Blob Storage, I copied the URL of such a backup file, modified it with a new filename and extension, and tried to execute the Extended Events DDL.

... SET filename = N'https://ourblobstorageaccount.blob.core.windows.net/sqlbackups/sqlinstancename/DBA_TrackFailedLogins_20211007.xel'

Then we started the session:

ALTER EVENT SESSION [DBA_TrackFailedLogins] ON SERVER STATE = START;

This failed!

Msg 25602, Level 17, State 23, Line 23

The target, "5B2DA06D-898A-43C8-9309-39BBBE93EBBD.package0.event_file", encountered a configuration error during initialization. Object cannot be added to the event session. (null)

It would have been too easy if it had worked just like that, right?

A quick search on the internet pointed me to this page: Extended Events: Azure Storage Account Types Matter. That article states: "When attempting to use a general-purpose v2 account type, however, the issue does not occur."

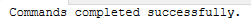

It turned out the storage account has to be of type/generation V2 or later. We asked our Azure infrastructure team for such a storage account. We created the credential on our Azure Managed Instance, provided a URL for that storage account, and things started working. When we tested with failed logons, the events started coming in. So far so good.
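One way to confirm that the session is running and that its file target initialized correctly is to query the Extended Events DMVs. This is a minimal sketch, assuming the session name used earlier in this article.

```sql
-- A row is returned only while the session is running.
-- For the event_file target, target_data contains the file (or URL) being written to.
SELECT s.name                     AS session_name,
       s.create_time,
       t.target_name,
       t.execution_count,
       CAST(t.target_data AS xml) AS target_data
FROM sys.dm_xe_sessions AS s
JOIN sys.dm_xe_session_targets AS t
     ON t.event_session_address = s.address
WHERE s.name = N'DBA_TrackFailedLogins';
```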

This prerequisite for “off instance” file references with Extended Events still left us a bit puzzled, so we followed up with extra caution, even though this was just our development Azure Managed Instance. That turned out to be a good thing. An Azure Managed Instance does roll over to the next event file when the current one reaches the declared max_file_size, but it does not stick to max_rollover_files!

We noticed the number of files increasing, and we needed a solution to keep their number down and space consumption under control. It does not make sense to keep this data for more than one day, as it gets processed and persisted on our Azure Managed Instance by our processing stored procedures.

When I contacted one of my peers who is more into cloud storage, he pointed me to Lifecycle Management for Azure Blob Storage. This option can be found in the Azure Portal, in the Blob Storage resource, under Data Management.

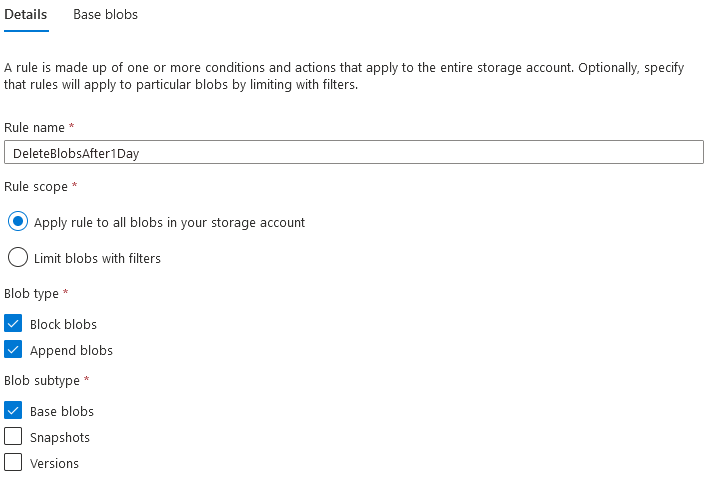

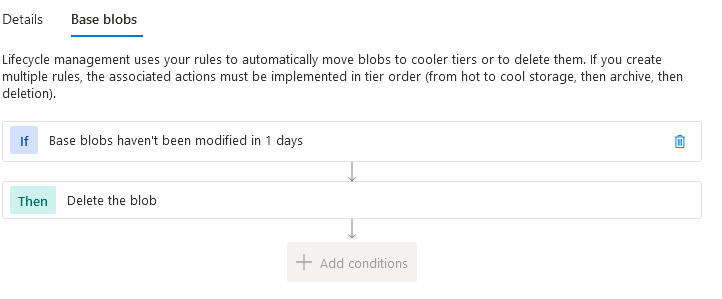

We quickly set up a rule to delete all files after one day for the given storage account.

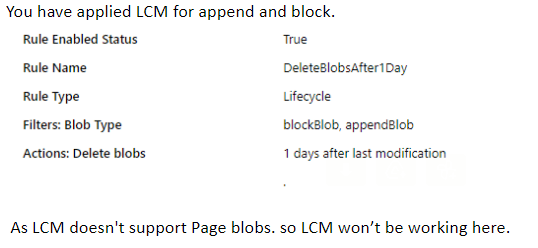

We had paid little attention to the blob type that Extended Events would write!

The next Monday I checked if the rule kicked in and performed the wanted cleanup. It did not!

I opened a case with Microsoft support. They pointed out that Lifecycle Management currently does not support page blobs, which is what Azure Managed Instance Extended Events writes (also see Extended Events: Azure Storage Account Types Matter).

As with all cloud services, this is also an evolving feature. Let’s hope it will also support Page Blobs fairly soon.

For the moment, we have set up and scheduled a PowerShell script that deletes all files older than 1 day.

# $RetentionDays, $ctx and $ContainerName are assumed to be set earlier in the script
$TsRef = (Get-Date -Hour 0 -Minute 0 -Second 0 -Millisecond 0).AddDays(-$RetentionDays);
Get-AzStorageBlob -Context $ctx -Container $ContainerName |
    Where-Object LastModified -lt $TsRef |
    Where-Object { $_.BlobProperties.LeaseStatus -ne [Azure.Storage.Blobs.Models.LeaseStatus]::Locked } |
    Remove-AzStorageBlob -PassThru;

This is to be converted into an Azure Logic App solution.

[Figure: Logic App schema]

Finally

For more than a decade, we did not invest time in keeping up with new techniques for better monitoring. That cost us big $$, as one of our plants lost nearly a day of production.

I hope this article demonstrates that alternatives are always worth investigating, especially if they can prevent unplanned downtime. The often-repeated "Keep up or be left behind" actually holds true: we failed to adapt in time and had an outage.

I really hope you liked this little article and the attached scripts, and I hope they can help people get started with adopting and embracing Extended Events.

Life is good

P.S.: Attached you can find the T-SQL setup scripts for both SQL Server and Azure Managed Instances. With regard to the Azure Managed Instances, do keep in mind that the declaration of the credential for access to the Blob Storage is not included in the scripts!

CREATE CREDENTIAL [https://<StorageAccountName>.blob.core.windows.net/<ServerName>] WITH IDENTITY = 'SHARED ACCESS SIGNATURE', SECRET = '<SASKey>'