Azure Data Factory has two different connectors that can copy data from APIs. Originally, there was only the HTTP connector. The REST connector was added later. Although there are situations where either connector would work for copying data, there are some differences in functionality that would necessitate the use of one over the other. Both support GET and POST requests. Both support anonymous and basic authentication.

The REST connector supports pagination and authentication using Azure Active Directory (AAD) service principals and managed identities for Azure resources. But its JSON response must either be downloaded as-is or mapped using schema mapping in the copy activity. Further conversion would require another activity.

The HTTP connector allows Windows and Client Certificate authentication where the REST connector does not. But the important feature difference is that it supports more output file types than just JSON, including CSV, Parquet, ORC, Excel, and XML.

I had been successfully downloading JSON data from a web API using the REST connector with basic authentication when I was asked to switch to downloading CSVs from the same API. The API I was working with supported the download of CSVs by changing the URL in the GET request. For example, I could download a file about categories by using a URL such as https://www.websitename.org/api/categories.csv.

Downloading a CSV

To download a CSV file from an API, Data Factory requires 5 components to be in place:

- A source linked service

- A source dataset

- A sink (destination) linked service

- A sink(destination) dataset

- A pipeline containing a copy activity

Defining the Source and Sink of the Copy Activity

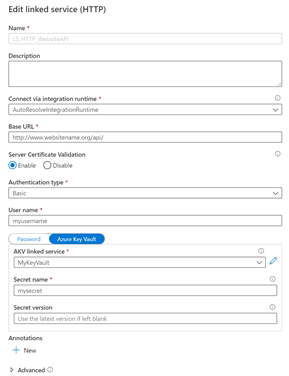

The source linked service for downloading the CSV file uses the HTTP connector. When you create an HTTP linked service, you must specify a name, the integration runtime to be used, a base URL, the authentication type, and credentials.

Data Factory user interface for defining an HTTP linked service

Azure Key Vault should be used to store passwords rather than storing them directly in Data Factory.

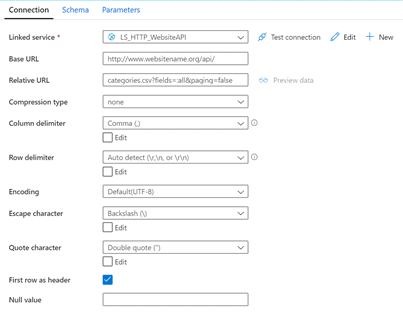

The source dataset type for the CSV file download is Delimited Text. It uses the HTTP linked service, specifies the column delimiter as a comma, and in this example, defines the first row as headers. It takes the base URL from the HTTP linked service and allows the input of the relative URL.

Data Factory properties for the CSV dataset using the HTTP connector

The sink linked service in this case is Azure Blob storage, and the sink dataset is delimited text.

In many cases, you can create a pipeline, add a copy activity, specify the source and sink, and be done. But in some cases, this doesn't produce the intended CSV file, I suspect because it is treating the request as a web request instead of an API request.

When the HTTP Connector Doesn't Get the Expected Response

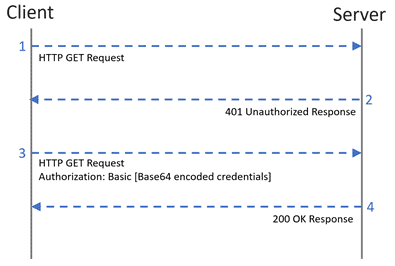

Unlike the REST connector, the HTTP connector doesn't send the basic auth credentials with the initial request. It expects a 401 Unauthorized response to the initial request, and then it sends the credentials as shown below.

Overview of the requests and responses expected by Data Factory when communicating with an HTTP source

In my case, the source API was not returning a 401 response, and I was receiving a CSV file containing the HTML of the login page of the website that hosted the API. In this situation, Data Factory never sends the credentials defined in the HTTP linked service to the API server. To solve this, I needed to add the following to the additional headers property in the source of the copy activity: Authorization: Basic [Base64-encoded username:password].

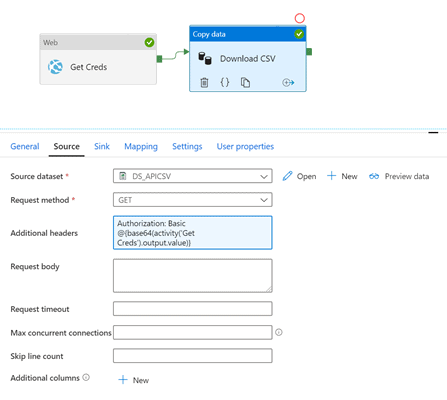

It's not advisable to paste plain text credentials in the copy activity. Instead, the username:password string can be added as a secret in Key Vault and a web activity in Data Factory can be used to retrieve it. Just give the data factory the appropriate access to the secret in the key vault and put the URL of the key vault secret in the URL property of the web activity.

Web activity connecting to Azure Key Vault to retrieve a secret

Make sure to check the Secure Output box on the General properties of the web activity and connect it to the copy activity. Then check the Secure Input box on the copy activity. This makes sure the username and password are not captured in the Data Factory logs.

We can then use the output of the web activity to populate the additional headers in the source of the copy activity. Since the activity in the example is named Get Creds, the expression to populate the additional headers property is as follows.

Authorization: Basic @{base64(activity('Get Creds').output.value)}Copy activity source showing the expression for the Additional headers property

Conclusion

If a JSON file is acceptable, the REST connector is the way to go. If you need to download a CSV file, the HTTP connector must be used. If the copy activity isn't authenticating as expected, you may need to add an additional header.