Introduction

Azure Data Factory recently introduced a new activity, called the Script activity. We can use the Script activity to execute DML (Data Manipulation Language) statements like SELECT, INSERT, UPDATE, as well as DDL (Data Definition Language) statements like CREATE, ALTER, and DROP operations.

Currently, the Script activity supports the following data stores:

- Azure SQL Database

- Azure Synapse Analytics

- SQL Server Database

- Oracle

- Snowflake

A few example use cases for the Script activity:

- Create, alter and drop database objects such as tables, views, and indexes.

- Truncate a table.

- Execute a stored procedure.

- Use the result set returned from a query in a subsequent activity.

Pipeline Development

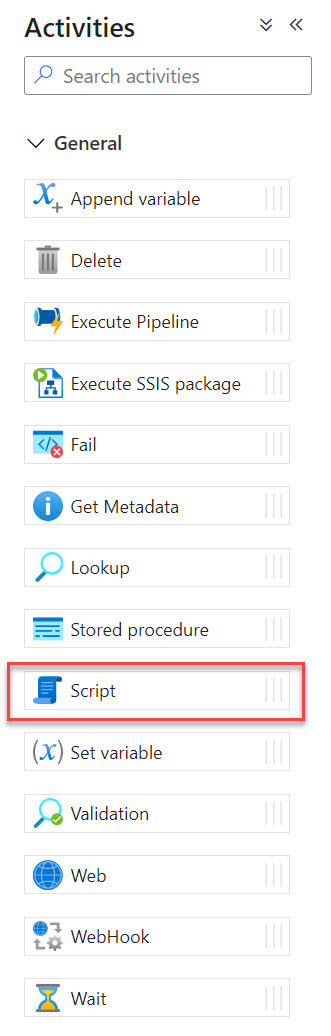

In this example, we will create a simple pipeline to understand the script activity. Let's go to the Azure Data Factory authoring section and create a new pipeline. The script activity is available under the General section of the activities:

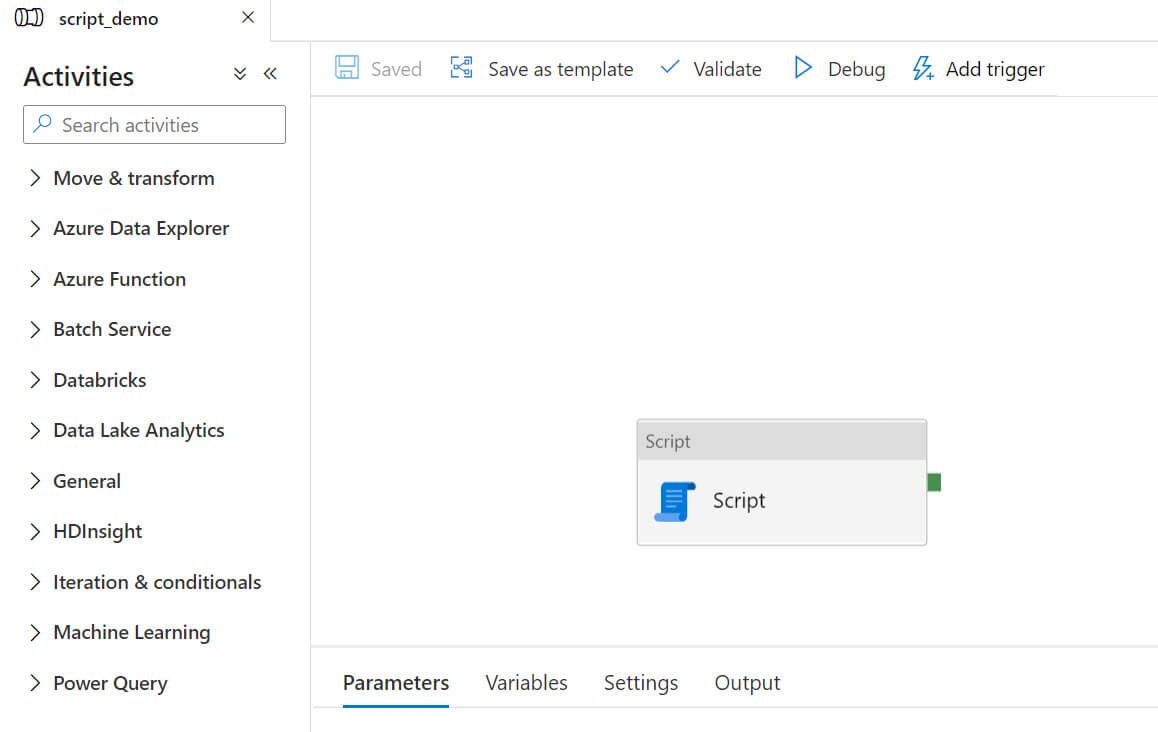

Add the script activity to the design surface :

The General section is similar to other activities with these items:

- Name - Name of the activity.

- Description - Comments about this activity (optional).

- Timeout - Timeout in D.HH:MM:SS format, the default timeout value is 7 days.

- Retry - Maximum number of retry values.

- Retry interval (sec) - Specify the number of seconds between each retry attempt, the default value is 30.

- Secure output - Output from the log file will not be captured if checked.

- Secure input - Input from the log file will not be captured if checked.

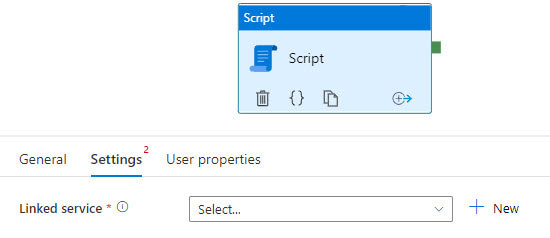

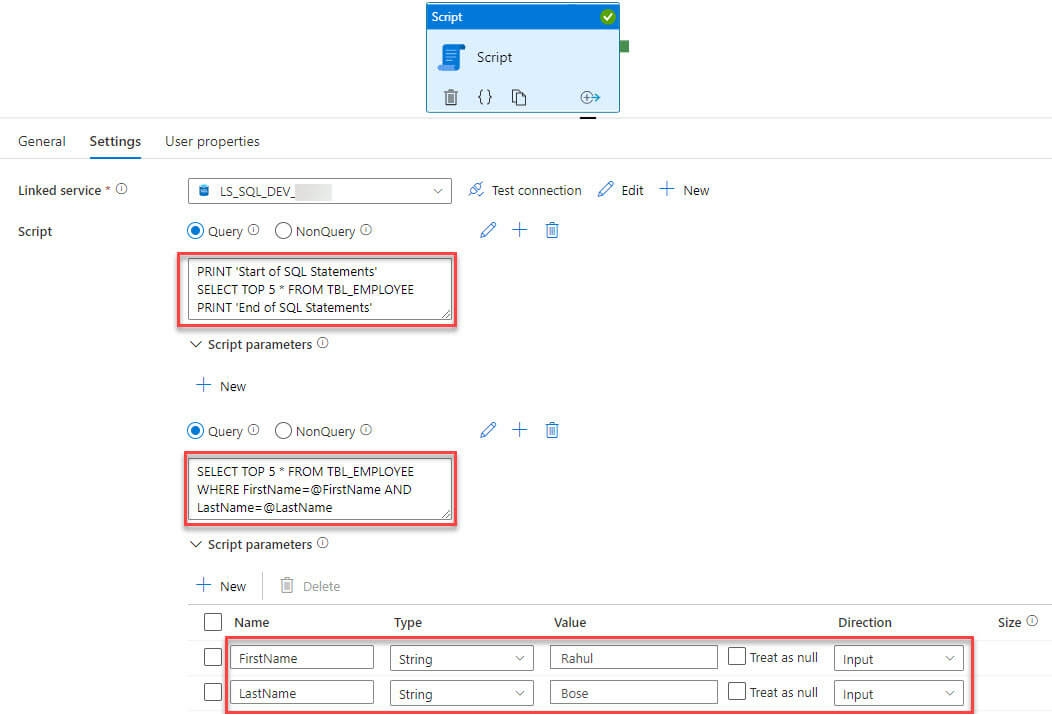

Next, click on the Settings tab. First, we need to add a linked service:

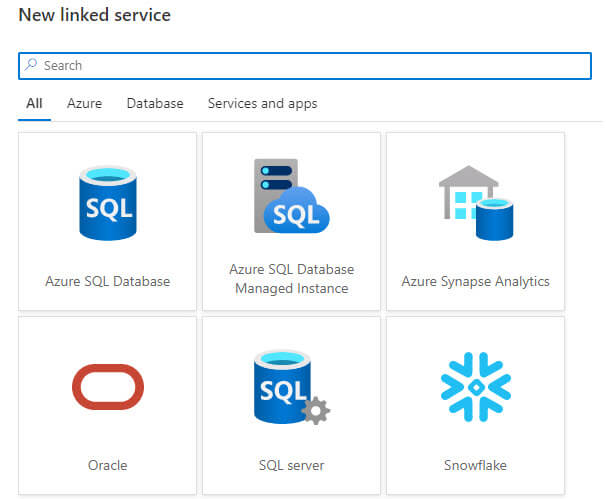

Currently, limited data sources are available in the linked service, it supports Azure SQL Database, Azure SQL Database Managed Instance, Azure Synapse Analytics, Oracle, SQL Server, and Snowflake. Here I am using Azure SQL Database:

Once a linked service is created, the Script section is available. It has two query options:

Query - Select this option to return one or more result sets.

NonQuery - Use this option to run DML statements like INSERT, UPDATE or DELETE statements or perform database catalog operations such as create tables.

We can add multiple SQL statements and Script activity supports both input and output parameters.

I added two SQL queries in the script section. The first query is a simple select statement and the second query has two input parameters:

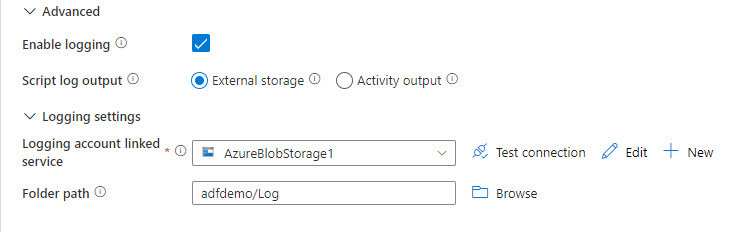

Let's edit the advanced section and change these settings:

- Enable logging - Select this option to log the query output. Output log should be generated by the PRINT statement in SQL Server, Azure SQL, Azure SQL MI, and Azure Synapse Analytics linked services.

- Script log output - It has two options External storage and Activity output.

- External Storage - User-defined messages will be stored in Azure Storage defined by the linked service. It has no size limit.

Activity output - User-defined messages will be available in activity output but the maximum size limit is 4 MB. Any messages that exceed the size limit will be truncated.

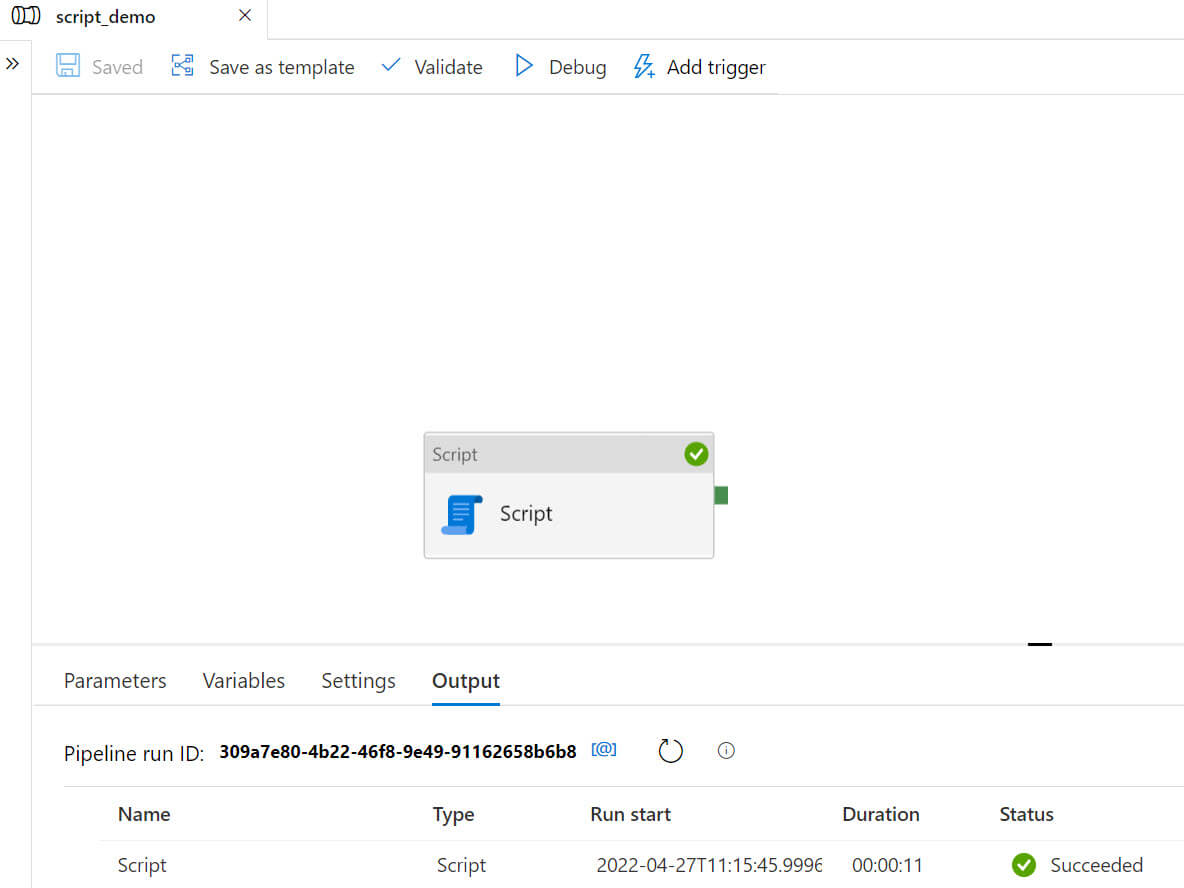

Let's run the pipeline:

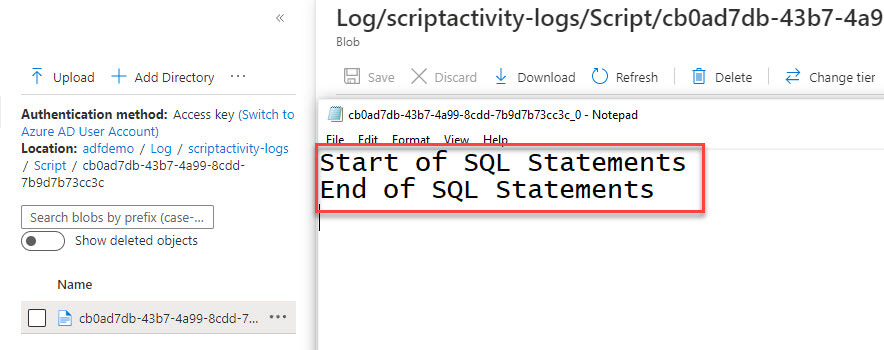

Now the pipeline is executed and the log file should be generated. Let's go to the data lake folder for the log file, download the file and open it in notepad. The print statements should be available in the log file:

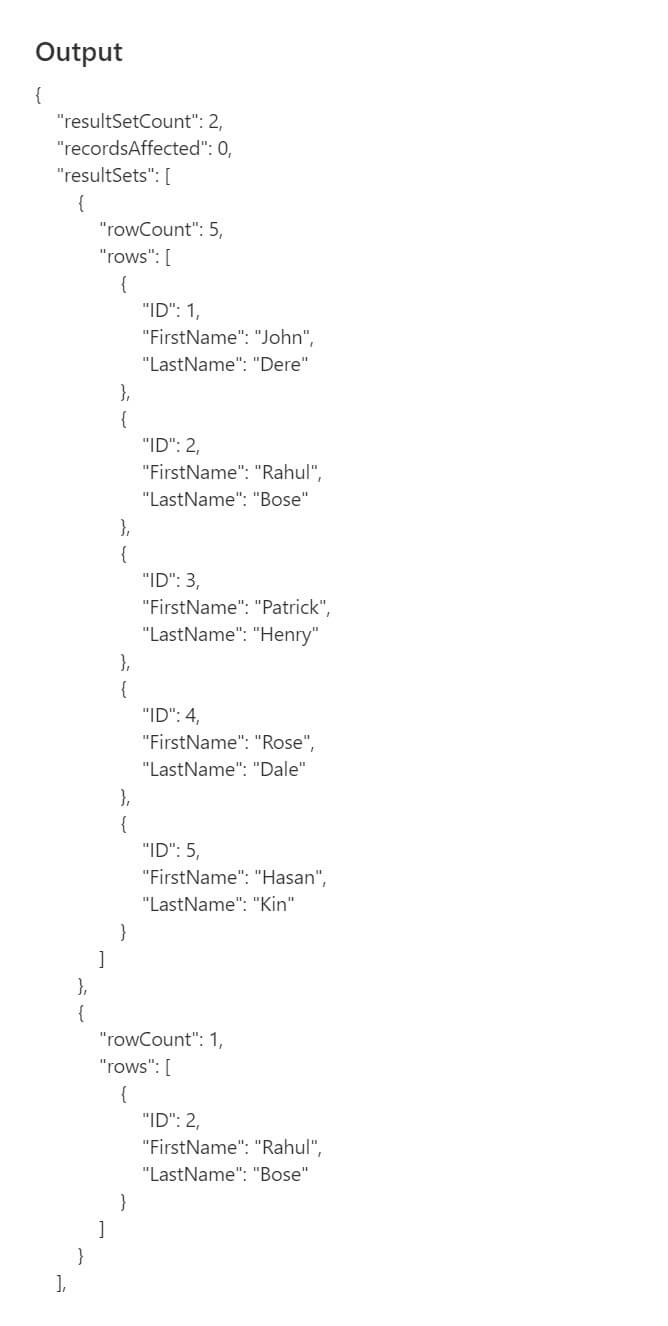

You can also view the resultset from the output window:

Conclusion

In this article, we discussed the script activity in Azure Data Factory. It is a very useful activity to run multiple SQL statements or use input/output parameters etc.