Introduction

As DBAs, we often think of INCLUDE columns as a harmless way to cover queries.They aren’t part of the index key, so it’s easy to assume that adding them wouldn’t have much impact.

This thinking is dangerously incomplete. While INCLUDE columns don’t participate in index order, they physically reside in index leaf rows. The silent impact of this design choice became crystal clear in a recent experiment I ran to test how INCLUDE columns affect transaction log generation during updates.

INCLUDE columns only: When you update columns that are only in the INCLUDE portion of the index (not part of the key), SQL Server doesn't need to maintain the index structure – it simply updates the leaf-level data. This generates log records, but not as much as key column updates.

Key column updates: If you update columns that are part of the index key, this requires more log activity because the index structure may need rebalancing. SQL Server logs a delete and insert for the affected index rows, including all INCLUDE columns, resulting in higher log volume.

Wider rows: Adding more INCLUDE columns makes index rows wider. As a result, whenever updates occur – even if they’re not on the INCLUDE columns themselves – slightly more data gets written to the transaction log because the overall index row structure is larger.

While it’s understandable that adding INCLUDE columns increases index size and, consequently, full backup sizes, what’s not so obvious is how it also causes transaction log sizes to grow. This indirect behaviour is the real focus of our discussion.

Setup: Building a Realistic Orders Table

In this setup, I first created a database named TestLogImpact and set it to FULL recovery mode to enable transaction log backups for precise measurement. I then switched to this database and dropped any existing Orders table to start clean. Next, I created a wide Orders table simulating a realistic ERP scenario with columns such as CustomerID, OrderDate, ShipDate, Quantity, Price, Status, Comments, Region, CreatedBy, UpdatedBy, and five additional VARCHAR(500) columns named Extra1 to Extra5 to simulate payload-heavy tables. Finally, I inserted 100,000 dummy rows into this table by selecting from a CROSS JOIN of sys.all_objects to generate sufficient data volume. Each row was populated with a random CustomerID between 0 and 999, current dates for OrderDate and ShipDate, random Quantity and Price values, and repeated strings for Comments and all Extra columns to ensure wide rows. This dataset created a practical yet performance-impactful baseline for testing how index INCLUDE columns affect transaction log generation during subsequent updates.

CREATE DATABASE TestLogImpact;

GO

ALTER DATABASE TestLogImpact SET RECOVERY FULL;

GO

USE TestLogImpact;

GO

DROP TABLE IF EXISTS Orders;

GO

CREATE TABLE Orders

(

OrderID INT IDENTITY PRIMARY KEY,

CustomerID INT,

OrderDate DATE,

ShipDate DATE,

Quantity INT,

Price DECIMAL(10,2),

Status VARCHAR(20),

Comments VARCHAR(500),

Region VARCHAR(50),

CreatedBy VARCHAR(50),

UpdatedBy VARCHAR(50),

Extra1 VARCHAR(500),

Extra2 VARCHAR(500),

Extra3 VARCHAR(500),

Extra4 VARCHAR(500),

Extra5 VARCHAR(500)

);

GO

INSERT INTO Orders (CustomerID, OrderDate, ShipDate, Quantity, Price, Status, Comments, Region, CreatedBy, UpdatedBy, Extra1, Extra2, Extra3, Extra4, Extra5)

SELECT TOP (100000)

ABS(CHECKSUM(NEWID())) % 1000,

GETDATE(),

GETDATE(),

ABS(CHECKSUM(NEWID())) % 100,

CAST(ABS(CHECKSUM(NEWID())) % 5000 + 100 AS DECIMAL(10,2)),

'Open',

REPLICATE('Comment', 20),

'North',

'System',

'System',

REPLICATE('Extra1', 50),

REPLICATE('Extra2', 50),

REPLICATE('Extra3', 50),

REPLICATE('Extra4', 50),

REPLICATE('Extra5', 50)

FROM sys.all_objects a

CROSS JOIN sys.all_objects b;

GO

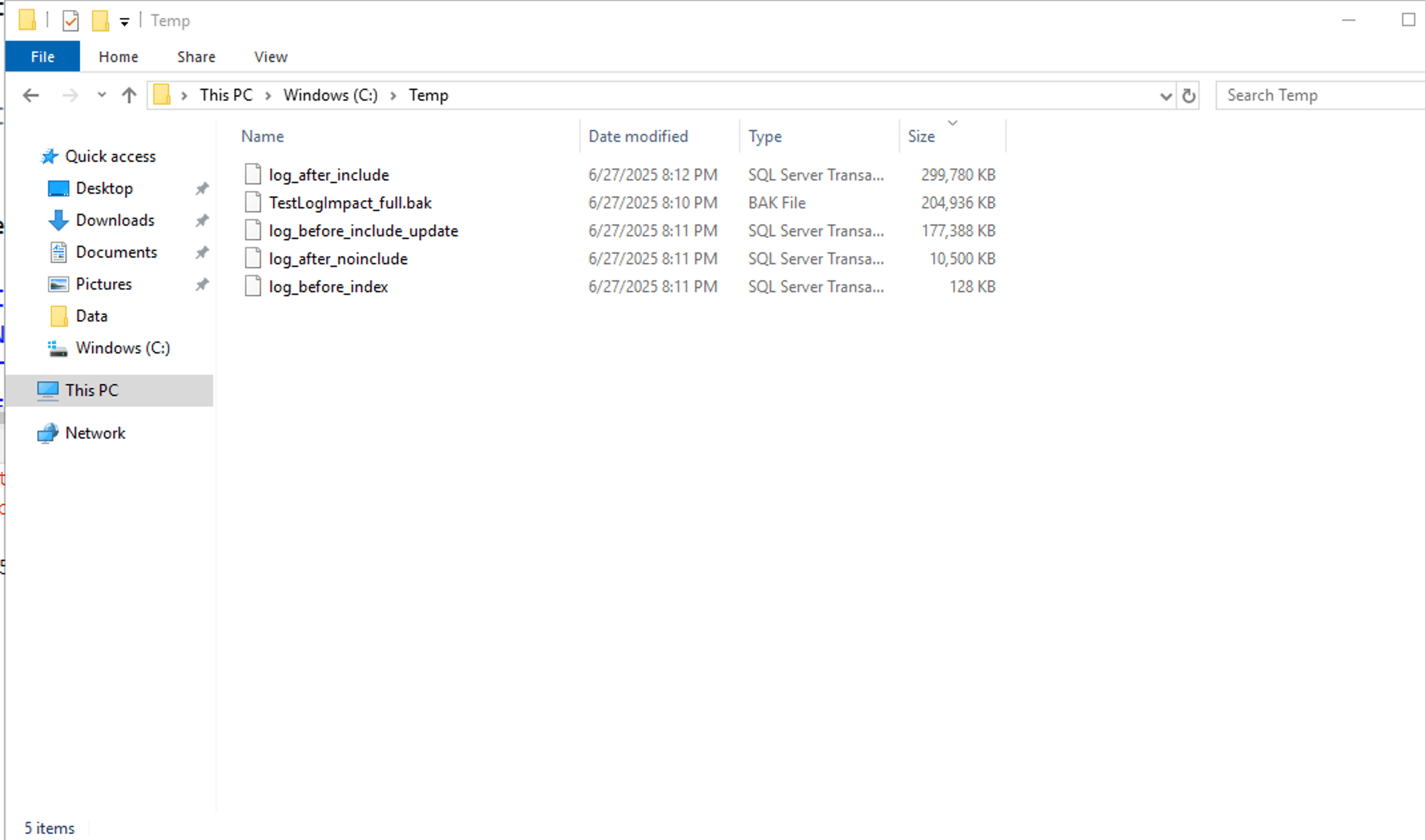

Step 1: Taking a Full Backup

Since log backups require a base full backup, I took one before proceeding.

BACKUP DATABASE TestLogImpact TO DISK = 'C:\Temp\TestLogImpact_full.bak' WITH INIT; GO

Step 2: Measuring Log Generation Without INCLUDE

I backed up the log to reset it before testing.

BACKUP LOG TestLogImpact TO DISK = 'C:\Temp\log_before_noinclude.trn' WITH INIT; GO

Then I created a nonclustered index without any INCLUDE columns.

CREATE NONCLUSTERED INDEX IX_CustomerID ON Orders (CustomerID); GO

I ran an update modifying Quantity values for customers between 100 and 900.

INCLUDE index updates generated much larger logs.

INCLUDE index updates generated much larger logs.