Welcome to my latest article, which looks in detail at combining a Failover Cluster Instance (FCI) of SQL Server and an AlwaysOn (AO) availability group. In this guide we'll work through an example to look at how AO is implemented alongside an FCI. The article will examine the storage provisioning, the network provisioning, the cluster validation and the deployment of the Always On group.

To fully understand the integration it will be necessary to work through the guide, which at the end will leave you with your own virtual test system that you may use for almost any purpose you require. The aim of this guide is to provide an understanding of how an Always On group works in conjunction with an FCI and to demonstrate how Windows Server Failover Clusters are used to manage the Always On group(s).

Since the release of SQL Server 2012, Always On has sparked a great deal of interest. This new technology takes Windows Server Failover Cluster's (WSFC) and database mirroring and provides a whole new level of HA. Traditionally, clustering was used as the defacto high availability for SQL Server. This would often be combined with technologies such as database mirroring and log shipping, with Always On, your group contains a set of specific databases (mirroring previously only allowed a 1-1 relationship) that are then mirrored on up to 4 secondary replicas.

Each instance that takes part in an Always On group is referred to as a "Replica", whether it be a Primary or a Secondary replica. Secondary replicas may be targeted for backup operations, as well as read only routing to handle read only requests. Read only routing offers the ability to direct read only intent operations to Secondary databases, this helps to ease the load on the Primary database.

The Always On technology has a clear and concise list of pre requisites and restrictions, these should be adhered too and researched fully prior to deployment to avoid having the situation where you have a configuration that is not suitable for your required purpose. A classic example of this would be deploying an FCI with an Always On group expecting to be able to use automatic failover; this configuration is not supported!

The Pre Requisites and Restrictions in detail may be found at the following link: http://msdn.microsoft.com/en-us/library/ff878487.aspx

Anybody who has worked with Oracle RDBMS will be aware of the Listener functionality, Always On now employs such technology to provide a central client access point into the Always On database(s). We'll look at the listener later during the guide to see what it does and how it fails over between nodes.

Software Used

For this article I am using the following software (180 day trials are available from Microsoft)

- Windows Server 2012 R2 Standard

- Windows Server 2008 R2 Enterprise

- SQL Server 2012 Enterprise

- VMWare ESXi 5.1

- Starwinds iSCSI SAN Free

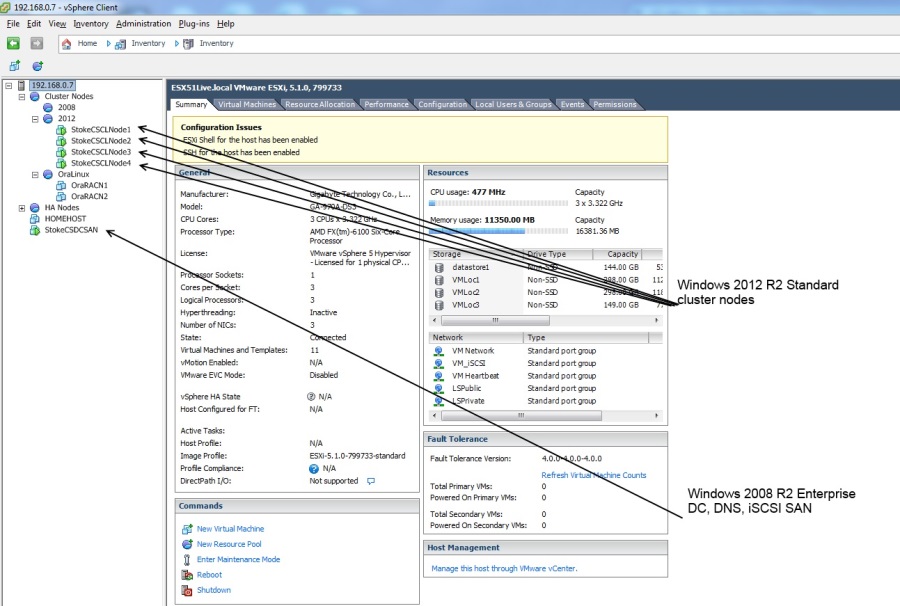

I have the following VMs deployed

- 4 x Windows Server 2012 R2 cluster node

- 1 x Windows Server 2008 R2 Domain Controller and DNS server and iSCSI SAN.

For the purposes of this article the following apply

| AO | Always On |

| FCI | Failover Cluster Instance |

| iSCSI | Internet Small Computer System Interface |

| SAN | Storage area network |

| Replica | An instance of SQL Server that is part of an Always On group |

| WSFC | Windows Server Failover Cluster |

| vNIC | Virtual network card |

| vCPU | a proportionate share of a physical host CPU |

| OS | Windows Operating System |

VMWare Configuration

My VMWare configuration is as follows;

I'm not going to cover the installation and configuration of ESX5i, there are way to many guides on the internet that show you how to do this, in any case, it really is so easy to install.

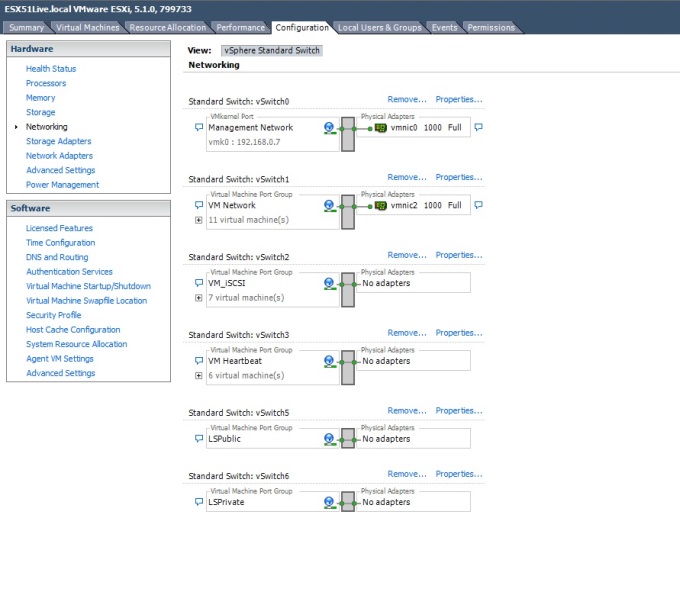

I have the following virtual switches configured, only vSwitch1 has a route out onto my public LAN, the others are host based virtual networks.

vSwitch1 has a physical NIC connection on the host out onto my public LAN. vSwitch2 and vSwitch3 are private host based networks for use by the Virtual Machines only.

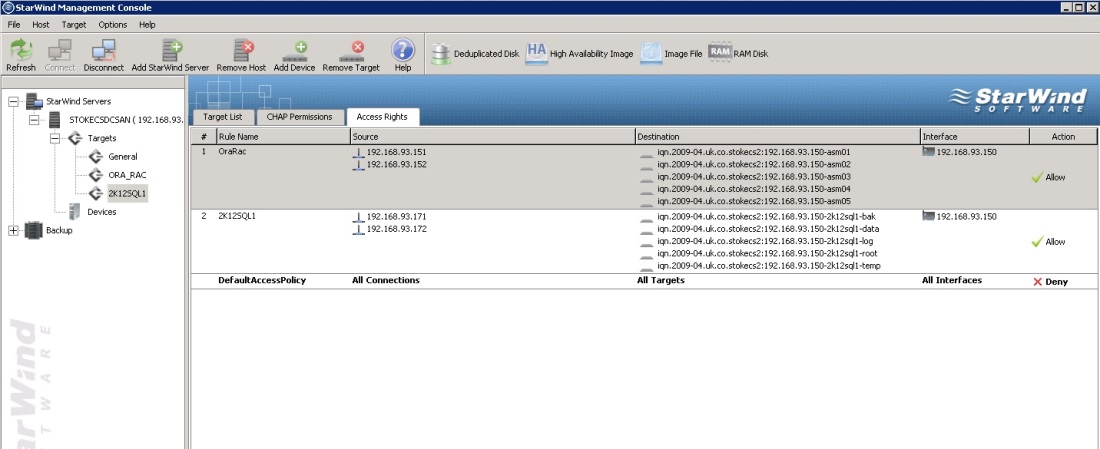

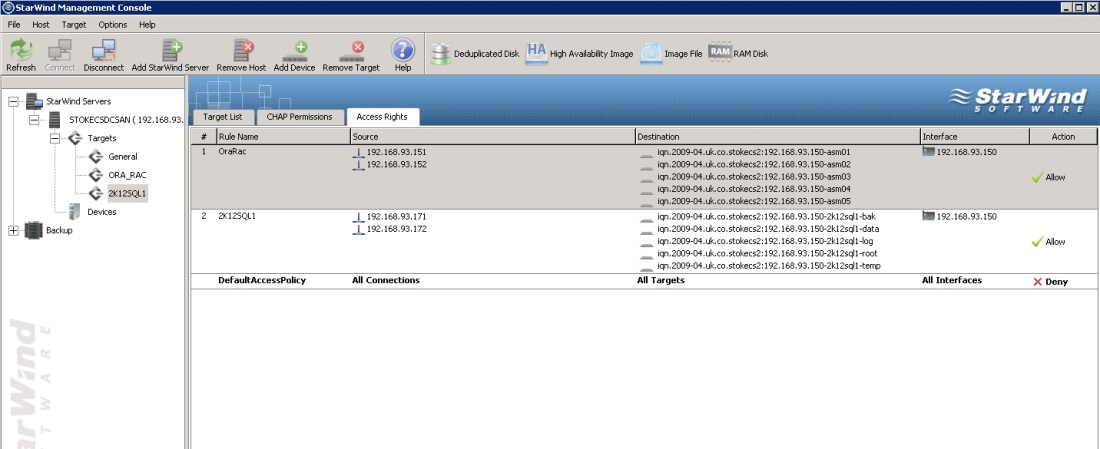

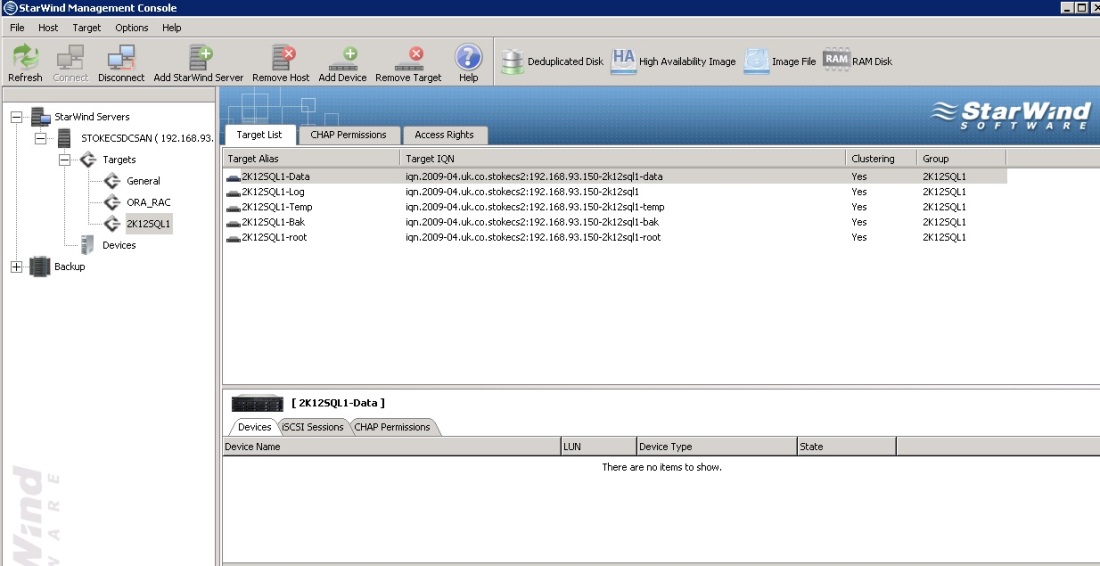

I am using the Starwinds Free iSCSI\SAN software to provision my iSCSI storage to my WSFC nodes. The groups below show the shared storage group (2K12SQL1) for the FCI. The group 2K12SQL1 has been presented to cluster nodes StokeCSCLNode1 and StokeCSCLNode2, these nodes will participate in the FCI.

Again, I won't cover the install of Starwinds iSCSI\SAN, the vendor has a nice guide showing how to achieve this. I will however, provide an overview on how to create LUNs that will then be presented to the Windows server cluster nodes.

WSFCs, AO and FCIs

Let's start with the basics before setting up our virtual environment.

Windows Server Failover Clusters

WSFCs are a feature of the Windows Server 2012 operating system. As a part of the windows subsystem, this technology has evolved through the life of Windows server to provide a more resilient Failover platform. It is important to know that this provides failover partner capability only and not scaled out server services. With WSFC's, clustered applications are installed into separate groups or "Applications", which contain a set of resources such as disks, services, IP addresses, etc. The group and its resources are owned by only one node at any one time, the resources are not accessible from any other partner node unless a planned switch or a failover to that node occurs. Where Always On is to be deployed within a WSFC, the only disk storage shared between all of the nodes would be the quorum disk resource or the DTC disk resource if you are using them. Although cluster nodes may have disparate hardware it's usually best to keep them uniform in this respect. However, the nodes must all use the same patch levels and network configurations, this will become clear when validating your configuration before deploying the Windows Server Failover Cluster.

The WSFC requires some form of mediation to control cluster resource ownership, and uses Quorum to maintain cluster stability. In Windows Server 2012 WSFC, this Quorum takes the form of a node voting system with the majority required to maintain Quorum. You may also use additional quorum resources in the form of a disk for localised clusters or a remote file share for multi site clusters. Over the versions of Windows Server, the quorum has changed extensively with the addition of Majority Node Sets and more recently, Dynamic Weight Configuration. The latest "Dynamic node weight" configuration is a new feature, this will dynamically balance the cluster votes during a node outage to ensure the cluster does not failover unnecessarily. This works best under controlled outages and is by no means a single protector for the cluster. You must still configure an appropriate quorum level for your node configuration.

Typically, in large multi node clusters you install your clustered applications across pre defined nodes. Making the mistake of installing the applications across all nodes can cause some undesired failovers and, as we'll see, also violates the Always On group restriction policy which ensures that all AO instances are homed on separate nodes in the cluster. What this means is that no Instance configured as a replica in a given Always On group, whether clustered or non clustered, may reside on the same cluster node as a partner replica within the same WSFC. This can become slightly confusing when you consider that all nodes that are to participate in an AO group, must be part of the same windows cluster.

So, clusters provide the ability to combine multiple computer nodes (physical and\or virtual) to service a set of applications for high availability, in this case a SQL Server instance. The application is made highly available by presenting to clients a virtual access point comprising a unique IP address and a unique computer name or "virtual network name". These become resources in an application group and are passed between participating nodes like tokens. A critical hardware failure of the active computer node would result in the loss of group service and would automatically start the group on an available partner node. At a high level, the client access point details are transferred along with any disk and service resources, etc to a failover partner node. A failover of the clustered instance causes disconnection of client connections, these may then reconnect once the service is available on a partner node.

Common failures usually are;

- Public NIC or network failure

- Power supply failure

- Motherboard failure

- CPU Failure

To, recap: for the deployment of an FCI, the computer nodes use shared storage, which is presented to each node, usually from a SAN. The disks are added to clustered application\group and will failover between the nodes when the group is moved (either manually or through automatic failover). Throughout normal operation, only one node may obtain access to the storage disks at any one time (the node that owns the particular resources) to avoid volume corruption, this is also true for all of the remaining resources in the cluster group too. Disk access is controlled by the cluster service once the disks have been added as cluster resources.

With Always On, the nodes utilise their own local storage, this is not shared with cluster partners. So, how to integrate the two?

Failover Cluster Instances

A highly available instance of SQL Server is clustered to mitigate any node hardware failures. The only weak link here is the storage; this becomes the single point of failure. It is this very weakness that AO provides mitigation for. Each AO replica will be provisioned with its own storage. This storage is not usually shared by other AO nodes within the cluster.

A Failover Cluster Instance is an instance of SQL Server, default or named, that has been installed onto a WSFC as a clustered application. The clustered application typically has the following resources as a minimum:

- IP address

- Network name

- Shared disk(s)

- SQL Server service

- SQL Server agent service

A clustered instance of SQL Server will utilise any shared storage that has been presented to the Windows Server Failover Cluster nodes. Usually this storage will take the form of LUNs presented from a SAN. A Failover Cluster Instance of SQL Server is deployed by launching the "New SQL Server Failover Cluster Installation" wizard on the first computer node that will participate in the FCI. Once this has been performed you would then launch the "Add Node to a SQL Server Failover Cluster" wizard on any computer node in the WSFC that you wish to participate in the new SQL Server FCI. For Standard edition of SQL Server you are limited to 2 nodes, Enterprise and upwards support the OS maximum (8 nodes in Windows 2003 and 16 nodes in Windows 2008).

Note: Standard edition limits the FCI to 2 nodes but this does not dictate how many nodes have membership of the Windows cluster, it is merely at the installer level.

Always On Groups

As the new high availability architecture for SQL Server 2012, this technology takes database mirroring and Windows Server Failover Clusters and creates a new level of high availability. Multiple nodes each host a synchronised copy of the AO database(s), and access is best provided by the configuration of a listener. Read only replicas may be configured to receive read only intent operations or may be targeted for backup\maintenance operations to relieve the pressure on the Primary database. The Always On groups use traditional database mirroring endpoints for their communication. These are automatically configured when using the availability group deployment wizard. If you are performing a first time manual group deployment, you must manually create the mirror endpioints and grant security permission on them for the appropriate account.

An Always On group will consist of 1 primary replica and up to 4 further secondary replicas, 5 in all. You have the option of asynch or synch commit modes, there are also a host of other settings to specify such as backup preference and read\write ability. We'll see more of this as we move through the deployment.

An Always On Availability Group, is a group of one or more databases configured on a primary replica (or SQL Server instance) for high availability. This will typically include one or more further replicas which will service a copy of the highly available database(s). Partner databases may be either readable or standby and may also use either asynchronous or synchronous commit modes.

In Always On a replica refers to an instance of SQL Server that is participating in the Always On availability group.

Always On relies on the WSFC core functionality to achieve the high availability that AO offers, but does not require any of the following shared resources associated with an FCI.

- Shared disks

- Shared IP address

- Shared network name

- Shared SQL Server and SQL Server agent resources

There is one exception to this rule, when creating an Always On group listener this will create a set of resources shared by the AO group replicas, but note that this application\cluster group has no relation to a Failover Cluster Instance.

If you are using a listener for your AO group you are able to provide a central client access point for entry into the availability group, this provides a great deal of resilience when used in your application connection strings.

Always On is a feature configured at the individual service level by using SQL Server Configuration Manager. A computer node MUST first be a member of a valid WSFC before you may enable the Always On service feature. Availability replicas MUST be situated on separate physical nodes. Given this, in an example, INST1 must reside on Node1 and INST2 must reside on Node2. You cannot install INST1 and INST2 on to Node1 and configure an AO group.

For a basic Always On configuration, each node has local disk storage and non clustered instances of SQL Server installed. Ultimately, AO removes the storage single point of failure that is common place with shared disks. The nodes are completely stand alone apart from their membership of a valid Windows Server Failover Cluster.

Now that we have a little background we may start to look at how these technologies interact.

Always On Listener

The listener, when configured, is created as a clustered application and contains 3 resources. These are

- Virtual IP address

- Virtual networkname

- Always On resource

The application is failed over between nodes in the cluster when a failover of the Always On group occurs. The location of the clustered application tracks the Primary replica and its underlying node and moves around in the cluster as required. Where the Primary replica is a clustered instance of SQL Server, the Listener is owned by the active node for that FCI\replica.

When read only routing has been configured a connection to the listener specifying a read only intent connection will be routed to a secondary partner rather than the primary replica. Again, we'll see more of this later in the deployment.

AO and FCI Deployment Part 1

Without further ado let's start the deployment, this first part will cover creating the initial virtual machines, adding the storage and validating\deploying the cluster. As shown above, you can see the VMs I have deployed, 5 in total.

Within the VMWare Infrastructure client, deploy the required VMs. If your host configuration spec is high, I recommend you use the following for each VM (as i have)

| VM | vCPUs | RAM |

| DC\SAN | 2 | 2GB |

| Nodes | 2 | 2GB |

The VMs will require the following vNICs\IPs

| VM | Network | IP Address |

| DC\SAN | Public | 192.168.0.140 |

| iSCSI | 192.168.93.140 | |

| Nodes 1 - 4 | Public | 192.168.0.141 - 192.168.0.144 |

| Private | 10.10.10.141 - 10.10.10.144 | |

| iSCSI | 192.168.93.141 - 192.168.93.144 |

Each VM requires the following VMware Virtual Disks (VMDKs)

| VM | Drive Letter\Mount | Local or SAN | Size |

| DC\SAN | C: | Local | 40GB |

| D: | Local | 100GB | |

| AO Nodes x 2 | C: | Local | 40GB |

| D: | Local | 15GB | |

| G: | Local | 100MB | |

| G:\Data | Local | 12GB | |

| G:\Log | Local | 5GB | |

| G:\Backup | Local | 9GB | |

| G:\Tempdb | Local | 6GB | |

| FCI Nodes x 2 | C: | Local | 40GB |

| D: | Local | 15GB | |

| FCI Shared disks | G: | SAN | 100MB |

| G:\Data | SAN | 15GB | |

| G:\Log | SAN | 6GB | |

| G:\Backup | SAN | 9GB | |

| G:\Tempdb | SAN | 5GB |

The first step you need to achieve is the creation and configuration of the ESX server. Setup any datastores you may need for your Virtual Machine files. You can find extensive detail on how to install\configure ESX server, create VMWare datastores and Virtual Machines via the VSphere client on the VMWare forums. Once the system has been configured deploy the required VMs, as a guide, these are the settings i have used for my VMs

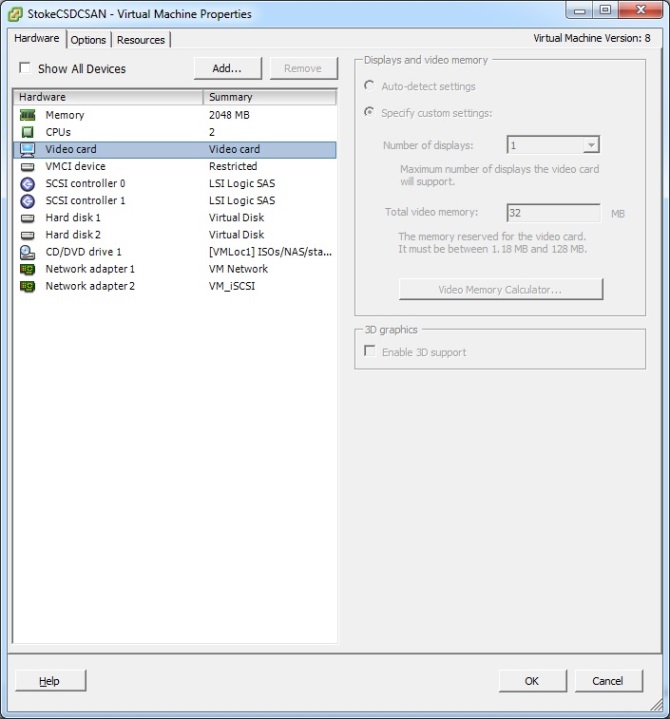

DC\SAN

Basic configuration required here. We have only 2 vNICs connected to Public LAN and iSCSI LAN. 2 SAS disks homing the OS and the Starwinds iSCSI image files. 2 vCPUs and 2 GB RAM

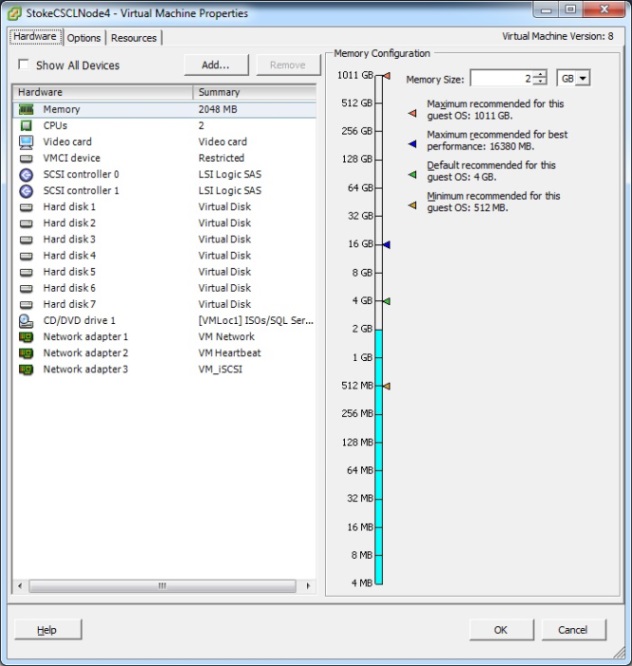

2012 R2 Cluster Nodes

These are a little different, they have an extra vNIC for the cluster heartbeat connection. The screenshot below shows the settings for the 2 cluster nodes which will hold the stand alone instances of SQL Server 2012. These are to be the 2 standby replicas in the Always On group. The remaining 2 nodes will have the same spec as below but minus the HDDs 3, 4, 5, 6 and 7 as this storage will take the form of LUNs presented over iSCSI from the DC\SAN VM.

Once you have the VMWare environment configured and the 5 VMs created, proceed to install Windows and set up your DC\SAN. This first task involves adding the Active Directory Domain Services role to the DC\SAN node (don't worry about DNS, the DC promotion will take care of this). Once this role is installed, execute DCPROMO.EXE from a command prompt and follow the wizard, it will ask you to confirm you wish to install DNS services 😉

These are the general steps for creating the domain controller via DCPROMO.EXE

- Click next at the welcome screen

- Confirm the OS compatibility

- Select the option to create a new domain in a new forest and click next

- Provide a fully qualified domain name, if you own your own domain name you could use this and click next

- Confirm the forest functional level (the default of windows 2003 will be fine)

- Confirm the additional DNS components to install and click next

- Note the delegation warning and click yes to continue

- Select paths used for AD components, the defaults are fine

- Supply a restore mode admin password, this will be required to gain local access to restore the AD catalog should it be required

- Review the summary and click next, the install will start. Check the "Reboot on completion" checkbox

Once the wizard completes and you have rebooted, you should have a fully functioning domain controller.

Now install the Starwinds iSCSI SAN and configure the software. Starwinds have more than enough information on this in their software guide.

Now that we have the DC and SAN installed and configured it's time to create ourselves a set of LUNs for the Failover Cluster Instance. Log onto the SAN and open the Starwinds console, to create the LUNs seen below you'll need to follow the next few steps (once for each LUN required).

Create the LUNs

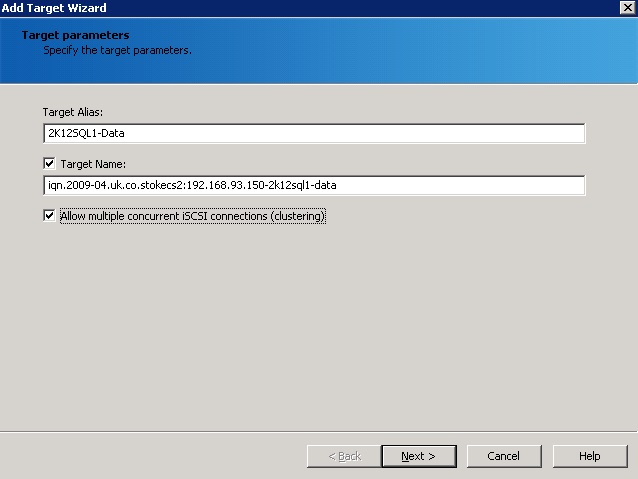

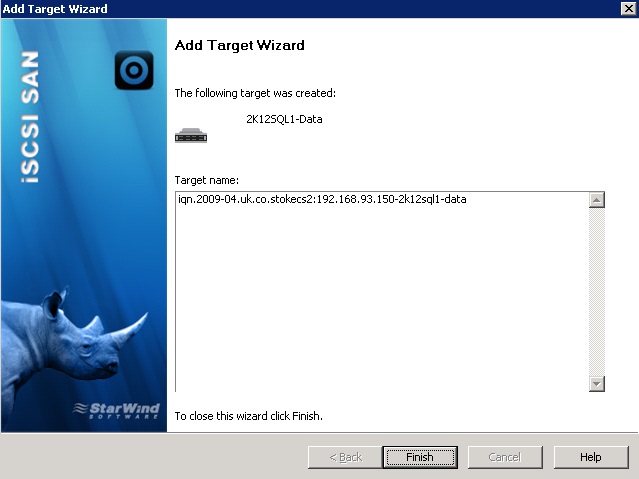

Right click "Targets" and click "Add Target", this will invoke the following wizard. Supply an alias and if required check the "Target-Name" checkbox and edit the name. Select the option to allow multiple concurrent iSCSI connections and click "Next".

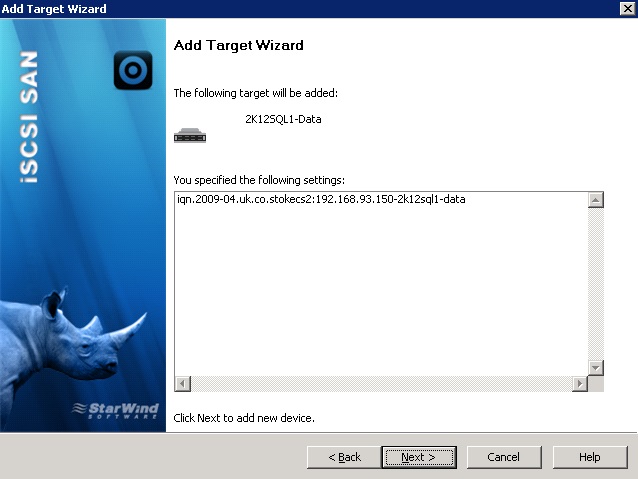

Click "Next"

Click "Finish". Complete this for each LUN you wish to present over iSCSI.

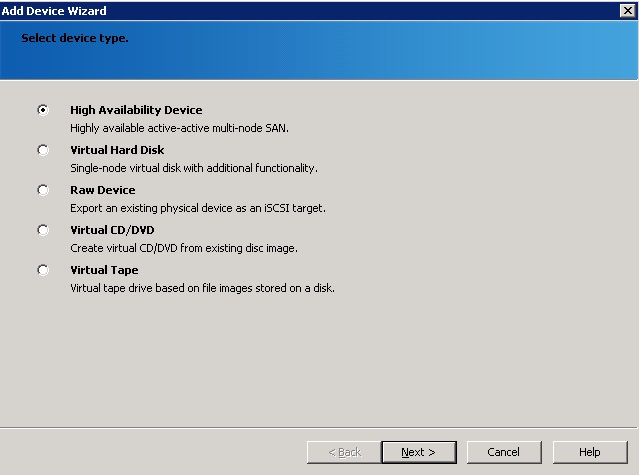

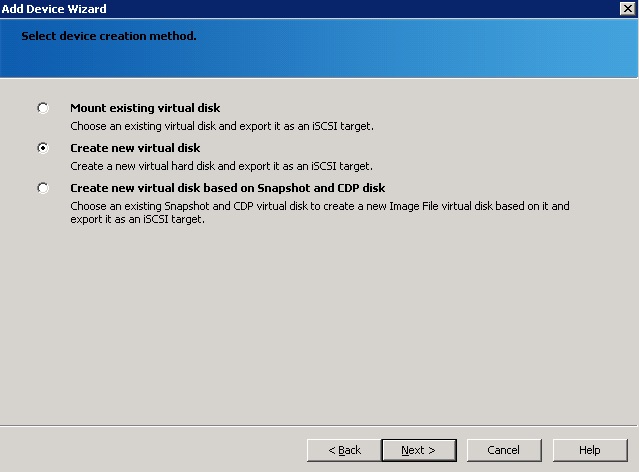

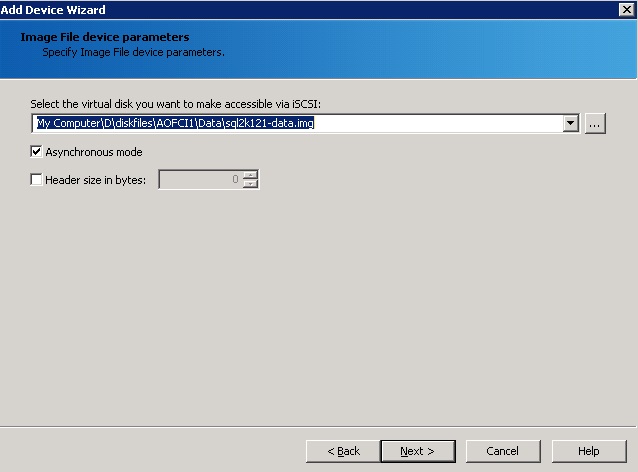

With all the Targets created you must now attach image file devices. In the Starwinds console right click "Devices" and select "Add Device", this will invoke the following wizard shown in the following steps

Select "Virtual Hard Disk" and click "Next"

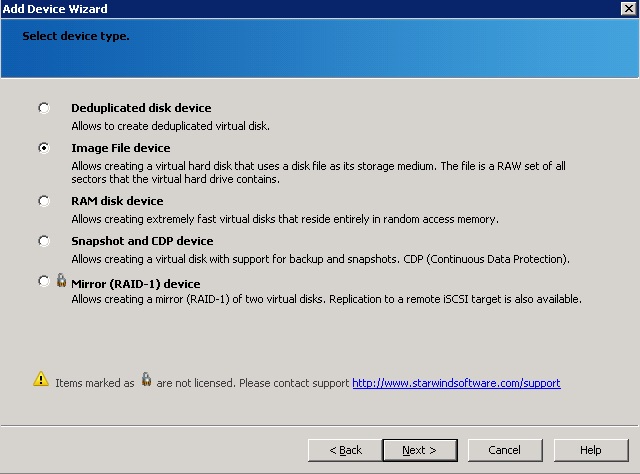

Select "Image File Device" and click "Next"

Select "Create new virtual disk" and click "Next"

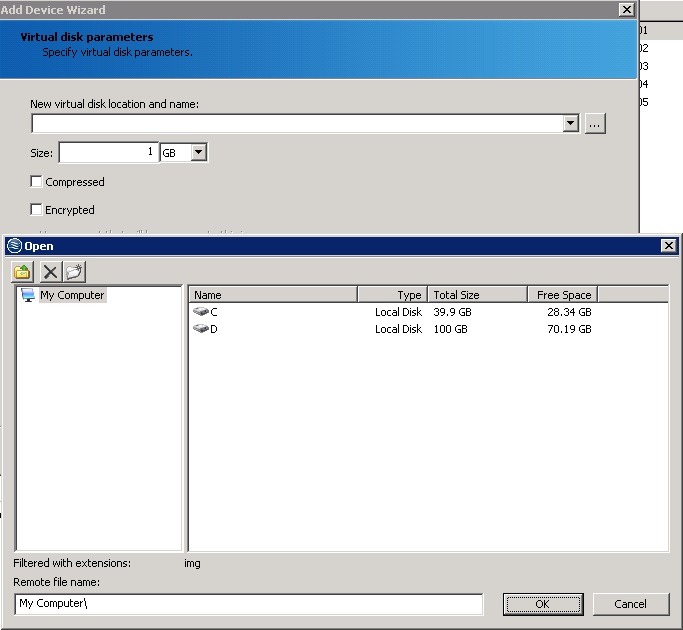

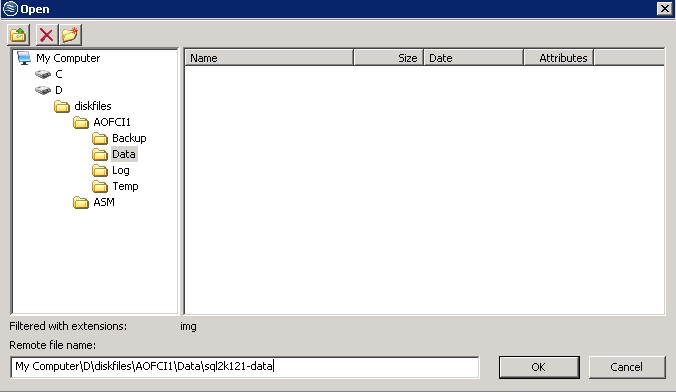

Clicking the ellipsis (...) button will show the file browser box. Select your chosen path and filename (don't add the file extension it will be done for you)

Setting the path and filename, click "OK" when done.

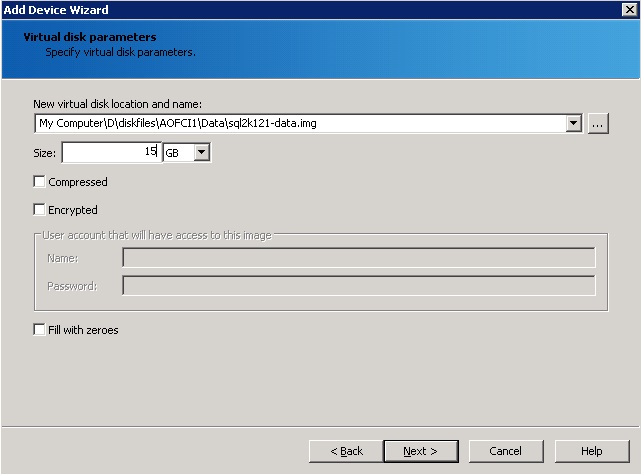

File details specified, supply a file size and file properties then click "Next"

Verify image device parameters and click "Next"

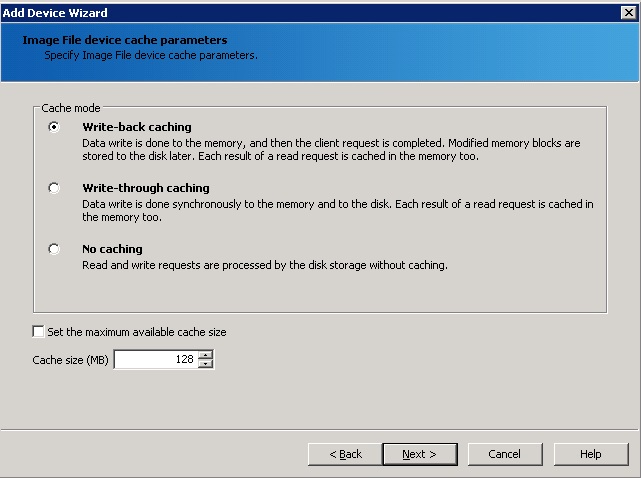

Configure caching (I select none usually) and click "Next"

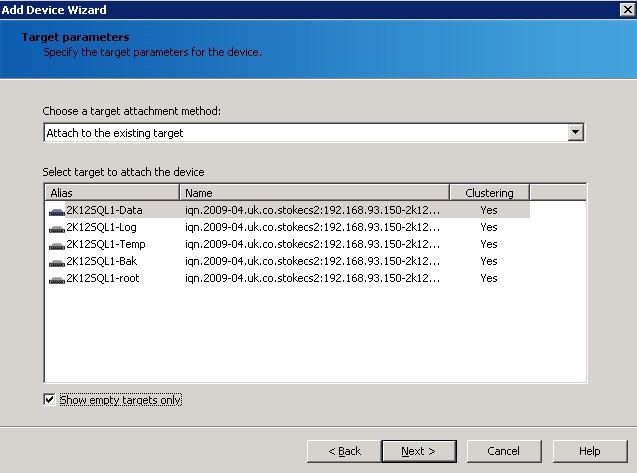

Select to attach to an existing target ( which you created earlier 😉 ) and click "Next". Checking the "Show empty targets only" checkbox makes things easier

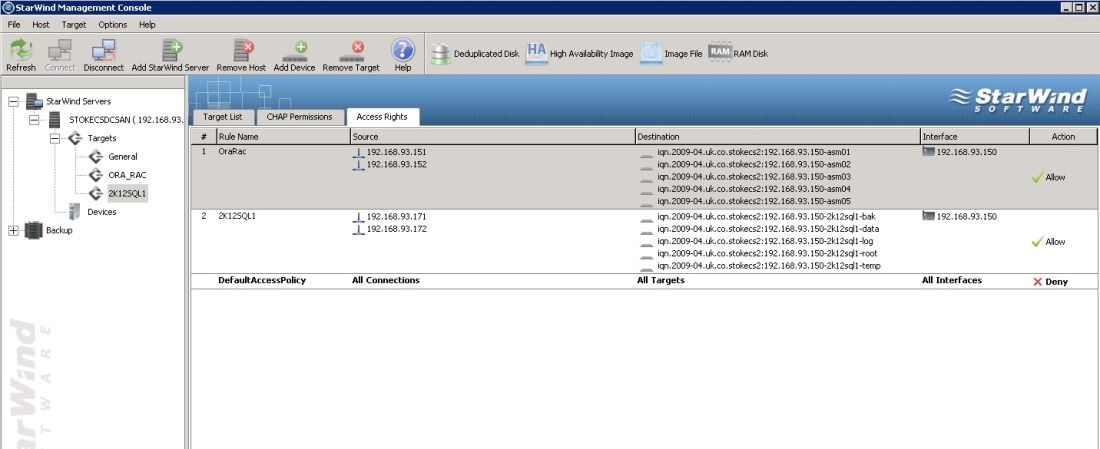

For the last 2 steps simply click "Next" and then "Finish". You will need to do this for each LUN you are presenting. You should see something along the lines of the screenshot below for the group 2K12SQL1

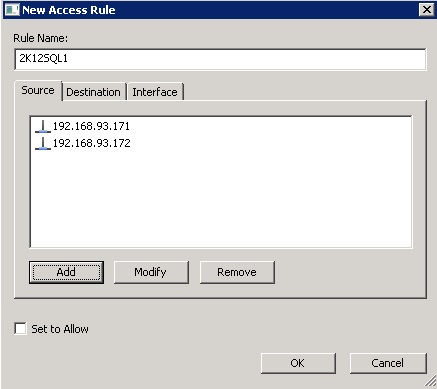

The Starwinds iSCSI SAN uses a rule set to ascertain which hosts may access which LUNs. We'll create a rule set now and set it to allow ready for the VMs to connect. On the "Access Rights" tab right click and select "Add Rule". On the dialog shown below give the rule a name and set the IP addresses of the hosts allowed to connect. Once you have set these select the Destination tab.

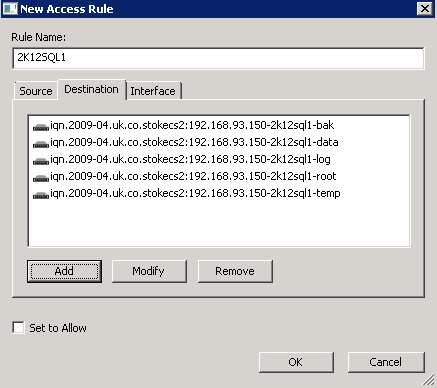

On the Destination tab add the devices included in this rule set, then select the Interface tab.

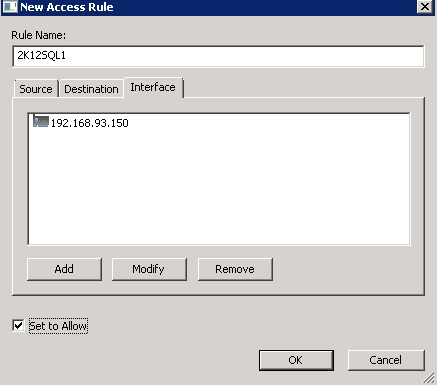

Add the interface on the iSCSI box that will listen for iSCSI connections and check the "Set to Allow" checkbox then click "OK". Remember we are using the 192.168.93.x range for our iSCSI network 😉

All LUNs have been created and the rule set provided to allow the 2 nodes to connect which will host the FCI. Now move onto the cluster node VMs themselves. Deploy the 4 VMs using the specification I detailed above. The Windows 2012 R2 installer is a cinch to complete. Follow the wizard and installation will be completed in next to no time. Don't be frightened to have a good look round the desktop and familiarise yourself with the look and feel. Once the OS has been deployed configure networking and join the machines to the new domain you created earlier.

Tip: ensure your public NIC has the IP address of the DC\SAN as its DNS server

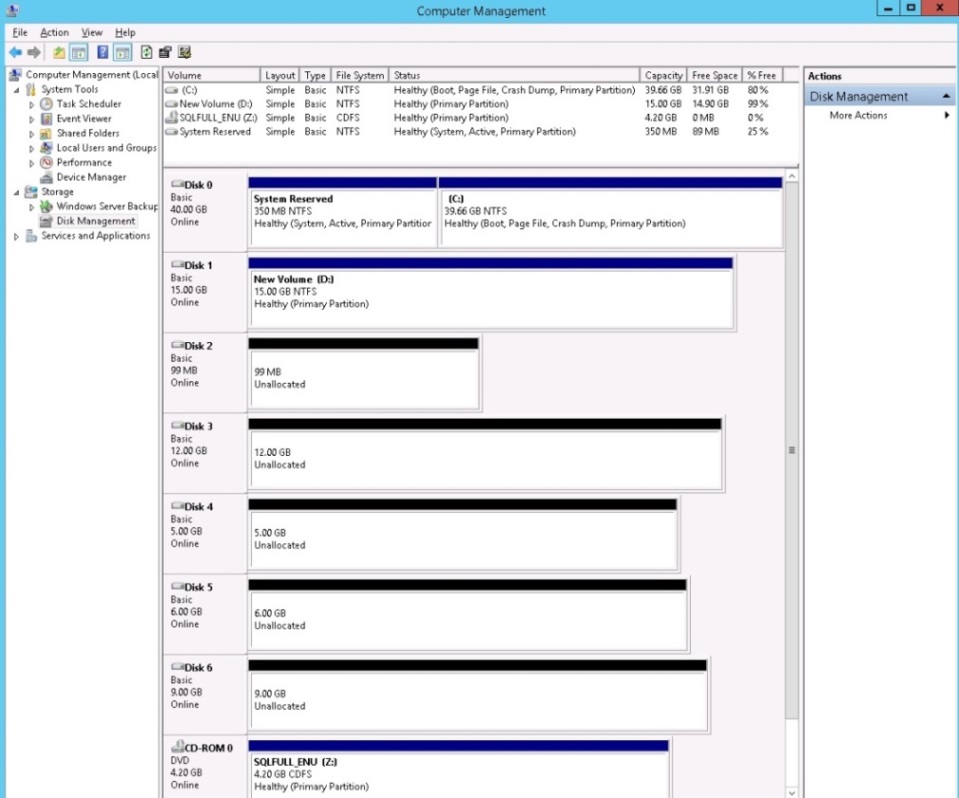

On the nodes 3 and 4 you'll need to format all the local disks and create volumes upon them. To achieve this open Computer Management and go to the Disk Management option.

To start all the disks will likely be offline, bring all disks online and right click and select to Initialise the disks. The online disks shown below.

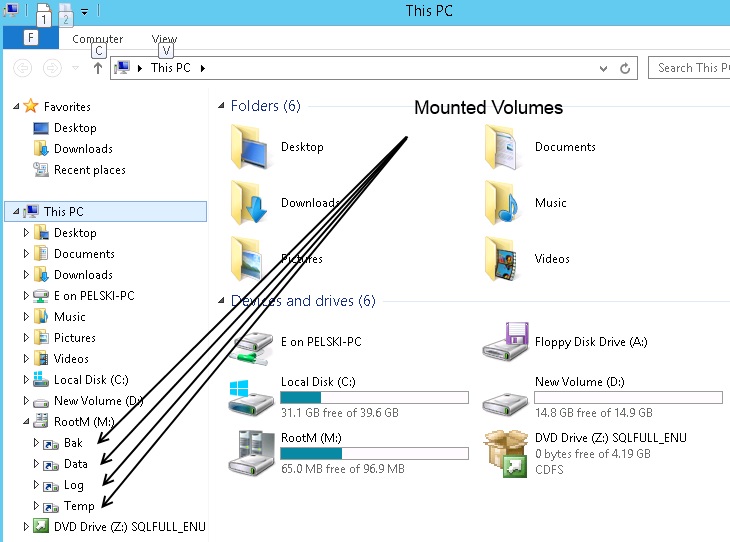

First format the root drive, this is the disk that is 100MB in size. Create a new volume and assign a drive letter. Once this is done create 4 folders at the root, the folders are:

- M:\Data

- M:\Log

- M:\Bak

- M:\Temp

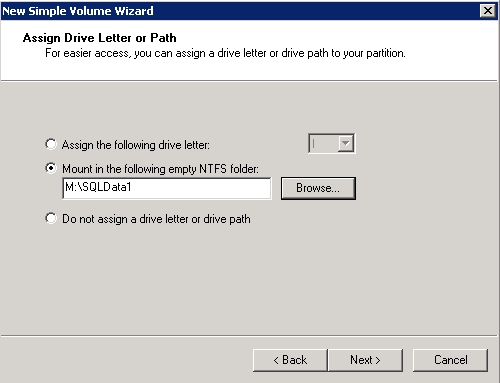

Now create and format the remaining 4 volumes but for each volume instead of assigning a drive letter, mount the disk under the appropriate folder you created above. The wizard has a section where you select either:

- drive letter

- no letter

- mounted folder

Below is an example of the create volume wizard step detailing the options available .

You should see the following for the mounted volumes:

Do this for both Node3 and Node4 and at that point we'll stop, I think you have more than enough to be getting on with 😀

Come back and read Part 2, where we'll assign the LUNs to Node1 and Node2 and create the Windows cluster. We'll also talk a bit more about the cluster quorum setup and the dynamic node weight option.

As always, if you're stuck with any of the above post in the discussion for extra help.