As I imagine (or hope), most of us adhere to some form of standards in the environment we work in, whether that means wearing a shirt and tie, being in by 9am or, for those of us in a database or development environment, coding standards.

Everyone who has worked with me knows that one of my standards is NO NULLABLE columns in any new development unless there is an extremely good, valid reason. To date, I’ve only ever had one developer convince me that they should make their column nullable, and that was because the current system design left us no choice short of a complete rewrite of that part of the system. I don’t really want this blog to turn into a big debate as to whether I’m right or wrong on this; it’s purely my view based on the many years I’ve been working with SQL Server and development teams.

There are many blogs out there where DBAs share the same or similar views, such as Thomas LaRock’s, in which he talks about the harm NULLs cause when a person doesn’t know they are there. What I’m going to focus on in this blog is where people do know the NULL values are there and tailor their T-SQL around them, specifically when they don’t want to show NULL on screen and so replace it with another value.

I’ve knocked up a quick script to populate a table, EMPLOYEE, with dummy data for the purposes of this blog. Yes, I’ve purposely left MODIFIEDBY_ID and MODIFIEDBY_DATE as NULLable, and no, I’m not saying this is the table design anyone should be using in their systems, especially given what comes later on.

    -- Demo table; MODIFIEDBY_ID and MODIFIEDBY_DATE are deliberately NULLable.
    CREATE TABLE EMPLOYEE
    (
         EMP_ID          INTEGER IDENTITY(1000,1) PRIMARY KEY NOT NULL
        ,DATE_OF_BIRTH   DATETIME      NOT NULL
        ,SALARY          DECIMAL(16,2) NOT NULL
        ,EMP_NAME        VARCHAR(50)   NOT NULL
        ,INSERTED_ID     INT           NOT NULL
        ,INSERTED_DATE   DATETIME      NOT NULL
        ,MODIFIEDBY_ID   INT           NULL
        ,MODIFIEDBY_DATE DATETIME      NULL
    )
    GO

    SET NOCOUNT ON ;

    -- The dates below are built as dd/mm/yyyy strings (style 103), so make sure
    -- the session parses them that way whatever the login language is.
    SET DATEFORMAT dmy ;

    DECLARE @Counter BIGINT ;
    DECLARE @NumberOfRows BIGINT ;

    -- First batch: rows that have never been modified (MODIFIEDBY_* stay NULL).
    -- The loop runs while @Counter < @NumberOfRows, i.e. @NumberOfRows - 1 inserts.
    SELECT @Counter = 1
          ,@NumberOfRows = 70000 ;

    WHILE ( @Counter < @NumberOfRows )
    BEGIN
        INSERT INTO EMPLOYEE
        (
             DATE_OF_BIRTH
            ,SALARY
            ,EMP_NAME
            ,INSERTED_ID
            ,INSERTED_DATE
        )
        SELECT CONVERT(VARCHAR(10) , getdate() - ( ( 18 * 365 ) + RAND() * ( 47 * 365 ) ) , 103)
              ,CONVERT(DECIMAL(16,2) , ( 50000 + RAND() * 90000 ))
              ,'Dummy Name' + CONVERT(VARCHAR(100) , @Counter)
              ,CONVERT(INT , rand() * 10000)
              ,CONVERT(VARCHAR(10) , getdate() - ( ( 18 * 365 ) + RAND() * ( 47 * 365 ) ) , 103) ;

        SET @Counter = @Counter + 1 ;
    END ;

    -- Second batch: rows that have been modified (MODIFIEDBY_* populated).
    SELECT @Counter = 1
          ,@NumberOfRows = 25000 ;

    WHILE ( @Counter < @NumberOfRows )
    BEGIN
        INSERT INTO EMPLOYEE
        (
             DATE_OF_BIRTH
            ,SALARY
            ,EMP_NAME
            ,INSERTED_ID
            ,INSERTED_DATE
            ,MODIFIEDBY_ID
            ,MODIFIEDBY_DATE
        )
        SELECT CONVERT(VARCHAR(10) , getdate() - ( ( 18 * 365 ) + RAND() * ( 47 * 365 ) ) , 103)
              ,CONVERT(DECIMAL(16,2) , ( 50000 + RAND() * 90000 ))
              ,'Dummy Name' + CONVERT(VARCHAR(100) , @Counter)
              ,CONVERT(INT , rand() * 10000)
              ,CONVERT(VARCHAR(10) , getdate() - ( ( 18 * 365 ) + RAND() * ( 47 * 365 ) ) , 103)
              ,CONVERT(INT , rand() * 10000)
              ,CONVERT(VARCHAR(10) , getdate() - ( ( 18 * 365 ) + RAND() * ( 47 * 365 ) ) , 103) ;

        SET @Counter = @Counter + 1 ;
    END ;

I also create a couple of non-clustered indexes that should be utilised by the sample queries below:

    CREATE INDEX idx1 ON employee ( MODIFIEDBY_DATE , inserted_date ) INCLUDE ( emp_id ,salary )
    CREATE INDEX idx2 ON employee ( MODIFIEDBY_DATE ) INCLUDE ( emp_id ,salary )

So, the table is there and the data populated, now for one of my biggest bugbears:

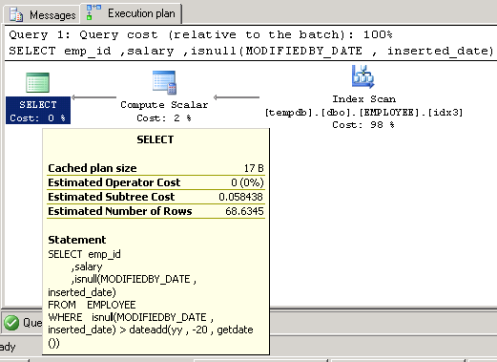

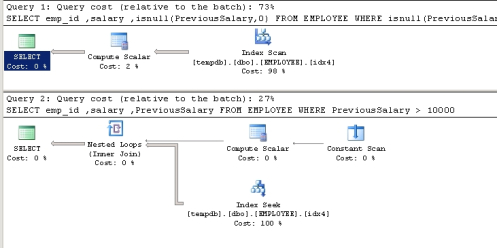

Query 1:

    SELECT emp_id
          ,salary
          ,isnull(MODIFIEDBY_DATE , inserted_date)
    FROM EMPLOYEE
    WHERE isnull(MODIFIEDBY_DATE , inserted_date) > dateadd(yy , -20 , getdate())

Arrrrgggghhhh!!!! Don’t get me wrong, I do understand why tables and columns like this exist, but for a DBA it’s a nightmare to tune: because the columns are wrapped in a function, the predicate is no longer SARGable, so any index you put on these columns will ultimately end in a Scan as opposed to a Seek.

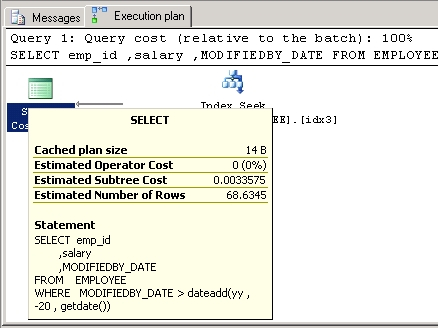
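Where the table design can’t be changed, one possible way to keep this predicate SARGable is to spell out the two cases that ISNULL hides. This is only a sketch: the `LastModifiedDate` alias is added for readability, and whether the optimiser actually chooses a seek on idx1 will depend on your data and statistics.

```sql
-- Same filter as Query 1, written without wrapping the filtered columns in ISNULL.
-- ISNULL is kept in the SELECT list only, where it does not affect index usage.
SELECT emp_id
      ,salary
      ,ISNULL(MODIFIEDBY_DATE , inserted_date) AS LastModifiedDate
FROM   EMPLOYEE
WHERE  MODIFIEDBY_DATE > DATEADD(yy , -20 , GETDATE())          -- modified rows
   OR  ( MODIFIEDBY_DATE IS NULL                                -- never-modified rows
         AND inserted_date > DATEADD(yy , -20 , GETDATE()) ) ;
```

The two branches are mutually exclusive (a row either has a MODIFIEDBY_DATE or it doesn’t), so the result set should match Query 1.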

As a comparison here is the query and execution plan for the query without the ISNULL function:

Query 2:

    SELECT emp_id
          ,salary
          ,MODIFIEDBY_DATE
    FROM EMPLOYEE
    WHERE MODIFIEDBY_DATE > dateadd(yy , -20 , getdate())

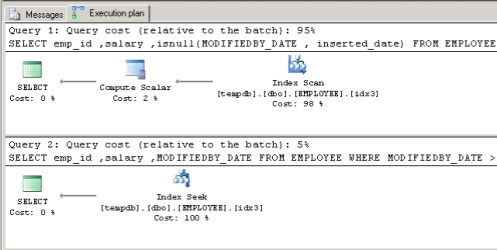

And a comparison of the two:

    SELECT emp_id
          ,salary
          ,isnull(MODIFIEDBY_DATE , inserted_date)
    FROM EMPLOYEE
    WHERE isnull(MODIFIEDBY_DATE , inserted_date) > dateadd(yy , -20 , getdate())

    SELECT emp_id
          ,salary
          ,MODIFIEDBY_DATE
    FROM EMPLOYEE
    WHERE MODIFIEDBY_DATE > dateadd(yy , -20 , getdate())

That’s 95% of the estimated batch cost for the ISNULL query against 5% for the one without it!! What a difference!

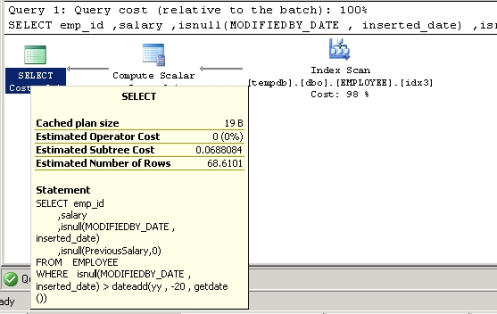
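The percentages in a plan are optimiser estimates, so it can be worth confirming them with actual I/O figures. A minimal sketch using SET STATISTICS IO, which reports logical reads per table in the Messages tab; no output is shown here because the numbers depend on the randomly generated data.

```sql
-- Report logical/physical reads for each statement that follows.
SET STATISTICS IO ON ;

-- Query 1: ISNULL wrapped around the filtered columns
SELECT emp_id
      ,salary
      ,ISNULL(MODIFIEDBY_DATE , inserted_date)
FROM   EMPLOYEE
WHERE  ISNULL(MODIFIEDBY_DATE , inserted_date) > DATEADD(yy , -20 , GETDATE()) ;

-- Query 2: plain column predicate
SELECT emp_id
      ,salary
      ,MODIFIEDBY_DATE
FROM   EMPLOYEE
WHERE  MODIFIEDBY_DATE > DATEADD(yy , -20 , GETDATE()) ;

SET STATISTICS IO OFF ;
```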

Now, for bugbear #2, I’ll need to add a new column with new values. Again, I’m not saying you should or would ever do this, but for the purposes of the blog:

    ALTER TABLE EMPLOYEE ADD PreviousSalary DECIMAL(16,2)

So I’ve now added a new column with all NULL values. However, I don’t want my users seeing the word NULL on the front end. Easy, I’ll replace it with a zero:

    SELECT emp_id
          ,salary
          ,isnull(MODIFIEDBY_DATE , inserted_date) AS LastModifiedDate
          ,isnull(PreviousSalary,0) AS PreviousSalary
    FROM EMPLOYEE
    WHERE isnull(MODIFIEDBY_DATE , inserted_date) > dateadd(yy , -20 , getdate())

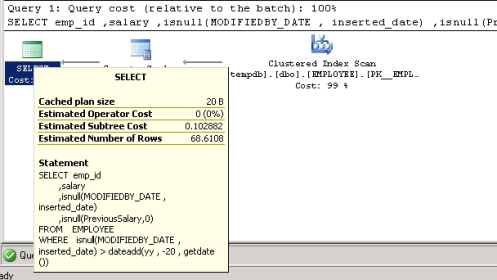

From this you can see that the SQL Optimiser now ignores the index created, because idx1 does not include the new column, and scans the clustered index instead.

Obviously I could amend idx1 to factor in this column or create a new index:

    CREATE INDEX idx3 ON employee ( MODIFIEDBY_DATE , inserted_date ) INCLUDE ( emp_id ,salary, PreviousSalary )

And sure enough it will choose that index but again, it’s a scan!
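If the ISNULL expression really is needed by many queries, another option that may be worth testing is an indexed computed column. This is a sketch only and was not part of the tests in this post; the column name `LastModifiedDate` and the index name `idx_LastModified` are hypothetical, and creating an index on a computed column assumes the usual session settings (for example QUOTED_IDENTIFIER and ANSI_NULLS ON, the SSMS defaults).

```sql
-- Hypothetical computed column exposing the expression the queries filter on.
ALTER TABLE EMPLOYEE
    ADD LastModifiedDate AS ISNULL(MODIFIEDBY_DATE , INSERTED_DATE) ;
GO

-- Index the computed column so a range predicate on it has something to seek on.
CREATE INDEX idx_LastModified
    ON EMPLOYEE ( LastModifiedDate )
    INCLUDE ( emp_id , salary , PreviousSalary ) ;
GO

-- Filter on the computed column directly; ISNULL on PreviousSalary is display only.
SELECT emp_id
      ,salary
      ,LastModifiedDate
      ,ISNULL(PreviousSalary , 0) AS PreviousSalary
FROM   EMPLOYEE
WHERE  LastModifiedDate > DATEADD(yy , -20 , GETDATE()) ;
```

This keeps the NULLable column in place, so it treats the symptom rather than the design issue, but it can be a pragmatic stopgap when a redesign isn’t possible.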

OK, so you may well be thinking that this doesn’t happen often or wouldn’t cause too much of an issue on your system, which may very well be correct. However, what about a highly transactional financial system with hundreds of millions of rows? Yes, it does happen! What makes matters even worse is that if you wanted to search for (in this example) PreviousSalary > 10000, the usual reaction is to handle the NULL values by converting them to another value (typically 0), which raises the question: why is the column not set to an arbitrary default value in the first place?

I’ve updated a random selection of PreviousSalary records to now have non-NULL values and added a new index:

    CREATE INDEX idx4 ON employee ( PreviousSalary ) INCLUDE ( emp_id ,salary )
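The update itself isn’t shown above; one hypothetical way to give a random subset of rows a value is sketched below. The one-in-three sampling and the 90% figure are purely illustrative assumptions, not the values behind the plans in this post.

```sql
-- Illustrative only: give roughly a third of the rows a PreviousSalary.
-- NEWID() is evaluated per row, so CHECKSUM(NEWID()) produces a different
-- pseudo-random integer for each row (RAND() would be evaluated once per statement).
UPDATE EMPLOYEE
SET    PreviousSalary = SALARY * 0.9
WHERE  CHECKSUM(NEWID()) % 3 = 0 ;
```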

Running the query to handle the NULLs will still produce an Index Scan:

    SELECT emp_id
          ,salary
          ,isnull(PreviousSalary,0)
    FROM EMPLOYEE
    WHERE isnull(PreviousSalary,0) > 10000
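For this particular filter, the ISNULL in the WHERE clause isn’t strictly needed: a NULL PreviousSalary never satisfies `> 10000`, and neither does the substituted 0, so the same rows come back either way. A sketch that keeps ISNULL for display only and leaves the predicate free to seek on idx4 (whether it does will depend on how selective the filter is):

```sql
-- ISNULL stays in the SELECT list for presentation; the WHERE clause
-- compares the bare column, so the predicate remains SARGable.
SELECT emp_id
      ,salary
      ,ISNULL(PreviousSalary , 0) AS PreviousSalary
FROM   EMPLOYEE
WHERE  PreviousSalary > 10000 ;
```

The shortcut only holds when the replacement value fails the comparison. For a filter such as `< 10000`, the NULL rows would need an explicit `OR PreviousSalary IS NULL` to match what ISNULL(PreviousSalary,0) returns.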

If I now update all the NULL values to 0 (I could have used an arbitrary figure such as -1, -100 or even -99999 to indicate a NULL value) and amend the query above so that it no longer needs ISNULL, we can compare the execution plans of the two versions:

    SELECT emp_id
          ,salary
          ,isnull(PreviousSalary,0)
    FROM EMPLOYEE
    WHERE isnull(PreviousSalary,0) > 10000

    SELECT emp_id
          ,salary
          ,PreviousSalary
    FROM EMPLOYEE
    WHERE PreviousSalary > 10000
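The backfill described above could look something like the following. It is a sketch under assumptions: 0 is the chosen stand-in value, and the constraint name `DF_EMPLOYEE_PreviousSalary` is hypothetical.

```sql
-- Replace the existing NULLs with the chosen stand-in value.
UPDATE EMPLOYEE
SET    PreviousSalary = 0
WHERE  PreviousSalary IS NULL ;

-- Make sure rows inserted without a PreviousSalary get the same value in future.
ALTER TABLE EMPLOYEE
    ADD CONSTRAINT DF_EMPLOYEE_PreviousSalary DEFAULT (0) FOR PreviousSalary ;
```

A default only helps when the column is omitted from an INSERT; an explicit NULL would still be accepted. To rule that out, the column would also need to be altered to NOT NULL, which may require dropping and recreating any indexes that reference it (idx3 and idx4 here).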

I think by now everyone will be getting the picture. So how do you rectify this? First and foremost, it’s education. Speak with your developers, do “Lunch ‘n’ Learn” sessions, anything you can to express how much of an issue this can potentially be, and make the time to sit with them and (re)design that part of the system so that you don’t have to handle NULLs either at the query level or on the front end. Once they understand that using the ISNULL function in this way makes their query non-SARGable and ultimately hurts performance, I’m confident that you won’t have any issues converting them to this method.

I know that the majority of people who read this may not take anything away from it, but if even a handful of you can take this and speak with your development teams about this very simple concept of database design, you could save yourselves major headaches in the future!