Good day everyone , In last post

In Memory OLTP – 1

we saw the what is In Memory OLTP and what are the requirements for that. In This post I will show you the demo of how we get performance gain.

The scripts (demo ) i will be using are from MSDN. which i will be using on my local machine to show you the the demo.

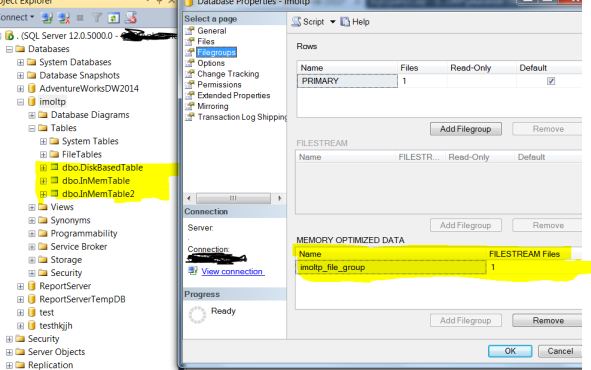

- Create a database named imoltp and alter its file details to set it up for using In-Memory OLTP.

— Create a new database.

CREATE DATABASE imoltp

GO

— Prepare the database for In-Memory OLTP by adding a memory-optimized filegroup to the database.

ALTER DATABASE imoltp ADD FILEGROUP imoltp_file_group

CONTAINS MEMORY_OPTIMIZED_DATA;

— Add a file (to hold the memory-optimized data) to the new filegroup.

ALTER DATABASE imoltp ADD FILE (name=’imoltp_file’, filename=’C:\Program Files\Microsoft SQL Server\MSSQL12.MSSQLSERVER\MSSQL\DATA\imoltp_file’)

TO FILEGROUP imoltp_file_group;

GO

2.Create the database objects for our sample:

Three tables and a natively-compiled stored procedure.

run the following code to create the disk-based table, two (2) memory-optimized tables, and the natively-compiled stored procedure that will be used to demonstrate the different data access methods:

USE imoltp

GO

— If the tables or stored procedure already exist, drop them to start clean.

IF EXISTS (SELECT NAME FROM sys.objects WHERE NAME = ‘DiskBasedTable’)

DROP TABLE [dbo].[DiskBasedTable]

GO

IF EXISTS (SELECT NAME FROM sys.objects WHERE NAME = ‘InMemTable’)

DROP TABLE [dbo].[InMemTable]

GO

IF EXISTS (SELECT NAME FROM sys.objects WHERE NAME = ‘InMemTable2’)

DROP TABLE [dbo].[InMemTable2]

GO

IF EXISTS (SELECT NAME FROM sys.objects WHERE NAME = ‘usp_InsertData’)

DROP PROCEDURE [dbo].[usp_InsertData]

GO

— Create a traditional disk-based table.

CREATE TABLE [dbo].[DiskBasedTable] (

c1 INT NOT NULL PRIMARY KEY,

c2 NCHAR(48) NOT NULL

)

GO

— Create a memory-optimized table.

CREATE TABLE [dbo].[InMemTable] (

c1 INT NOT NULL PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT=1000000),

c2 NCHAR(48) NOT NULL

) WITH (MEMORY_OPTIMIZED=ON, DURABILITY = SCHEMA_AND_DATA);

GO

— Create a 2nd memory-optimized table.

CREATE TABLE [dbo].[InMemTable2] (

c1 INT NOT NULL PRIMARY KEY NONCLUSTERED HASH WITH (BUCKET_COUNT=1000000),

c2 NCHAR(48) NOT NULL

) WITH (MEMORY_OPTIMIZED=ON, DURABILITY = SCHEMA_AND_DATA);

GO

— Create a natively-compiled stored procedure.

CREATE PROCEDURE [dbo].[usp_InsertData]

@rowcount INT,

@c NCHAR(48)

WITH NATIVE_COMPILATION, SCHEMABINDING, EXECUTE AS OWNER

AS

BEGIN ATOMIC

WITH (TRANSACTION ISOLATION LEVEL = SNAPSHOT, LANGUAGE = N’us_english’)

DECLARE @i INT = 1;

WHILE @i <= @rowcount

BEGIN

INSERT INTO [dbo].[inMemTable2](c1,c2) VALUES (@i, @c);

SET @i += 1;

END

END

3.Run the different queries and display the response times for each query.

Run the following code multiple times. Ignore the results from the first run which is negatively affected by initial memory allocation.

SET STATISTICS TIME OFF;

SET NOCOUNT ON;

— Delete data from all tables to reset the example.

DELETE FROM [dbo].[DiskBasedTable]

WHERE [c1]>0

GO

DELETE FROM [dbo].[inMemTable]

WHERE [c1]>0

GO

DELETE FROM [dbo].[InMemTable2]

WHERE [c1]>0

GO

— Declare parameters for the test queries.

DECLARE @i INT = 1;

DECLARE @rowcount INT = 100000;

DECLARE @c NCHAR(48) = N’12345678901234567890123456789012345678′;

DECLARE @timems INT;

DECLARE @starttime datetime2 = sysdatetime();

— Disk-based table queried with interpreted Transact-SQL.

BEGIN TRAN

WHILE @I <= @rowcount

BEGIN

INSERT INTO [dbo].[DiskBasedTable](c1,c2) VALUES (@i, @c);

SET @i += 1;

END

COMMIT

SET @timems = datediff(ms, @starttime, sysdatetime());

SELECT CAST(@timems AS VARCHAR(10)) + ‘ ms (disk-based table with interpreted Transact-SQL).’;

— Memory-optimized table queried with interpreted Transact-SQL.

SET @i = 1;

SET @starttime = sysdatetime();

BEGIN TRAN

WHILE @i <= @rowcount

BEGIN

INSERT INTO [dbo].[InMemTable](c1,c2) VALUES (@i, @c);

SET @i += 1;

END

COMMIT

SET @timems = datediff(ms, @starttime, sysdatetime());

SELECT CAST(@timems AS VARCHAR(10)) + ‘ ms (memory-optimized table with interpreted Transact-SQL).’;

— Memory-optimized table queried with a natively-compiled stored procedure.

SET @starttime = sysdatetime();

EXEC usp_InsertData @rowcount, @c;

SET @timems = datediff(ms, @starttime, sysdatetime());

SELECT CAST(@timems AS VARCHAR(10)) + ‘ ms (memory-optimized table with natively-compiled stored procedure).’;

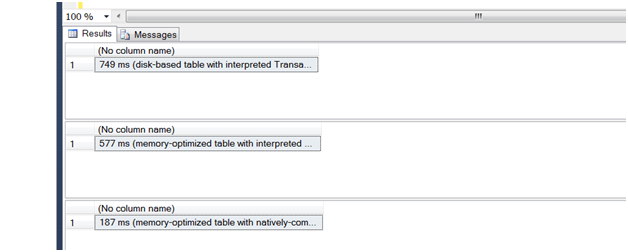

here is what i recived as output

How memory-optimized tables perform faster

Dual nature:

A memory-optimized table has a dual nature: one representation in active memory, and the other on the hard disk. Each transaction is committed to both representations of the table. Transactions operate against the much faster active memory representation. Memory-optimized tables benefit from the greater speed of active memory versus the disk. Further, the greater nimbleness of active memory makes practical a more advanced table structure that is optimized for speed. The advanced structure is also pageless, so it avoids the overhead and contention of latches and spinlocks.

No locks:

The memory-optimized table relies on an optimistic approach to the competing goals of data integrity versus concurrency and high throughput. During the transaction, the table does not place locks on any version of the updated rows of data. This can greatly reduce contention in some high volume systems.

Row versions:

Instead of locks, the memory-optimized table adds a new version of an updated row in the table itself, not in tempdb. The original row is kept until after the transaction is committed. During the transaction, other processes can read the original version of the row.

When multiple versions of a row are created for a disk-based table, row versions are stored temporarily in tempdb.

Less logging:

The before and after versions of the updated rows are held in the memory-optimized table. The pair of rows provides much of the information that is traditionally written to the log file. This enables the system to write less information, and less often, to the log. Yet transactional integrity is ensured.

How native procs perform faster

Reduction in instruction

Converting a regular interpreted stored procedure into a natively compiled stored procedure greatly reduces the number of instructions to execute during run time.

Trade-offs of In-Memory features

As is common in computer science, the performance gains provided by the In-Memory features are a trade-off. The better features bring benefits that are more valuable than the extra costs of the feature.

Trade-offs of memory-optimized tables

Estimate memory: You must estimate the amount of active memory that your memory-optimized table will consume. Your computer system must have adequate memory capacity to host a memory-optimized table.

Partition your large table: One way to meet the demand for lots of active memory is to partition your large table into parts in-memory that store hot recent data rows versus other parts on the disk that store cold legacy rows (such as sales orders that have been fully shipped and completed). This partitioning is a manual process of design and implementation.

Trade-Offs Of native procs

A natively compiled stored procedure cannot access a disk-based table. A native proc can access only memory-optimized tables.

When a native proc runs for its first time after the server or database was most recently brought back online, the native proc must be recompiled one time. This causes a delay before the native proc starts to run.

That’s All folks in this post. suggestion and comments are welcome. will try to add more about index for In memory OLTP in next post till than enjoy and keep reading