We have a table that contains just over 1.7 billion records and requires a daily feeding of around 9 million. It is split into 10, roughly equal, partitions. I originally just pushed the daily feeding into the main table from a staging table and it worked well, but thought to myself, as I nearly always do when developing: "Is this the best that can be done?". The single source stuck in the back of my head, but at the time never made it forward.

While working on a different project, I realized that sometimes pre-processing specific pieces of information provides an overall benefit to the process. That's when the single source thought in the back of my head came sliding forward and smacked me. Pre-process the single source using the partitions!

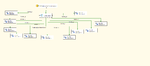

Here is what the steps look like:

The first Data Flow Task, "Pull Missing Records multi", extracts the 9 million records and divides them up into 10 Raw File Destination components, based upon the partition function.

I recreated the partition function, very easily, in a Conditional Split component. I put them in the order they appeared in the function in SQL Server.

I decided to split the data loading into 3 Data Flow Tasks 4/4/3. Oh, you might be thinking, "Hey, Joe! That adds up to 11". It does. I have the default output just in case something is not caught by the partition function. This has always been 0. Now I have to read the data back in.

Each of the OLEDB Destination Tasks looks like this:

The important thing is NOT to select 'Table Lock', however you can choose whatever 'Maximum Insert Commit Size' suits your needs. This improved our load times significantly, but of course your mileage will vary. What I hope to have shown you is to think of problems in a less static way. Things aren't always as rigid as they might appear.