I wanted to find out how many SQL Saturday’s I’d spoken at and how I ranked with others. I got a spreadsheet from Kendal Van Dyke at one point, but it was quickly out of date. However Kendal mentioned that there was an XML document on the site, and I looked. Sure enough, there is, but it’s separate for each event.

That makes sense, and it’s fine. I can get the data and put it together. That’s a nice project and I decided to tackle it with Powershell. Certainly SSIS would work, and I may do that as well, or leave it to you to do. There are others that have done this and Kendal has an XLS he’s updated with this data, but this is something I wanted to just try.

In any case, here was my plan:

- Download the XML file for each event.

- Save the XML locally.

- Parse out the event node, capturing the title and speaker.

- Load data into a SQL Server database on Azure.

- Report on speakers and events.

That’s it, and it’s not a big project, but it does take a little work to get the pieces to work well. I have potential load issues, duplicate data, etc.

This post will look at just the first and second items, downloading the XML data for each event and saving it.

Downloading XML – Technique #1

This is the first way I found to do this, which was interesting. I hadn’t expected this, thinking I’d need to load the XML document and then save it. However the Invoke-WebRequest doesn’t need that. It has this format:

Invoke-WebRequest $sourceURL -OutFile $DestinationFile

I can give a source file location (URL) and a destination, and it works. I used this code, and it downloaded an XML file to my local machine.

# get SQL Saturday data from the site

$debug = 1;

# counter for events

$i = 1

$baseURL = “http://www.sqlsaturday.com/eventxml.aspx?sat=”

$DestinationFile = “E:\SQLSatData\SQLSat” + $i + “.xml”

$sourceURL = $baseURL + $i

# debug information

if ($debug -eq 1) {

write-host $DestinationFile

}

if ($debug -eq 2) {

Write-Host $sourceURL

}

# Get file from web server

Invoke-WebRequest $sourceURL -OutFile $DestinationFile

Now if I add a counter to increment the $i variable, I’ll get all the files.

Downloading – Technique #2

The other way of doing this is to load an XML document from a path. In this case, instead of the Invoke-WebRequest, I’ll use this code:

$doc = New-Object System.Xml.XmlDocument

$doc.Load($sourceURL)

$doc.Save($DestinationFile)

I create an XML document, load it, and then save it to the path.

Looping

In both of these cases, I add a looping item, looking for an error. For me, I decided to use 9999 as the loop terminator. That’s an easy one, since I’m not sure we’ll get to 9999 events any time soon.

I added this code to the top:

While ($i -lt 9999) {

I then covered the load call with a TRY..CATCH.

try {

$doc.Load($sourceURL)

# save file

$doc.Save($DestinationFile)

}

Catch

{

# if we can’t load the file, assume we’re done for now.

$i = 9999

}

$i = $i + 1

# end loop

}

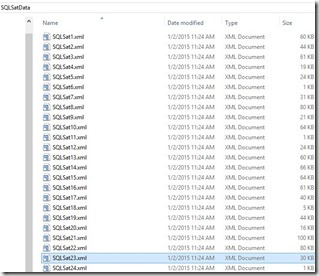

This worked OK, as you can see, but it failed early on.

Event 39 doesn’t have an XML file. In fact, the site for SQL Saturday #39 – New York City, fails with an ASP.NET error.

This isn’t a good design, but it was a good start and allowed me to get moving on the data. From here, I can start working on the parsing and import procedures.

A better development process might be to encapsulate the download into a separate process and pass in the path, as I can use this same technique to read my local XML files. I should also separate out the save, and the parsing.

Of course, I need a better way to error handle and loop through files. I don’t want to download all the files every time, so I think I should have some parsing of my file system, finding which files I’m missing, and then ignoring those in my loop. I should also be limiting my downloads based on some number, which I’m not sure about how to calculate now, but I’ll think about it. I guess I could increment based on some list, but I’d have to get one from the SQL Saturday people. Or I could take a high guess, like 500, and just try to load all those files.

References

- http://www.powershellatoms.com/basic/download-file-website-powershell/

- http://stackoverflow.com/questions/1819341/how-to-read-xml-file-from-web-using-powershell

- http://stackoverflow.com/questions/13732715/loop-through-xml-elements

- http://www.vexasoft.com/blogs/powershell/7255220-powershell-tutorial-try-catch-finally-and-error-handling-in-powershell

Filed under: Blog Tagged: powershell, SQL Saturday, syndicated, xml

![]()