By Steve Bolton

…………As I’ve said many times throughout this series of amateur mistutorials, SQL Server Data Mining (SSDM) is one of the most powerful yet neglected products Microsoft has to offer. The ability to extend it with your own algorithms is in turn the most powerful yet neglected aspect of SSDM itself. The product ships with the nine algorithms discussed earlier in this series, which are quite useful out-of-the-box but nonetheless represent just a drop in the bucket among the thousands of extant mining methods in use today – not to mention the thousands more that are expected to be developed in coming decades, as “Big Analysis” becomes a leading field in IT alongside Big Data. Despite the fact that Microsoft has largely neglected SSDM and Analysis Services for much of the past decade (particularly now that the top brass is obsessed with Tablet Panic) they still remain among the leading database server products. One of the most attractive aspects of SSDM is its extensibility, which, as discussed in my last article, helps data miners avoid reinventing the wheel by taking care of such functionality as backups, security and transaction management, and access through such tools as Excel, SQL Server Integration Services (SSIS), Reporting Services and the Data Mining Extensions (DMX) language processor in SQL Server Management Studio (SSMS). Despite these advantages, only a few third-party vendors have developed professional plug-in algorithms for SSDM. This is mainly due to lack of awareness of just how good the tools are, which I hope to alleviate somewhat by giving SSDM some badly needed free press in this series of articles.

…………I also put a drop in the bucket on behalf of the programming language I’m most comfortable with, Visual Basic .Net, by providing what may be the only tutorial on the Internet for SSDM plug-ins written in VB. I’ve only found a few other tutorials on the subject, all of which seem to be derived from the definitive SQL Server Data Mining Managed Plug-In Algorithms Tutorial, a Microsoft Word document written by founding SSDM developer Bogdan Crivat and included with the plug-in Software Development Kit (SDK). The last article in this series described how to download and install the SDK and compile the DMPluginWrapper.dll file, which your Visual Studio project must reference. The latter step is one of the aspects of SSDM that could use a makeover after eight years of declining attention from Microsoft, since a native .Net architecture for plug-ins would probably perform better and be easier to write and maintain than the COM interop made possible by this .dll. Once it’s built, however, it is a simple matter to set a reference to it, which should be one of the first actions you take after creating a new Visual Studio class project to host your plug-in. I’m not going to explain how to create such a project, which is one of those trivial steps that often clutter programming tutorials and therefore one of the last distractions we want to deal with when doing high-level algorithm development. The project we’ll be working with for the next few tutorials is a VB class type that was originally derived from the sample code provided in Crivat’s .doc, but which is now almost completely unrecognizable after being converted from C# and substantially and repeatedly altered after that to suit my needs. Perhaps the second-best tutorial I’ve found on SSDM plug-ins is a five-year-old .pdf titled Microsoft Business Intelligence with Numerical Libraries: A White Paper by Visual Numerics, Inc., which is also written in C# and appears closely related to Crivat’s original sample code.
I have yet to find a lesson in plug-in development that is not centered on simple Clustering algorithms, so I’ll take a fresh approach and illustrate how to use other data mining methods.

…………In fact, just to get us started and get SSDM to return some kind of simple result, we’ll begin by calculating a few statistics that would normally be considered far too trivial to calculate with data mining software. Skewness measures how lopsided a dataset is, while kurtosis and excess kurtosis measure how heavy its tails are; all three are calculated using well-known and relatively simple formulas which I found at this online calculator and its sister site, and verified at this webpage in the National Institute of Standards and Technology (NIST) Engineering Statistics Handbook, which is one of the most readable and concise books on stats I’ve yet come across. I selected these particular statistics because they were fairly simple, yet I knew little about them, plus I needed some practice converting simple equations into code. I won’t even bother to clutter this tutorial further by posting the code of the classes here, but I’ll provide links to the two classes I developed to implement the logic of my “algorithm,” if it is worthy of such a title. SkewnessKurtosisClass has a constructor that takes the case count, mean and variance of a particular mining model column, then stores them in class-scoped variables. It also feeds the variance to a method that calculates the column’s standard deviation and stores it in the same kind of variable. During algorithm training, the standard deviation, the mean, an array of data points representing the column (i.e. “attribute” in data mining parlance) and an iterator to identify which column is being operated on are fed to methods that calculate skewness and kurtosis. Excess kurtosis is merely the kurtosis minus three, which provides a more human-readable value of zero for normal distributions of data (i.e. bell curves). This class also has a class-scoped TotalValue variable, which is a cumulative sum of all the raw data values for a particular column that is calculated outside the class itself.
My simple project also has a DataPointClass which tracks all of the individual values, which have to be stored in an array of these objects and fed en masse to the other class in order to calculate global stats like skewness and kurtosis. The DataPointClass also has a class-scoped Boolean named IsEqualToMaxSupport, which is supposed to identify whether a particular data point is equal to the maximum value in a column, but which is not working properly yet as of this writing. None of the internal details of these particular classes are of any great importance, since the object at this point is merely to prod SSDM into returning some kind of output. In fact, the calculations for skewness and kurtosis are a little off, because I’m relying on variance values precalculated by SSDM, which uses a biased formula (one that divides by the full case count, treating the data as an entire population) rather than the unbiased formula (one that divides by the case count minus one, treating the data as a sample) I found in the above sources. It would only take a few lines of extra code to correct this, but I’m trying to keep the number of lines to the barest possible minimum in order to diminish possible confusion. Our purpose for now is simply to make SSDM perform the simplest of tasks, so that we can concentrate on explaining the plug-in object model. If you need to review the trivial code within these classes, you can follow these links to the SkewnessKurtosisClass.vb and DataPointClass.vb.
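For readers who want to see the arithmetic without opening the linked classes, here is a minimal standalone sketch of the calculations described above. It is not the code of SkewnessKurtosisClass; the module and function names are hypothetical, and it assumes the moment-based formulas with a biased (divide by the case count) standard deviation, which is the kind of variance SSDM precalculates.

```vbnet
Imports System

Module SkewnessKurtosisSketch

    ' Arithmetic mean of the column values.
    Function Mean(values As Double()) As Double
        Dim total As Double = 0.0
        For Each v As Double In values
            total += v
        Next
        Return total / values.Length
    End Function

    ' Biased (population) variance: divides by n rather than n - 1.
    Function BiasedVariance(values As Double(), avg As Double) As Double
        Dim sumSquares As Double = 0.0
        For Each v As Double In values
            sumSquares += Math.Pow(v - avg, 2)
        Next
        Return sumSquares / values.Length
    End Function

    ' Average deviation from the mean raised to the given power,
    ' divided by the standard deviation raised to the same power.
    Function StandardizedMoment(values As Double(), avg As Double,
                                stdDev As Double, power As Integer) As Double
        Dim total As Double = 0.0
        For Each v As Double In values
            total += Math.Pow(v - avg, power)
        Next
        Return (total / values.Length) / Math.Pow(stdDev, power)
    End Function

    ' Skewness is the third standardized moment.
    Function Skewness(values As Double(), avg As Double, stdDev As Double) As Double
        Return StandardizedMoment(values, avg, stdDev, 3)
    End Function

    ' Kurtosis is the fourth standardized moment.
    Function Kurtosis(values As Double(), avg As Double, stdDev As Double) As Double
        Return StandardizedMoment(values, avg, stdDev, 4)
    End Function

    ' Excess kurtosis subtracts three, so a normal distribution scores zero.
    Function ExcessKurtosis(kurtosisValue As Double) As Double
        Return kurtosisValue - 3.0
    End Function

    Sub Main()
        ' Dummy data for a single column.
        Dim data As Double() = {1.0, 2.0, 3.0, 4.0, 10.0}
        Dim avg As Double = Mean(data)
        Dim stdDev As Double = Math.Sqrt(BiasedVariance(data, avg))
        Dim k As Double = Kurtosis(data, avg, stdDev)
        Console.WriteLine("Skewness: " & Skewness(data, avg, stdDev))
        Console.WriteLine("Kurtosis: " & k)
        Console.WriteLine("Excess kurtosis: " & ExcessKurtosis(k))
    End Sub

End Module
```

In the plug-in itself the mean and variance would come from SSDM’s precalculated marginal statistics rather than being recomputed as they are here.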

…………A typical plug-in project will consist of user-defined classes like the SkewnessKurtosisClass and DataPointClass that encapsulate most of the logic of your custom algorithms, as well as three abstract classes that control processing, navigation and other basic tasks: AlgorithmMetadataBase, AlgorithmBase and AlgorithmNavigationBase. Almost all of their methods must be overridden, although you can add methods, properties and other members as you see fit. Each of these classes must be prefaced with Imports statements for Microsoft.SqlServer.DataMining.PluginAlgorithms and System.Runtime.InteropServices; some sources also include System.Collections.Generic and System.Text, and although I haven’t seen any harm come from leaving them out, there’s always a first time. Each of these three classes must inherit the respective class type within Microsoft.SqlServer.DataMining.PluginAlgorithms, of course. Normally, you would also include an Implements Microsoft.SqlServer.DataMining.PluginAlgorithms.ICaseProcessor clause in the class declaration for AlgorithmBase, which is not mandatory but makes it possible to use the ProcessCase method. AlgorithmMetadataBase is the most difficult one to declare, since it contains the three lines of COM gobbledygook in between the angle brackets depicted in Figure 1. All that does is mark the .Net class for COM interop, identify the type of AlgorithmBase associated with it and assign the class GUID that will identify it in the registry, which in this case is defined as a constant in the #Region block. Some old tutorials on COM interop say that you also need to include an InterfaceID and EventsID in the class declaration, but this is not the case with SSDM plug-in projects.

Figure 1: A Sample AlgorithmMetadataBase Declaration

Imports Microsoft.SqlServer.DataMining.PluginAlgorithms

Imports System.Runtime.InteropServices

Imports System.Collections.Generic

Imports System.Text

Namespace PluginProject

<ComVisible(True)>

<ComClass(AlgorithmMetadataBase.ClassId)>

<MiningAlgorithmClass(GetType(MyAlgorithm))>

Public Class AlgorithmMetadataBase

Inherits Microsoft.SqlServer.DataMining.PluginAlgorithms.AlgorithmMetadataBase

#Region "COMRegion"

Public Const ClassId As String = "06da68d6-4ef0-4cea-b4dd-1a7c62801ed2"

#End Region

Figure 2: A Sample AlgorithmBase Declaration

Imports Microsoft.SqlServer.DataMining.PluginAlgorithms

Namespace PluginProject

Public Class MyAlgorithm

Inherits Microsoft.SqlServer.DataMining.PluginAlgorithms.AlgorithmBase

Implements Microsoft.SqlServer.DataMining.PluginAlgorithms.ICaseProcessor

Figure 3: A Sample AlgorithmNavigationBase Declaration

Imports Microsoft.SqlServer.DataMining.PluginAlgorithms

Namespace PluginProject

Public Class AlgorithmNavigator

Inherits Microsoft.SqlServer.DataMining.PluginAlgorithms.AlgorithmNavigationBase

…………In order to work with the SSDM plug-in object model, the SSDMPlugIn.chm help file included in the same folder as Crivat’s .doc will have to become your constant companion. It mentions other interfaces, classes and class members that you can implement in COM but which are taken care of behind the scenes in .Net, so there’s no sense in even mentioning the many objects that are marked with such warnings as “do not call this function directly in your plug-in.” Once you reference the DMPluginWrapper.dll and import the Microsoft.SqlServer.DataMining.PluginAlgorithms namespace, Intellisense and the Object Browser will also provide invaluable clues about how to work with the object model. Crivat’s .doc file is likewise indispensable in understanding the plug-in classes. Unlike with many other native classes in the .Net architecture, there is no single definitive guide or diagram available on sites like MSDN that summarizes the plug-in object model succinctly, so you’ll have to rely on all of these sources. I’m still an amateur, fumbling my way through the object model myself, but I’ll try to pass on what little I know in the hopes that it may save other users some time and energy.

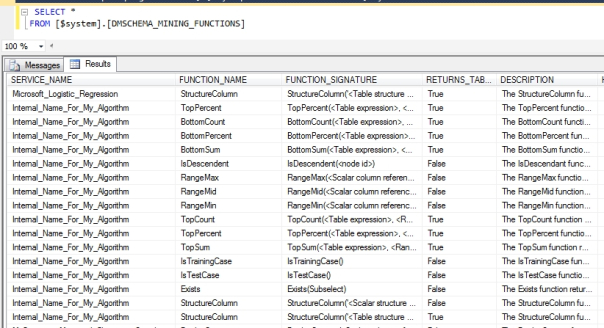

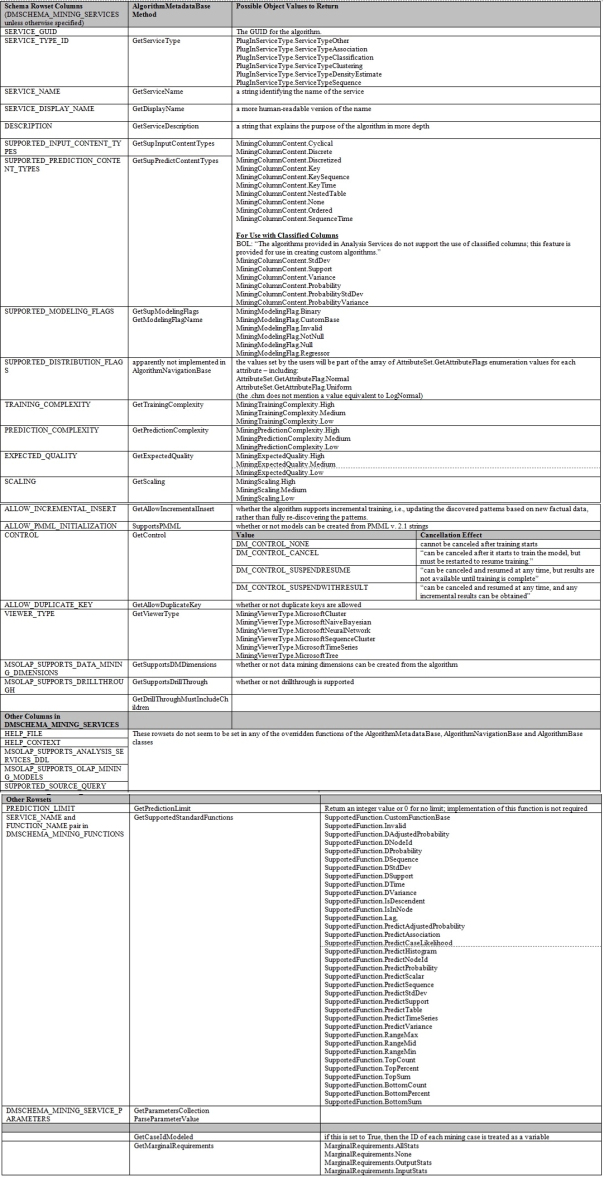

…………The most helpful advice I can give is to look at it from this perspective: whether we’re talking about mining model properties, the NODE_DISTRIBUTION table, node names, or even the metadata returned by schema rowsets (i.e., roughly equivalent to relational DMVs), pretty much every type of data we’ve seen associated with SSDM in previous tutorials must be specified somewhere in these three mandatory classes. Most of their methods are marked MustOverride and are designed to supply a specific type of data associated with the model, which sharply reduces the complexity of the development process. For example, AlgorithmMetadataBase consists mainly of quite simple methods that return values equivalent to properties associated with an algorithm. As depicted in Figure 4, more than a dozen methods map directly to columns in the DMSCHEMA_MINING_SERVICES schema rowset we covered in an earlier tutorial: GetServiceName, GetDisplayName, GetServiceDescription, GetServiceType, GetExpectedQuality, GetPredictionComplexity, GetTrainingComplexity, GetViewerType, GetSupportsDMDimensions, GetSupportsDrillThrough, GetDrillThroughMustIncludeChildren, GetSupInputContentTypes, GetSupPredictContentTypes, GetSupModelingFlags, GetModelingFlagName and GetPredictionLimit. It is a simple matter of mapping the appropriate data type or enumeration value to the result you want, then executing a Return statement. So far, I have not yet found a way to specify the values for the HELP_FILE, HELP_CONTEXT, MSOLAP_SUPPORTS_ANALYSIS_SERVICES_DDL, MSOLAP_SUPPORTS_OLAP_MINING_MODELS and SUPPORTED_SOURCE_QUERY columns in DMSCHEMA_MINING_SERVICES. For each Data Mining Extensions (DMX) language function you allow in GetSupportedStandardFunctions, pairs of values for your algorithm and the corresponding function will be added to the SERVICE_NAME and FUNCTION_NAME columns in the DMSCHEMA_MINING_FUNCTIONS rowset.
In future tutorials we’ll implement algorithm parameters in methods like GetParametersCollection and ParseParameterValue, which are depicted in the DMSCHEMA_MINING_SERVICE_PARAMETERS schema rowset. The value of the ALLOW_PMML_INITIALIZATION column in DMSCHEMA_MINING_SERVICES is specified in the SupportsPMML routine, but Predictive Model Markup Language (PMML) is a topic we’ll be putting off for a future article. Four of these routines are not mapped to any schema rowsets, because they perform special internal functions. CreateAlgorithm merely instantiates an instance of the AlgorithmBase, where all of your training and prediction takes place; GetCaseIdModeled indicates whether or not the ID of each mining case should be treated as a separate variable during that processing; GetMarginalRequirements indicates what sort of marginal statistics SSDM should precalculate for you, and ValidateAttributeSet merely provides a place to implement your own validation code to make sure the attributes of the model being input follow the format you want. Because there are so many methods in the AlgorithmMetadataBase class, the VB code for my implementation of that class is too long to post here, even with error-checking Try…Catch blocks and other extraneous clutter removed. It can be downloaded from this link though. Note how the values for the selection of DMSCHEMA_MINING_SERVICES columns in Figure 5 reflect the values I assigned in the routines of that class, including the algorithm name “Internal_Name_For_My_Algorithm.” The functions I specified in GetSupportedStandardFunctions are likewise reflected in the query of DMSCHEMA_MINING_FUNCTIONS in Figure 6.

Figure 4: Mapping DMSCHEMA_MINING_SERVICES and Other Schema Rowsets to AlgorithmMetadataBase Routines

Figures 5 and 6: Two Schema Rowsets for My Algorithm

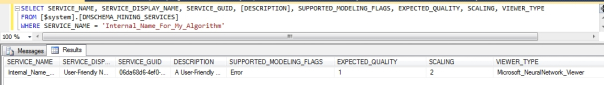

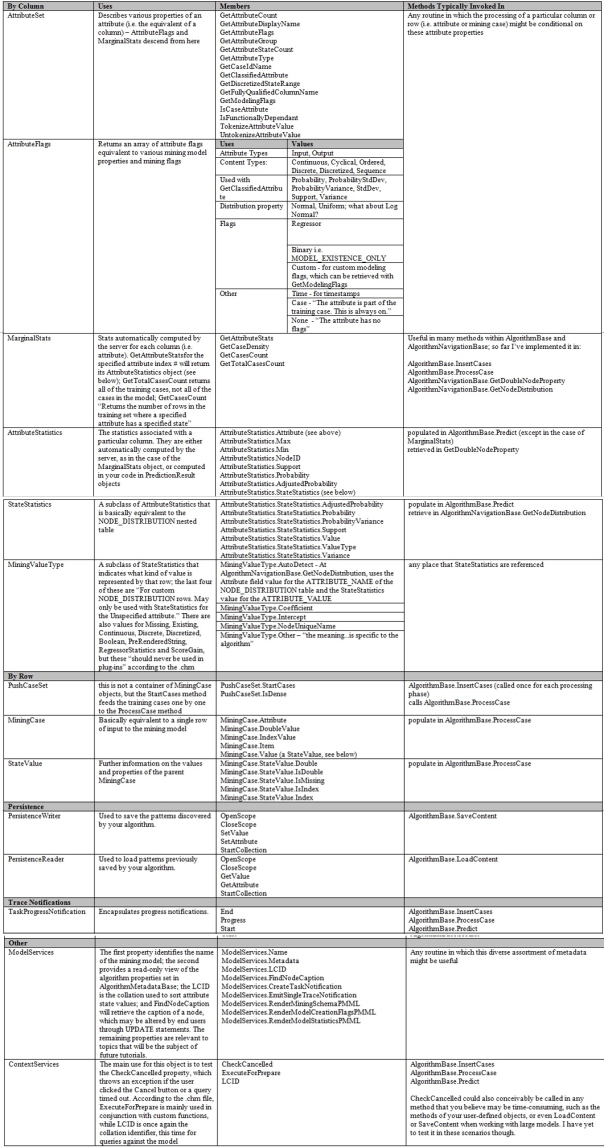

…………It is not even necessary to reference the most common classes in the plug-in object model within the methods of AlgorithmMetadataBase. Figure 7 depicts the objects you work with most frequently in AlgorithmNavigationBase and AlgorithmBase, with the exception of some like PMMLWriter, MiningFunctionAttribute, MiningParameter, MiningTableColumnRefAttribute, FlagAttribute, ClusterMembershipInfo, PredictionResult and AttributeGroup, which we will delay for later tutorials in order to reduce the amount of information glut we have to deal with this week. The data fed into the mining model can either be considered vertically, in terms of columns (i.e. attributes), or horizontally, in terms of rows (i.e. mining cases). At the apex of the object model pertaining to attributes, we have the AttributeSet object, which provides a lot of basic properties of a particular column or some pertaining to all columns, like GetAttributeCount. GetAttributeFlags returns an AttributeFlags object which provides other column properties readers will be familiar with if they have been following this series, such as whether it is an Input or Output column (or both), the Content type, the Distribution property, various mining flags and the like. The MarginalStats object for a particular column includes precalculated statistics for that attribute such as the training case count, as well as an AttributeStatistics object that maps to columns commonly seen in mining model results, such as the probability and support figures. AttributeStatistics objects may also contain a collection of StateStatistics which in turn map to the nested NODE_DISTRIBUTION table. The ValueType column in the NODE_DISTRIBUTION table likewise maps to the MiningValueType object, which is at the bottom of the attribute hierarchy. When looking at our data in terms of rows rather than columns, the PushCaseSet represents all of the training cases in the model, whereas the MiningCase object represents a single row of data.
The StateValue object can be used to retrieve further information about a particular case. The main use of ContextServices is the CheckCancelled method, which can be employed in the AlgorithmBase class to detect whether or not a user has tried to cancel training or prediction. ModelServices returns some basic information about a model, including some PMML properties that we will put off for a later date. The TaskProgressNotification object is designed to send messages about processing progress to traces, but this must also be deferred for now, because I have yet to solve the Access Violation exceptions I’ve been getting with them. PersistenceWriter and PersistenceReader are used in the SaveContent and LoadContent methods of AlgorithmBase to save patterns to disk and then call them back up as needed, such as when a user tries to navigate through mining model results in Visual Studio or SSMS. The values are stored in PersistenceTag enumeration values which can be cast to your liking. Statements to get or set values for these objects must be encapsulated in OpenScope and CloseScope statements. In my implementation of AlgorithmBase, it is a simple matter of iterating through the SkewnessKurtosisClass and DataPointClass arrays and saving or loading the results. When viewing my code, keep in mind that I implemented this much differently than Bogdan Crivat or any other tutorial writer I’ve found to date, who usually use the C# equivalent of For/Next or While loops to accomplish the same task. I personally prefer explicit array iteration for debugging purposes and am not yet aware of any potential performance penalties for implementing my loops in this way.

Figure 7: The Plug-In Object Model

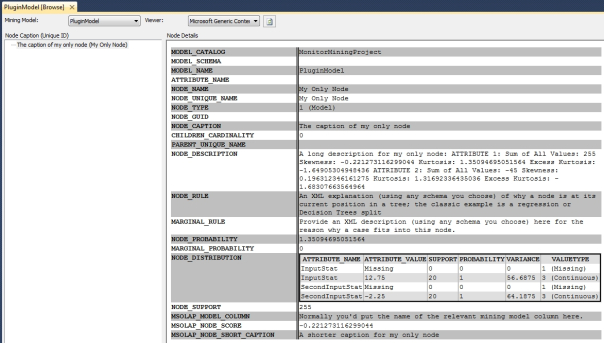

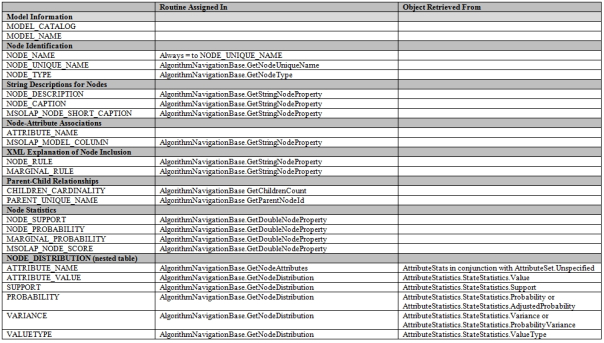

…………The fourth column in Figure 7 shows that certain values for these objects are calculated in the main processing methods of AlgorithmBase, like InsertCases, ProcessCase and Predict, then retrieved in certain methods of AlgorithmNavigationBase, particularly GetStringNodeProperty, GetDoubleNodeProperty and GetNodeDistribution. To simplify this further, let’s map the object model in Figure 7 in the opposite direction. Anyone who has followed this series or is otherwise familiar with SSDM will recognize that the leftmost column in Figure 8 contains the names of columns in the Generic Content Tree Viewer, which depicts SSDM results in their most basic format. For simplicity’s sake I left out MODEL_SCHEMA and NODE_GUID, which are always VT_NULL or NULL respectively, because as Books Online puts it, “this column is not supported by Analysis Services” – which of course begs a question I cannot answer, of why they are included in the SSDM object model at all. The most pertinent question is this: what do you want to appear in these columns of this standardized result format? Some of the values, like MSOLAP_NODE_COLUMN, NODE_RULE, MARGINAL_RULE, NODE_DESCRIPTION, NODE_CAPTION and MSOLAP_NODE_SHORT_CAPTION are set in AlgorithmNavigationBase.GetStringNodeProperty. For each node in your result set, SSDM will loop through that method several times until it has gone through several possible values of the NodeProperty enumeration; your job is to do a Select Case based on the NodeProperty value and return a string value that will be mapped to it. Likewise, SSDM will call GetDoubleNodeProperty several times for each node and feed it a different NodeProperty enumeration value each time; simply implement a Select Case to find out which value you’re assigning that time, then supply a double value equivalent to the NODE_SUPPORT, NODE_PROBABILITY, MARGINAL_PROBABILITY or MSOLAP_NODE_SCORE you want to appear in the results for that node. 
It is important to note that in my example of this class, I supplied the total of all column values, the skewness and the kurtosis for the first model column under NODE_SUPPORT, MSOLAP_NODE_SCORE and NODE_PROBABILITY respectively. This is not something you’d do in the real world; I just wanted to show how such values could be assigned. Other important result values can be set in methods like GetNodeUniqueName, GetNodeType and GetUniqueNameFromNodeID. I left my implementation of methods like GetParentCount, MoveToParent, GetParentNodeId, GetChildrenCount, GetChildNodeId, LocateNode, MoveToNextTree, ValidateNodeId and GetNodeAttributes as bare as possible, because their uses can best be illustrated with more complex node structures. For the sake of simplicity, I limited this week’s tutorial to a single node, but in a future article we’ll get into more depth about how to use these other methods in AlgorithmNavigationBase.
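The Select Case pattern described above can be sketched without the SDK. This is only an illustration of the dispatch logic, not my actual navigator code: SketchNodeProperty is a hypothetical stand-in for the NodeProperty enumeration, its member names are assumptions, and the two functions merely mimic the roles of GetStringNodeProperty and GetDoubleNodeProperty. The assignment of the total, skewness and kurtosis follows the order given in the paragraph above.

```vbnet
Imports System

Module NodePropertyDispatchSketch

    ' Hypothetical stand-in for the SDK's NodeProperty enumeration;
    ' these member names are assumptions made for illustration.
    Enum SketchNodeProperty
        Caption
        Description
        Support
        Probability
        Score
    End Enum

    ' Mimics a string property lookup: one call per property, per node.
    Function GetStringPropertySketch(prop As SketchNodeProperty) As String
        Select Case prop
            Case SketchNodeProperty.Caption
                Return "Single node"
            Case SketchNodeProperty.Description
                Return "Total, skewness and kurtosis for the first column"
            Case Else
                ' Properties this node does not supply.
                Return String.Empty
        End Select
    End Function

    ' Mimics a double property lookup, returning stats computed in training.
    Function GetDoublePropertySketch(prop As SketchNodeProperty, total As Double,
                                     skewness As Double, kurtosis As Double) As Double
        Select Case prop
            Case SketchNodeProperty.Support
                Return total
            Case SketchNodeProperty.Score
                Return skewness
            Case SketchNodeProperty.Probability
                Return kurtosis
            Case Else
                Return 0.0
        End Select
    End Function

    Sub Main()
        ' Simulate the server asking for each property of one node in turn.
        For Each prop As SketchNodeProperty In [Enum].GetValues(GetType(SketchNodeProperty))
            Console.WriteLine(prop.ToString() & " (string): " & GetStringPropertySketch(prop))
            Console.WriteLine(prop.ToString() & " (double): " &
                              GetDoublePropertySketch(prop, 20.0, 1.1, 2.8))
        Next
    End Sub

End Module
```

Returning an empty string or zero from the Case Else branch is a choice made for this sketch; check the SDK help file for what the real methods should return for properties a node does not supply.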

Figure 8: Mapping the Standard SSDM Result Format to AlgorithmNavigationBase and AlgorithmBase Methods

…………How are the values retrieved in AlgorithmNavigationBase set in the first place? The meat and potatoes of the SSDM plug-in architecture consists of three methods in AlgorithmBase which encapsulate training and prediction. For the sake of simplicity, we’ll defer discussion of the Predict method for a couple of weeks, but suffice it to say that this is where patterns are created and added to the output on the basis of DMX prediction queries. All three of these routines are appropriate places to check ContextServices for cancellation by the end user, or to emit trace messages with TaskProgressNotification objects. Model training occurs in InsertCases, which is called one time only between calls to the Initialize method and either SaveContent or GetNodeIDsForCase; Crivat recommends putting logic dependent on the training parameters at the beginning of this routine. That doesn’t stop you, however, from iterating over the case set several times within this routine, so many plug-in developers implement several phases of training at this point. I split mine into two phases, beginning with one in which I populate the SkewnessKurtosisClass objects for each attribute with certain global stats that are needed for computing the skewness and kurtosis, such as the variance. Then I call CaseSet.StartCases(Me), which calls ProcessCase one time for each row of input in the mining model. For each row within ProcessCase, I retrieve the value of each column in my model and add it to the DataPointClass array, then detect whether or not it is equal to the maximum value for that column and set IsEqualToMaxSupport accordingly. Just to show how cumulative statistics can be computed, I also added a running total for each attribute in this routine.
After ProcessCase is finished iterating over the whole case set and SSDM returns to the remainder of the InsertCases method, it then enters a post-process phase, in which I perform the calculations of skewness, kurtosis and the like by feeding the SkewnessKurtosisClass the whole DataPointClass array for that attribute. There may be much more efficient ways of implementing this, but my object for the time being was just to get some sort of results back from SSDM, given that the most difficult aspect of plug-in development is next week’s topic, deployment and debugging.
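The flow of those phases can be sketched in plain VB without the SDK. Everything here is a hypothetical stand-in: ISketchCaseProcessor plays the role of ICaseProcessor, StartCasesSketch plays the role of StartCases, and the mean and variance passed in stand for the marginal stats SSDM precalculates. It handles a single column and only computes skewness, to keep the sketch short.

```vbnet
Imports System
Imports System.Collections.Generic

' Hypothetical stand-in for the callback interface the trainer implements.
Interface ISketchCaseProcessor
    Sub ProcessCaseSketch(caseId As Long, row As Double())
End Interface

Class SketchTrainer
    Implements ISketchCaseProcessor

    Private ReadOnly _points As New List(Of Double)()
    Public RunningTotal As Double = 0.0
    Public Mean As Double
    Public StdDev As Double
    Public Skewness As Double

    ' Stand-in for the case set: invokes the processor once per row.
    Private Shared Sub StartCasesSketch(rows As Double()(), processor As ISketchCaseProcessor)
        For caseId As Integer = 0 To rows.Length - 1
            processor.ProcessCaseSketch(caseId, rows(caseId))
        Next
    End Sub

    ' Row-by-row phase: store the first column's value and keep a running total.
    Public Sub ProcessCaseSketch(caseId As Long, row As Double()) _
        Implements ISketchCaseProcessor.ProcessCaseSketch
        _points.Add(row(0))
        RunningTotal += row(0)
    End Sub

    Public Sub InsertCasesSketch(rows As Double()(), precalcMean As Double,
                                 precalcVariance As Double)
        ' Phase 1: capture the global stats needed later.
        Mean = precalcMean
        StdDev = Math.Sqrt(precalcVariance)

        ' Phase 2: visit every case once through the callback.
        StartCasesSketch(rows, Me)

        ' Post-process phase: compute skewness from the stored data points.
        Dim sumCubes As Double = 0.0
        For Each v As Double In _points
            sumCubes += Math.Pow(v - Mean, 3)
        Next
        Skewness = (sumCubes / _points.Count) / Math.Pow(StdDev, 3)
    End Sub
End Class

Module TrainingPhasesSketch
    Sub Main()
        ' Five single-column dummy cases with mean 4 and biased variance 10.
        Dim rows As Double()() = {New Double() {1.0}, New Double() {2.0},
                                  New Double() {3.0}, New Double() {4.0},
                                  New Double() {10.0}}
        Dim trainer As New SketchTrainer()
        trainer.InsertCasesSketch(rows, 4.0, 10.0)
        Console.WriteLine("Running total: " & trainer.RunningTotal)
        Console.WriteLine("Skewness: " & trainer.Skewness)
    End Sub
End Module
```

The point of the sketch is the ordering: global stats first, one pass over the cases second, and calculations that need the whole column last.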

…………Although training is implemented in just two routines, the ways in which you can flexibly implement them are practically infinite. You can call ProcessCase more than once, or not at all, or nest it within a call to itself. You can create separate phases within InsertCases that do not call ProcessCase, as I did in my example. One of the things that began to dawn on me as I rejigged my InsertCases and ProcessCase methods is that the correct choice of implementations will be dictated in large part by the scope of the data itself. Think of the dilemma as a Cartesian product of comparing different attributes, cases and particular states to each other, or in relational lingo, columns, rows and states. It is critical to identify ahead of time what combination of comparisons you’re making in your algorithm. Do you need to know how the marginal stats for Attribute A compare against Attribute B? If so, then it’s probably more apt to put that code in InsertCases, before calling ProcessCase. Pretty much anytime you need to compare the value of any row against a global stat, you’re going to want to compute that global stat early in InsertCases, before row-by-row processing (row by agonizing row, or RBAR?) in ProcessCase. On the other hand, if you need to compare a particular row value against some cumulative value for that row, it is probably more appropriate to calculate the cumulatives one row at a time in ProcessCase, then perhaps compare the final value against the value of a particular row in another iteration of ProcessCase. Fortunately, SSDM precalculates some cumulatives and global stats for you, such as the mean and biased variances for each attribute, as long as you returned an All value from AlgorithmMetadataBase.GetMarginalRequirements.
Your algorithm may need to make comparisons between rows but within the same attribute, within the same row across different attributes, or reference histograms of particular states of an attribute, or make even more complex calculations involving cumulatives and the like. You could conceivably combine all of these permutations together and perform calculations based on different rows, across different states of different attributes, which in turn depend upon cumulatives, precalculated marginal stats or more complex global stats. Once you’ve made those determinations, another factor you need to be cognizant of is how to retrieve the upper and lower bounds of the arrays you’ll be dealing with, if you choose to implement your plug-ins with explicit iterators rather than While loops and the like. Some of the most common iterators you’ll see are StateStatistics().Count, AttributeSet.GetAttributeStateCount, AttributeSet.GetAttributeCount, MarginalStats.GetTotalCasesCount and MarginalStats.GetCasesCount, some of which must be supplied the appropriate index numbers. Much of the code in my implementation of AlgorithmBase revolves around iterating through the attributes, mining cases, attribute statistics and other such objects and setting or getting values appropriately. It also contains a trivial method called GetNavigator to call an instance of AlgorithmNavigationBase, which in turn has a class-scoped reference to the AlgorithmBase, plus an iterator for the CurrentNode.

…………It is still premature to get into an in-depth discussion of more complex node structures, so I’ll defer discussion of certain other methods in AlgorithmNavigationBase. In future tutorials on more advanced topics like algorithm parameters, custom mining functions, feature selection and PMML, we’ll implement some optional members of these three classes, like GetNodeIDsForCase, GetAllFeatureSelectionInfo, GetAttributeFeatureSelectionInfo, HasFeatureSelection, LoadPMMLContent and RenderPMMLContent. As mentioned earlier, the handful of tutorials I’ve found to date on the Internet have been implementations of Clustering, so I’ll refer anyone interested in other optional clustering-specific methods like ClusterMembership and CaseLikelihood to those tutorials, particularly Crivat’s and the Visual Numerics .pdf. Writing a plug-in is not trivial, but it is not as difficult as it may seem either; nevertheless, the material is thick enough that I needed to break up this tail end of the Rickety series into bite-sized chunks. For that reason, I will also put off discussion of the Predict method within AlgorithmBase, because it is possible for SSDM to at least return training statistics without it. I still have plenty to learn about the object model and every other aspect of SSDM plug-ins, but one of the leading reasons I’ll delay discussion of important topics like prediction and navigation is that debugging and deployment are the more difficult aspects of writing custom algorithms. It took me a long time to climb that mountain to get the simple results depicted in Figure 9 on my screen, after processing a two-column model with 20 rows of dummy data. Remember, I substituted a cumulative total, the skewness and kurtosis from the first column for the NODE_SUPPORT, NODE_PROBABILITY and MSOLAP_NODE_SCORE, just to show how that can be done.
The calculations for skewness and kurtosis would be accurate, except for the fact that SSDM uses the biased method of variance calculation; I could have added extra steps to correct this, but didn’t want to complicate this tutorial series any further. I don’t want to turn anyone’s brain to mush unnecessarily, because readers will need fresh grey matter to get through the next mistutorial, on the many pitfalls data miners may stumble over when debugging and deploying custom algorithms. This is really the summit of the whole series as far as difficulty goes, after which we start descending back to easier tasks until we wrap up on the topic of writing data mining viewers.
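For anyone who wants to make that correction, the extra step for the variance itself is small. The following is a minimal sketch with hypothetical names, assuming the precalculated variance divides the sum of squared deviations by the case count; multiplying by n / (n - 1) converts it to the unbiased sample variance. It corrects the variance only and does not reproduce any further sample-size adjustments that particular skewness or kurtosis formulas may apply.

```vbnet
Imports System

Module VarianceCorrectionSketch

    ' Converts a biased (divide by n) variance into the unbiased
    ' (divide by n - 1) sample variance.
    Function UnbiasedFromBiased(biasedVariance As Double, caseCount As Integer) As Double
        If caseCount < 2 Then
            Throw New ArgumentException("At least two cases are needed.", "caseCount")
        End If
        Return biasedVariance * caseCount / (caseCount - 1)
    End Function

    Sub Main()
        ' Dummy figures: a biased variance of 10 over 5 cases.
        Dim corrected As Double = UnbiasedFromBiased(10.0, 5)
        Console.WriteLine("Unbiased variance: " & corrected)
        Console.WriteLine("Unbiased standard deviation: " & Math.Sqrt(corrected))
    End Sub

End Module
```

With only 20 rows, as in my dummy model, the factor is 20 / 19, so the difference is visible but modest; it shrinks as the case count grows.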

Figure 9: My First Plug-In Results, for a Single Node