by Steve Bolton

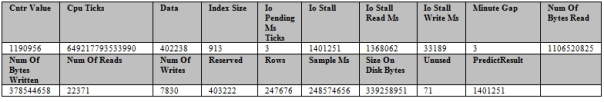

In the last two installments of this series of amateur self-tutorials on SQL Server Data Mining (SSDM), I discussed how to perform Data Definition Language (DDL) and Data Manipulation Language (DML) tasks with Data Mining Expressions (DMX), the SQL-like language used to administer SSDM. These topics were easier to explain and less critical to know than the material covered in previous posts, like the installments on SSDM’s nine algorithms; furthermore, the syntax for both operations is far simpler and serves far fewer purposes than DDL and DML with T-SQL and Multidimensional Expressions (MDX), the languages used for relational tables and OLAP cubes in SQL Server. Much of the functionality DMX provides can also be performed faster in the GUI of SQL Server Data Tools (SSDT) and is thus only useful in certain limited scenarios. This is less true, however, of prediction queries, which allow users to make calculations based on the patterns contained in a previously trained mining model, sometimes by feeding it sets of input values that don’t exist in the model. It is often more difficult or even impossible to perform the same tasks without writing DMX code, which makes prediction queries the most important component of the language. We can perform some of the same tasks using the Mining Model Prediction tab in SSDT, which isn’t difficult to understand if you’ve already used the tools that SSDT and Visual Studio provide to graphically create tables and views instead of coding them by hand with T-SQL. Figure 1 is a screenshot of the tab, in which a few columns of the LRDenormalizedView1Model mining model we used previously in this series are selected in the table to the left and then appear in the grid at the bottom; to see the predicted values when compared against the set of cases selected to the right, simply right-click the tab surface and select the Result menu item. To view the DMX query the tool uses to create the prediction, select Query.
The rest is fairly self-explanatory, except for the Singleton Query menu item, which requires an introduction to the various types of DMX prediction queries. Like equivalent graphical tools that generate T-SQL to create tables and views, the Mining Model Prediction Tab is awkward and deceptively simplistic; it seems like it ought to save you the trouble of writing DMX code, but often does not. As discussed in previous posts, the use cases for DML and DDL in DMX are quite limited because many of the same tasks can be performed more efficiently through the GUI, but prediction queries (like stored procedures and views in T-SQL) are really the one part of DMX that can typically be more efficiently coded by hand.

Figure 1: The Mining Model Prediction Tab

The equivalent functionality in DMX is provided by a couple of interdependent clauses and functions which are often used in concert. The first of these is the prediction join, which employs syntax elements similar to those of T-SQL joins to compare the patterns stored in a mining model against a set, which can come from explicitly defined values, the results of a sub-select, an MDX query or the data returned by an OpenQuery statement. To test a particular case (i.e., a single row of values) against your model, you supply a FROM clause coupled with the mining model name, followed by a PREDICTION JOIN clause with a list of explicit hand-coded values corresponding to the columns in the model. An example of such a “singleton query” can be found in Figure 4, in which we see what output is returned from a mining model we used in A Rickety Stairway to SQL Server Data Mining, Algorithm 5: The Neural Network Algorithm, when it is supplied with specific values for the DatabaseID and FileHandleID columns. In that query I used the NATURAL PREDICTION JOIN clause, which automatically correlates the columns from the mining model and the joined set together based on their names. If the keyword NATURAL is left out, then you must manually correlate each set of columns together using ON clauses in much the same manner as in a T-SQL query, which in Figure 4 would mean tacking on syntax like this: ON [NNDenormalizedView1Model10].[Database ID] = T2.[Database ID] AND [NNDenormalizedView1Model10].[File Handle ID] = T2.[File Handle ID]. An “unnatural” prediction join of this kind can be awkward to write when you’re dealing with numerous columns, but it is mandatory when the column names don’t match, which often occurs when the joined set data comes from an OpenQuery of an external data source.
Selecting from a mining model without a prediction join clause (as depicted in Figure 3) amounts to what is called an empty prediction join, which returns the most likely values based on patterns already stored in a model. Like the OpenQuery syntax borrowed from T-SQL, the MDX statements that can be used to specify the joined set are beyond the scope of this tutorial series. We’ve already covered sub-selects and most of the syntax elements pertinent to SELECT statements, such as TOP, WHERE and ORDER BY, in the last two tutorials, so I won’t belabor the point. Keep in mind, however, that when performing prediction queries you will often need to use the FLATTENED keyword, because many of the prediction functions that are often used alongside prediction joins return nested tables.
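To make the ON syntax described above a little more concrete, here is a minimal sketch of the “unnatural” version of the singleton query, followed by a variant that pulls its input set from OpenQuery. The model and column names are taken from the examples in this post, but the data source, relational table and source column names in the second query are hypothetical placeholders, and neither statement has been run against the original models.

```sql
-- Singleton prediction join with explicit ON mappings instead of NATURAL
SELECT PredictProbability([Io Pending Ms Ticks]) AS ProbabilityResult
FROM [NNDenormalizedView1Model10]
PREDICTION JOIN
(SELECT 7 AS [Database ID], 4 AS [File Handle ID]) AS T2
ON [NNDenormalizedView1Model10].[Database ID] = T2.[Database ID]
AND [NNDenormalizedView1Model10].[File Handle ID] = T2.[File Handle ID]

-- Same idea with an external source whose column names differ from the model's.
-- [Hypothetical Data Source], dbo.HypotheticalIOTable, DbID and FileID are assumed names.
SELECT T2.DbID, T2.FileID,
PredictProbability([Io Pending Ms Ticks]) AS ProbabilityResult
FROM [NNDenormalizedView1Model10]
PREDICTION JOIN
OPENQUERY([Hypothetical Data Source],
'SELECT DbID, FileID FROM dbo.HypotheticalIOTable') AS T2
ON [NNDenormalizedView1Model10].[Database ID] = T2.DbID
AND [NNDenormalizedView1Model10].[File Handle ID] = T2.FileID
```

The two statements are meant to be executed one at a time. The second is the case where NATURAL cannot help, since no column in the source shares a name with a model column.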

Figure 2: The PredictNodeID Function

SELECT PredictNodeID([Io Stall Read Ms])

FROM [LRDenormalizedView1Model]

SELECT ATTRIBUTE_NAME, NODE_CAPTION, NODE_SUPPORT

FROM [LRDenormalizedView1Model].CONTENT

WHERE NODE_UNIQUE_NAME = '00000000e'

| ATTRIBUTE_NAME | NODE_CAPTION | NODE_SUPPORT |
| --- | --- | --- |
| Io Stall Read Ms | All | 99456 |

Prediction functions can be applied to columns in a mining model without the use of prediction joins, as depicted in Figures 2 and 3, but they are often used in tandem, as seen in Figure 4. The algorithm used in a mining model determines which subset of prediction functions can be used against its data; for example, PredictNodeID is not available for the Logistic Regression and the Neural Network algorithms. It is not strictly a prediction function, since it merely returns a node name rather than predicting a value based on patterns in a model’s data, but I’ve included it here for the sake of clarity, given that it has the word Predict in its name. The first query in Figure 2 returns the NODE_UNIQUE_NAME value 00000000e, which you can use to look up information for a specific node. In the second query in Figure 2, the node identifier is used to restrict the results to the NODE_SUPPORT, ATTRIBUTE_NAME and NODE_CAPTION for a single node. You could also use the node identifier to retrieve important stats about a node from its nested NODE_DISTRIBUTION table, but this would imply the use of the FLATTENED keyword to denormalize the multiple rows it returns.
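The NODE_DISTRIBUTION lookup mentioned above might look something like the following sketch, which reuses the node identifier returned by PredictNodeID. The column list is the standard set found in the nested NODE_DISTRIBUTION table of the SSDM content schema; the query has not been run against the original model, so treat it as an illustration rather than verified output.

```sql
-- FLATTENED turns each nested NODE_DISTRIBUTION row into its own result row
SELECT FLATTENED NODE_UNIQUE_NAME,
(SELECT ATTRIBUTE_NAME, ATTRIBUTE_VALUE, [SUPPORT], [PROBABILITY], VALUETYPE
FROM NODE_DISTRIBUTION) AS T1
FROM [LRDenormalizedView1Model].CONTENT
WHERE NODE_UNIQUE_NAME = '00000000e'
```

Without FLATTENED, the nested table comes back as a single hierarchical column, which many client tools cannot display.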

Figure 3: 5 DMX Functions in an Empty Prediction Join

SELECT PredictSupport([Io Pending Ms Ticks], INCLUDE_NULL) AS SupportResult,

PredictProbability([Io Pending Ms Ticks], INCLUDE_NULL) AS ProbabilityResult,

PredictAdjustedProbability([Io Pending Ms Ticks], INCLUDE_NULL) AS AdjustedProbabilityResult,

PredictStdev([Io Pending Ms Ticks]) AS StDevResult,

PredictVariance([Io Pending Ms Ticks]) AS VarianceResult

FROM [NNDenormalizedView1Model10]

| SupportResult | ProbabilityResult | AdjustedProbabilityResult | StDevResult | VarianceResult |
| --- | --- | --- | --- | --- |
| 99456 | 1 | 0 | 9.31134197258688 | 86.7010893304582 |

Figure 4: 5 DMX Functions in a Singleton Natural Prediction Join

SELECT PredictSupport([Io Pending Ms Ticks], INCLUDE_NULL) AS SupportResult,

PredictProbability([Io Pending Ms Ticks], INCLUDE_NULL) AS ProbabilityResult,

PredictAdjustedProbability([Io Pending Ms Ticks], INCLUDE_NULL) AS AdjustedProbabilityResult,

PredictStdev([Io Pending Ms Ticks]) AS StDevResult,

PredictVariance([Io Pending Ms Ticks]) AS VarianceResult

FROM [NNDenormalizedView1Model10]

NATURAL PREDICTION JOIN

(SELECT 7 AS [Database ID], 4 AS [File Handle ID]) AS T2

| SupportResult | ProbabilityResult | AdjustedProbabilityResult | StDevResult | VarianceResult |
| --- | --- | --- | --- | --- |
| 9999.00019996001 | 0.999900019996001 | 0 | 1.36773308691065 | 1.87069379703014 |

SSDM also provides the five functions depicted in the two figures above to return statistical predictions for some fairly simple, common measures we’ve used throughout this series, such as support (the number of cases in our mining model, i.e. rows of data), standard deviation, variance and probability. The only unique one is AdjustedProbability, which “is useful in associative predictions because it penalizes items that are too popular in favor of less-popular items.” I have yet to see the exact formula by which this measure is calculated, though, and vaguely recall reading somewhere that it is proprietary Microsoft code, so it may not be publicly available. As I’ve tried to stress throughout this series, however, detailed knowledge of the underlying equations is not necessary to derive substantial benefits from SSDM, any more than it is necessary to design your own combustion engine before driving a car. The most important thing to keep in mind is the concept of what AdjustedProbability is used for, so that if you encounter a scenario where you suspect that values with excessively high counts are obscuring underlying patterns in your data, it might be a good idea to apply this function and see what pops out.

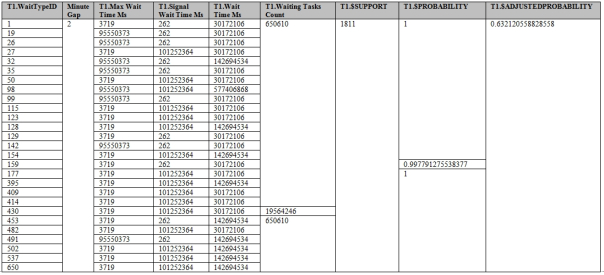

Figure 5: PredictHistogram Usage on a Linear Regression Mining Model

SELECT FLATTENED PredictHistogram([Io Stall Write Ms]) AS T1

FROM [LRDenormalizedView1Model]

| T1.Io Stall Write Ms | T1.$SUPPORT | T1.$PROBABILITY | T1.$ADJUSTEDPROBABILITY | T1.$VARIANCE | T1.$STDEV |
| --- | --- | --- | --- | --- | --- |
| 32921 | 99456 | 0.999989945504635 | 0 | 52333897.9797561 | 7234.21716426567 |
|  | 0 | 1.00544953648776E-05 | 1.00544953648776E-05 | 0 | 0 |

These statistical prediction functions are available for every algorithm except Time Series, which only supports PredictStDev and PredictVariance. Time Series is also the only algorithm which does not support PredictHistogram, contrary to Books Online (BOL), which states that it “can be used with all algorithm types except the Microsoft Association algorithm.” I verified this manually by executing the function on Time Series and Association Rules models, the first of which resulted in an “Error (Data mining): The PREDICTHISTOGRAM function cannot be used in the context at line 1, column 18.” The second returned results similar to those in Figure 5, which depicts the histogram returned for one of our old Linear Regression mining models used in A Rickety Stairway to SQL Server Data Mining, Algorithm 2: Linear Regression. The documentation for PredictHistogram is a bit puzzling, however, because it mentions phantom $ProbabilityVariance and $ProbabilityStdev columns which I have yet to encounter, but which are apparently meaningless anyways, given that they’re both accompanied by disclaimers like these: “Microsoft data mining algorithms do not support $ProbabilityVariance. This column always contains 0 for Microsoft algorithms.” The function does return an StDev column though, as depicted in Figure 5, along with the predicted value and columns for familiar measures like $Support, $Probability and $AdjustedProbability. To muddy the waters even further, the same page of Microsoft documentation states that the function should return $Cluster, $Distance and $Probability columns with mining models that use the Clustering algorithm, but it simply returns the same columns as in Figure 5. All of the 17 clustering models we used in A Rickety Stairway to SQL Server Data Mining, Algorithm 7: Clustering return the same six columns.
If we want to return this cluster information, we have to use one of the four functions used exclusively with Clustering, which are depicted in Figure 6. The Cluster() function tells us which cluster the values in our prediction join are most likely to fall into, while ClusterProbability and ClusterDistance tell us the probability of the input set belonging to a particular cluster or its distance from it. If no values are supplied to those functions, then the comparisons are made against the most likely clusters. They are not strictly prediction functions, because Clustering is rarely used for predictions, but I’ve included them here because they serve roughly analogous purposes. PredictCaseLikelihood is prefaced with the word Predict because it is a prediction function in a stricter sense. It provides the statistical likelihood that the input set will belong to a particular cluster, which is subtly different from ClusterProbability. Measures of likelihood make an inference based on observations made on actual data, while probabilities are calculated without reference to observations of a sample.[ii] The NORMALIZED keyword can be used with PredictCaseLikelihood to specify that the results are adjusted to an even scale, which is the default behavior, or NONNORMALIZED to return raw unadjusted calculations. The term “normalization” in the data mining literature most often refers to a process that adjusts disparate scales to make them comparable, not to any concepts relevant to relational database theory.

Figure 6: Clustering-Specific Functions

SELECT Cluster () AS T1Cluster,

ClusterProbability ('Cluster 2') AS T1ClusterProbability,

ClusterDistance () AS T1ClusterDistance,

PredictCaseLikelihood(NORMALIZED) AS T1DefaultNormalizedLikelihood,

PredictCaseLikelihood(NONNORMALIZED) AS T2RawLikelihood

FROM [ClusteringDenormalizedView1Model13]

NATURAL PREDICTION JOIN

(SELECT 7 AS [Database ID], 4 AS [File Handle ID]) AS T2

| T1Cluster | T1ClusterProbability | T1ClusterDistance | T1DefaultNormalizedLikelihood | T2RawLikelihood |
| --- | --- | --- | --- | --- |
| Cluster 5 | 0.14552979054638 | 0.776691246872147 | 0.000134777874081471 | 3.6216743774023E-144 |

All nine algorithms can make use of the polymorphic Predict function, but its behavior varies greatly depending on the data mining method selected and the type of value input to it. When used with the Time Series or Sequence Clustering algorithm, it becomes an alias for the PredictTimeSeries or PredictSequence functions respectively. In other cases it must be supplied with the name of a predictable column. If a simple Input column is supplied, you will receive an error message like this: “Error (Data mining): Only a predictable column (or a column that is related to a predictable column) can be referenced from the mining model in the context at line 3, column 15.” As discussed in depth in A Rickety Stairway to SQL Server Data Mining, Part 0.0: An Introduction to an Introduction, the Content type assigned to a column represents one of the most important distinctions in data mining. If it is set to a predictable column with a Content type of Table, then the Predict function is equivalent to PredictAssociation, which makes predictions on nested tables. This is primarily used for recommendation engines of the kind Association Rules is often used to build[iii], but it is not precisely the same as making a prediction on an Association Rules model, since it can be applied to nested tables in other algorithms that support them. Beware of the confusing documentation in Books Online (BOL), which says that it applies to “Classification algorithms and clustering algorithms that contain predictable nested tables. Classification algorithms include the Microsoft Decision Trees, Microsoft Naive Bayes, and Microsoft Neural Network algorithms.” This seems to imply that it can be used with neural nets, but it can’t be, since they don’t make use of predictable nested tables.
While we’re on the topic of Association Rules, it might be an opportune time to mention OPTIMIZED_PREDICTION_COUNT, which we deferred discussing in A Rickety Stairway to SQL Server Data Mining, Algorithm 6: Association Rules. This is not a parameter to a function, but an algorithm parameter which limits prediction functions to return only the specified number of results with Association Rules models, regardless of how many are requested by a prediction query. When the Predict function is used without a nested table on an Association Rules model, it operates much like the Predict function does with other algorithms, rather than PredictAssociation. A column supplied to the Predict function can be specified with the keywords EXCLUDE_NULL or INCLUDE_NULL, while a table takes the same keywords used with PredictAssociation: INCLUSIVE, EXCLUSIVE, INPUT_ONLY and INCLUDE_STATISTICS. The last of these returns two additional columns of stats, $Probability and $AdjustedProbability, which can also be supplied as parameters to order the results or limit them to the top n values for that column. Figure 7 provides a simple example of the Predict function applied to a column from one of the neural net models used previously in this series, while Figure 8 gives an example of PredictAssociation in action. Note that the first parameter supplied to it is the name of the predictable table, not a column within it; I initially made the mistake of specifying the table key, which leads to the confusing error message, “Only a nested table column with a KEY column inside is allowed in an association prediction function.”
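As a rough illustration of the ordering and top n parameters just described, the sketch below asks PredictAssociation for only the five items with the highest adjusted probability. The model and nested table names are the ones used elsewhere in this post, the count of 5 is an arbitrary choice, and the statement has not been run against the original model, so the exact output is an open question.

```sql
-- Order by $AdjustedProbability and keep only the top 5 predicted items
SELECT FLATTENED
PredictAssociation([DmOsWaitStats], INCLUDE_STATISTICS, $AdjustedProbability, 5) AS T1
FROM [ARDoubleNestedTableStructure2Model1]
```

Keep in mind that OPTIMIZED_PREDICTION_COUNT may cap the number of rows returned regardless of the count requested here.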

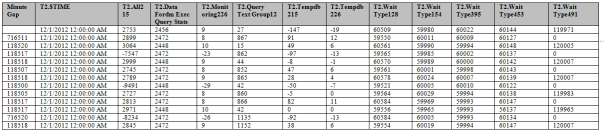

Figure 7: Use of the Plain Predict Statement on a Neural Net Model

SELECT *, Predict([Io Stall], INCLUDE_NULL) AS PredictResult

FROM [NNDenormalizedView1Model10]

Figure 8: The PredictAssociation Function

SELECT FLATTENED [Minute Gap],

(SELECT *

FROM PredictAssociation([DmOsWaitStats], $Probability, INCLUDE_STATISTICS) ) AS T1

FROM [ARDoubleNestedTableStructure2Model1]

The query above returned 673 values, one for each WaitTypeID, but I only included the most noteworthy values in the result table because almost all were identical to the first row. As you can see in the query text, there are no clauses to limit the results. Frankly, this is one of the points at which we slam into the limitations of DMX like a brick wall, for there are no programmatic means I know of to reference the columns of the FLATTENED table to restrict them with a WHERE clause. I therefore had to delete all of the redundant rows by hand when pasting them into Microsoft Word. As I have pointed out many times in this series, I am an amateur at this, so listen to any data mining professional if they contradict anything I’ve written here, but I have yet to find a means of limiting such queries without importing the results en masse into relational or OLAP tables first. Neither of the queries in Figure 9 will allow you to do this, since the first returns the error message, “Error (Data mining): The specified DMX column was not found in the context at line 6, column 7” and the second returns, “The dot expression is not allowed in the context at line 6, column 7. Use sub-SELECT instead.” The latter also occurs if you qualify the WaitTypeID column with T1 instead. Note that in the second query, the sub-select is given the alias T2, to prevent an error to the effect that “Subselects and subcubes cannot be queried in flattened format.”

Figure 9: Column Problems with Two PredictAssociation Queries

SELECT FLATTENED [Minute Gap],

(SELECT *

FROM PredictAssociation([DmOsWaitStats], $Probability, INCLUDE_STATISTICS)) AS T1

FROM [ARDoubleNestedTableStructure2Model1]

WHERE WaitTypeID = 1

SELECT *

FROM (SELECT FLATTENED PredictAssociation([DmOsWaitStats], $Probability, INCLUDE_STATISTICS) AS T1

FROM [ARDoubleNestedTableStructure2Model1]) AS T2

WHERE DmOsWaitStats.WaitTypeID = 1

The same problem unfortunately afflicts PredictSequence and PredictTimeSeries, which are otherwise among the most useful features in DMX. This is not surprising, given that the word “prediction” ordinarily connotes some reference to the future, while Sequence Clustering and Time Series both imply temporal ordering of some kind. If you recall from A Rickety Stairway to SQL Server Data Mining, Algorithm 8: Sequence Clustering, we found several clusters of queries that seemed to progress in a particular order during the collection phase for the data used throughout this series of tutorials, which was based on roughly three days of polling six IO-related Dynamic Management Views (DMVs) every minute using a SQL Server Integration Services (SSIS) job. Not surprisingly, query #339 was the most common one found using the Sequence Clustering algorithm, since it was part of the job that ran every minute during this period. That is probably why it is over-represented in Figure 10. The rest of the sequence revealed by PredictSequence was highly useful, however, because it seemed to identify a chain of queries that I mistakenly did not group together when examining my data manually. The prediction join singles out query #6, which I originally thought at a casual glance to be a nondescript background process, when the query text actually referred to a change tracking background procedure. Queries #310 and #272 in the results below were related to full-text indexing on the same database that change tracking was enabled on, which had little use during this period, so SSDM effectively clustered these queries together into a sequence I initially missed. In retrospect, I think I altered a few values in a text column at some point, which triggered change tracking and changes to the full-text indexing. QueryTextID #119 is a common Agent background job that was merely executed so frequently that it happened to be included in this cluster, like #339.
In the query below, I supplied the name of the nested table just like we would with PredictAssociation, along with two optional parameters to specify the beginning and ending steps in the sequence. Specifying just one number limits it to n number of steps. The NestedTableID column is the key column in the nested table, but I have no idea why it was included in the results but left blank. Other than this, the results might have been surprisingly useful in a real-world scenario. They would have also been much more difficult to extract from the data in the GUI, so we’ve definitely reached the point at which DMX becomes worthwhile to learn for particular scenarios.

Figure 10: PredictSequence

SELECT FLATTENED PredictSequence(QueryTextIDView,5, 15)

FROM [SQQueryTextIDPredictableModel15]

NATURAL PREDICTION JOIN

(SELECT 6 AS QueryTextID) AS T2

| Expression.$SEQUENCE | Expression.NestedTableID | Expression.QueryTextID |
| --- | --- | --- |
| 5 |  | 339 |
| 6 |  | 339 |
| 7 |  | 357 |
| 8 |  | 310 |
| 9 |  | 272 |
| 10 |  | 119 |
| 11 |  | 339 |
| 12 |  | 339 |
| 13 |  | 339 |
| 14 |  | 339 |
| 15 |  | 339 |

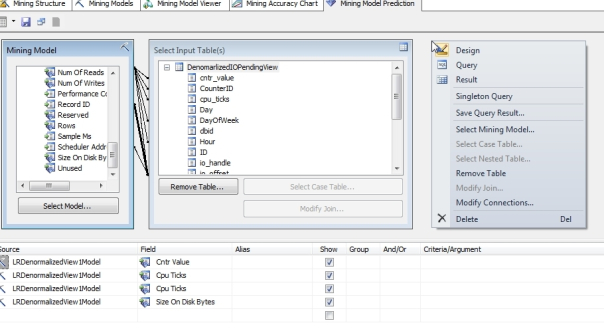

The results may have been useful, but they came at an increased cost in terms of processing time. It took just 13 seconds to process the model but 3:49 to run the PredictSequence query, during which time msmdsrv.exe ran on just one core. Performance was even more of an issue with the PredictTimeSeries query in Figure 11, which I had to cancel after 52 minutes. Selecting just one column did not reduce the processing time down to reasonable levels either. I deleted some of the extraneous columns manually to highlight only the most interesting results, which represent just the first handful of rows out of thousands returned by the query. The first parameter is the nested table name, followed by n number of steps to predict into the future, which defaults to 1. Specifying two numeric values as we did in PredictSequence has the same effect of limiting the prediction to a particular range of steps into the future; for example, you could make predictions for 15 days to 25 days ahead using 15, 25, if your Key Time measure is in terms of days. The REPLACE_MODEL_CASES clause can also be supplied to indicate that predictions should be made using the patterns stored in the model, but by applying them to different data points in the join set. EXTEND_MODEL_CASES works slightly differently, by tacking new data points onto the end of the data already included in the model; for example, you could use it to append another seven days of data to compare them against the data stored in the model without having to actually alter it. I left both of these clauses out and limited it to a single prediction step for both legibility and performance reasons, since I would have to manually supply values for all 67 columns in the join set. Keep in mind that you can also apply PredictTimeSeries to columns outside your nested table, such as MinuteGap in Figure 11.
If it is not applied to one of these columns or a nested table, you will receive this error: “Error (Data mining): Only a top-level column or a nested table column with a KEY TIME column inside can be used in a time series prediction function.”
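The range form of PredictTimeSeries described above can be sketched as follows, using the same model and nested table as Figure 11 but asking for steps 15 through 25 instead of a single step. This is an untested illustration: the step numbers are arbitrary, and given the running times reported in this post, it would probably need to be cancelled long before it finished on the original data.

```sql
-- Predict steps 15 through 25 ahead rather than the default single step
SELECT FLATTENED
(SELECT *
FROM PredictTimeSeries([TS View], 15, 25) AS T1) AS T2
FROM [TSColumnarModel2]
```

REPLACE_MODEL_CASES or EXTEND_MODEL_CASES would be added as a further parameter together with a prediction join that supplies the new data points, which is omitted here for the same legibility reasons mentioned above.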

Figure 11: PredictTimeSeries

SELECT FLATTENED [Minute Gap],

(SELECT *

FROM PredictTimeSeries([TS View], 1) AS T1) AS T2

FROM [TSColumnarModel2]

Once again, however, I had to delete columns and rows by hand after the query was finished because I couldn’t restrict them in the query with a WHERE clause. This is one of the major drawbacks of DMX, which is still in its infancy as a language. Given that it deals with such complex forms of analysis, you’d expect it to have an even richer set of capabilities to slice and dice data than T-SQL or MDX have, but they simply haven’t been added to the language yet. Until the day comes when DMX is augmented with some badly needed new functionality, we will usually be better off importing our mining data into relational tables and OLAP cubes whenever possible. This is particularly true of the nested tables returned by these prediction functions and SSDM’s complicated metadata format, which I explained in A Rickety Stairway to SQL Server Data Mining, Part 0.1: Data In, Data Out and in each individual post on the nine algorithms. As explained throughout the series, the common format is useful in that it allows us to collect apples and oranges that we ordinarily couldn’t compare in the same basket. On the other hand, the price to be paid is a rat’s nest of nested tables and columns whose meaning changes between algorithms, or even from one row to the next. The first instinct of someone trained in relational database theory is to normalize all of these nested structures and rid ourselves of all this redundancy and the overlapping meanings of attributes. SSDM provides a native means of importing its results into OLAP cubes in the form of data mining dimensions, which can’t be used with certain algorithms and still retains some of the limitations of this format. In next week’s tutorial, I’ll complement this method with some scripts to import your SSDM results directly into relational tables using a combination of DMX and T-SQL. From there, they can be built into new cubes of your own design, rather than using the format imposed by data mining dimensions.
This is the pinnacle of DMX, at which we find its primary use – which is to export the data it returns into tables and cubes for more efficient storage, retrieval and analysis.

[i] p. 121, 124, 571, MacLennan, Jamie; Tang, ZhaoHui and Crivat, Bogdan, 2009, Data Mining with Microsoft SQL Server 2008. Wiley Publishing: Indianapolis.

[ii] For an excellent discussion of this nuance, see the discussion titled “What is the difference between “likelihood” and “probability”?” at the CrossValidated website, available at http://stats.stackexchange.com/questions/2641/what-is-the-difference-between-likelihood-and-probability. Pay particular attention to the answer on Sept. 14, 2010 by the user named Thylacoleo.

[iii] p. 123, MacLennan, et al.