by Steve Bolton

As users become more familiar with software, they typically follow a progression that begins with learning the GUI, followed by how to perform the same functions programmatically, then perhaps becoming adept at writing custom code. Other instructional material on SQL Server Data Mining (SSDM), such as that found in Books Online (BOL) and Data Mining with Microsoft SQL Server 2008, the classic book on the subject written by former members of Microsoft’s Data Mining Team, take a more topical approach. For example, they discuss each algorithm together with the Data Mining Extensions (DMX) functions pertinent to them. My series of amateur self-tutorials is aimed more at the untapped market of SQL Server professionals with moderate to low skills in statistics, who could nonetheless be making productive use of the most under-utilized tools in SQL Server, even to support purely relational or Online Transaction Processing (OLTP) tasks. For that reason, we’ve covered the indispensable parts of SSDM first and are now moving on to the extras, which can be dispensed with but are helpful in certain scenarios. It would be difficult to get useful results out of SSDM without the minimal grounding covered in post 0.0, post 0.1 and post 0.2, as well as the individual discussions of the nine algorithms. You don’t need a doctorate in statistics to use SSDM successfully, any more than you need to write a dissertation on combustion engines to drive a car, but users should have at least a basic familiarity with how to set up projects, structures and mining models, differentiate between Content types, apply the right algorithm for the right job and have an inkling of how to decipher the numbers they return. It helps to understand the inner workings of the algorithms, but SSDM can be put to good use without interpreting a single equation, aside perhaps from the simple calculations used in Linear Regression. All of these basic topics are prerequisites for the more advanced subjects we will be dealing with in the remainder of this series, such as data mining dimensions, plugging in your own custom algorithms with Predictive Model Markup Language (PMML) and mining model validation. It also makes more sense for the class of users this series is aimed at to learn such prerequisites as viewing mining results the easy way, through SSDM’s many visualization tools, before moving on to manipulating the results programmatically. For these purposes, DMX should be considered an advanced topic. Since we’re climbing the rickety stairway of these tutorials (which I am entirely unqualified to write) in order of the ease, complexity and necessity of the topics, I will the first of at least three tutorials on DMX with a discussion of Data Definition Language (DDL) queries. This involves creating mining structures and models programmatically rather than through the SQL Server Data Tools (SSDT) GUI, which we’ve already covered in depth. Once we’ve taken care of this basic task, we can tackle more advanced discussions in coming weeks of how to use DMX to do Data Manipulation Language (DML) on the structures and models we’ve built.

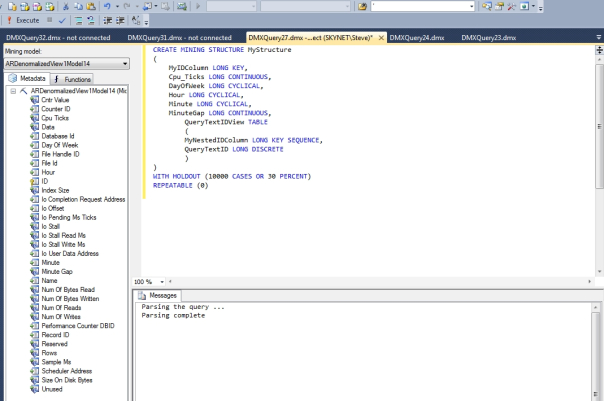

This is really a baby step on the one stair in our series that is least likely to creak. DMX is general is much simpler than T-SQL or Multidimensional Expressions (MDX), the languages used by relational databases and cubes respectively in SQL Server. That is especially true when it comes to DDL, because there are only two types of objects we can create, mining structures and mining models, and only three DDL statements we can apply to them, DROP, CREATE and ALTER. To make matters even simpler, we’ll get rid of one of those right off the bat: to delete a model, simply use the syntax DROP MINING MODEL MyMiningModel and to delete a structure, type DROP MINING STRUCTURE MyMiningStructure. That’s it. To simplify matters further, we won’t fuss over a variation of the CREATE statement geared towards PMML until that really advanced topic comes up for discussion at the tail of the end series, when our rickety stairway will practically be out of the attic and onto the roof. It looks something this, but neither the string nor the purpose of the PMML will make any sense until we’ve gotten to that subject: CREATE MINING MODEL MyMiningModel FROM PMML ‘MyPMMLString’. Before creating models of any kind, we would have to start by creating the parent mining structure. Figure 1 provides a visual example of what a typical structure creation looks like in SQL Server Management Studio (SSMS):

Figure 1: CREATE MINING STRUCTURE Example

To create a DMX query like the one above, simply click on the reddish DMX icon on the top bar, just above the Execute button (assuming of course, that you’ve set up your SSMS interface like mine). Simply connect via the login window that appears and you’ll find a list of available mining models in your default SQL Server Analysis Services (SSAS) database; on the left you’ll see that the last one I selected was named ARDenormalizedView1Model14, which we used in the tutorial on the Association Rules algorithm. That doesn’t have much to do with what’s going on in the query window, where I’m creating a structure identical to the one we used in the tutorial on Sequence Clustering. That algorithm demands a nested table, which makes it ideal to demonstrate how to include the TABLE type; the nested table in MyStructure above is named QueryTextIDView and it has two columns in parentheses, which are specified by the same syntax as that used for regular mining structure columns. All of the data types in this structure are either Key or Long, but SSDM also provides a few other data types like Text, Boolean, Double, Date and Binary. The only reason I didn’t use them throughout this series is because the data we operated on in the examples came from three days of polling six different Dynamic Management Views (DMVs) related to IO pressure every minute, which produced six tables, some of which had more than a million rows, joined to a parent RecordTable with various measures of time. Some of these dependent tables originally had columns with data types like nvarchar or varbinary that could have easily been assigned Text or Binary data types in SSDM, but as explained in post 0.2, performance was an important concern, so I normalized those tables and substituted numeric foreign keys for them. This may have been wise, considering that with certain algorithms I was bedeviled with a frustrating error in which SSDM ran out of room to store strings on disk, which might have been exacerbated if I left these long text and binary values as they were. For many projects, however, it may make perfect sense to use these alternative data types. For a newcomer to SSDM, the only puzzling thing about the syntax might be the second clause following the data type in each column specifier. This corresponds to the Content type that flags the meaning of each column, which is a crucial distinction in data mining but not in relational databases. As discussed in depth throughout this series, there are nine of these Content types (Key, Key Sequence, Key Time, Cyclical, Ordered, Continuous, Discrete, Discretized and Table), but each algorithm has important restrictions on which ones it will accept. It is also possible to disallow nulls in a structure column by placing the NOT_NULL keyword between the data and Content types, but I neglected to depict that in the example above. After the data type and prior to NOT_NULL or the Content type, you can add the keyword UNIFORM, NORMAL or LOG NORMAL to specify the structure column’s Distribution property, which serves as a hint that some algorithms can use for optimization purposes. The first value refers to a flat line in which all values in a column occur with equal frequency, the second to a Gaussian bell curve and the third to a lopsided distribution in which certain values are much more likely. I have yet to see a good guide, however, as to which algorithms make use of this property and how they go about doing so; furthermore, it might require some preliminary queries in T-SQL or MDX to determine the distribution ahead of time, so I may discuss this in a future article on prep work before mining projects. Figure 3 has an example of one of these keywords in action in the CPU_Ticks column. You can also add a RELATED TO clause to specify that the column is related to a nested table key, a Discrete column or another column that has a RELATED TO clause applied to it, but I have yet to experiment with this feature much, nor have I seen a decent example in the literature of how to use it in practice. It creates a hierarchy in the same fashion as SSAS cubes and can apparently also be set using the ClassifiedColumns, but I have yet to see an example of how this would affect the outcomes of mining projects. The structure definition ends with some clauses regarding training cases which are much easier to explain. The optional WITH HOLDOUT clause merely specifies values for the mining structure properties HoldoutMaxCases and HoldoutMaxPercent, while the REPEATABLE keyword merely specifies the HoldoutSeed, all of which we covered way back at the beginning of this series.

The real action takes place in the ALTER MINING STRUCTURE statement, which is only used to add mining models, in the same way that the ALTER TABLE statement in T-SQL can be used to add columns. The top of the query is fairly self-explanatory and quite similar to the CREATE statement we just covered. The difference is that instead of the specifying the data and Content types, we indicate whether the column should be used as an input (which is the default, and therefore blank for some columns), as a predictable column but not an input (using the PREDICT_ONLY keyword) or as both (using the PREDICT keyword). Note that the Hour column also has a mining flag applied to it called MODEL_EXISTENCE_ONLY, which we haven’t used throughout this series since we didn’t want to reduce our data down to a dichotomous choice between Missing or Existing states. The only other flag we can set is REGRESSOR, depending on whether or not we’re using an algorithm like Linear Regression that will accept it. After all that we see the USING statement, which specifies the algorithm. The parentheses after that include the specific parameters for that algorithm, if we don’t want to leave them at their default values. We’ve already covered these in depth in the articles on each individual algorithm, so refer to the pertinent prior posts for details.

Figure 2: ALTER MINING STRUCTURE Example

ALTER MINING STRUCTURE MyStructure

ADD MINING MODEL MyMiningModel

(

MyIDColumn,

Cpu_Ticks,

DayOfWeek,

Hour MODEL_EXISTENCE_ONLY,

Minute,

MinuteGap PREDICT_ONLY,

QueryTextIDView PREDICT

(

MyNestedIDColumn,

QueryTextID PREDICT

)

)

USING [Microsoft_Sequence_Clustering]

–parameters specific to each algorithm

(CLUSTER_COUNT = ’10′)

WITH DRILLTHROUGH,

FILTER(DayOfWeek = 7)

The optional keyword WITH DRILLTHROUGH refers to a feature I’ve neglected to discuss yet in this series. It sets the mining model property AllowDrillThrough to true, which allows users to view the cases used to train a model, contrary to the default behavior. Several prerequisites must be met first, such as granting the user AllowDrillThrough permissions on the structure or model, depending on which one is being accessed. Books Online (BOL) advises that “The model permission lets you drill through from the model, even if you do not have permissions on the structure. Drillthrough permissions on the structure provide the additional ability to include structure columns in drillthrough queries from the model, by using the StructureColumn (DMX) function,” but these are scenarios I’ve yet to encounter, since I’m the only user on the machine I’ve taught myself SSDM on. The parent structure’s CacheMode property must be set to KeepTrainingCases rather than ClearAfterProcessing, which deletes them when processing is finished. The Naive Bayes, Logistic Regression and Neural Network algorithms do not allow drillthrough at all, since they “do not assign cases to specific nodes in the content,” whereas there are several other limitations with Linear Regression and Times Series, as detailed at the MSDN webpage Drillthrough Queries (Data Mining). If all those conditions are met, users can view the cases used to train a model with a DMX SELECT .Cases query, which we will cover next week along with other SELECT clause topics. The FILTER clause corresponds to the mining model Filter property, which I have also neglected to mention. The idea is not much different than a WHERE clause in T-SQL, except that the conditions are enclosed in parentheses and follow DMX-specific syntax, which would be more appropriate to discuss next week. Basically, you can add several conditions connected with familiar keywords such as AND and OR, as well as subqueries with SELECT, EXISTS and the like. It is also possible add a FILTER clause to a nested table by tacking it after the table’s definition. You can create a structure and a model together using the CREATE MINING MODEL statement as depicted in Figure 3, but you can’t add a filter or any of the holdout clauses.

Figure 3: CREATE MINING MODEL Example

CREATE MINING Model MyModel3

(

MyIDColumn LONG KEY,

Cpu_Ticks LONG LOG NORMAL CONTINUOUS,

DayOfWeek LONG CYCLICAL,

Hour LONG CYCLICAL,

Minute LONG CYCLICAL,

MinuteGap LONG CONTINUOUS PREDICT_ONLY,

QueryTextIDView TABLE PREDICT

(

MyNestedIDColumn LONG KEY SEQUENCE,

QueryTextID LONG DISCRETE PREDICT

)

)

USING [Microsoft_Sequence_Clustering]

–parameters specific to each algorithm

(CLUSTER_COUNT = ’10′)

WITH DRILLTHROUGH

It is also possible to duplicate an existing mining model using a SELECT INTO statement, which is mainly useful when you want to operate on a model that is nearly identical except for different algorithm parameters or WHERE filters. There are no column specifiers, but it is fairly simple to stipulate the algorithm, the parameters and a filter, like so: SELECT * INTO CopyOfMyMiningModel USING Microsoft_Neural_Network (HIDDEN_NODE_RATIO = 10) WITH FILTER (FileHandleID = 11) FROM MyMiningModel.

There are far fewer additional clauses to worry about with these statements than their corresponding CREATE and ALTER TABLE counterparts in T-SQL. There isn’t even an ALTER MINING MODEL statement to worry about. WeM might as well include the two RENAME statements here, which merely allow you to change the name of a model or structure like so: RENAME MINING MODEL MyModel3 TO MyModel4. To perform the same operation on a structure, simply change the keyword MODEL to STRUCTURE. The closest thing you have to a BACKUP statement in DMX is EXPORT, which backs up a model or structure to an SSAS backup file like so: EXPORT MINING STRUCTURE MyStructure, TSDenormalizedView1Structure MINING MODEL MyModel4 TO ‘C:\MyStructure3.abf’ WITH DEPENDENCIES, PASSWORD=‘duh’. The DEPENDENCIES keyword specifies that additional objects needed for processing, such as the Data Source View (DSV), are also included in the file, while the PASSWORD keyword is self-explanatory. You can export more structures all at once by separating them with commas (as shown above, where TSDenormalizedView1Structure is an old structure we used with the Time Series tutorial) and indicate that only a specific model should be included, using the MINING MODEL keyword. The EXPORT MINING MODEL statement has basically the same syntax, except that it also exports the structure the model depends on, but only the specified model within the structure. IMPORT is the equivalent of a RESTORE statement in T-SQL and has only one optional clause, for the password previously assigned. For example, IMPORT FROM ‘C:\MyStructure3.abf’ WITH PASSWORD=‘duh’ would restore the structure we just exported.

There’s simply not much else to say about DDL in DMX, which is a welcome break after several weeks of covering complex topics in this blog. It doesn’t provide much additional functionality beyond what we already have in the GUI, nor is there as much benefit to be gained by typing these commands out by hand as there is with T-SQL DDL statements, which help you to think more clearly about the functionality of your tables and views. The main scenario for using the two CREATE statements is with the optional SESSION keyword, which allows you to create structures and models that are discarded when their associated connection is closed, in a manner analogous to temporary tables in T-SQL. I imagine that this is useful mainly if you’re remotely creating temporary objects, as you might through Excel. I can’t remember off-hand, but it may also be possible to do this in Reporting Services, which I only do on occasion; normally I write any DMX queries in SSMS, which may be different from your usage scenario. In other words, much of the DDL we use in DMX is not only extremely simple, but often unnecessary; using the GUI might not be the hip, cool thing to do, but it is often the fastest. In the next couple of tutorials we’ll venture into areas where DMX becomes a progressively more useful tool, or even outright indispensable in some cases. Even in many of these scenarios, however, the learning curve is still quite short compared to broader SQL Server languages like T-SQL and MDX. This simplicity can free up resources for more pressing tasks, like setting algorithm parameters correctly. It is also an antidote to the excessive complexity associated with SSDM’s common metadata format, which as discussed in previous issues has some drawbacks alongside its advantages. As we shall see, one of the primary uses of DMX is to address this limitation, by importing the results into relational tables or OLAP cubes where the results can be sliced and diced with greater ease.