SQL Server 2022 is in preview and I wrote a previous article on the T-SQL language changes. This article is a second part, covering a few more of the changes in T-SQL that are coming with this new version of the database platform.

The first article looked at DISTINCT FROM, DATE_BUCKET, GENERATE_SERIES, GREATEST/LEAST, STRING_SPLIT, and DATETRUNC. The second article covered APPROX_PERCENTILE_CONT, APPROX_PERCENTILE_DISC, and the bit manipulation functions, along with changes to the FIRST_VALUE, LAST_VALUE, and LTRIM/RTRIM/TRIM changes. This article looks at JSON features.

This is a light look at these language features, as I am still experimenting and learning about them. My evaluation of SQL Server 2022 is from the perspective of someone trying to decide whether or not to recommend an upgrade to our systems, so I'm examining where we might use these language changes to make coding easier. I am working with SQL Server 2022 RTM for my experiments in this article.

JSON Features

SQL Server added a number of JSON features to the T-SQL language in SQL Server 2022. There were a few new functions:

- JSON_PATH_EXISTS()

- JSON_OBJECT()

- JSON_ARRAY()

There were already other functions, JSON_VALUE(), JSON_QUERY(), JSON_MODIFY(), available since SQL Server 2016, but those weren't altered. One function previously existed, ISJSON(), and this was enhanced. This article will look at the three new functions above and ISJSON().

JSON_OBJECT()

The JSON_OBJECT() function is new in SQL Server 2023 that is designed to construct JSON object text from zero or more expressions. Essentially this function will set up the document as valid JSON with the outside curly braces and the various key-values included inside. Rather than using string concatenation to assemble a JSON document, you can provide a list of key-value pairs and have this function put them together.

This function takes a variable number of arguments. Each of these arguments are paired up as key value expressions in the form of "string1 : string2". Each two strings or variables is separated by a colon. Then each pair of arguments are separated by commas. This means a single key value would be sent in like this:

selectJSON_OBJECT(@s1 : @s2)

If I needed to put two pairs together, then the structure would be:

selectJSON_OBJECT(@s1 : @s2, @s3 : @s4)

Additional pairs would follow the same format of using another comma and two strings separated by a colon. If only one argument is passed, an error is returned. I don't see a limit to parameters. I tested up to 5000 key value pairs, and I got successful results.

There also is the JSON NULL clause which can optionally be included. This determines how NULL values are handled. There are two options for this clause:

- NULL On NULL

- ABSENT ON NULL

If the first parameter is used, then a SQL NULL is converted to a JSON NULL. If we use the second option, then this value is omitted.

This function returns a JSON string that is an NVARCHAR(MAX).

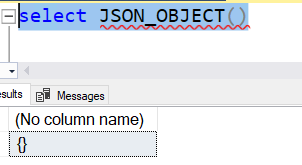

Let's look at how you can use this function. If I call this function without an argument, it works, but I'm not sure it's useful.

Let's call this with scalar values. I'll pass in two strings and see what happens. I'll use my name as a value, with the key being "name". I'll call this code:

select json_object( 'fname' : 'Frank')

The return from this is:

{"fname":"Frank"}If I didn't quote the key (or value), I get an error. Here's what I get without quotes around fname.

Msg 207, Level 16, State 1, Line 1 Invalid column name 'fname'.

I could also use variables like this:

declare @s1 varchar(100),

@s2 varchar(100);

select @s1 = 'fname'

select @s2 = 'Frank'

selectJSON_OBJECT(@s1:@s2)

The result from this is the same as my scalar example above. If I wanted two key-value pairs, I could use code like this:

declare @s1 varchar(100),

@s2 varchar(100);

select @s1 = 'name'

select @s2 = 'Frank'

selectJSON_OBJECT(@s1:@s2)

declare @s3 varchar(100),

@s4 varchar(100);

select @s3 = 'language'

select @s4 = 'PowerShell'

selectJSON_OBJECT(@s1 : @s2, @s3 : @s4)

My final result would be:

{"name":"Frank","language":"PowerShell"}How useful is this? Or is it?

Well, the error message above is a clue about how you might use this. The function was expecting a column so let's try that. I have a table of SalesOrders (from a previous article) that looks like this:

SaleID LineNumber SaleDate CustomerID ProductID Qty Price LineTotal ---------- ----------- ----------------------- ----------- ----------- ----- ---------- -------------- 1 1 2020-06-01 00:00:00.000 1 50 1 100.0000 100.0000 1 2 2020-06-01 00:00:00.000 1 51 10 50.0000 500.0000 2 1 2020-06-05 00:00:00.000 4 52 5 10.0000 50.0000 3 1 2020-06-05 00:00:00.000 6 53 10 6.0000 60.0000

Let's try a column in the function. I'll use SaleDate first, like this:

select JSON_OBJECT('SaleDate':SaleDate)

from SalesOrder

go

This gives me the following values:

{"SaleDate":"2020-06-01T00:00:00"}

{"SaleDate":"2020-06-01T00:00:00"}

{"SaleDate":"2020-06-05T00:00:00"}

{"SaleDate":"2020-06-05T00:00:00"}

{"SaleDate":"2020-06-05T00:00:00"}

{"SaleDate":"2020-06-05T00:00:00"}

{"SaleDate":"2020-06-09T00:00:00"}

{"SaleDate":"2020-06-15T00:00:00"}

{"SaleDate":"2020-06-15T00:00:00"}

{"SaleDate":"2020-06-15T00:00:00"}That's interesting. I could use multiple columns as well. Supposed I want the SaleID, SaleDate, and CustomerID. I could use this code:

select JSON_OBJECT('SaleID' : SaleID ,'SaleDate':SaleDate, 'CustomerID': CustomerID)

from SalesOrder

go

This gives me a better set of documents:

{"SaleID":1,"SaleDate":"2020-06-01T00:00:00","CustomerID":1}

{"SaleID":1,"SaleDate":"2020-06-01T00:00:00","CustomerID":1}

{"SaleID":2,"SaleDate":"2020-06-05T00:00:00","CustomerID":4}

{"SaleID":3,"SaleDate":"2020-06-05T00:00:00","CustomerID":6}

{"SaleID":3,"SaleDate":"2020-06-05T00:00:00","CustomerID":6}

{"SaleID":3,"SaleDate":"2020-06-05T00:00:00","CustomerID":6}

{"SaleID":4,"SaleDate":"2020-06-09T00:00:00","CustomerID":7}

{"SaleID":5,"SaleDate":"2020-06-15T00:00:00","CustomerID":1}

{"SaleID":5,"SaleDate":"2020-06-15T00:00:00","CustomerID":1}

{"SaleID":5,"SaleDate":"2020-06-15T00:00:00","CustomerID":1}Interesting, but I can also nest the function. This can give me a more complex document. Suppose I want a document with 3 key-values: SaleID, CustomerID, and OrderDetails. OrderDetails consists of its own document that has the date, quantity and price. I could run this code:

select JSON_OBJECT('SaleID' : SaleID ,'CustomerID': CustomerID, 'OrderDetails': JSON_OBJECT('SaleDate':SaleDate,'Qty': Qty, 'Price':Price))

from SalesOrder

goWith these results:

{"SaleID":1,"CustomerID":1,"Order":{"SaleDate":"2020-06-01T00:00:00","Qty":1,"Price":100.0000}}

{"SaleID":1,"CustomerID":1,"Order":{"SaleDate":"2020-06-01T00:00:00","Qty":10,"Price":50.0000}}

{"SaleID":2,"CustomerID":4,"Order":{"SaleDate":"2020-06-05T00:00:00","Qty":5,"Price":10.0000}}

{"SaleID":3,"CustomerID":6,"Order":{"SaleDate":"2020-06-05T00:00:00","Qty":10,"Price":6.0000}}

{"SaleID":3,"CustomerID":6,"Order":{"SaleDate":"2020-06-05T00:00:00","Qty":6,"Price":6.0000}}

{"SaleID":3,"CustomerID":6,"Order":{"SaleDate":"2020-06-05T00:00:00","Qty":100,"Price":5.0000}}

{"SaleID":4,"CustomerID":7,"Order":{"SaleDate":"2020-06-09T00:00:00","Qty":2,"Price":100.0000}}

{"SaleID":5,"CustomerID":1,"Order":{"SaleDate":"2020-06-15T00:00:00","Qty":4,"Price":100.0000}}

{"SaleID":5,"CustomerID":1,"Order":{"SaleDate":"2020-06-15T00:00:00","Qty":4,"Price":25.0000}}

{"SaleID":5,"CustomerID":1,"Order":{"SaleDate":"2020-06-15T00:00:00","Qty":8,"Price":50.0000}}That gets interesting. Of course, I might want to get all this in one document. For that some sort of multi-row concatenation or result set processing would be needed. However, that's something clients are good at doing. I can also use the JSON_ARRAY() function here, which I'll discuss next.

Before I do that, what about the NULL clause? Let's test that. I'll make a new table with 3 rows. It looks like this:

appttime title ---------- ---------- Mon 10am Standup Tue 10am Standup Fri 4pm NULL

If I want these documents, I can use this code, with the NULL ON NULL clause:

select json_object( 'Appt' : appttime, 'title':title NULL ON NULL) from schedule

The results are:

{"Appt":"Mon 10am","title":"Standup"}

{"Appt":"Tue 10am","title":"Standup"}

{"Appt":"Fri 4pm","title":null}If I change to the other option, I see this (all results after the hyphens):

select json_object( 'Appt' : appttime, 'title':title ABSENT ON NULL)

from schedule

-----

{"Appt":"Mon 10am","title":"Standup"}

{"Appt":"Tue 10am","title":"Standup"}

{"Appt":"Fri 4pm"}As you can see, I can either include the JSON null or just have the key-value dropped.

JSON_ARRAY()

This is another new function. JSON_ARRAY() is designed to construct a valid JSON array from inputs. Like the previous function, this function can use a variable number of arguments to construct the array. A JSON array is a series of values inside brackets, separated by commas. There also is the JSON NULL clause which can optionally be included.

As previously, with no arguments, an empty result is returned. In this case, it's just two square brackets, which is an empty array. I'm showing the code and results separated by a few dashes.

select JSON_ARRAY() --------- []

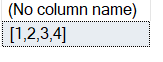

If I used a series of values, I get them condensed into an array, like this:

select json_array(1,2,3,4)

The results are:

Interesting, but less valuable. What if I use a column in here with numbers. I'll select from my dbo.SalesOrder table and see. I get this:

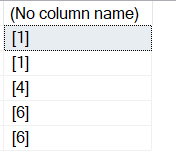

select top 5 json_array(CustomerID) from SalesOrder GO

The results are not what I'd want:

That doesn't seem very useful. What if I put a few columns in there from the table? I can do this, which returns a few values.

select top 5 json_array(productid, qty,price) from SalesOrder GO

The results here are interesting.

[50,1,100.0000] [51,10,50.0000] [52,5,10.0000] [53,10,6.0000] [54,6,6.0000]

Are they useful? I'm not sure. How would someone know what these values in the array mean? I guess most JSON isn't that documented, but this seems worse unless we had the same data in multiple columns, which wouldn't be a normalized design. Then again, lots of databases aren't well normalized. As a developer, I might like working with this type of structure.

One last test. I'll concatenate rows into a string and then send the values to the JSON_ARRAY() function.

with cteString (Customers)

as

(SELECT DISTINCT

STUFF((SELECT

',' + cast( c2.CustomerID as varchar(10)) as Customers

FROM

SalesOrder c2

FOR XML PATH(''), TYPE).value('.', 'varchar(max)'), 1, 1, '')

FROM SalesOrder c1

)

select json_array(Customers)

from cteString

- - - - -

["1,1,4,6,6,6,7,1,1,1"]It doesn't work well. Not sure if I'd use this function, but it's there if you find it useful.

JSON_PATH_EXISTS()

The JSON_PATH_EXISTS() function is new in SQL Server 2022. This function is designed to test a path in a JSON string and let you know if it exists. You can get these possible results, which feels a little too complex to me:

- NULL if the expression sent in is NULL

- 1 - path exists

- 0 otherwise

The good thing is they say this function doesn't return an error, so no error handling, but you do need result handing for the three cases. I'd prefer this just return 0 or 1. After all, if I pass a NULL JSON string, then there isn't a path.

Let's try this out with a few examples. I'll use this document for my experiments. I'll run this with other code, but I don't want to repeat it over and over in the examples.

declare @d varchar(max) = '{

"book":{

"isbn":"9781593279509",

"title":"Eloquent JavaScript, Third Edition",

"author":"Marijn Haverbeke",

"website":"http://eloquentjavascript.net/"

},

"magazines": [

{

"title":"SQL Server Pro",

"publisher":"Zinio"

},

{

"title":"SQL Server Geeks",

"publisher":"DPS"

}

],

"websites": [ {

"title":"SQL Server Central",

"url":"https://www.sqlservercentral.com"

},

{

"title":"SQL Blog",

"URL":"https://sqlblog.org/"

}

]

}'

A JSON path is a particular format thing. It starts with the $ as the root and then a dot (period) separating each key name. Let's look at examples:

select JSON_PATH_EXISTS(@d, '$.book') -- returns 1

The path is the root to book, which is the first key. If I wanted websites, I'd use:

select JSON_PATH_EXISTS(@d, '$.websites') -- returns 1

If I look below the books, I see various keys inside of this one key. For example, the isbn is a key below the book key. It's path is:

select JSON_PATH_EXISTS(@d, '$.book.isbn') -- returns 1

Typos matter, as does case. You can see these items return 0:

-- both return 0 select JSON_PATH_EXISTS(@d, '$.book.isnb') select JSON_PATH_EXISTS(@d, '$.book.ISBN')

What about the websites url? I can't do this.

select JSON_PATH_EXISTS(@d, '$.websites.title') -- returns 0

Actually, I can do this. No errors, remember? This returns a 0 because the path isn't from the websites key to the title key. Instead, I need an array index. JSON is zero-based, so the path actually is websites[0].title. This code returns 1.

select JSON_PATH_EXISTS(@d, '$.websites.title') -- returns 1

However, again, case matters. Look at the document. The two addresses under websites are different cases. That means this code returns a 1 and a 0.

select JSON_PATH_EXISTS(@d, '$.websites[0].url'),

JSON_PATH_EXISTS(@d, '$.websites[1].url')

-- returns 1 and 0If I make both upper case, I get a 0 and 1.

select JSON_PATH_EXISTS(@d, '$.websites[0].URL'),

JSON_PATH_EXISTS(@d, '$.websites[1].URL')

-- returns 0 and 1I put the error issue to the test, and it's wrong. This causes and error:

select JSON_PATH_EXISTS(@d, 'dfgdfgfdgd')

The return in SSMS is:

Msg 13607, Level 16, State 3, Line 28 JSON path is not properly formatted.

Unexpected character 'd' is found at position 0.

Oh well.

If I pass in a NULL document, I get NULL.

declare @d varchar(max) select JSON_PATH_EXISTS(@d, '$.book') go -- returns NULL

I guess this is useful when you want to check for a key existing. Since JSON documents are assembled by applications and often without a forced schema, this means you might need to validate keys before you try to process them.

To me, this is one failing of the whole NoSQL, schema on read item. I need lots of validation in reading, which can make for more complex processing and create its own technical debt in code.

ISJSON()

The ISJSON() function existed before SQL Server 2022, but it has been enhanced in this version. Now we can specify the type of thing we expect from the JSON expression and validate that exists. Prior to SQL Server 2022, there was only one parameter, which was a string expression. We could do this in SQL Server 2017 (which I use often):

DECLARE @d VARCHAR(1000) = '

{ "book":1

}'

SELECT ISJSON(@d)

-- returns 1

SELECT @d = '"book":1'

SELECT ISJSON(@d)

-- returns 0

In SQL Server 2022, I get an optional second parameter that can be of one of four values: VALUE, SCALAR, OBJECT, ARRAY. Each of these corresponds to a type of JSON structure. The definitions (from Docs) are here:

| VALUE | Tests for a valid JSON value. This can be a JSON object, array, number, string or one of the three literal values (false, true, null) |

| ARRAY | Tests for a valid JSON array |

| OBJECT | Tests for a valid JSON object |

| SCALAR | Tests for a valid JSON scalar – number or string |

Let's test this, with the code above. The first document should be a valid JSON object and value. It is not a scalar or array.

DECLARE @JSON VARCHAR(1000) = '

{ "book":1

}'

select isjson(@JSON, VALUE) as JSONValue,

isjson(@JSON, SCALAR) as JSONSCALAR,

isjson(@JSON, ARRAY) as JSONARRAY,

isjson(@JSON, OBJECT) as JSONOBJECT

-- returns

-- JSONValue JSONSCALAR JSONARRAY JSONOBJECT

-- 1 0 0 0What about the second code? This is a key and value only. This shouldn't be anything. It's not valid JSON, and not a value or a scalar. It isn't an array, and it's not an object, as it doesn't have the braces.

SELECT @JSON = '"book:1"'

select isjson(@JSON, VALUE) as JSONValue,

isjson(@JSON, SCALAR) as JSONSCALAR,

isjson(@JSON, ARRAY) as JSONARRAY,

isjson(@JSON, OBJECT) as JSONOBJECT

-- returns

-- JSONValue JSONSCALAR JSONARRAY JSONOBJECT

-- 0 0 0 0Let's test a few other things. I'll include the JSON value as the first result, and then the four results. You can surmise what code was passed into the ISJSON() function.

JSON JSONVALUE JSONScalar JSONArray JSONObject

"book" 1 1 0 0

1 1 1 0 0

{"book": [0,1]} 1 0 0 1

[0,1] 1 0 1 0

{"book": { "title":"DBATools", "Chapters":[1,2]}} 1 0 0 1This is an interesting test, and it mixes the ability to test a JSON document, or a fragment of one, like an array. I don't see these additional items are too valuable, but I guess it's good to test if you have a JSON object or if part of the string is an array. You would need to SUBSTRING() out the array part, but that's not too hard. Cumbersome, but not hard.

Summary

This article looks at the enhancements to JSON processing in T-SQL. We covered the JSON_OBJECT() and JSON_ARRAY() functions that return JSON documents from inputs. The JSON_PATH_EXISTS() and ISJSON() functions for testing JSON documents or parts.

Whether you like JSON or not, it is something developers like. That means it's becoming more of a reality in all sorts of databases all the time. These are functions worth learning about to better understand how you can process JSON documents when needed.