Pushing Logs

Introduction

I work in a large environment. We have thousands of computers, hundreds of servers, dozens of which are SQL Servers. We also have a fancy backup system. And I'll take a minute to brag because I think it's really cool.

Our Backups

We use lots of IBM equipment, and so we have a large AIX based backup robot/unit thingy that uses the Tivoli software. I went to our data center to check it out when I started. It's contained in these refrigerator size boxes that hold tapes inside with a couple robot arms that retrieve and load tapes into the drives. We have 19 boxes.

This thing connects to our servers and snags changed files. Changed files only, every night at either 8pm or 10pm. And we backup close to a terabyte of data every night. Kind of impressive, right?

I was somewhat impressed until I talked with the backup guy. If I bring a new SQL Server online, then this thing backs up the entire machine that night. /WINNT, /MSSQL/BINN, etc. If I left the server alone and didn't run SQL backups, didn't create a database, didn't add files, then our system would NEVER BACKUP THOSE FILES AGAIN.

EVER!

So 1TB of changed data, means 1TB of changed data, not of disk storage. We probably have quite a few TBs of online storage, like the /WINNT folders that don't really change.

But that's not the amazing part.

What's amazing is that we've been using this system for over a year. And every tape that we've ever used to still in the system. Online, retrievable within about 15 minutes. Sounds like a lot of tapes, and it is, but we also expire backups after so much time and versions, so tapes do get reused. Though we still do have the original version of every server. WOW!

The Flaw

We have a flaw in our SQL backup scheme. It was one that seemed really apparent to me when I started, but I think the amazing backup system had lulled some people. You see, we run log backups every hour to the local server. Then at night, we run a full db backup to the local machine. And our amazing backup system then snags the files that night.

See the flaw?

If not, think for a minute and then read this, otherwise skip ahead to the next section.

Suppose that my main server fails at 2:00pm. The RAID controller whacks out and we can't read the data from the physical disks. I have a few options here. One, I can call the hotline for support and there should be a new controller on site in 4 hours. Yeah, right!

I'm sure it probably happens some times, but I bet Murphy strikes Compaq and IBM as often as he hits me. The other possibility is to restore from backup to another server. Easy enough with the amazing backup system. If you have the same hardware, but since we tend to buy lots of the same server, this usually isn't a huge issue for us, however I believe that when I have a server fail, I will likely not have similar hardware nearby and will need to rebuild the server using new hardware. I like to plan for failure!

The Solution

or at least my solution. I built a simple DTS package that is scheduled and moves the logs to a remote server.

Every 15 minutes. Right after the backup of the log is complete. While I can't determine when exactly the log backup is finished, I have an idea of how long it takes and my job is scheduled to run shortly thereafter.

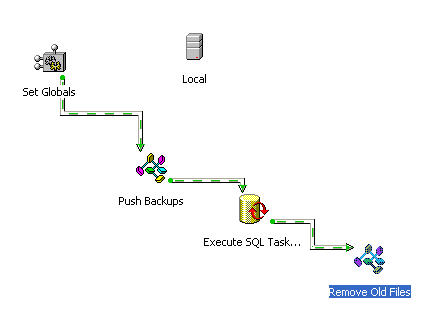

My job consists of a simple solution. I've included a screenshot of the DTS package here for you to examine:

This is a simple package consisting of a few parts. I'll walk through the package from left to right and show the various tasks that exist. First there is a connection to the SQL Server. I've set this to the local server.

Next I start with a Dynamic Properties task. Before I added this one, I created some global variables that hold the server names, paths for files and the last time the transfer occurred. As we'll see, I store this in a table in my DBA database on the server. The Dynamic Properties task updates the LastXfr global variable with the timestamp from a table.

Once this is complete, the first ActiveX task runs. This task is the one that moves the log files from the local server to the remote server. I've included the code here and will describe it below:

'**********************************************************************

' MoveBackups Script

'

' This script reads configration information from the global variables and

' then moves the backup files. The source and destination paths are used to send

' all files that have not been sent based on the file creation date and the

' global variable.

'

'************************************************************************

Function Main()

'On Error Resume Next

Dim strDestPath, fNewFile, strSourcePath, fOldFile, sBackup

Dim fso, f, f1, fc, fcreated, fname, fldrItem, fldrName

Dim objTxtFile, baseDate

Dim SourceFile, DestFile

Dim DebugFileName, ServerName

' Initialize the variables

strSourcePath = DTSGlobalVariables("SourcePath").value

strDestPath = DTSGlobalVariables("DestPath").value

fcreated = DTSGlobalVariables("LastXfr").value

DebugFileName = DTSGlobalVariables("DebugFileName").value

ServerName = DTSGlobalVariables("ServerName").value

' Convert to a date

BaseDate = CDate(fcreated)

' Create the FSO object handle

Set fso = CreateObject("Scripting.FileSystemObject")

' Create the Text File

Set objTxtFile = fso.CreateTextFile( strSourcePath & "\" & DebugFileName, TRUE)

' Write the Header

objTxtFile.writeline( "Log for " & ServerName & " Backup Transfer" )

objTxtFile.writeline( "-------------------------------------------------------" )

objTxtFile.writeline( "Current Date: " & CDate(date) )

objTxtFile.writeline( "Last Transfer: " & BaseDate )

objTxtFile.writeline( "SourcePath: " & strSourcePath )

objTxtFile.writeline( "DestPath: " & strDestPath )

objTxtFile.writeline( "" )

Set f = fso.GetFolder(strSourcePath)

' Loop through all the subfolders

For Each fldrItem in f.SubFolders

Set fc = fldrItem.Files

fldrName = fldrItem.name

fname = " "

' Loop through all files.

For Each f1 in fc

' Check the timestamp and extension of the file

If f1.DateCreated > BaseDate and right(fi.name,3) = "TRN" Then

' build the variables needed

SourceFile = strSourcePath & "\" & fldrname & "\" & f1.name

DestFile = strDestPath & "\" & fldrname & "\" & f1.name

objTxtFile.writeline( "Starting transfer of " & SourceFile )

DestFolder = strDestPath & "\" & fldrname

objTxtFile.writeline("dest:" & DestFolder )

' Check for the destination folder. If this is the first transfer for this database

' we need to create a new folder.

if not fso.folderexists( DestFolder) then

objTxtFile.writeline( " Creating " & DestFolder & " (" & fso.folderexists( DestFolder) & ")" )

fso.CreateFolder( DestFolder)

end if

' Copy the file

fso.CopyFile SourceFile, DestFile, TRUE

objTxtFile.writeline( "Completing transfer to - " & DestFile)

End If

Next

Next

objTxtFile.writeline( "" )

objTxtFile.writeline( " End " )

objTxtFile.Close

Set objTxtFile = Nothing

Set fso = Nothing

Set fc = Nothing

Main = DTSTaskExecResult_Success

End Function

This is a simple task the loops through the backup folders (a separate one for each database) and checks the timestamp of the log file against the last time this job ran. If the log file is new, then it is copied to the destination folder. There folder structure on the destination is maintained so it matches the source folder.

Once this task completes, there is a SQL task that updates my table with the current time. Since these transfers are log files, they complete quickly and the time lost in the transfers doesn't matter.

The last step is another ActiveX task that is very similar to the first one. This one deletes the old transaction log files. Since we run full backups every night, yesterday's log files are no longer needed, so I remove them. The code for this step is pretty simple:

'**********************************************************************

' Remove Log Backups Script

'

' This script reads configration information from the global variables and

' then removes old backup files. Old backup files are defined as created before today

'

'************************************************************************

Function Main()

'On Error Resume Next

Dim strDestPath, fNewFile, fOldFile, sBackup, strSourcePath

Dim fso, f, f1, fc, fcreated, fname, fldrItem, fldrName

Dim objTxtFile, baseDate

Dim DestFile

Dim DebugFileName, ServerName

' Initialize the variables

strDestPath = DTSGlobalVariables("DestPath").value

DeleteFileName = DTSGlobalVariables("DeleteFileName").value

strSourcePath = DTSGlobalVariables("SourcePath").value

ServerName = DTSGlobalVariables("ServerName").value

BaseDate = CDate( month(date) & "/" & day(date) & "/" & year(date))

Set fso = CreateObject("Scripting.FileSystemObject")

' Create the Text File

Set objTxtFile = fso.CreateTextFile( strSourcePath & "\" & DeleteFileName, TRUE)

' Write the Header

objTxtFile.writeline( "Log for " & ServerName & " Backup Deletion" )

objTxtFile.writeline( "-------------------------------------------------------" )

objTxtFile.writeline( "Current Date: " & CDate(date) )

objTxtFile.writeline( "Delete Date: " & BaseDate )

objTxtFile.writeline( "DestPath: " & strDestPath )

objTxtFile.writeline( "" )

Set f = fso.GetFolder(strDestPath)

' Loop through the subfolders on the destination server

For Each fldrItem in f.SubFolders

Set fc = fldrItem.Files

fldrName = fldrItem.name

fname = " "

' Check each file

For Each f1 in fc

objTxtFile.writeline( "Checking " & f1.name & " (" & f1.DateCreated & ")")

' If this file is old enough, remove it

If f1.DateCreated

That's it. I setup this job and scheduled it to run about 5 minutes after every transaction log backup. In 3 months of use, it hasn't failed once and has provided some security for me. Luckily I haven't had to use it at all (knock on wood!).

Conclusions

A pretty simple process, but one that the previous DBAs missed. They left this gaping hole in the backup scheme, which luckily never bit them. The only server failure they had in the last two years was recovered to the previous night's backup, but the client found that acceptable.

I know there are some hot programmers out there and you'll have plenty of ways to enhance this process. I look forward to reading some of your thoughts.

As always I welcome feedback on this article using the "Your Opinion" button below. Please also

rate this article.

Steve Jones

©dkRanch.net September 2002

Return to Steve Jones Home