Introduction

Removing SQL Server Data File Without Downtime is one of important activities in enterprise production scenarios. Managing storage efficiently in SQL Server is crucial, especially when dealing with multiple data files (.ndf) on different disks. Sometimes, an organization may need to decommission a disk or reclaim space by removing an extra .ndf file. However, simply deleting the file is not possible while it contains data.

Therefore, this article focuses on mimicking a real-world SQL Server setup where we move data from one .ndf file to another and use DBCC SHRINKFILE (EMPTYFILE) to ensure smooth data transfer without downtime. This method is preferred as it allows safe data redistribution while keeping SQL Server online.

In this guide, we will walk through:

- Setting up a test environment on Azure to mimic an enterprise setup

- Adding two new data files (`E:` and `G:`)

- Inserting enough data to ensure distribution across both files

- Moving data and shrinking the `.ndf` file using `DBCC SHRINKFILE (EMPTYFILE)`

- Removing the `.ndf` file once emptied

- Confirming data integrity and freeing up disk space

Real-World Scenarios Where This Technique Helps

In real-world enterprise environments, this approach is a lifesaver in the following situations:

- Storage Tier Migration - Your existing .ndf file might be on expensive high-speed storage (e.g., premium SSD) which you want to move to a lower-cost disk tier without incurring downtime. Therefore, using DBCC SHRINKFILE (EMPTYFILE) lets you migrate data smoothly before retiring the expensive storage.

- Database Consolidation Projects - During consolidation or disk cleanup, some legacy filegroups and .ndf files may no longer be needed. Rather than taking the database offline, this method ensures a live cleanup while the application continues to run.

- VM Resize and Storage Rebalancing - On cloud platforms like Azure, disks are often resized, migrated, or replaced for cost and performance optimization. Emptying a file and removing it allows for flexible VM storage reconfiguration without touching the database service.

- Corrupt Disk or IO Bottleneck Situations - If a particular disk begins showing signs of failure or high IOPS, you can proactively shift data to another volume using this method. It adds a layer of resiliency to your database storage strategy.

Step 1: Setting Up the Test Environment

First, we create an ecommerce database with a primary (.mdf) and a secondary (.ndf) file stored on C: drive.

CREATE DATABASE ECommerce ON PRIMARY ( NAME = ECommercePrimary, FILENAME = 'C:\SQLData\ECommerce.mdf', SIZE = 50MB, FILEGROWTH = 10MB ), FILEGROUP ExtraFG ( NAME = ECommerceData, FILENAME = 'C:\SQLData\ECommerce.ndf', SIZE = 50MB, FILEGROWTH = 10MB ) LOG ON ( NAME = ECommerceLog, FILENAME = 'C:\SQLData\ECommerce.ldf', SIZE = 50MB, FILEGROWTH = 10MB ); GO

To mimic moving storage in a real enterprise scenario, we attach a new Azure-managed disk and add an extra .ndf file to that disk (D:).

ALTER DATABASE ECommerce ADD FILEGROUP AzureFG; GO ALTER DATABASE ECommerce ADD FILE ( NAME = ECommerceDataE, FILENAME = 'F:\SQLData\ECommerce_E.ndf', SIZE = 50MB, FILEGROWTH = 10MB ), ( NAME = ECommerceDataG, FILENAME = 'G:\SQLData\ECommerce_G.ndf', SIZE = 50MB, FILEGROWTH = 10MB ) TO FILEGROUP AzureFG; GO

Step 2: Moving Data to the New File and Emptying the Old One

We force SQL Server to store data in the .ndf file by assigning it to the ExtraFG filegroup. We then insert 10,000 rows.

USE ECommerce;

GO

CREATE TABLE Orders (

OrderID INT PRIMARY KEY,

OrderDate DATETIME,

CustomerName NVARCHAR(100),

Amount DECIMAL(10,2)

) ON AzureFG; -- Storing Data on C:\ Drive

GO

Inserting Sample Data:

SET NOCOUNT ON;

DECLARE @i INT = 1;

WHILE @i <= 10000 -- Insert 10,000 records to distribute data

BEGIN

INSERT INTO Orders (OrderID, OrderDate, CustomerName, Amount)

VALUES (@i, GETDATE(), 'Customer_' + CAST(@i AS VARCHAR(10)), RAND() * 500);

SET @i = @i + 1;

END;

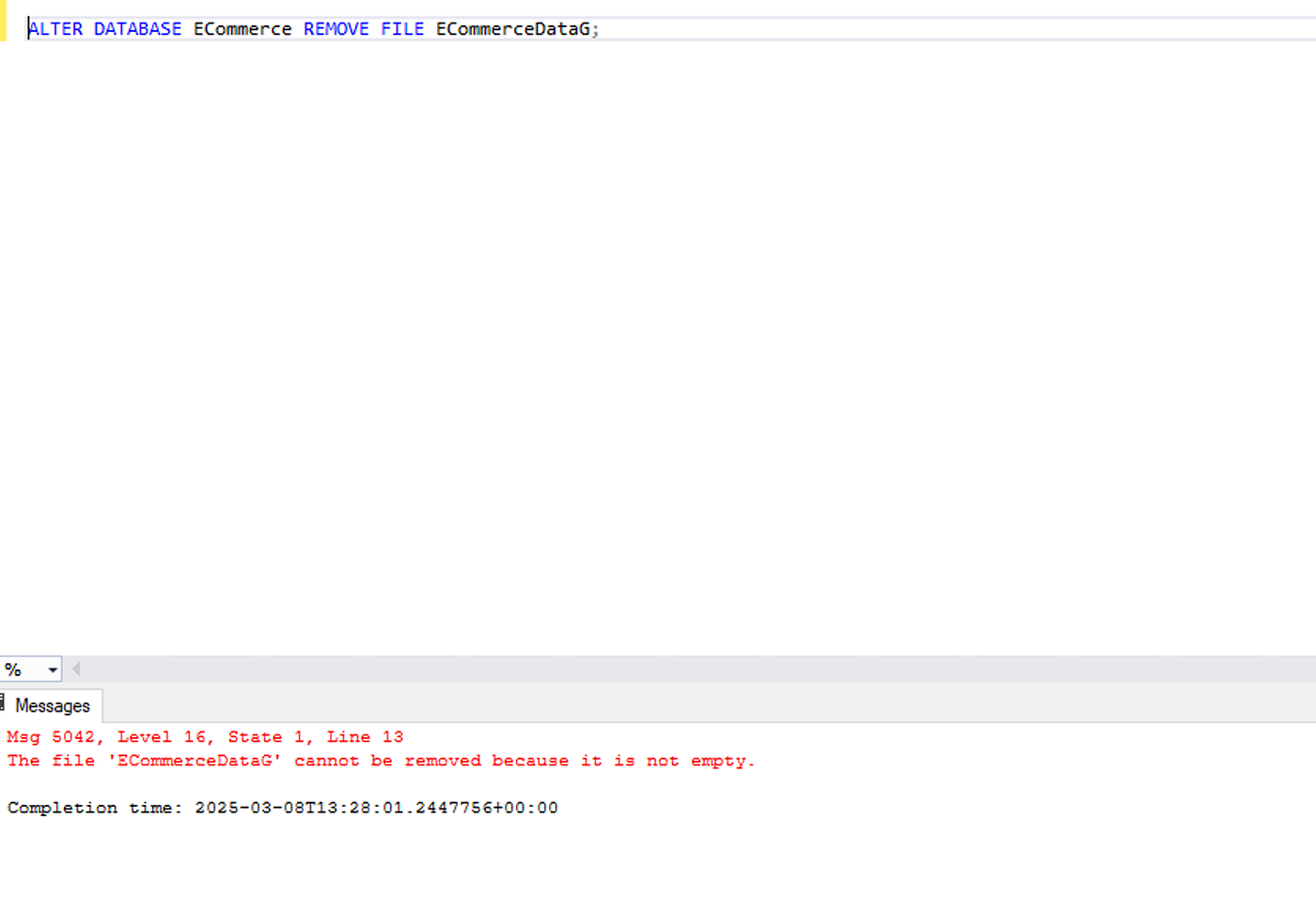

GOAttempting to remove the .ndf file without shrinking (Fails). We an run this code:

ALTER DATABASE ECommerce REMOVE FILE ECommerceDataG;

As the .ndf file still holds data, therefore, we must first move all data and empty it using DBCC SHRINKFILE (EMPTYFILE).

Step 3: Using DBCC SHRINKFILE to Empty the NDF File

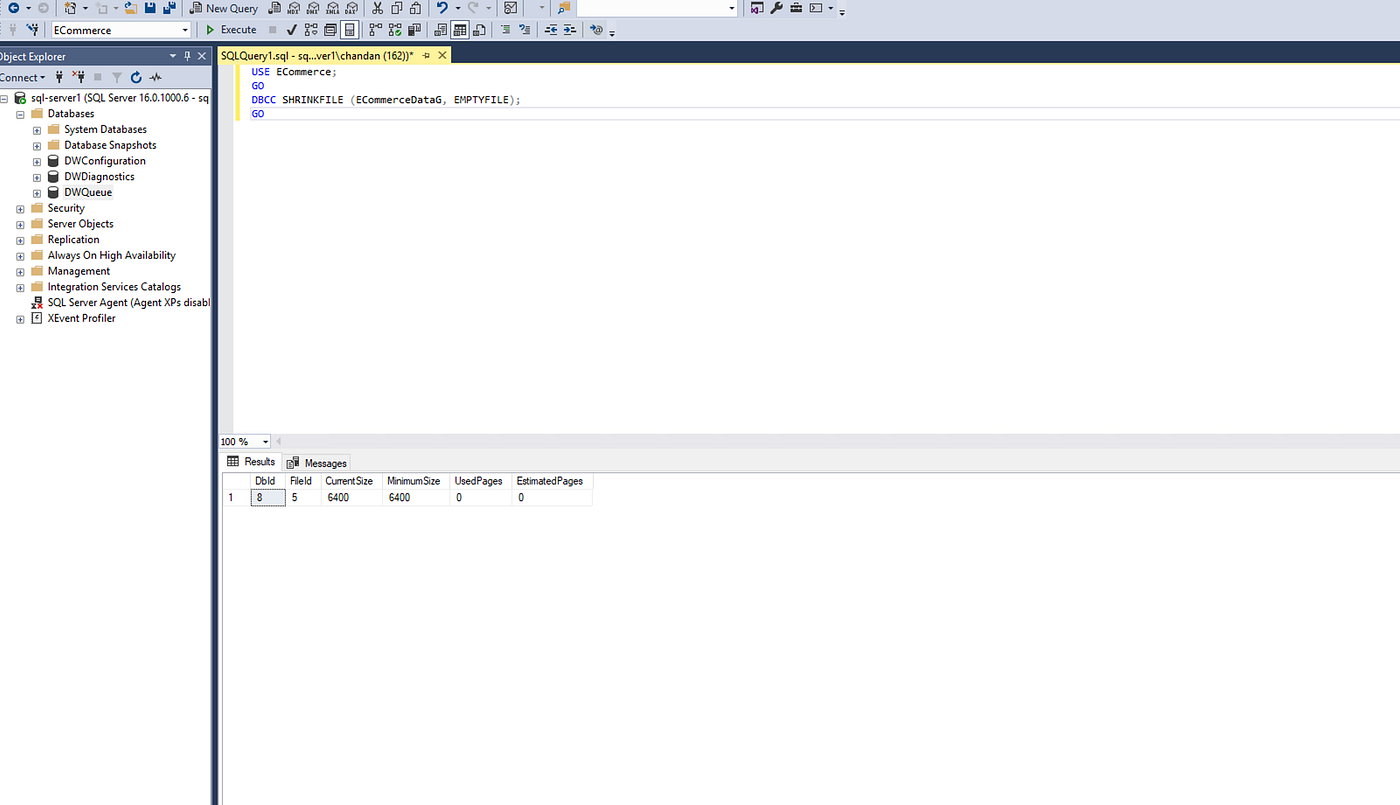

This is the critical step. Using DBCC SHRINKFILE (EMPTYFILE), we instruct SQL Server to move all data to the remaining files.

USE ECommerce; GO DBCC SHRINKFILE (ECommerceDataG, EMPTYFILE); GO

This tells SQL Server to move data to other available files in the database. Learn more about dbcc shrinkfile in Microsoft’s official documentation.

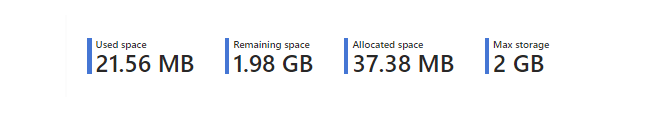

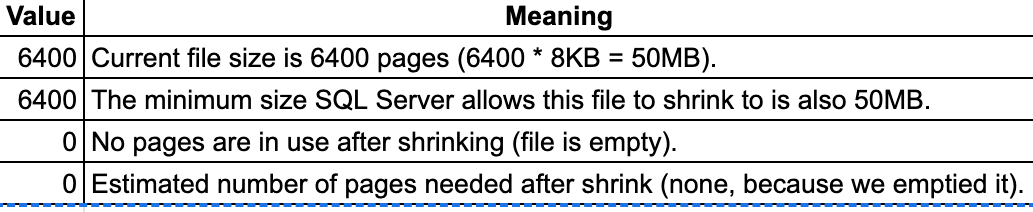

As we have given db file initial and minimum size to 50 MB, this can’t be reduced to zero even if it does not have data, but this is evident there are not any used or estimated pages.

We can now check the file size after shrinking with this code:

SELECT name, size, physical_name

FROM sys.master_files

WHERE database_id = DB_ID('ECommerce');Step 4: Successfully Removing the NDF File

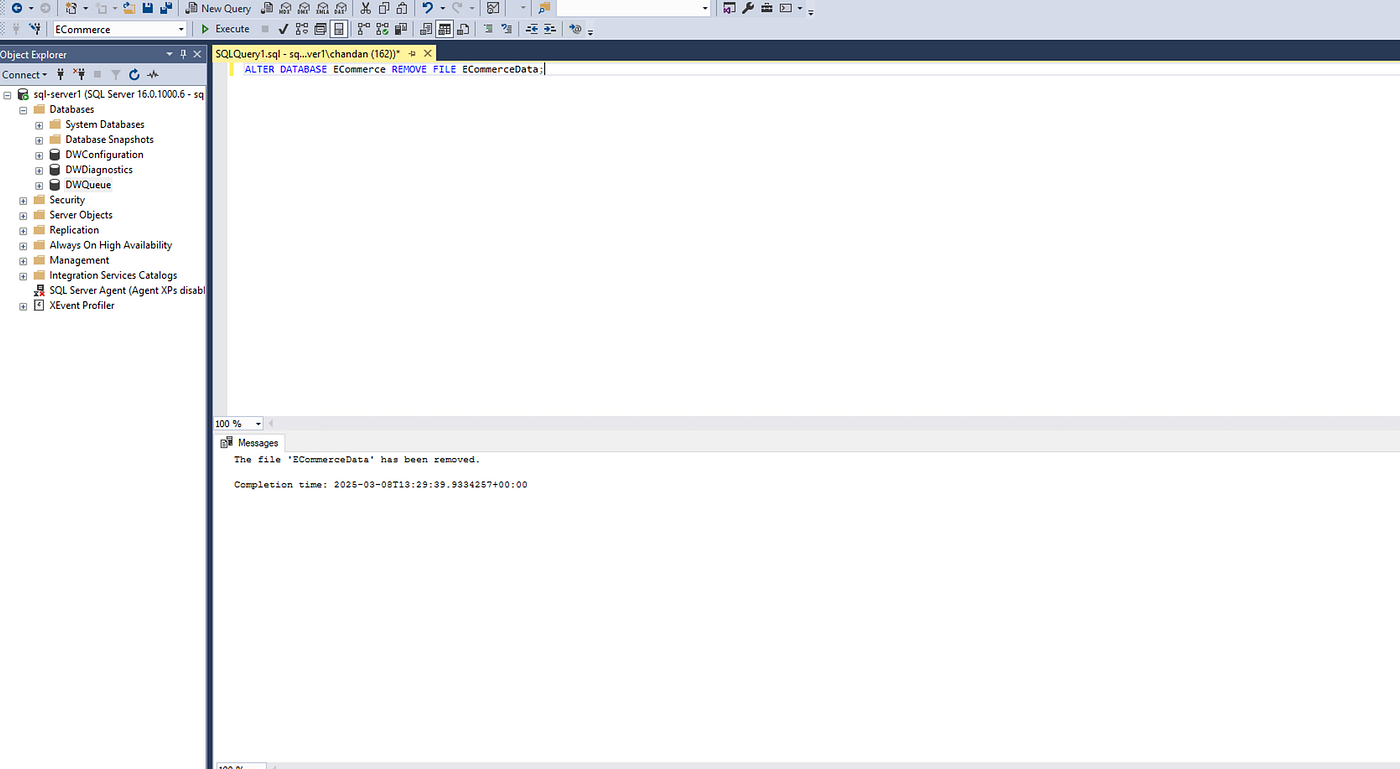

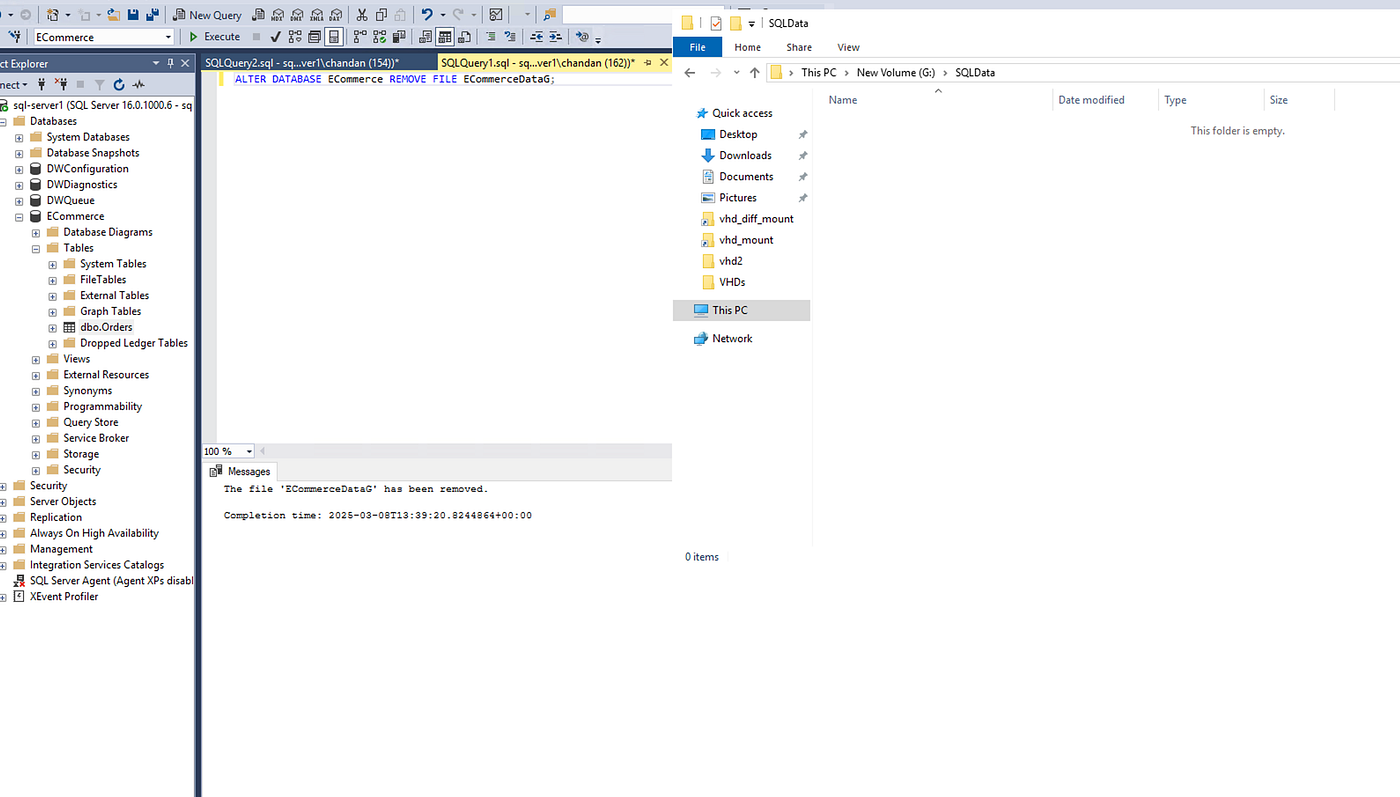

Now that the .ndf file is empty, we remove it from the SQL Server database with this code:

ALTER DATABASE ECommerce REMOVE FILE ECommerceData; GO

There is now no data file available in G:\SQLData.

The file is now removed, and disk space is freed up!

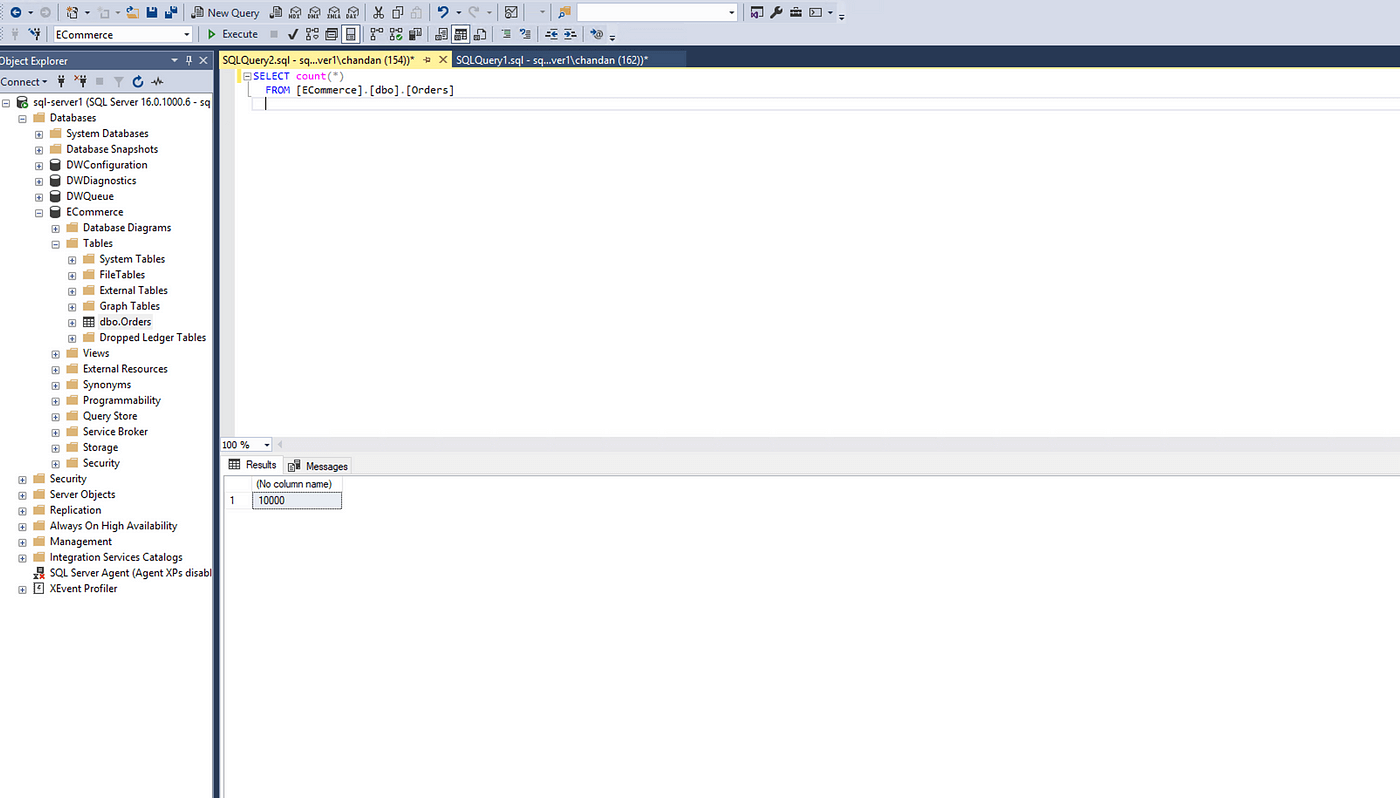

Step 5: Verifying Data Integrity

Finally, the file is removed, we check if our data is still intact:

SELECT COUNT(*) as Computed FROM Orders; GO

Step 6: Deleting the Azure Disk Safely(Final Cleanup)

Since we no longer need the Azure disk, we can detach and delete it.

Remove-AzDisk -ResourceGroupName "MyResourceGroup" -DiskName "SQLDataDisk" -Force

Or we can also do it from Azure UI:

Final Takeaways

This method ensures:

- Zero Downtime — No database restart required.

- Automatic Data Redistribution — SQL Server efficiently moves data when using EMPTYFILE.

- Safe Disk Decommissioning – Once emptied, .ndf files can be removed without corruption.

Would you use this method in your environment? Let’s discuss in the comments!

For deeper insights into SQL Server storage best practices, check this SQL Server Storage Engine Guide.

follow me for future SQL tips- https://www.sqlservercentral.com/forums/user/shuklachandan12, I’ll be sharing more advanced SQL Server tips soon!