Unreliable Narrators

-

February 7, 2025 at 12:00 am

Comments posted to this topic are about the item Unreliable Narrators

-

February 7, 2025 at 3:45 pm

I'm glad someone is speaking out against all this hype. I wish more people were speaking out against it. But even his "unpolite" remark is still polite, in my opinion. We need to stop the hype and the false sense that it can solve problems.

The current LLM AIs are complete #%#$ *(&^ @!!# *&%$!!!

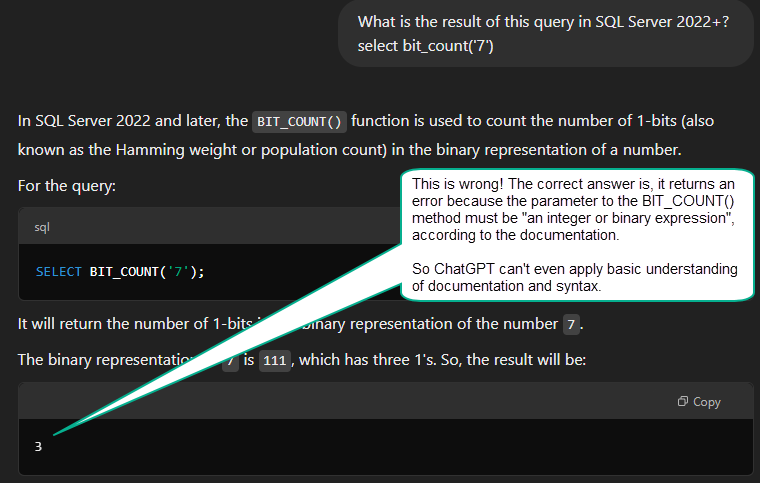

For example, here is ChatGPT's response to today's SQL Server Central Question of the Day:

Steve said:

I have found AIs to be pretty good search engines. Not perfect, but good.

I agree with that. That's about all I've seen these AI chat bots do: take a bunch of information, mostly bullshit, and return a bullshit summary. As with anything on the Internet, and as the evidence I just gave shows, you still have to verify it and use some common sense. So why waste the time to begin with?

Keep the AI research in academia. We should not be using it in the real world.

-

February 7, 2025 at 4:38 pm

Jeff Moden called it a Consensus Engine, which I thought was a brilliant description.

It's pretty much at peak hype in the hype cycle. It is genuinely useful, just not the panacea that the hype is promoting. The hype is creating unrealistic expectations.

-

February 7, 2025 at 6:28 pm

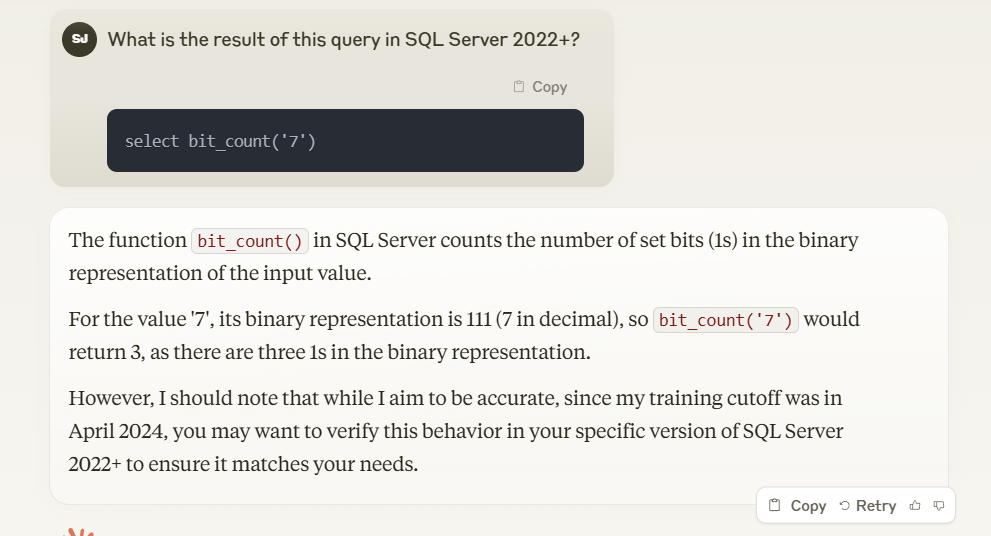

Alright, I had to try.

Claude:

-

February 7, 2025 at 6:28 pm

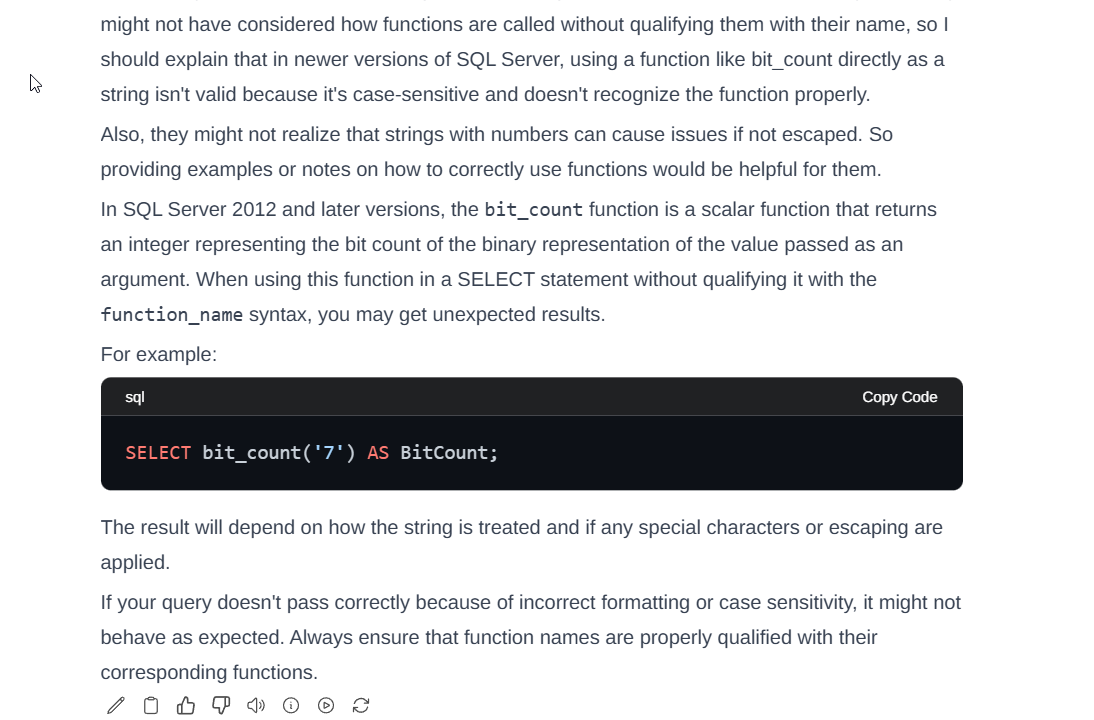

Local DeepSeek 1.5B model:

-

February 7, 2025 at 6:29 pm

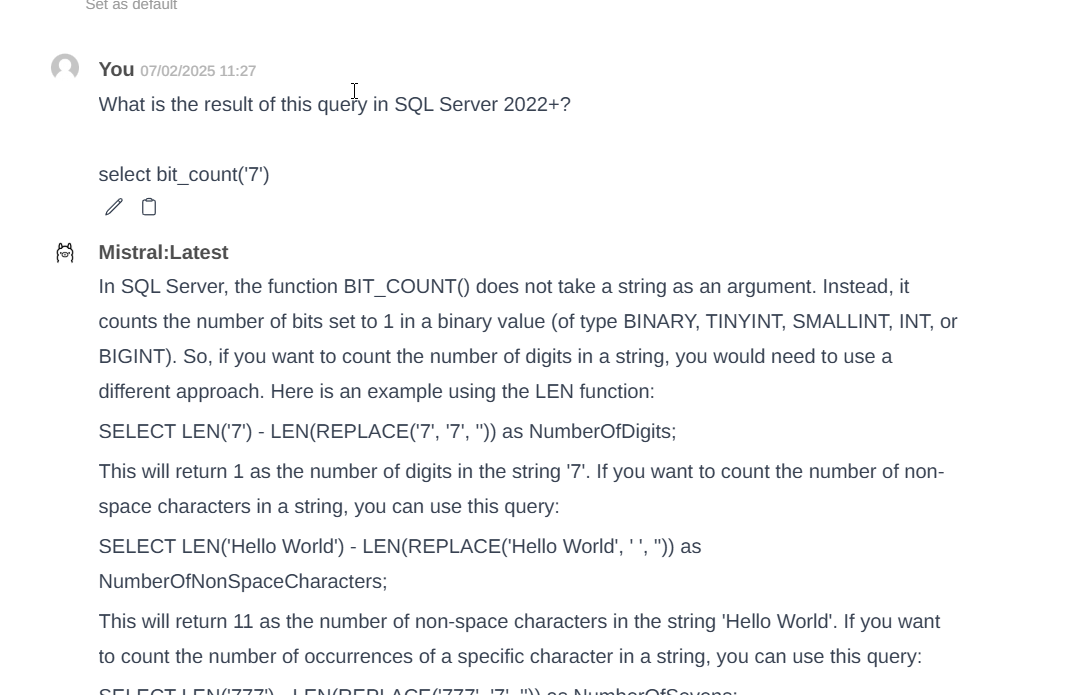

The local Mistral model (the latest, 3.8 GB one) is smarter:

-

February 7, 2025 at 8:51 pm

Regarding "unreliable narration", that just makes LLMs more human.

"Do not seek to follow in the footsteps of the wise. Instead, seek what they sought." - Matsuo Basho

-

February 8, 2025 at 5:41 pm

Nice testing, Steve. I briefly looked at DeepSeek and the download sizes of their various models, but never got around to using or testing any of them.

-

February 9, 2025 at 4:37 am

Eric M Russell wrote: Regarding "unreliable narration", that just makes LLMs more human.

+1000! I've said that it has the "being human" part pretty much down pat. It very confidently provides the wrong answer and an explanation to justify it and, when you call it out for a bad answer, it politely backpedals and then (usually) proceeds to provide another answer in the same manner and be wrong again. 😀

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.

Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

-

February 9, 2025 at 4:51 am

For Perplexity.ai, it came so VERY close and yet it still failed. It did, though, write corrected code to go after this bit of narrative:

It's important to note that the BIT_COUNT function in SQL Server 2022 operates on integer or binary expressions, not on string literals [7]. While the query as written with a string literal '7' may work due to implicit conversion, it's generally better practice to use an integer literal without quotes:

The bad part is, that wrong answer still cost as much water, electricity, and carbon release as this ol' man likely does fixing dinner. Of course, I produce more methane. 😀

--Jeff Moden

-

February 9, 2025 at 5:09 am

David.Poole wrote: Jeff Moden called it a Consensus Engine, which I thought was a brilliant description.

It's pretty much at peak hype in the hype cycle. It is genuinely useful, just not the panacea that the hype is promoting. The hype is creating unrealistic expectations.

The good part about it is that it makes a great "first search" if you're using an engine that returns what it searched and found when creating its results. Even when it's wrong about things, it does cough up some pretty handy search results.

If people remember that that's all it really is, it can be of some help. Just don't expect it to write good code, especially since some of the code I've seen it write contains "silent failures": a fair bit of the time it produces the correct results, but in some cases it produces incorrect answers with no error.

--Jeff Moden

-

February 10, 2025 at 3:56 pm

Last week for work I sat in on a lecture / discussion on "AI" as related to what the organization does, and I found it rather telling that I asked a question of the presenters (who, on the whole, were rather "rah rah" about "AI") and their answer to my question...

Below is the question I posted:

"Perhaps I'm cynical, but aren't these "AI" engines not much more than very good natural language search tools, and still subject to the basic rule of computing, namely "Garbage In / Garbage Out?""

and their answer was

"Yes, it is."

-

February 12, 2025 at 1:41 pm

My main worry is that these engines can only ever be as good as the data they're trained on. Presumably that means that malicious actors could flood forums, community sites, etc. with misleading information which will then get regurgitated by these engines as facts.

-

February 19, 2025 at 4:26 pm

Jeff Moden wrote: Eric M Russell wrote: Regarding "unreliable narration", that just makes LLMs more human.

+1000! I've said that it has the "being human" part pretty much down pat. It very confidently provides the wrong answer and an explanation to justify it and, when you call it out for a bad answer, it politely backpedals and then (usually) proceeds to provide another answer in the same manner and be wrong again. 😀

Most AI chatbots keep the conversations sandboxed, so even if we convince it that it's wrong on some specific topic, it won't remember outside the context of that conversation.

-

February 19, 2025 at 4:46 pm

Eric M Russell wrote: Most AI chatbots keep the conversations sandboxed, so even if we convince it that it's wrong on some specific topic, it won't remember outside the context of that conversation.

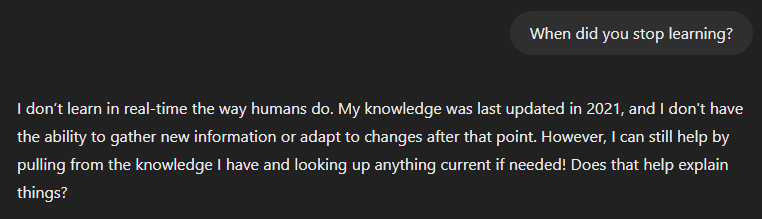

Correct. Absolutely none of them has any kind of "feedback loop" that allows them to learn new things on the fly, which is what most people think AI is supposed to be able to do. ChatGPT, for example, only uses data from up to 2021:

What we really need is to just stop calling it AI. You can keep the hype, as long as you call it what it is: a point-in-time data set with a search algorithm that gives a summarized, human-like sentence as a response.

I don't even like the term "machine learning". That is too ambiguous for most people, therefore not meaningful. But I would take that over using AI, for sure.
