SQL - Grouping SQL output in 5 minute intervals

-

March 2, 2023 at 7:06 pm

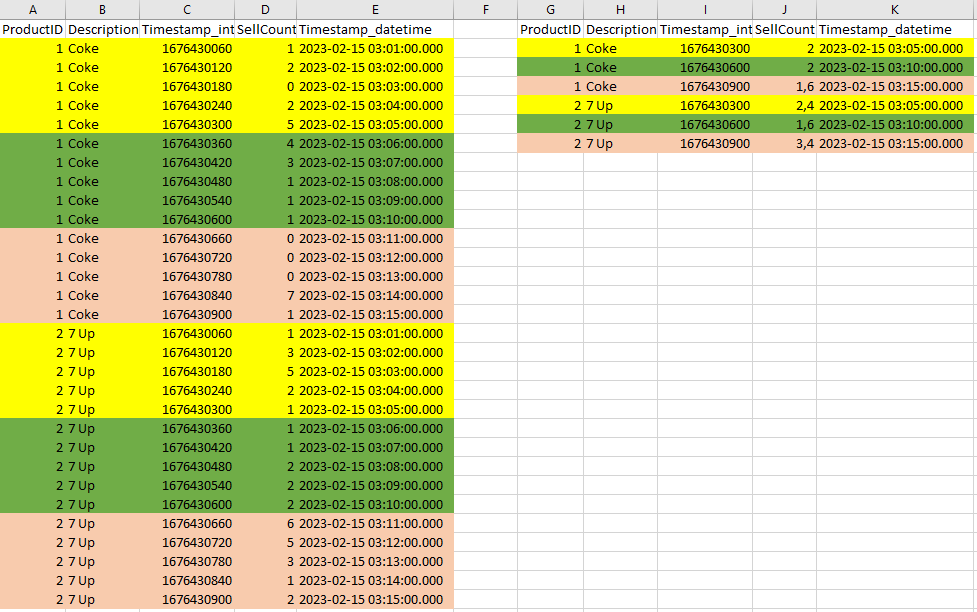

I have a dataset that has a date column every 1 minute. I wanted to group the information to have the data "organized" every 5 minutes and average the SellCount column.

For example, I have 5 records, 1 for minute 1, another for minute 2, another for minute 3, another for minute 4 and finally another for minute 5. I want to group in a single line where the date is present will be the 5th minute.

I send the code to create the table and insert the data.

CREATE TABLE SELL (ProductID int,Description varchar(10),Timestamp_int int, SellCount int,Timestamp_datetime datetime)

INSERT INTO SELL VALUES(1,'Coke',1676430060,1,'2023-02-15 03:01:00.000') INSERT INTO SELL VALUES(1,'Coke',1676430120,2,'2023-02-15 03:02:00.000') INSERT INTO SELL VALUES(1,'Coke',1676430180,0,'2023-02-15 03:03:00.000') INSERT INTO SELL VALUES(1,'Coke',1676430240,2,'2023-02-15 03:04:00.000') INSERT INTO SELL VALUES(1,'Coke',1676430300,5,'2023-02-15 03:05:00.000') INSERT INTO SELL VALUES(1,'Coke',1676430360,4,'2023-02-15 03:06:00.000') INSERT INTO SELL VALUES(1,'Coke',1676430420,3,'2023-02-15 03:07:00.000') INSERT INTO SELL VALUES(1,'Coke',1676430480,1,'2023-02-15 03:08:00.000') INSERT INTO SELL VALUES(1,'Coke',1676430540,1,'2023-02-15 03:09:00.000') INSERT INTO SELL VALUES(1,'Coke',1676430600,1,'2023-02-15 03:10:00.000') INSERT INTO SELL VALUES(1,'Coke',1676430660,0,'2023-02-15 03:11:00.000') INSERT INTO SELL VALUES(1,'Coke',1676430720,0,'2023-02-15 03:12:00.000') INSERT INTO SELL VALUES(1,'Coke',1676430780,0,'2023-02-15 03:13:00.000') INSERT INTO SELL VALUES(1,'Coke',1676430840,7,'2023-02-15 03:14:00.000') INSERT INTO SELL VALUES(1,'Coke',1676430900,1,'2023-02-15 03:15:00.000') INSERT INTO SELL VALUES(2,'7 Up',1676430060,1,'2023-02-15 03:01:00.000') INSERT INTO SELL VALUES(2,'7 Up',1676430120,3,'2023-02-15 03:02:00.000') INSERT INTO SELL VALUES(2,'7 Up',1676430180,5,'2023-02-15 03:03:00.000') INSERT INTO SELL VALUES(2,'7 Up',1676430240,2,'2023-02-15 03:04:00.000') INSERT INTO SELL VALUES(2,'7 Up',1676430300,1,'2023-02-15 03:05:00.000') INSERT INTO SELL VALUES(2,'7 Up',1676430360,1,'2023-02-15 03:06:00.000') INSERT INTO SELL VALUES(2,'7 Up',1676430420,1,'2023-02-15 03:07:00.000') INSERT INTO SELL VALUES(2,'7 Up',1676430480,2,'2023-02-15 03:08:00.000') INSERT INTO SELL VALUES(2,'7 Up',1676430540,2,'2023-02-15 03:09:00.000') INSERT INTO SELL VALUES(2,'7 Up',1676430600,2,'2023-02-15 03:10:00.000') INSERT INTO SELL VALUES(2,'7 Up',1676430660,6,'2023-02-15 03:11:00.000') INSERT INTO SELL VALUES(2,'7 Up',1676430720,5,'2023-02-15 03:12:00.000') INSERT INTO SELL VALUES(2,'7 Up',1676430780,3,'2023-02-15 03:13:00.000') INSERT INTO SELL VALUES(2,'7 Up',1676430840,1,'2023-02-15 03:14:00.000') INSERT INTO SELL VALUES(2,'7 Up',1676430900,2,'2023-02-15 03:15:00.000')

-

March 2, 2023 at 7:43 pm

Your timestamp column is a UNIX timestamp based on Seconds. Just use integer division to divide that number by 300 and you'll get the GROUP BY value for your Average.Edit: Based on a suggestion, we need some clarification as to what you want to do with times that end with ":00".

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

March 2, 2023 at 8:34 pm

Jeff Moden wrote:Your timestamp column is a UNIX timestamp based on Seconds. Just use integer division to divide that number by 300 and you'll get the GROUP BY value for your Average.

I don't think that is going to work for the OP's request. This will group to the first value in the grouping - so 0, 1, 2, 3 and 4 will group at 0 - 5, 6, 7, 8, 9 will group at 5, etc.

But - using yours as a basis, we can add 240 to Timestamp_int then divide by 300 for the group value. Then - return max timestamp_int, max timestamp_datetime and sum sellcount / 5.0.

Jeffrey Williams

“We are all faced with a series of great opportunities brilliantly disguised as impossible situations.”― Charles R. Swindoll

How to post questions to get better answers faster

Managing Transaction Logs -

March 2, 2023 at 8:43 pm

Jeffrey Williams wrote:Jeff Moden wrote:Your timestamp column is a UNIX timestamp based on Seconds. Just use integer division to divide that number by 300 and you'll get the GROUP BY value for your Average.

I don't think that is going to work for the OP's request. This will group to the first value in the grouping - so 0, 1, 2, 3 and 4 will group at 0 - 5, 6, 7, 8, 9 will group at 5, etc.

But - using yours as a basis, we can add 240 to Timestamp_int then divide by 300 for the group value. Then - return max timestamp_int, max timestamp_datetime and sum sellcount / 5.0.

I was just getting ready to post that question. What about time slots with :00 for minutes? My belief is that they should be first and that the OP has made an error in his post by not including one of those. We need to hear from the op.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

March 2, 2023 at 9:13 pm

I would agree - generally speaking I wouldn't roll up to the next increment and instead roll down - making each increment the start of the group instead of the end of the group.

The real difference is whether you use MIN or MAX to get the starting/ending value.

Jeffrey Williams

“We are all faced with a series of great opportunities brilliantly disguised as impossible situations.”― Charles R. Swindoll

How to post questions to get better answers faster

Managing Transaction Logs -

March 2, 2023 at 9:20 pm

Agreed. That would also decide if the last 5 minute period appeared to be in the next hour or not if hourly calculations from the original average calculations needed to be aggregated for charting/planing purposes or whatever.

This is one of those questions where the OP says "This is what I want" and it leaves me begging to ask "Yeah... but it that what you really need"???

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

March 2, 2023 at 10:10 pm

Jeff Moden wrote:This is one of those questions where the OP says "This is what I want" and it leaves me begging to ask "Yeah... but it that what you really need"???

See it all the time - they get the answer they 'wanted' and very soon come back looking for the actual answer they 'needed'.

Jeffrey Williams

“We are all faced with a series of great opportunities brilliantly disguised as impossible situations.”― Charles R. Swindoll

How to post questions to get better answers faster

Managing Transaction Logs -

March 2, 2023 at 11:39 pm

Hi, Records containing date between minute 01 to minute 05 per product must group to date HH:05:SS. Records containing date between minute 06 to minute 10 by product must group to date HH:10:SS.

Times ending with Minutes in 00 will be to be included in the date grouping when it is between HH:55:SS to HH:00:SS.

For example, 23:55:00 to 00:00:00.

When grouping, records between these dates must be dated 00:00:00

Understood?

- This reply was modified 3 years ago by diogo.venturatomas.

-

March 3, 2023 at 12:30 am

Yep...

Here's the cleaned up test data with a couple of extra rows for the "xx:00" proof...

-- DROP TABLE IF EXISTS dbo.Sell

GO

CREATE TABLE dbo.SELL

(

ProductID int

,[Description] varchar(10)

,Timestamp_int int

,SellCount int

,Timestamp_datetime datetime

)

;

GO

INSERT INTO dbo.SELL

(ProductID,[Description],Timestamp_int,SellCount,Timestamp_datetime)

VALUES (1,'Coke',1676429940,10,'2023-02-15 02:59:00.000') --Added for proof

,(1,'Coke',1676430000,8,'2023-02-15 03:00:00.000') --Added for proof

,(1,'Coke',1676430060,1,'2023-02-15 03:01:00.000')

,(1,'Coke',1676430120,2,'2023-02-15 03:02:00.000')

,(1,'Coke',1676430180,0,'2023-02-15 03:03:00.000')

,(1,'Coke',1676430240,2,'2023-02-15 03:04:00.000')

,(1,'Coke',1676430300,5,'2023-02-15 03:05:00.000')

,(1,'Coke',1676430360,4,'2023-02-15 03:06:00.000')

,(1,'Coke',1676430420,3,'2023-02-15 03:07:00.000')

,(1,'Coke',1676430480,1,'2023-02-15 03:08:00.000')

,(1,'Coke',1676430540,1,'2023-02-15 03:09:00.000')

,(1,'Coke',1676430600,1,'2023-02-15 03:10:00.000')

,(1,'Coke',1676430660,0,'2023-02-15 03:11:00.000')

,(1,'Coke',1676430720,0,'2023-02-15 03:12:00.000')

,(1,'Coke',1676430780,0,'2023-02-15 03:13:00.000')

,(1,'Coke',1676430840,7,'2023-02-15 03:14:00.000')

,(1,'Coke',1676430900,1,'2023-02-15 03:15:00.000')

,(2,'7 Up',1676430060,1,'2023-02-15 03:01:00.000')

,(2,'7 Up',1676430120,3,'2023-02-15 03:02:00.000')

,(2,'7 Up',1676430180,5,'2023-02-15 03:03:00.000')

,(2,'7 Up',1676430240,2,'2023-02-15 03:04:00.000')

,(2,'7 Up',1676430300,1,'2023-02-15 03:05:00.000')

,(2,'7 Up',1676430360,1,'2023-02-15 03:06:00.000')

,(2,'7 Up',1676430420,1,'2023-02-15 03:07:00.000')

,(2,'7 Up',1676430480,2,'2023-02-15 03:08:00.000')

,(2,'7 Up',1676430540,2,'2023-02-15 03:09:00.000')

,(2,'7 Up',1676430600,2,'2023-02-15 03:10:00.000')

,(2,'7 Up',1676430660,6,'2023-02-15 03:11:00.000')

,(2,'7 Up',1676430720,5,'2023-02-15 03:12:00.000')

,(2,'7 Up',1676430780,3,'2023-02-15 03:13:00.000')

,(2,'7 Up',1676430840,1,'2023-02-15 03:14:00.000')

,(2,'7 Up',1676430900,2,'2023-02-15 03:15:00.000')

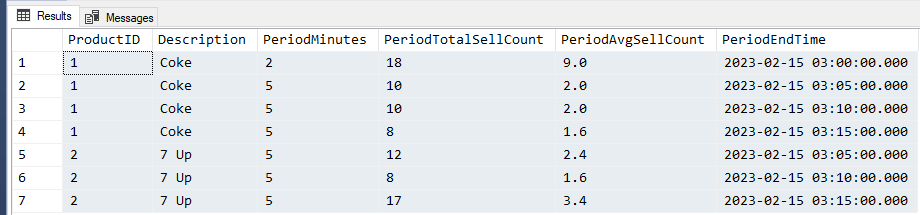

;Here's a solution with some extra columns you or the people requesting this might want (comment them out if you don't want them). Change the other column names to suit yourself.

SELECT ProductID

,[Description]

,PeriodMinutes = COUNT(*)

,PeriodTotalSellCount = SUM(SellCount)

,PeriodAvgSellCount = CONVERT(DECIMAL(9,1),AVG(SellCount+0.0))

,PeriodEndTime = MAX(Timestamp_datetime)

FROM dbo.Sell

GROUP BY ProductID, [Description], (Timestamp_int-60)/300

ORDER BY ProductID,PeriodEndTime

;Understood? 😀

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

March 3, 2023 at 6:03 pm

Modified to do the group by using the "Timestamp_datetime" field in case someone has the scenario where the time stamp is not already available as a seconds value. I just calculate the seconds within the date and applied the same logic used on the prior solution. I use the date and the seconds within the date divided by 300 in the group clause.

SELECT ProductID

,[Description]

,PeriodMinutes = COUNT(*)

,PeriodTotalSellCount = SUM(SellCount)

,PeriodAvgSellCount = CONVERT(DECIMAL(9,1),AVG(SellCount+0.0))

,PeriodEndTime = MAX(Timestamp_datetime)

FROM dbo.Sell

GROUP BY ProductID

, [Description]

, cast(Timestamp_datetime as date)

, ( datepart( hh, Timestamp_datetime) * 3600 + datepart( mi, Timestamp_datetime) * 60 + datepart( ss, Timestamp_datetime) - 60 ) / 300

ORDER BY ProductID,PeriodEndTime -

March 3, 2023 at 11:34 pm

aitorzur@hotmail.com wrote:Modified to do the group by using the "Timestamp_datetime" field in case someone has the scenario where the time stamp is not already available as a seconds value. I just calculate the seconds within the date and applied the same logic used on the prior solution. I use the date and the seconds within the date divided by 300 in the group clause.

SELECT ProductID

,[Description]

,PeriodMinutes = COUNT(*)

,PeriodTotalSellCount = SUM(SellCount)

,PeriodAvgSellCount = CONVERT(DECIMAL(9,1),AVG(SellCount+0.0))

,PeriodEndTime = MAX(Timestamp_datetime)

FROM dbo.Sell

GROUP BY ProductID

, [Description]

, cast(Timestamp_datetime as date)

, ( datepart( hh, Timestamp_datetime) * 3600 + datepart( mi, Timestamp_datetime) * 60 + datepart( ss, Timestamp_datetime) - 60 ) / 300

ORDER BY ProductID,PeriodEndTimeGood idea... I should have considered that eventuality. I've recently had to do a fair bit of work with UNIX Timestamps and I was biased towards using seconds because of that.

If you want to base it on the DATETIME column (especially because all entries are at a whole minute), you can do just calculate the number of minutes that have passed since the 1900-01-01 DATETIME epoch and keep it even simpler.

--===== Solve the problem using integer math on the Timestamp_datetime column.

SELECT ProductID

,[Description]

,PeriodMinutes = COUNT(*)

,PeriodTotalSellCount = SUM(SellCount)

,PeriodAvgSellCount = CONVERT(DECIMAL(9,1),AVG(SellCount+0.0))

,PeriodEndTime = CONVERT(CHAR(16),MAX(Timestamp_datetime),120)

FROM dbo.Sell

GROUP BY ProductID, [Description], (DATEDIFF(mi,0,Timestamp_datetime)-1)/5

ORDER BY ProductID, PeriodEndTime

;Exactly the same integer math methods... just using different units of measure.

Of course, since we're using the "end date" for each 5 minute group in this example, you might have to consider the seconds in the end date entries a little differently depending on if you want them included in the minute group of 5 or in the next group if the actual entries aren't based on whole minutes. 🙂

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally)

Viewing 11 posts - 1 through 11 (of 11 total)

You must be logged in to reply to this topic. Login to reply