Performance improves after reboot

-

February 22, 2017 at 9:30 am

Jeff,

Agree. I already monitoring the content of TEMPDB and try to identify the biggest performance "killers".

I use this query below.

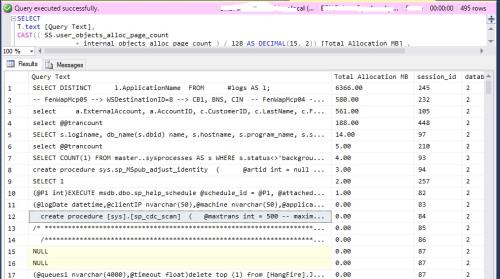

Should I focus on biggest [Total Allocation MB] queries ?SELECT

T.text [Query Text],

CAST(( SS.user_objects_alloc_page_count

+ internal_objects_alloc_page_count ) / 128 AS DECIMAL(15, 2)) [Total Allocation MB] ,

SS.session_id ,

SS.database_id ,

CAST(SS.user_objects_alloc_page_count / 128 AS DECIMAL(15, 2)) [Total Allocation User Objects MB] ,

CAST(( SS.user_objects_alloc_page_count

- SS.user_objects_dealloc_page_count ) / 128 AS DECIMAL(15, 2)) [Net Allocation User Objects MB] ,

CAST(SS.internal_objects_alloc_page_count / 128 AS DECIMAL(15, 2)) [Total Allocation Internal Objects MB] ,

CAST(( SS.internal_objects_alloc_page_count

- SS.internal_objects_dealloc_page_count ) / 128 AS DECIMAL(15,

2)) [Net Allocation Internal Objects MB] ,

CAST(( SS.user_objects_alloc_page_count

+ SS.internal_objects_alloc_page_count

- SS.internal_objects_dealloc_page_count

- SS.user_objects_dealloc_page_count ) / 128 AS DECIMAL(15, 2)) [Net Allocation MB]

FROM sys.dm_db_session_space_usage SS

LEFT JOIN sys.dm_exec_connections CN ON CN.session_id = SS.session_id

OUTER APPLY sys.dm_exec_sql_text(CN.most_recent_sql_handle) T

ORDER BY

CAST(( SS.user_objects_alloc_page_count

+ internal_objects_alloc_page_count ) / 128 AS DECIMAL(15, 2)) descRESULTS

-

February 22, 2017 at 12:09 pm

RVO - Tuesday, February 21, 2017 1:03 PMWe just had a meeting with Infrastructure team.

They say there is no evidence of "main resources throttled or yanked out from under them".

They say they monitor it. It never happened.

If I still insist - it will sound like I don't trust them . . .To be perfectly blunt about it, it doesn't matter what it sounds like.

You're a professional, they're professionals. Don't let it be personal. Your jobs, collectively, are to make the system work. If that means asking them to double or even triple check the hardware, then so be it; that is their job. If they take it personally, they're in the wrong business.

However, that being said, as a few others have already mentioned at this point you really ought to be looking into bringing in a performance specialist. Having read through the thread, you've already been given a ton of things to look at and at this point, it's clear that you have deeper problems on your system than you have the experience to handle. No offense intended, we've all been there at some point in our careers. Part of being really good at this line of work is knowing when to seek help. There is a ton of knowledge on this forum - Jeff and Kevin are two people I've learned a lot from over the years, along with others who post here - but there is a limit to what you can do over a forum.

I would urge you to bring in someone who specializes in SQL performance, who really knows what they're looking for. You could probably get this resolved in a couple of hours. It'll cost some money, yes, but really not much when you weigh it against the time that you and other members of your staff spend dealing with the performance issues you're facing, let alone any costs incurred because of the issues themselves.

-

February 22, 2017 at 12:35 pm

cphite - Wednesday, February 22, 2017 12:09 PMTo be perfectly blunt about it, it doesn't matter what it sounds like.You're a professional, they're professionals. Don't let it be personal. Your jobs, collectively, are to make the system work. If that means asking them to double or even triple check the hardware, then so be it; that is their job. If they take it personally, they're in the wrong business.

However, that being said, as a few others have already mentioned at this point you really ought to be looking into bringing in a performance specialist. Having read through the thread, you've already been given a ton of things to look at and at this point, it's clear that you have deeper problems on your system than you have the experience to handle. No offense intended, we've all been there at some point in our careers. Part of being really good at this line of work is knowing when to seek help. There is a ton of knowledge on this forum - Jeff and Kevin are two people I've learned a lot from over the years, along with others who post here - but there is a limit to what you can do over a forum.

I would urge you to bring in someone who specializes in SQL performance, who really knows what they're looking for. You could probably get this resolved in a couple of hours. It'll cost some money, yes, but really not much when you weigh it against the time that you and other members of your staff spend dealing with the performance issues you're facing, let alone any costs incurred because of the issues themselves.

I would guess it's not that simple though. RVO said at the beginning that they were an ETL developer, not a DBA, and was asked to look at the issue because the other DBAs were "too busy". I'd be more inclined to let it run like crap as it seems to be a business decision by the DBAs who should be addressing this. RVO seems to be pretty bright on the DBA side of things for being an ETL developer, I'm fairly impressed. But RVO likely shouldn't be in the middle of this in the first place. It just seems a bit too convenient that those who should be working on this are too busy. That seems to be more of the problem in terms of addressing this.

Sue

-

February 22, 2017 at 8:07 pm

Sue_H - Wednesday, February 22, 2017 12:35 PMcphite - Wednesday, February 22, 2017 12:09 PMTo be perfectly blunt about it, it doesn't matter what it sounds like.You're a professional, they're professionals. Don't let it be personal. Your jobs, collectively, are to make the system work. If that means asking them to double or even triple check the hardware, then so be it; that is their job. If they take it personally, they're in the wrong business.

However, that being said, as a few others have already mentioned at this point you really ought to be looking into bringing in a performance specialist. Having read through the thread, you've already been given a ton of things to look at and at this point, it's clear that you have deeper problems on your system than you have the experience to handle. No offense intended, we've all been there at some point in our careers. Part of being really good at this line of work is knowing when to seek help. There is a ton of knowledge on this forum - Jeff and Kevin are two people I've learned a lot from over the years, along with others who post here - but there is a limit to what you can do over a forum.

I would urge you to bring in someone who specializes in SQL performance, who really knows what they're looking for. You could probably get this resolved in a couple of hours. It'll cost some money, yes, but really not much when you weigh it against the time that you and other members of your staff spend dealing with the performance issues you're facing, let alone any costs incurred because of the issues themselves.

I would guess it's not that simple though. RVO said at the beginning that they were an ETL developer, not a DBA, and was asked to look at the issue because the other DBAs were "too busy". I'd be more inclined to let it run like crap as it seems to be a business decision by the DBAs who should be addressing this. RVO seems to be pretty bright on the DBA side of things for being an ETL developer, I'm fairly impressed. But RVO likely shouldn't be in the middle of this in the first place. It just seems a bit too convenient that those who should be working on this are too busy. That seems to be more of the problem in terms of addressing this.

Sue

Spot on, Sue. My original post, which I didn't end up posting because it sounded too harsh, was of a very similar nature. I hate what I call "Ivory Tower" DBAs that don't even know how to get the current date/time using T-SQL, never mind troubleshoot a performance problem.

The only thing that differs between what you wrote and what I think is that I'd like to help RVO outshine those deadbeats especially after what he previously said, which I quote below...

I am not DBA. I am an ETL Developer (consultant) who was asked to help.

DBA were not able so far to fix performance issue.

In my opinion they never really tried.Heh... and no... I'm definitely NOT anti-DBA... I'm also a DBA.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 22, 2017 at 8:37 pm

RVO - Wednesday, February 22, 2017 9:30 AMJeff,

Agree. I already monitoring the content of TEMPDB and try to identify the biggest performance "killers".

I use this query below.

Should I focus on biggest [Total Allocation MB] queries ?SELECT

T.text [Query Text],

CAST(( SS.user_objects_alloc_page_count

+ internal_objects_alloc_page_count ) / 128 AS DECIMAL(15, 2)) [Total Allocation MB] ,

SS.session_id ,

SS.database_id ,

CAST(SS.user_objects_alloc_page_count / 128 AS DECIMAL(15, 2)) [Total Allocation User Objects MB] ,

CAST(( SS.user_objects_alloc_page_count

- SS.user_objects_dealloc_page_count ) / 128 AS DECIMAL(15, 2)) [Net Allocation User Objects MB] ,

CAST(SS.internal_objects_alloc_page_count / 128 AS DECIMAL(15, 2)) [Total Allocation Internal Objects MB] ,

CAST(( SS.internal_objects_alloc_page_count

- SS.internal_objects_dealloc_page_count ) / 128 AS DECIMAL(15,

2)) [Net Allocation Internal Objects MB] ,

CAST(( SS.user_objects_alloc_page_count

+ SS.internal_objects_alloc_page_count

- SS.internal_objects_dealloc_page_count

- SS.user_objects_dealloc_page_count ) / 128 AS DECIMAL(15, 2)) [Net Allocation MB]

FROM sys.dm_db_session_space_usage SS

LEFT JOIN sys.dm_exec_connections CN ON CN.session_id = SS.session_id

OUTER APPLY sys.dm_exec_sql_text(CN.most_recent_sql_handle) T

ORDER BY

CAST(( SS.user_objects_alloc_page_count

+ internal_objects_alloc_page_count ) / 128 AS DECIMAL(15, 2)) descRESULTS

Not a bad place to start... especially on that first one. See that "SELECT DISTINCT"? That's normally a pretty good indication that someone thought "set based" meant "all in one query" and ended up with an accidental "many-to-many" join that caused a large number of duplicates to be formed behind the scenes.

In the absence of other tools, if you open the "Object Explorer" in SSMS (reminder, press the {f8} key to get there if it's not already open), right click on the instance at the top of the window, select reports and continue to drill down to the "performance" related reports, find the two concerning IO and CPU. Those will show you the top 10 consumers of IO and CPU. The most hungry use of resources isn't always the longest running code. Sometimes it's fairly short running code that is executed 10's of thousands of times per hour. Don't forget that the values on those reports are cumulative since the last time cache was cleared or since the last time the server was rebooted. If you want to reset it without rebooting, pick a quiet time during the day and execute a DBCC FREEPROCCACHE on the box. The first people to use queries will see some slowness because all queries will need to recompile. Before you do that, though, see the next paragraph below.

There's also "hidden" code that costs a whole lot to run. That's usually code that creates one execution plan per run and won't show up on the reports I just told you about. I've recently run into such a problem myself. I had previously identified the code as a possible future performance issue. No one believed me especially since the code "only" took 100 ms per run to execute (which is actually ridiculously long considering how short the code is and how many times per hour it's executed, IMHO). What no one else realized was that not only would the code recompile every time it was executed, it would take 2 -17 seconds to compile every time. I proved it with the code from the following article.

https://www.sqlskills.com/blogs/jonathan/identifying-high-compile-time-statements-from-the-plan-cache/So, there are actually 4 areas to study... TempDB hogs, Long running code, code that runs frequently, and repetitive code that recompiles every time it's used. If you can fix just the top 1 or 2 from each of those categories, you'll be amazed at how well the server starts to work. Don't stop, though. Get through at least the top 5 of each category and then be ever vigilant. Developers can write a ton of performance challenged code in a hurry because most of it won't be long code.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 23, 2017 at 9:26 am

Jeff Moden - Wednesday, February 22, 2017 8:07 PMSpot on, Sue. My original post, which I didn't end up posting because it sounded too harsh, was of a very similar nature. I hate what I call "Ivory Tower" DBAs that don't even know how to get the current date/time using T-SQL, never mind troubleshoot a performance problem.

The only thing that differs between what you wrote and what I think is that I'd like to help RVO outshine those deadbeats

Yup, we're coming from the same place across the board. I keep following the thread as I really want RVO to put those twits in their place.

And I love the follow up response and the four areas of study. I suspect there are others in this thread that want to see RVO come through on this for the same reasons.

DBAs like that drive me nuts. And I'm one too.Sue

-

February 23, 2017 at 9:45 am

Have you got round to rebalancing those tempdb files yet?

-

February 23, 2017 at 10:53 am

adb2303 - Thursday, February 23, 2017 9:45 AMHave you got round to rebalancing those tempdb files yet?I almost wouldn't bother with that until it's been determined what's causing them to grow to such a large size. 120GB worth of TempDB files is a bit insane even for a multi-terabyte database. RVO really needs to find out what's causing that first. Rebalancing certainly won't hurt but they're so close in size that I don't believe it'll actually matter.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 23, 2017 at 12:46 pm

Jeff Moden - Thursday, February 23, 2017 10:53 AMadb2303 - Thursday, February 23, 2017 9:45 AMHave you got round to rebalancing those tempdb files yet?I almost wouldn't bother with that until it's been determined what's causing them to grow to such a large size. 120GB worth of TempDB files is a bit insane even for a multi-terabyte database. RVO really needs to find out what's causing that first. Rebalancing certainly won't hurt but they're so close in size that I don't believe it'll actually matter.

wasn't there one that was 2GB vs other's around 30GB? SQL will probably be exclusively hitting that one - RVO could check by looking at the virtual file stats DMV to see where all the IO is. Although, I do agree 120GB tempdb files is excessive. There's obviously no silver bullet here... lots of things need sorting.

-

February 23, 2017 at 12:56 pm

adb2303 - Thursday, February 23, 2017 12:46 PMJeff Moden - Thursday, February 23, 2017 10:53 AMadb2303 - Thursday, February 23, 2017 9:45 AMHave you got round to rebalancing those tempdb files yet?I almost wouldn't bother with that until it's been determined what's causing them to grow to such a large size. 120GB worth of TempDB files is a bit insane even for a multi-terabyte database. RVO really needs to find out what's causing that first. Rebalancing certainly won't hurt but they're so close in size that I don't believe it'll actually matter.

wasn't there one that was 2GB vs other's around 30GB? SQL will probably be exclusively hitting that one - RVO could check by looking at the virtual file stats DMV to see where all the IO is. Although, I do agree 120GB tempdb files is excessive. There's obviously no silver bullet here... lots of things need sorting.

Ah... you're correct. Damned eyes... I read the 2GB file as the LDF file probably because that's the way I expected it.

https://www.sqlservercentral.com/Forums/FindPost1857742.aspxMight be worth fixing now after all.

--Jeff Moden

RBAR is pronounced "ree-bar" and is a "Modenism" for Row-By-Agonizing-Row.

First step towards the paradigm shift of writing Set Based code:

________Stop thinking about what you want to do to a ROW... think, instead, of what you want to do to a COLUMN.Change is inevitable... Change for the better is not.

Helpful Links:

How to post code problems

How to Post Performance Problems

Create a Tally Function (fnTally) -

February 23, 2017 at 1:06 pm

Jeff Moden - Thursday, February 23, 2017 12:56 PMadb2303 - Thursday, February 23, 2017 12:46 PMJeff Moden - Thursday, February 23, 2017 10:53 AMadb2303 - Thursday, February 23, 2017 9:45 AMHave you got round to rebalancing those tempdb files yet?I almost wouldn't bother with that until it's been determined what's causing them to grow to such a large size. 120GB worth of TempDB files is a bit insane even for a multi-terabyte database. RVO really needs to find out what's causing that first. Rebalancing certainly won't hurt but they're so close in size that I don't believe it'll actually matter.

wasn't there one that was 2GB vs other's around 30GB? SQL will probably be exclusively hitting that one - RVO could check by looking at the virtual file stats DMV to see where all the IO is. Although, I do agree 120GB tempdb files is excessive. There's obviously no silver bullet here... lots of things need sorting.

Ah... you're correct. Damned eyes... I read the 2GB file as the LDF file probably because that's the way I expecte it.

https://www.sqlservercentral.com/Forums/FindPost1857742.aspxMight be worth fixing now after all.

I already mentioned the unbalanced files, that they needed to be sized and growth-factored identically and explained why way back in this thread. 😀

Best,

Kevin G. Boles

SQL Server Consultant

SQL MVP 2007-2012

TheSQLGuru on googles mail service -

February 23, 2017 at 1:41 pm

Jeff,

Just a reminder.

TEMPDB size is 225 GB. Not 120GB.One of the TEMPDB files size is actually not close at all.

It's around 2GB. All others are around 30GB.This coming Sunday, February 26 between 12AM and 6 AM, DBA's will upgrade PRD to

SQL Server 2014SP2 CU3 - 12.0.5538.0

Because it needs SQL Service rastart - I thought to use this opportunity for TEMPDB resizing.My plan was:

1) Shrink TEMPDB files, let;s say to 5GB each.

2) Grow TEMPDB files to 10GB and set Autogrowth=1GB

3) Ensure Even Autogrowth:DBCC TRACEON(1117)

GO

4) Schedule every 1 hour job to log TEMPDB files sizeBy finding time slot when TEMPDB files grew - I thought it would help me to find those "bad" queries that forces TEMPDB grow.

By the way,

I prepared all the scripts and setup a meeting today with DBA's but they didn't accept my invitation.

I guess TEMPDB resizing will not happen this weekend. -

February 23, 2017 at 1:47 pm

Jeff,

"...SQL will probably be exclusively hitting that one - RVO could check by looking at the virtual file stats DMV to see where all the IO is...."Can you please give me a query to find virtual file stats for tempdb files?

-

February 23, 2017 at 1:48 pm

RVO - Wednesday, February 22, 2017 8:01 AMPerformance did not get substantially worse yet since last reboot on January 29th.

My guess one of these things could help:

----We ran UPDATE STATISTICS 2-3 times since last reboot

----Moved Transaction Log file of one of busy databases to a proper drive (before it was sitting together with data files)Maybe I was wrong when said performance gradually gets worse.

I'm afraid it might be pretty sudden.

Like 2-3 days of slower than usual and then big slowdown.

We don't see a big Disk latency now. Like we saw last time before reboot.

Overall it stays on the same level it was right after reboot.Noticed the thing about performance being suddenly worse instead of gradually...

We had an issue a few months back with one of our servers, where the VM host was writing a journal file - it basically tracks all of the changes that have occurred on the disk since the last snapshot. Anyway, once the file would get to a certain size, things would very abruptly slow to a crawl on the SQL instance. Our hardware team explained that it was because on the storage it was having to scan through this huge file for basically every transaction. Sorry if that's vague but I'm not a hardware storage guy. In any event, they disabled the journal and we haven't had the problem since.

-

February 23, 2017 at 3:18 pm

RVO - Thursday, February 23, 2017 1:47 PMJeff,

"...SQL will probably be exclusively hitting that one - RVO could check by looking at the virtual file stats DMV to see where all the IO is...."Can you please give me a query to find virtual file stats for tempdb files?

At a basic level on that DMV you can just to:

select * from sys.dm_io_virtual_file_stats(2,NULL) ;The first argument is the database id, If you want to capture for all DBs, just set it to NULL. 2 is tempdb.

Usually you want to get baseline and then capture. You can just insert into a table( I'd do a static table for now just to stay out of tempdb) for the baseline and then insert the same into the table, whenever. If you want to see over a half hour, run the baseline and then just insert again 30 mins later...that kind of thing.

I just did this one for you which should work...find some database to create the table, and then just execute the insert into. Play around with it, see if that works for you and let us know if it doesn't. You can just truncate the table after playing around before you start doing someone's job for them...oh sorry I mean before you want to capture for analyzing. No doubt you'll get the gist - it's just grabbing tempdb which you can change:CREATE TABLE TempDB_IO

(DBName sysname,

file_id smallint,

num_of_reads bigint,

num_of_bytes_read bigint,

io_stall_read_ms bigint,

num_of_writes bigint,

num_of_bytes_written bigint,

io_stall_write_ms bigint,

io_stall bigint,

size_on_disk_bytes bigint,

CaptureDateTime datetime)INSERT INTO TempDB_IO

SELECT db_name(database_id) as DBName,

file_id,

num_of_reads,

num_of_bytes_read,

io_stall_read_ms,

num_of_writes,

num_of_bytes_written,

io_stall_write_ms,

io_stall,

size_on_disk_bytes,

GETDATE()as CaptureDateTime

FROM sys.dm_io_virtual_file_stats(2,NULL) ;Sue

Viewing 15 posts - 61 through 75 (of 114 total)

You must be logged in to reply to this topic. Login to reply