INTRODUCTION

Statistics contain information about the data, in particular how it is distributed inside a table. They act as a representation of the table: the optimizer refers to the statistics to generate a plan rather than scanning the whole table. Statistics are much smaller than the data they describe and, as such, allow for faster plan generation.

The optimizer obtains cardinality estimates (the number of rows within a range or matching a predicate) from statistics. Outdated statistics no longer represent the data distribution correctly, so they give the optimizer incorrect information and will most probably lead to poor plans.

Another point to stress: to build a plan, the optimizer consults the statistics, not the base table.

This is also the basic principle behind hypothetical indexes, a more interesting topic in its own right.

A statistics object can be created manually with the CREATE STATISTICS command. One is also created automatically whenever an index is created, and it is refreshed whenever the index is rebuilt. So if you create an index, a corresponding statistics object is created with it. However, these are NOT called automatically created statistics (even though they are created automatically); they are known as index statistics. Now suppose a query filters on columns that have no index (and therefore no index statistics) and no manually created statistics either. In that case, SQL Server automatically creates a statistics object on each such column so it can be reused the next time a similar query arrives. These are the statistics referred to as AUTOMATICALLY created. (This only happens when the database has the AUTO_CREATE_STATISTICS option turned on, as explained below.)

There are 3 types of statistics in a SQL Server database.

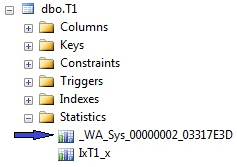

1) Statistics created along with an index. These statistics have the same name as the index.

2) Statistics created automatically by the optimizer (column statistics). Their names start with _WA_Sys_.

3) User-defined statistics, created by the DBA with the CREATE STATISTICS command.

We seldom use the CREATE STATISTICS command because it is not easy to know which statistics the optimizer would benefit from. It is also not possible to tell which statistics the optimizer uses to generate plans and which are never used: there is no statistics counterpart to sys.dm_db_index_usage_stats, which tracks index usage.

Auto-created statistics are easy to recognize by their names, which always start with “_WA_Sys_”. The sys.stats catalog view has an auto_created column that distinguishes automatically created statistics from manually created ones. For index statistics this column shows 0 (false), even though those statistics are generated automatically as well.

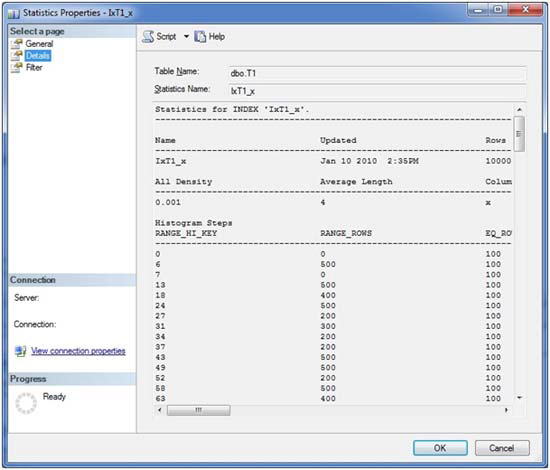

If you open the properties window of a statistics object, the “General” page shows which columns are included and when the statistics were last updated. (You can also trigger an immediate statistics update by selecting the corresponding check box.) The “Details” page is more interesting: it shows detailed information about the data distribution.

If you do not see the histogram there, you may have opened an automatically created statistics object, whose histogram the GUI does not display. Use the following command to view the histogram instead:

```sql
DBCC SHOW_STATISTICS ('table_name', 'stats_name');
```

Right below the name of the statistics, there are three main areas of information:

- General information: when the statistics were created or last updated, the total number of rows in the table, and the number of rows that were sampled to build the histogram.

- All Density: the density of the columns in the statistics object, calculated as 1 / (number of distinct values). For multicolumn statistics there is one value per column prefix: the first column alone, the first two columns together, and so on.

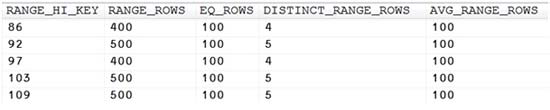

- Histogram: a summary of the data collected (possibly by sampling) from the underlying table or index. From it, the optimizer can derive a reasonably accurate picture of how a column's values are distributed. The histogram is built and maintained only for the first column of each statistics object, even when the statistics cover more than one column. It never has more than 200 steps (rows); the actual number of steps is shown in the general information section. The histogram columns mean the following:

RANGE_HI_KEY

This is the column value at the upper interval boundary of the histogram step.

RANGE_ROWS

This is the number of rows whose value falls inside the step, excluding the upper boundary: that is, rows greater than the previous step's RANGE_HI_KEY and less than this step's RANGE_HI_KEY.

EQ_ROWS

This is the number of rows whose value equals the upper boundary (RANGE_HI_KEY). Adding EQ_ROWS and RANGE_ROWS gives the total number of rows in the step, up to and including the upper boundary. (The lower boundary belongs to the previous step and is counted in that step's EQ_ROWS.)

DISTINCT_RANGE_ROWS

This is the number of distinct values inside the step, excluding the upper boundary.

AVG_RANGE_ROWS

This is the average number of rows per distinct value inside the step, again excluding the upper boundary. In other words, it is RANGE_ROWS / DISTINCT_RANGE_ROWS.
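To make the histogram columns concrete, here is a small Python model. It is an illustration under simplifying assumptions, not SQL Server's actual algorithm: real step boundaries are chosen by SQL Server, and sampling, NULLs and the 200-step limit are ignored. The hypothetical `build_histogram` computes RANGE_ROWS, EQ_ROWS, DISTINCT_RANGE_ROWS and AVG_RANGE_ROWS for boundaries you pass in, and the hypothetical `estimate_equals` shows how an equality predicate can be estimated from them: a value equal to a RANGE_HI_KEY gets that step's EQ_ROWS, and a value strictly inside a step gets the step's AVG_RANGE_ROWS.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Step:
    range_hi_key: int
    range_rows: int           # rows strictly between the previous key and range_hi_key
    eq_rows: int              # rows equal to range_hi_key
    distinct_range_rows: int  # distinct values strictly inside the step

    @property
    def avg_range_rows(self) -> float:
        # RANGE_ROWS / DISTINCT_RANGE_ROWS; this toy model returns 0.0 for an empty step
        if self.distinct_range_rows == 0:
            return 0.0
        return self.range_rows / self.distinct_range_rows


def build_histogram(values, boundaries):
    """Build one Step per boundary (hypothetical helper; boundaries are given, not chosen)."""
    counts = Counter(values)
    steps, prev = [], None
    for hi in sorted(boundaries):
        inside = {v: c for v, c in counts.items()
                  if (prev is None or v > prev) and v < hi}
        steps.append(Step(hi, sum(inside.values()), counts.get(hi, 0), len(inside)))
        prev = hi
    return steps


def estimate_equals(steps, value) -> float:
    """Estimated row count for `column = value`."""
    for step in steps:
        if value == step.range_hi_key:
            return float(step.eq_rows)
        if value < step.range_hi_key:
            return step.avg_range_rows  # falls strictly inside this step
    return 0.0  # above the last RANGE_HI_KEY


data = [1, 1, 2, 3, 3, 3, 5, 7, 7, 8, 10, 10, 10, 10]
hist = build_histogram(data, [1, 5, 10])
for s in hist:
    print(s.range_hi_key, s.range_rows, s.eq_rows, s.distinct_range_rows, s.avg_range_rows)
print(estimate_equals(hist, 3))  # 2.0, although the true count is 3
```

The estimate for the value 3 (2.0 rows against 3 actual rows) shows that AVG_RANGE_ROWS is only an average: values inside a step are treated as evenly distributed.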

Keep in mind that the histogram is only ever created for the leading column, so even multicolumn statistics have just one histogram. This is one reason why column order matters when you create a composite index.
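The All Density formula and the point about column order can be illustrated with a few lines of Python. This is a simplified sketch that ignores sampling and NULL handling; the sample rows and the `density_vector` and `estimate_unknown_equality` helpers are hypothetical. Density is computed for each left-based column prefix, and multiplying a density by the row count gives the kind of estimate used for an equality predicate whose value is unknown at compile time (for example, a local variable).

```python
def density_vector(rows, columns):
    """Return (prefix, All density) for each left-based prefix of `columns`."""
    if not rows:
        return []
    vector = []
    for n in range(1, len(columns) + 1):
        prefix = tuple(columns[:n])
        # 1 / (number of distinct value combinations for this prefix)
        distinct = {tuple(row[c] for c in prefix) for row in rows}
        vector.append((prefix, 1 / len(distinct)))
    return vector


def estimate_unknown_equality(row_count, density):
    """Estimated rows for `prefix = <unknown value>`: density * row count."""
    return density * row_count


rows = [
    {"city": "Oslo", "zip": "0150"},
    {"city": "Oslo", "zip": "0151"},
    {"city": "Oslo", "zip": "0150"},
    {"city": "Bergen", "zip": "5003"},
]

for prefix, density in density_vector(rows, ["city", "zip"]):
    print(prefix, round(density, 3), estimate_unknown_equality(len(rows), density))

# Reversing the column order changes the leading-column density
# (and, in SQL Server, the column the histogram is built on).
print(density_vector(rows, ["zip", "city"])[0])
```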

CREATING STATS

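Statistics can be created (one at a time) with the CREATE STATISTICS command, as below. Each statistics object needs its own name; dbo.MyTable, col1 and col2 are placeholders:

```sql
CREATE STATISTICS stat_name      ON dbo.MyTable (col1, col2);                        -- default sample
CREATE STATISTICS stat_name_full ON dbo.MyTable (col1, col2) WITH FULLSCAN;          -- read every row
CREATE STATISTICS stat_name_rows ON dbo.MyTable (col1, col2) WITH SAMPLE 1000 ROWS;  -- fixed row count
CREATE STATISTICS stat_name_pct  ON dbo.MyTable (col1, col2) WITH SAMPLE 50 PERCENT; -- percentage of rows
```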

If you don’t specify the sample size, the default setting is used.

Here is how the default sampling rate is determined:

1) If the table is smaller than 8 MB, the statistics are built with a full scan.

2) If the table is larger than 8 MB, a non-linear algorithm is used: the larger the table, the lower the percentage sampled.

For example, with 1,000,000 rows it would use a sampling rate of 30%, but when the number of rows increases to 8,000,000 it would reduce the sampling rate to 10%. The default sampling rate is not under the DBA's control; SQL Server decides it.

Alternatively, you can have statistics created automatically (these also use the default sampling rate), which is generally the preferred choice.

You can view or change the current setting under Database -> Properties -> Options, or use the following command (dbname is a placeholder):

```sql
ALTER DATABASE dbname SET AUTO_CREATE_STATISTICS ON;  -- or OFF
```

If you turn off the automatic generation option, your statistics will no longer be created for you by the optimizer. Statistics that belong to an index are not affected by this option, as those statistics will always be automatically created during index generation or rebuild.

If you do not want to create statistics one by one with CREATE STATISTICS, consider the following option:

```sql
EXEC sp_createstats;
GO
```

This stored procedure creates single-column statistics on the columns of your tables that are not already the first column of an existing statistics object.

You can use this procedure together with AUTO_CREATE_STATISTICS set to ON. But then you may end up with a bunch of statistics that never get used yet still have to be maintained (kept in sync with the source data). Since we have no usage information for statistics, it is almost impossible to detect which of them the optimizer takes advantage of.

Keep in mind that automatically created statistics are always single-column statistics. If you want to take advantage of multi-column statistics, you have to create them manually. Determining which multi-column statistics will benefit your query is quite tricky, and I will leave it for some other time.

UPDATE STATS

Statistics represent a snapshot of data samples that have come from a table or index at a particular time. They must be kept in sync with the original source data in order to allow suitable estimations to be made.

Statistics contain only redundant data that has to be kept in sync with the original table or index data. SQL Server offers two ways of keeping your statistics (almost) in sync: you can leave the optimizer in charge of this task and rely on automatic updates, or you can update your statistics manually.

The following commands turn automatic updates on or off (dbname is a placeholder):

```sql
ALTER DATABASE dbname SET AUTO_UPDATE_STATISTICS ON;        -- or OFF
ALTER DATABASE dbname SET AUTO_UPDATE_STATISTICS_ASYNC ON;  -- or OFF
```

Automatic updates work in one of two modes:

Synchronous updates. If the optimizer recognizes any stale statistics, when creating or validating a query plan, those statistics will be updated immediately, before the query plan is created or recompiled. This method ensures that query plans are always based on current statistics. The drawback here is that plan generation and query execution will be delayed, because they have to wait for the completion of all necessary statistics’ updates.

Asynchronous updates. In this case, the query plan is generated immediately, regardless of any stale statistics. The optimizer does not wait for the latest statistics; it uses the statistics as they are at that moment. Stale statistics are still detected, however, and their update is triggered in the background. This mode gives faster plan generation, but may produce sub-optimal execution plans, since compilation may be based on stale statistics. Asynchronous updates are only an additional option of automatic statistics updates, so this setting takes effect only when AUTO_UPDATE_STATISTICS is also ON.

How SQL Server determines which Statistics are STALE

SQL Server tracks data changes on columns that are included in statistics by maintaining a counter, the so-called colmodctr (introduced in SQL Server 2005), for those columns. The colmodctr value is incremented every time the column is modified, and every statistics update resets it to zero.

By the use of the colmodctr column and the total number of rows in a table, SQL Server is able to apply the following policies to determine stale statistics:

- If the table had more than 500 rows when the statistics were last updated, the statistics are considered stale once the number of changes reaches 500 plus 20% of that row count.

- If the table had 500 rows or fewer at the last statistics update, the statistics are stale once at least 500 modifications have been made.

- Whenever the table's row count goes from 0 to a number greater than zero, all of the table's statistics become outdated.

- Temporary tables have an additional threshold: while a temporary table has fewer than 6 rows, its statistics are invalidated after 6 modifications.
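The thresholds listed above can be written as a small Python predicate. This is a sketch of the classic rules only, with hypothetical function and parameter names. SQL Server applies them to the leading column's modification counter, and newer versions (SQL Server 2016 at database compatibility level 130 or higher) use a lower, dynamic threshold for large tables that is not modeled here. The temporary-table rule is modeled as an extra 6-modification threshold that applies while the table had fewer than 6 rows.

```python
def stats_are_stale(rows_at_last_update: int, modifications: int,
                    current_rows: int, is_temp_table: bool = False) -> bool:
    """Classic auto-update staleness test for one statistics object (sketch)."""
    # Table went from empty to non-empty: every statistic on it is outdated.
    if rows_at_last_update == 0 and current_rows > 0:
        return True
    # Extra threshold for small temporary tables.
    if is_temp_table and rows_at_last_update < 6:
        return modifications >= 6
    # Small tables: a flat 500 modifications.
    if rows_at_last_update <= 500:
        return modifications >= 500
    # Larger tables: 500 + 20% of the row count at the last update.
    # 5 * mods >= 2500 + rows is the same test in exact integer arithmetic.
    return 5 * modifications >= 2500 + rows_at_last_update


for rows, mods in [(300, 499), (300, 500), (10_000, 2_499), (10_000, 2_500), (1_000_000, 150_000)]:
    print(f"{rows:>9} rows, {mods:>7} changes -> stale: {stats_are_stale(rows, mods, rows)}")
```

The last case shows why big tables are a concern: with 1,000,000 rows, 150,000 changes are still not enough to trigger an automatic update under the classic rule.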

However, colmodctr cannot be observed through documented DMVs. You have to connect via the dedicated administrator connection (DAC) and query undocumented system base tables:

```sql
/* SQL Server 2005 */
SELECT SO.name AS tableName,
       COL_NAME(SC.object_id, SC.column_id) AS columnName,
       A.name AS stats_name,
       SSC.*, SSR.*
FROM sys.sysrowsetcolumns AS SSC
INNER JOIN sys.sysrowsets AS SSR ON SSC.rowsetid = SSR.rowsetid
INNER JOIN sys.sysobjects AS SO ON SSR.idmajor = SO.id
INNER JOIN sys.stats_columns AS SC ON SSR.idmajor = SC.object_id AND SSC.rowsetcolid = SC.column_id
-- match each stats column to its own statistics object, not to every statistics on the table
INNER JOIN sys.stats AS A ON A.object_id = SC.object_id AND A.stats_id = SC.stats_id
WHERE SO.xtype = 'U'
ORDER BY SO.name, SC.column_id;
```

```sql
/* SQL Server 2008 */
SELECT SO.name AS tableName,
       COL_NAME(SC.object_id, SC.column_id) AS columnName,
       A.name AS stats_name,
       SSC.*, SSR.*
FROM sys.sysrscols AS SSC
INNER JOIN sys.sysrowsets AS SSR ON SSC.rsid = SSR.rowsetid
INNER JOIN sys.sysobjects AS SO ON SSR.idmajor = SO.id
INNER JOIN sys.stats_columns AS SC ON SSR.idmajor = SC.object_id AND SSC.rscolid = SC.column_id
-- match each stats column to its own statistics object, not to every statistics on the table
INNER JOIN sys.stats AS A ON A.object_id = SC.object_id AND A.stats_id = SC.stats_id
WHERE SO.xtype = 'U'
ORDER BY SO.name, SC.column_id;
```

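If you are running SQL Server 2008 R2 SP2, SQL Server 2012 SP1 or later, you can query the sys.dm_db_stats_properties function directly to identify stale statistics. The query below uses an arbitrary cutoff of 1,000 modifications; adjust it to the size of your tables:

```sql
SELECT obj.name AS table_name, obj.object_id, stat.name AS stats_name, stat.stats_id,
       sp.last_updated, sp.modification_counter
FROM sys.objects AS obj
JOIN sys.stats AS stat ON stat.object_id = obj.object_id
CROSS APPLY sys.dm_db_stats_properties(stat.object_id, stat.stats_id) AS sp
WHERE sp.modification_counter > 1000;
```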

To return all statistics properties for a single table (here, a table named TEST):

```sql
SELECT sp.stats_id, stat.name, stat.filter_definition, sp.last_updated, sp.rows,
       sp.rows_sampled, sp.steps, sp.unfiltered_rows, sp.modification_counter
FROM sys.stats AS stat
CROSS APPLY sys.dm_db_stats_properties(stat.object_id, stat.stats_id) AS sp
WHERE stat.object_id = OBJECT_ID('TEST');
```

How to prevent Auto update of a big table during business hours when automatic updates are turned on

If you experience problems with too many updates of statistics, you may wish to prevent certain tables or even statistics from automatic updates. Stored procedure sp_autostats lets you exclude certain tables, indexes or statistics from the automatic updates.

Disable AUTO_UPDATE_STATISTICS option for the AK_Product_Name index on the Product table:

```sql
EXEC sp_autostats 'Production.Product', 'OFF', AK_Product_Name;
```

(The CREATE STATISTICS and UPDATE STATISTICS commands also offer a solution if you manage statistics manually. They accept a NORECOMPUTE option that you can apply when you don't want automatic updates for that statistics object. Likewise, the CREATE INDEX command has a STATISTICS_NORECOMPUTE option that can be switched on if you don't want automatic updates for the index statistics. Usage is given in the next few lines.)

If you plan for manual updates, there are two options. You can use the UPDATE STATISTICS command or the stored procedure sp_updatestats.

Let's see how UPDATE STATISTICS can be used for manual updates.

Update all statistics for table T1 with a full scan:

```sql
UPDATE STATISTICS T1 WITH FULLSCAN;
```

Update all column statistics (that is, statistics without a related index) for table T1 using the default sample size, and exclude those statistics from further automatic updates:

```sql
UPDATE STATISTICS T1 WITH COLUMNS, NORECOMPUTE;
```

Update statistics s1 on table T1 by processing 50 percent of the table's rows:

```sql
UPDATE STATISTICS T1 (s1) WITH SAMPLE 50 PERCENT;
```

NOTE: Index rebuilds also update statistics. The index's statistics are updated with the equivalent of a full scan. It is therefore a bad idea to update index statistics manually right after an index rebuild: not only do you perform the update twice, but the second update can only lower the quality of the statistics (unless you use the FULLSCAN option).

The other manual option, sp_updatestats, runs at the database level and covers all existing statistics. It updates every statistics object for which at least one underlying row has changed since its last update. So sp_updatestats will probably update many more statistics than necessary.
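To see why sp_updatestats tends to do more work than automatic updates, compare the two selection rules side by side. A minimal Python sketch, assuming the behaviour described above (sp_updatestats picks anything with at least one modification) and the classic auto-update thresholds; the `StatInfo` type and the statistics names are made up for illustration. In practice, the row and modification counts would come from a query such as the sys.dm_db_stats_properties one shown earlier.

```python
from dataclasses import dataclass


@dataclass
class StatInfo:
    name: str                  # hypothetical statistics name
    rows: int                  # rows when the statistics were last updated
    modification_counter: int  # modifications since then


def picked_by_sp_updatestats(stats):
    # At least one modified row since the last update.
    return [s.name for s in stats if s.modification_counter > 0]


def _over_classic_threshold(s: StatInfo) -> bool:
    # 500 changes for small tables, 500 + 20% of rows otherwise
    # (5 * mods >= 2500 + rows is the 500 + 20% test in integer arithmetic).
    if s.rows <= 500:
        return s.modification_counter >= 500
    return 5 * s.modification_counter >= 2500 + s.rows


def picked_by_auto_update_threshold(stats):
    return [s.name for s in stats if _over_classic_threshold(s)]


stats = [
    StatInfo("IX_Orders_OrderDate", 1_000_000, 10),
    StatInfo("_WA_Sys_Region", 300, 600),
    StatInfo("PK_Customers", 10_000, 3_000),
    StatInfo("IX_Products_Name", 50, 0),
]
print("sp_updatestats:", picked_by_sp_updatestats(stats))
print("threshold rule:", picked_by_auto_update_threshold(stats))
```

Here the million-row index with only 10 changed rows is picked up by sp_updatestats but not by the threshold rule.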

Updating statistics results in cached plan invalidations. Next time a cached plan with a meanwhile updated statistics is detected during query execution, a recompilation will be performed.

Last Points

- If you’ve set the option AUTO CREATE STATISTICS OFF and overlooked the task of creating statistics manually, the optimizer will suffer from missing statistics.

- SQL Server does not maintain statistics for XML and spatial data types.

- If your database is set to read-only, the optimizer can’t add missing statistics even with AUTO CREATE STATISTICS enabled.

- If you rely on the automatic creation of statistics, you’ll need to remember that these statistics are always single-column statistics. In many situations the optimizer can take advantage of multi-column statistics and retrieve more exact row-count estimations, if multi-column statistics are in place.

- Avoid automatic updates of statistics for very big tables during normal OLTP operations.

- Updating statistics invalidates the cached plans that depend on them, so those plans are recompiled on their next execution.

- Index statistics are always updated with a full scan when the index is rebuilt, so don't follow a rebuild with a manual (sampled) update of the same statistics.

Summary

Statistics reduce the amount of data that has to be processed during optimization. If the optimizer had to scan the actual table or index data for cardinality estimations, plan generation would be costly and lengthy.

Therefore, the optimizer refers to samples of the actual data called the statistics, or distribution statistics. These samples are, by nature, much smaller than the original amount of data. As such, they allow for a faster plan generation.

Hence, the main reason for statistics is to speed up plan generation.

Whenever a distribution statistic no longer reflects the source data, the optimizer may make wrong assumptions about cardinalities, which in turn may lead to poor execution plans.