Next up on the SQL Server 2012 Performance testing circuit: Gap Detection. You know, where you find missing values from a table of sequential numbers.

SQL Server 2012 introduces the LEAD function, which I’ve previously blogged about here. In that blog post, I covered how to do Gap Detection using the LEAD function. Now, it’s time to put it to the performance test.

The Test

For this test, I want a large table, with at least a million rows. Obviously, gaps are needed. We need to start the table off with a gap. There needs to be gaps throughout the table. Some as small as one row, and others need to encompass many rows. Each method to test needs to identify the starting point and ending point of a gap.

The table was created with the following code:

IF OBJECT_ID('dbo.GapTest','U') IS NOT NULL DROP TABLE dbo.GapTest;

CREATE TABLE dbo.GapTest (N BIGINT CONSTRAINT PK__GapTest PRIMARY KEY CLUSTERED);

-- start with a number to make a large gap at the start of the table

INSERT INTO dbo.GapTest (N) VALUES (11000000);

-- insert rows to make 2 million

WITH

TENS (N) AS (SELECT 0 UNION ALL SELECT 0 UNION ALL SELECT 0 UNION ALL SELECT 0 UNION ALL SELECT 0 UNION ALL

SELECT 0 UNION ALL SELECT 0 UNION ALL SELECT 0 UNION ALL SELECT 0 UNION ALL SELECT 0),

THOUSANDS (N) AS (SELECT 1 FROM TENS t1 CROSS JOIN TENS t2 CROSS JOIN TENS t3),

MILLIONS (N) AS (SELECT 1 FROM THOUSANDS t1 CROSS JOIN THOUSANDS t2),

TenMillion(N) AS (SELECT 1 FROM MILLIONS CROSS JOIN TENS),

TALLY (N) AS (SELECT ROW_NUMBER() OVER (ORDER BY (SELECT 0)) FROM TenMillion)

INSERT INTO dbo.GapTest (N)

SELECT TOP (1999999) (82011000000000) + N

FROM TALLY;

-- delete some records to make gaps

DELETE dbo.GapTest

WHERE (N BETWEEN 82011000402000 AND 82011000500000

AND N % 2000 = 0) -- delete one row every 2000 rows

OR (N BETWEEN 82011000600001 AND 82011000700000); -- delete this larger gap of 100,000 rowsThis code starts off with a starting gap of eleven million. Next, more records are added to this to bring the table up to two million rows. Finally, many records are deleted, giving many gaps of one row, and an additional large gap of 100,000 rows.

The Contenders

The first method to test is the LEAD function. The code for doing this is very simple, and hopefully, extremely efficient and fast:

WITH cte AS

(

SELECT curr = N,

nxt = LEAD(N, 1) OVER (ORDER BY N)

FROM dbo.GapTest

)

SELECT [START OF Gap] = curr+1,

[END OF Gap] = nxt-1

FROM cte

WHERE nxt-curr > 1;

GOThe second contender is the method that Itzik Ben-Gan published in SQL Server MVP Deep Dives:

SELECT StartRange = N+1,

EndRange = (SELECT MIN(lt2.N)

FROM dbo.GapTest lt2

WHERE lt2.N > lt1.N) - 1

FROM dbo.GapTest lt1

WHERE NOT EXISTS

(SELECT N

FROM dbo.GapTest lt3

WHERE N = lt1.N+1)

AND N < (SELECT MAX(N) FROM dbo.GapTest lt4);The third contender is the method that I learned from Jeff Moden on SQLServerCentral.com:

SELECT GapStart = ISNULL((SELECT MAX(lo.N+1)

FROM dbo.GapTest lo

WHERE lo.N < hi.N),1),

GapEnd = hi.N - 1

FROM dbo.GapTest hi

WHERE hi.N NOT IN (SELECT N + 1 FROM dbo.GapTest)

AND hi.N > (SELECT MIN(N) FROM dbo.GapTest);Looking at the code from Jeff and Itzik, you can see that they are very similar, though they do have their differences. Notably, they each have three sub-queries against the table, so it looks like the Reads will be higher than what the LEAD function will have. So, let the battle of the performance gurus vs. SQL Server 2012 begin!

Running the Test

Each of the contenders code is put into a stored procedure, and modified to dump the results to a temporary table instead of returning the output to the screen. The following code is used to generate IO and Time statistics, and to run each method ten times:

SET STATISTICS IO,TIME ON;

GO

EXECUTE dbo.GapTest_LEAD;

EXECUTE dbo.GapTest_Itzik;

EXECUTE dbo.GapTest_Jeff;

GO 10

SET STATISTICS IO,TIME OFF;

GOThe Results

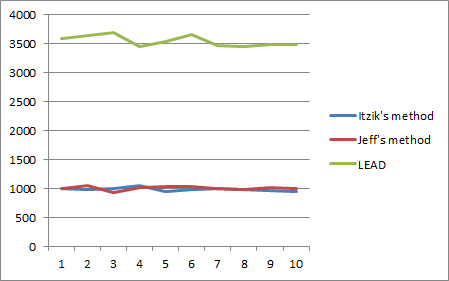

The statistics returned were made into a table and copied into Excel where a line chart was made showing how well each performed. (Units are milli-seconds.)

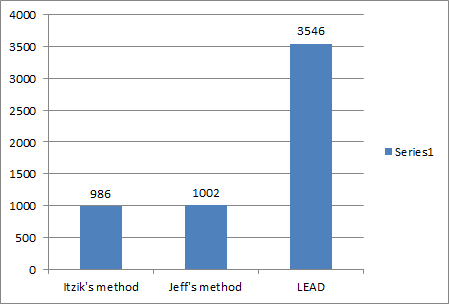

Well, right off I can see that LEAD does not perform as fast and efficient as I had hoped for. The methods from Itzik and Jeff are extremely close – a virtual tie. So, I took the average of the ten runs and charted that as a bar graph:

Here you can see that they are indeed extremely close – a difference of only 16 milli-seconds. The code from either one of these performance gurus runs 3.5 times faster than what the LEAD function from SQL Server 2012 gives us.

The other statistics are interesting: The LEAD function has 1 scan with 4014 logical reads; the gurus both have 55 scans with 8189 logical reads. So, my guess about more reads was dead on. Unfortunately, this did not lend itself to a performance improvement.

The Wrap Up

Well, this is pretty obvious. The code from either Itzik or Jeff will run astonishingly fast. They are so close, that I won’t even declare one of them a winner. Use either one.

The Invitation

If you have some code that you think is a contender for these, please post a reply with it. I’ll add it to the test suite, and update this post to show how it fares if it’s close or better than what these methods from Itzik or Jeff do.