There are a few design choices that can make your SSIS packages run super fast when loading data from table A to table B.

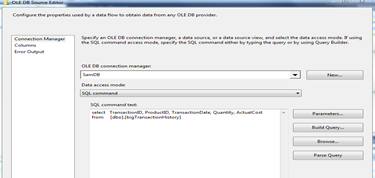

SQL Command at Source

OLE DB source editor allows you to choose either a table or a view from a drop down if data access mode is Table or View. This will execute a SELECT * command on the data source. As a best practice, it is always better to list the columns even when you are selecting all of them. Choose SQL Command and type in the query.

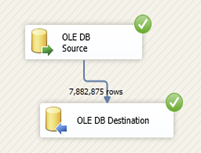

Table or View – Fast Load at Destination

As the name suggests, Table or View – Fast Load is the fastest way to load data to destination. It applies a table lock on the destination table and performs bulk insert. It is in arguably the fastest way to insert data.

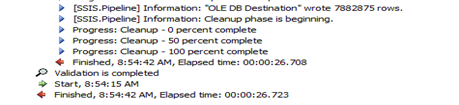

My package on a machine with 8GB RAM and 4 processors ran for 58 seconds to load 7.9 million records. Compare this to 50 minutes when using Table or View as the data access mode. This option works similar to a cursor – inserts one record at a time. Clear evidence for it’s poor performance.

50 minutes? hell No! But 58 seconds to load about 8 million records isn’t bad. Right?

Let’s dig a little deeper to see if we can tune this even more.

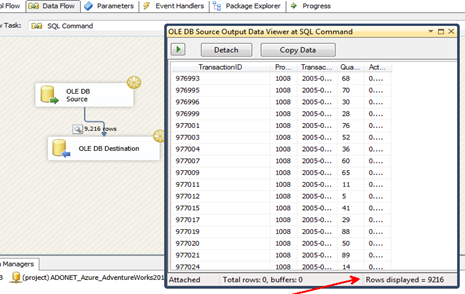

Default Buffer

SSIS relies heavily on buffer. A set of records are read into the buffer, and from buffer they are written to the destination. This process continues until all rows are written to destination. For instance, in this example, buffer is carrying 9,216 rows at a time. You can see that by using data viewers.

This number is controlled by two properties DefaultMaxBufferRows and DefaultMaxBufferSize. The values are 10,000 rows and 10MB by default, whichever comes first. MaxBufferSize is 100MB – it’s the maximum size of rows that can be fitted in buffer.

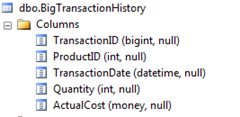

EstimatedRowSize is another property that is calculated based on the metadata of our result. This is 32 bytes for my data set. (BIGINT = 8B + INT = 4B + DATETIME = 8B + INT = 4B + MONEY = 8B) = 32 Bytes.

Buffer reached the default maximum allowed with 9,216 rows, that amounts to a size of 9,216 (rows) * 32 (bytes for each row) = 294912 Bytes which is less than 1MB, remember maximum is 100MB. There is a lot of free space left on the buffer that can be used. Filling this will result in shorten trips thus increasing performance.

For this test, I left DefaultMaxBufferSize as 10MB, but increased DefaultMaxBufferRows to 30,000 rows. The package now runs in less than 30 seconds.

Changing the settings to DefaultMaxBufferSize = 90MB and DefaultMaxBufferRows = 60,000 rows resulted in the package to execute in 15 seconds.

Wrap Up…

Understanding the internals sometimes will yield great performance without the need for additional hardware. This package is running on my local machine and data is being moved within the same database. Off course results might vary depending on your environment.

~Sam.