Support for local variables hasn't always been available in Azure Data Factory (ADF); they were only recently introduced alongside the already available pipeline parameters. This addition makes it easier to create interim properties (variables) whose values you can adjust multiple times within your pipeline's workflow.

Here is my case study to test this functionality.

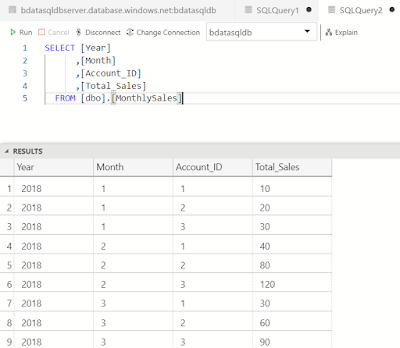

I have a simple Azure SQL Database with two tables to hold daily and monthly sales data, which I plan to load from a sample set of CSV files in my Azure Blob storage.

My new ADF pipeline has an event trigger that passes the file path and file name of each newly created object in my Blob storage container.
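
In ADF, a storage event trigger exposes these values through the `@triggerBody().folderPath` and `@triggerBody().fileName` expressions, which can be mapped to pipeline parameters. The Python sketch below only mimics that split locally to show what each value holds; it is not ADF code, and the `TriggerPayload` type, the function name and the sample path `salesdata/incoming/DailySales_20190101.csv` are all hypothetical.

```python
from typing import NamedTuple


class TriggerPayload(NamedTuple):
    """Hypothetical stand-in for the values an ADF storage event trigger passes on."""
    folder_path: str  # analogous to @triggerBody().folderPath (container + folders)
    file_name: str    # analogous to @triggerBody().fileName


def payload_from_blob_path(blob_path: str) -> TriggerPayload:
    """Split a '<container>/<folders>/<file>' path into folder path and file name."""
    # rpartition splits on the last '/', so nested folders stay in folder_path
    folder, sep, name = blob_path.strip("/").rpartition("/")
    if not sep or not name:
        raise ValueError(f"expected '<container>/.../<file>', got {blob_path!r}")
    return TriggerPayload(folder_path=folder, file_name=name)


if __name__ == "__main__":
    payload = payload_from_blob_path("salesdata/incoming/DailySales_20190101.csv")
    print(payload.folder_path)  # salesdata/incoming
    print(payload.file_name)    # DailySales_20190101.csv
```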

The pipeline logic then checks the data feed type (Daily or Monthly) based on the file name and loads the data into the corresponding table in the Azure SQL Database.

This is where the [Set Variable] activity comes in handy: it stores a value in my variable based on an expression I define.
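
The post does not show the exact expression, so here is one plausible shape for it, assuming a hypothetical pipeline parameter named `FileName` and file names that contain the word "Daily" or "Monthly": `@if(contains(pipeline().parameters.FileName, 'Daily'), 'Daily', 'Monthly')`. The Python function below is a local stand-in for that logic, useful for checking the naming rule against sample file names before wiring it into ADF. One deliberate difference: a single `if()` falls back to 'Monthly' for any unrecognized name, while this sketch raises an error instead.

```python
def feed_type_from_file_name(file_name: str) -> str:
    """Return 'Daily' or 'Monthly' based on a marker in the file name.

    Hypothetical naming rule: the marker appears somewhere in the name,
    e.g. 'DailySales_20190101.csv' or 'MonthlySales_201901.csv'.
    The match here is case-insensitive by choice.
    """
    lowered = file_name.lower()
    if "daily" in lowered:
        return "Daily"
    if "monthly" in lowered:
        return "Monthly"
    # Fail loudly instead of defaulting, so unknown files are not loaded anywhere
    raise ValueError(f"cannot infer feed type from {file_name!r}")


if __name__ == "__main__":
    print(feed_type_from_file_name("DailySales_20190101.csv"))  # Daily
    print(feed_type_from_file_name("MonthlySales_201901.csv"))  # Monthly
```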

Then I define two sub-tasks that copy data from the flat files into the corresponding tables, based on the value of the FeedType variable.
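
One way to wire this branching in ADF (an assumption, since the post only says "two sub-tasks") is an If Condition activity on `@equals(variables('FeedType'), 'Daily')` with a Copy activity in each branch. The Python sketch below models just the routing decision: given the trigger values and the FeedType, it picks the source file and the target table. The table names `dbo.SalesDaily` and `dbo.SalesMonthly` and the `CopyPlan` type are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical target tables; the post does not name the actual tables
TARGET_TABLES = {
    "Daily": "dbo.SalesDaily",
    "Monthly": "dbo.SalesMonthly",
}


@dataclass(frozen=True)
class CopyPlan:
    source_path: str   # blob path of the CSV file to copy
    target_table: str  # SQL table that receives the rows


def plan_copy(folder_path: str, file_name: str, feed_type: str) -> CopyPlan:
    """Choose the copy branch for one file, as two conditional Copy activities would."""
    try:
        table = TARGET_TABLES[feed_type]
    except KeyError:
        raise ValueError(f"unsupported FeedType {feed_type!r}") from None
    return CopyPlan(source_path=f"{folder_path}/{file_name}", target_table=table)


if __name__ == "__main__":
    # Each new file starts its own pipeline run, so each run routes independently
    daily = plan_copy("salesdata/incoming", "DailySales_20190101.csv", "Daily")
    monthly = plan_copy("salesdata/incoming", "MonthlySales_201901.csv", "Monthly")
    print(daily.target_table)    # dbo.SalesDaily
    print(monthly.target_table)  # dbo.SalesMonthly
```

Keeping the FeedType-to-table mapping in one place means adding a third feed type (say, weekly) is a one-line change here, much as it would be one more branch in a Switch activity in ADF.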

Once all this is done, I place two different data files into my Blob storage container, and the ADF pipeline trigger successfully executes the same pipeline twice, loading data into two separate tables.

I can then check and validate the loaded data in my Azure SQL Database.

My [Set Variable] activity has been tested successfully!

And that's one more reason to use ADF pipelines more often.