Earlier this week I took part in the 24 Hours of PASS live webcast event. There were far more questions at the end of the presentation than what I could answer online, so the answers to the rest are given here. (I have edited some of the questions for spelling, grammar and readability). My slide deck and demos I was using are linked at the end. I’m not including a backup of the DB, as it’s 60MB. The schema is there, as is a SQL Data Generator project.

Q: Is the problem here that addition of checking if the variable is null? (This was related to catch-all queries. )

No, not specifically. The problem is the query pattern of <column> = <parameter> OR <parameter> = <constant> . It’s not specific to the case where that constant is NULL, though that is the more common form that I’ve seen.

Q: What are the good alternatives for catch-all queries?

If you are on SQL 2008 SP2 or later you can add OPTION(RECOMPILE) to the catch all query and get a plan that is optimal for each particular set of parameters.

On SQL 2005 and earlier, even with a recompile you would not get an optimal plan. This is because the optimizer was required to create a plan that was safe for reuse, even if it was never going to be reused.

On builds on SQL 2008 prior to SP1, the use of OPTION (RECOMPILE) worked, but there was a bug related to that which could produce inaccurate results, so use with caution.

On builds of SQL 2008 between SP1 and SP2 (or SP1 CU5), the behaviour of a catch-all with recompile was the same as for SQL 2005

If you’re using SQL 2005 (or horrors SQL 2000) or a build of SQL 2008 that did not have the recompile options working, you can use dynamic SQL (there are advantages to dynamic SQL even on the latest builds of SQL 2008). Use the parameters passed to build up a string that just filtered on the columns for which parameters were passed, then use sp_executesql to run the parameterised dynamic SQL statement.

Details are available on my blog – http://sqlinthewild.co.za/index.php/2009/03/19/catch-all-queries/

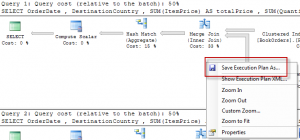

Q: If you don’t have access to run the execution plan, but have to have DBAs run it for you, is there a good way to have it captured?

Absolutely. In SQL 2005 and above, the execution plan is an XML document. You can save the plan as a .sqlplan file. That file can then be copied, emailed, etc. You can open it in management studio and SSMS will display the graphical plan.

Or, you could download Plan Explorer and open the plan in that.

Q: Can you demo an example of fixing parameter sniffing using local variables?

CREATE PROCEDURE OrdersByCountry (@Country CHAR(2))

AS

DECLARE @CountryInner CHAR(2)

SET @CountryInner = @Country

SELECT

OrderDate ,

DestinationCountry ,

SUM(ItemPrice) AS totalPrice ,

SUM(QuantityPurchased) AS totalPurchased

FROM dbo.BookOrders AS bo

INNER JOIN dbo.OrderDetails AS od ON bo.OrderID = od.OrderID

WHERE DestinationCountry = @CountryInner

GROUP BY OrderDate, DestinationCountry

GO

When run, this has a constant execution plan, no matter what parameter values it’s called with the first time.

With that, the exec plans are the same no matter what parameter value is passed. It’s not necessarily the best plan (for smaller row counts it is not), but it is consistent.

Q: Doesn’t recreating indexes update statistics?

Rebuilding or dropping and recreating an index updates it’s associated statistics with full scan. Reorganizing the index does not. Neither will affect the column statistics, statistics that are not associated with an index.

Q: On fixing parameter sniffing, your suggestion that we should insure each possible parameter type gets its own plan seems counterintuitive to the avoid ‘Bad query patterns: multiple execution paths’ advice. I think I’m missing something… can you clarify?

Not necessarily each possible different parameter types, just those with vastly different prevalences in the table. So going back to the book order table that I was using, it may be that most of the rows in table are for countries US, UK and AU (Australia). So we may want to have one stored procedure that’s used for those countries and another for everyone else (on the assumption that, for whatever reason, everyone else makes up a very small percentage of the table). So I might do something like this.

CREATE PROCEDURE OrdersByCountryUSUKAU (@Country CHAR(2))

AS

SELECT

OrderDate ,

DestinationCountry ,

SUM(ItemPrice) AS totalPrice ,

SUM(QuantityPurchased) AS totalPurchased

FROM dbo.BookOrders AS bo

INNER JOIN dbo.OrderDetails AS od ON bo.OrderID = od.OrderID

WHERE DestinationCountry = @Country

GROUP BY OrderDate, DestinationCountry

go

CREATE PROCEDURE OrdersByCountryOther (@Country CHAR(2))

AS

SELECT

OrderDate ,

DestinationCountry ,

SUM(ItemPrice) AS totalPrice ,

SUM(QuantityPurchased) AS totalPurchased

FROM dbo.BookOrders AS bo

INNER JOIN dbo.OrderDetails AS od ON bo.OrderID = od.OrderID

WHERE DestinationCountry = @Country

GROUP BY OrderDate, DestinationCountry

go

CREATE PROCEDURE OrdersByCountry (@Country CHAR(2))

AS

IF @Country IN ('UK','US','AU')

EXEC OrdersByCountryUSUKAU @Country

ELSE

EXEC OrdersByCountryOther @Country

GO

Now this doesn’t cause the kind of problems seen with multiple execution paths because what is in the branches here are separate stored procedures, and each will have it’s own individual execution plan.

This is not necessarily the best solution, it’s not something I would do normally. It increases the amount of code in the database and now any changes to the book order stored procedure have to be made in two places. If that particular procedure is such that it absolutely must execute as optimally as possible no matter what (and we don’t want the overhead of constant recompiles), it is an option

Q: Any tips for improving quality of statistics for very large tables when they are already computed with a full scan? Are filtered indexes the only option?

Filtered indexes or filtered statistics. The 250 steps for the statistics histogram can’t be configured. On larger tables that may be inadequate and the optimizer may think there are fewer rows for some value than there are because it has inadequate (rather than out-dated) statistics.

If you do chose to use filtered indexes or statistics, you need to update the statistics manually. With filtered statistics the 20% threshold for invalidating statistics is 20% of the table, not 20% of the filtered portion of the table. So you could have filtered statistics on just 5% of the table and you would still have to wait for 20% of the entire table to be updated before those stats would be invalidated.

Q: How efficiently do execution plans work with temp tables or table variables?

Same as for permanent tables. With table variables, because there are no statistics, row count estimations are often incorrect. It’s something that needs to be taken into account when using table variables.

With temp tables, if you want to use SSMS’s estimated execution plan feature, you need to create the temp table first or you’ll get an error saying that it doesn’t exist.

Q: I have been using local variable and substitute the passed variables to local variables to avoid parameter sniffing, is that good thing to do?

It’s one of the ways to fix parameter sniffing. I wouldn’t do it as default in every stored procedure, without parameter sniffing (which please remember is a good thing most of the time) you may be getting sub-optimal execution plans. For procedures that are prone to problems cause by parameter sniffing, sure.

Q: Do you think XQuery will work to interrogate the XML Query plans

Absolutely, yes. I’m no expert on XQuery (closer to the opposite), but it certainly can be done and can be very useful. There are examples here:

One word of caution here, if you are going to do this against the cached plans, don’t run the XQuery directly against the plan cache of the production server. Extract the plans you are interested in to a table, transfer that to a dev/test server and run your XQuery there.

Q: Do you ever use indexes to fix plans? You left them out.

I didn’t leave them out, indexes for fixing performance is a whole presentation of it’s own, if not a full day or two training.

Indexes alone aren’t going to help with the kind of problems I covered here, parameter sniffing, stale stats, bad query patterns, because the root of most of those is the optimizer creating a plan which is good (or at least one it thinks is good) which is then reused inappropriately.

Q: We all know NULL stands for “nothing here” or “no data” or “unknown value”. From a performance standpoint, shouldn’t we just drop NULL from physical designs and use a default value (empty string, 0, way out of bounds dates, etc.)? Does IFNULL() badly hit performance?

Wow, that’s opening the proverbial can of worms…

I’m actually in favour of nulls in a database. If there’s no data for a column I don’t want some made up value to indicate that the data is missing.

This is a major debate in DB design circles and there’s no single way that satisfies everyone.

ISNULL can have effects on performance, depending how it’s used. Like just about everything else, use it in appropriate places and you should be fine.

Q: Can the execution plans anyway be used in baselining performance? If yes, how?

I suppose you can. Baselining is all about getting stats and numbers for normal performance. Catching the execution plans for common queries and storing what their normal execution plans are could be useful when problems arise.

You can’t derive query performance from execution plans though.

Q: Is there any scenario the plan column could be null in plan cache ? I see entry for my SP in cache but column plan is always null. SP runs every 2-3 mins though. I am using option(recompile) for one statement in SP though.

I have seen cases where the plan is null, I don’t know what causes it.

Slide deck and demos: 24HoP_demo, 24HoursofPASS_PPT