By Steve Bolton

…………The Tietjen-Moore test may have the coolest-sounding name of any of the outlier detection methods I’ll be surveying haphazardly in this amateur series of mistutorials, yet it suffers from some debilitating limitations that may render it among the least useful for SQL Server DBAs. It is among a set of six methods that I’ll be dispensing with quickly in the middle of this series because they’re designed to perform hypothesis testing on small datasets of a few dozen or a few hundred records, not the thousands commonly found in the smallest SQL Server databases, let alone the billions or even trillions associated with the Big Data buzzword. By the end of the series, I may be capable of providing a matrix in which the various means of finding aberrant data points are divided by their use cases and the questions users want to ask of the data, followed by the number and types of inputs and outputs and their mathematical properties, as well as the means of calculation in between those two steps if it is relevant to performance or adequate access. Any associated workflow ought to include steps to define outliers with rigorous logic, then assess the underlying causes of any that are found to fit those criteria before proceeding to the final step, matching them to a proper response, whether it be deletion in the case of certain data quality issues or elation if outliers happen to be a positive outcome according to the context. As discussed at length in previous articles, a failure in any one of these areas can be worse than an incorrect calculation, in terms of misleading conclusions or even unethical responses. The set of hypothesis testing methods that Tietjen-Moore belongs to would occupy quite a narrow range of such a matrix, since they have numerous constraints that make them inapplicable in many situations, such as the requirement of a Gaussian or “normal” distribution (i.e. a bell curve) and the requisite goodness-of-fit testing to prove it.
Within this subset, the Tietjen-Moore test is actually more restrictive in its own way than many others – even Grubbs’ Test (which Tietjen-Moore is derived from) and Dixon’s Q-Test, which can only be used to identify a single outlier. It too returns a single result, but one that is merely a Boolean yes/no answer to the question, “Does the dataset contain exactly k outliers?”, where k is a number the user must supply. The Generalized Extreme Studentized Deviate Test (GESD), the topic of the last article, can also be used to ascertain how many outliers a dataset has, but is more useful because it does not require the user to guess the exact number in advance.

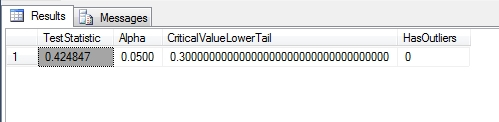

…………The chances of guessing the correct number are much greater in the kinds of relatively small datasets used in hypothesis testing, but guessing correctly is obviously quite difficult in even a conventional SQL Server database with millions of rows or more. Furthermore, the performance penalty for repetitively retesting a dataset until the correct number is arrived at is obviously much higher with a larger database, even if we don’t factor in the obvious need to also test it many more times over. Moreover, as mentioned in the last couple of articles, many of the outlier detection methods drawn from the realm of hypothesis testing require looking up critical regions for common data patterns like Student’s T-distribution. Unfortunately, the lookup tables widely available on the Internet normally stop at a few hundred rows and are often riddled with gaps, and calculating the missing values needed for millions of records can be both computationally costly and intellectually draining. The description of the Tietjen-Moore Test in the National Institute of Standards and Technology’s Engineering Statistics Handbook (one of the most readable online sources of information on statistics) indicates that such lookup tables are not readily available in this case: “The critical region for the Tietjen-Moore test is determined by simulation. The simulation is performed by generating a standard normal random sample of size n and computing the Tietjen-Moore test statistic. Typically, 10,000 random samples are used. The value of the Tietjen-Moore statistic obtained from the data is compared to this reference distribution.”[1]
To be representative of a much larger dataset like those found in SQL Server tables, these random samples would have to be much larger than those normally used in hypothesis testing; taking 10,000 of these larger samples would add to the performance costs, which already include ferreting out the exact number of outliers in advance, plus rigorous goodness-of-fit testing beforehand to discern whether the necessary bell curve is operative. That is why I enabled users to supply their own value through the @CriticalValueLowerTail parameter in Figure 1, which implements the test in a T-SQL stored procedure. In Figure 2 I set an arbitrary value of 0.3, and since the value of the test statistic in that case was higher, the hypothesis that the dataset contains exactly 20 outliers was rejected. The test statistic returned by the procedure may still be of use, however, since it can be interpreted thus even without the critical region: “The value of the test statistic is between zero and one. If there are no outliers in the data, the test statistic is close to 1. If there are outliers in the data, the test statistic will be closer to zero.”[2]
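For reference, the statistic being interpreted here can be written out as follows. This is a transcription of the definitions in the NIST handbook cited above, not something taken from the procedure's code; the symbols are notation only. In the two-tailed case, the values y are first sorted in ascending order of their absolute distance from the overall mean and relabeled z, so that the k most extreme values come last.

```latex
% Two-tailed statistic: z_1, ..., z_n are the data sorted by |y_i - \bar{y}|
E_k = \frac{\sum_{i=1}^{n-k} \left( z_i - \bar{z}_k \right)^2}
           {\sum_{i=1}^{n} \left( z_i - \bar{z} \right)^2},
\qquad
\bar{z}_k = \frac{1}{n-k} \sum_{i=1}^{n-k} z_i

% One-tailed statistic for the k smallest values: y_1 \le \dots \le y_n
L_k = \frac{\sum_{i=k+1}^{n} \left( y_i - \bar{y}_k \right)^2}
           {\sum_{i=1}^{n} \left( y_i - \bar{y} \right)^2},
\qquad
\bar{y}_k = \frac{1}{n-k} \sum_{i=k+1}^{n} y_i
```

The numerator is the sum of squared deviations left over after the k suspect values are removed and the mean is recomputed, while the denominator uses every value. That is why the ratio stays near 1 when the removed values were unremarkable and drops toward 0 when they accounted for most of the spread.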

Figure 1: Code for the Tietjen-Moore Test

CREATE PROCEDURE [Calculations].[TietjenMooreTestSP]
@DatabaseName as nvarchar(128) = NULL, @SchemaName as nvarchar(128), @TableName as nvarchar(128), @ColumnName AS nvarchar(128), @PrimaryKeyName as nvarchar(400), @DecimalPrecision AS nvarchar(50), @Alpha decimal(5,4) = 0.05, @K bigint, @CriticalValueLowerTail decimal(38,35), @DoTwoTailedTest bit = 1
AS
SET @DatabaseName = @DatabaseName + '.'
DECLARE @SchemaAndTableName nvarchar(400)
SET @SchemaAndTableName = ISNull(@DatabaseName, '') + @SchemaName + '.' + @TableName
DECLARE @SQLString nvarchar(max), @CommonTestCode nvarchar(max), @MeanConditionCode nvarchar(max), @SelectConditionCode nvarchar(max), @TempCTEOrderByCode nvarchar(max)
SET @CommonTestCode = 'DECLARE @Mean decimal(' + @DecimalPrecision + '), @K bigint
SET @K = ' + CAST(@K AS nvarchar(50)) + '
SELECT @Mean = Avg(CAST(' + @ColumnName + ' AS Decimal(' + @DecimalPrecision + ')))
FROM ' + @SchemaAndTableName + '
WHERE ' + @ColumnName + ' IS NOT NULL'

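-- rows are numbered from least to most extreme in the two-tailed test, so the @K suspect rows have the highest RN values; in the one-tailed test they are the @K smallest values
-- the reduced mean must be computed over exactly the rows that the numerator retains
SELECT @MeanConditionCode = CASE WHEN @DoTwoTailedTest = 1 THEN 'RN <= MaxRN - @K' ELSE 'RN > @K' END
-- rows matching this condition are the suspected outliers and contribute 0 to the numerator
SELECT @SelectConditionCode = CASE WHEN @DoTwoTailedTest = 1 THEN 'RN > MaxRN - @K' ELSE 'RN <= @K' END
SELECT @TempCTEOrderByCode = CASE WHEN @DoTwoTailedTest = 1 THEN 'ORDER BY ABS(' + @ColumnName + ' - @Mean)' ELSE 'ORDER BY ' + @ColumnName END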

SET @SQLString = @CommonTestCode +
'; WITH TempCTE
(' + @PrimaryKeyName + ', ' + @ColumnName + ', RN, MaxRN)
AS
(
SELECT ' + @PrimaryKeyName + ', ' + @ColumnName + ', RN, Max(RN) OVER (ORDER BY RN DESC) AS MaxRN
FROM (SELECT ' + @PrimaryKeyName + ', ' + @ColumnName + ', ROW_NUMBER() OVER (' + @TempCTEOrderByCode + ') AS RN
FROM ' + @SchemaAndTableName + '
WHERE ' + @ColumnName + ' IS NOT NULL) AS T1),
NewMeanCTE
(NewMean)
AS
(
SELECT Avg(CAST(' + @ColumnName + ' AS Decimal(' + @DecimalPrecision + ')))
FROM TempCTE AS T1
WHERE ' + @MeanConditionCode + ' -- computes a different mean based on a subset of the dataset
)
SELECT TestStatistic, ' + CAST(@Alpha AS nvarchar(50)) + ' AS Alpha, ' + CAST(@CriticalValueLowerTail AS nvarchar(50)) + ' AS CriticalValueLowerTail, ''HasOutliers'' = CASE WHEN TestStatistic < ' + CAST(@CriticalValueLowerTail AS nvarchar(50)) + ' THEN 1 WHEN TestStatistic >= ' + CAST(@CriticalValueLowerTail AS nvarchar(50)) + ' THEN 0 ELSE NULL END
FROM (SELECT TOP 1 TopOperand / BottomOperand AS TestStatistic
FROM (SELECT SUM(CASE WHEN ' + @SelectConditionCode + ' THEN 0 ELSE Power((CAST(' + @ColumnName + ' AS Decimal(' + @DecimalPrecision + ')) - (SELECT NewMean FROM NewMeanCTE)), 2) END) OVER (ORDER BY RN) AS TopOperand, SUM(Power((' + @ColumnName + ' - @Mean), 2)) OVER (ORDER BY RN ASC) AS BottomOperand, RN, (SELECT NewMean FROM NewMeanCTE) AS NewMean, ' + @ColumnName + '
FROM TempCTE AS T1) AS T2
ORDER BY RN DESC) AS T2'

--SELECT @SQLString -- uncomment this to debug string errors
EXEC (@SQLString)

…………Readers of other installments in this series should easily spot some commonalities between the code in Figure 1 and that of other procedures I’ve posted in recent weeks. The procedure resides in a schema named Calculations, which you can change; to keep it short and simple, I left out SQL injection and security code; I don’t allow spaces in my object names, so you’ll have to add such logic yourself; the first five parameters allow you to perform the test on any column in any database for which you have adequate access. The column must of course have a numerical data type, whose upper and lower ranges ought to be kept in mind when setting the usual @DecimalPrecision value to avoid arithmetic overflows. Most of the differences from other recent procedures revolve around the @DoTwoTailedTest parameter, which calls for separate greater-than and less-than comparisons that are implemented in five strings that are appended or prepended to the dynamic SQL. Usually I use just a single @SQLString variable for this purpose, which can be debugged by uncommenting the next to last line. The extra common table expression (CTE) is also needed to handle the differences between the two test variants. As usual, the procedure ends with a few nested subqueries that look more intimidating than they really are; they’re only needed to bubble up the results of computations so they can be operated on further, before returning the complete results to the user. Sometimes this is strictly necessary because the values can’t be calculated in one fell swoop, while at other times it simply makes the code more legible and easier to debug, rather than cramming a dozen different arithmetic operations and confusing parentheses into one line.
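For readers who want to add the omitted injection safeguards, one common approach is to confirm that the named objects exist and then wrap every identifier in QUOTENAME before concatenating it. The snippet below is only a minimal sketch under stated assumptions, not part of the procedure in Figure 1: the variable names merely mirror its parameters, the sample values are the ones used in Figure 2, and the catalog check only covers tables in the current database.

```sql
-- Hypothetical hardening sketch; the variable names mirror the procedure's parameters
DECLARE @SchemaName sysname = N'Health',
    @TableName sysname = N'DuchennesTable',
    @ColumnName sysname = N'LactateDehydrogenase';

-- Confirm the column exists in the current database before building any dynamic SQL
IF NOT EXISTS (
    SELECT 1
    FROM sys.columns AS C
        INNER JOIN sys.tables AS T ON C.object_id = T.object_id
        INNER JOIN sys.schemas AS S ON T.schema_id = S.schema_id
    WHERE S.name = @SchemaName AND T.name = @TableName AND C.name = @ColumnName)
BEGIN
    RAISERROR(N'The specified schema, table or column was not found.', 16, 1);
    RETURN;
END

-- QUOTENAME wraps each identifier in brackets and escapes any embedded ] characters,
-- which also allows object names that contain spaces
DECLARE @SchemaAndTableName nvarchar(400) = QUOTENAME(@SchemaName) + N'.' + QUOTENAME(@TableName);

DECLARE @SQLString nvarchar(max) =
    N'SELECT Avg(CAST(' + QUOTENAME(@ColumnName) + N' AS decimal(38,21))) AS Mean
FROM ' + @SchemaAndTableName + N'
WHERE ' + QUOTENAME(@ColumnName) + N' IS NOT NULL';

EXEC sp_executesql @SQLString;
```

The numeric parameters such as @K and @Alpha are already strongly typed, so they pose less of a risk, but a string parameter like @DecimalPrecision is concatenated verbatim and would need its own validation.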

Figure 2: Results for the Tietjen-Moore Procedure

EXEC [Calculations].[TietjenMooreTestSP]
@DatabaseName = N'DataMiningProjects',
@SchemaName = N'Health',
@TableName = N'DuchennesTable',
@ColumnName = N'LactateDehydrogenase',
@PrimaryKeyName = N'ID',
@DecimalPrecision = N'38,21',
@Alpha = 0.05,
@K = 20,
@CriticalValueLowerTail = 0.3,
@DoTwoTailedTest = 1

…………After validating the two-tailed version of the procedure against the sample data at the NIST webpage, I ran it against the LactateDehydrogenase column of the DuchennesTable, which is derived from a 209-row dataset on the Duchennes form of muscular dystrophy that is published online by Vanderbilt University’s Department of Biostatistics. There was little incentive to stress test it on the other two datasets I’ll be using for the rest of this tutorial series, one of which has more than 11 million rows. I’ve omitted the client statistics and execution plans I’ve often included in previous articles because the performance hits for running the test are minimal – at least when we omit the 10,000 random samples to establish the critical region and can supply a reasonable guess for the number of outliers to test for through the @K parameter.
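When no reasonable guess for @K is available, the only recourse is to retest with a range of candidate values, which is where the repeated-testing cost mentioned earlier comes from. The loop below is a rough sketch of that retesting, assuming the procedure from Figure 1 has been created and returns its four columns in the order shown; the temp table, the @CurrentK and @MaxK variables and the upper bound of 25 are hypothetical choices made for illustration.

```sql
-- Hypothetical wrapper: collects the test statistic for several candidate values of @K
CREATE TABLE #TietjenMooreResults
    (K bigint NULL, TestStatistic float, Alpha decimal(5,4),
     CriticalValueLowerTail decimal(38,35), HasOutliers bit);

DECLARE @CurrentK bigint = 1, @MaxK bigint = 25;

WHILE @CurrentK <= @MaxK
BEGIN
    -- INSERT...EXEC captures the single result set the procedure returns
    INSERT INTO #TietjenMooreResults (TestStatistic, Alpha, CriticalValueLowerTail, HasOutliers)
    EXEC [Calculations].[TietjenMooreTestSP]
        @DatabaseName = N'DataMiningProjects',
        @SchemaName = N'Health',
        @TableName = N'DuchennesTable',
        @ColumnName = N'LactateDehydrogenase',
        @PrimaryKeyName = N'ID',
        @DecimalPrecision = N'38,21',
        @Alpha = 0.05,
        @K = @CurrentK,
        @CriticalValueLowerTail = 0.3,
        @DoTwoTailedTest = 1;

    -- label the row that was just added, since the procedure does not return @K itself
    UPDATE #TietjenMooreResults SET K = @CurrentK WHERE K IS NULL;

    SET @CurrentK = @CurrentK + 1;
END

SELECT K, TestStatistic, HasOutliers
FROM #TietjenMooreResults
ORDER BY K;

DROP TABLE #TietjenMooreResults;
```

Every pass rescans the source table, so the cost grows in step with the number of candidates tried. Strictly speaking, each value of @K also calls for its own critical value, so reusing the arbitrary 0.3 across the whole range only makes sense when the goal is to compare the raw test statistics.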

…………Omitting the goodness-of-fit tests needed to prove that the dataset follows a normal distribution is not a good idea, however, no matter what the computational expense is. Without that precursor, the Tietjen-Moore test can be misleading, just like many of the other outlier detection methods I’m dispensing with in this segment of the series. Even when we’re certain the data ought to follow a bell curve, the main use scenario for the Tietjen-Moore test for SQL Server DBAs and data miners is likely to be restricted to merely interpreting the test statistic. The critical regions are just too computationally expensive to calculate for such a limited use. The main problem, however, is that divining the correct number of outliers in advance among tens of thousands of records is like looking for a needle in a haystack. In fact, it may be worse, because once we’ve found the needle, all the test tells us is that we have indeed found it. Like many of the other means of outlier identification I’m dispensing with in this segment of the series, Tietjen-Moore is more suited for hypothesis testing, in which researchers attempt to rigorously prove a specific point about a small data sample. As I wade through this series, learning as I go, I’m beginning to realize that the much larger datasets that DBAs and Big Data miners work with these days may call for new methods of outlier identification. Some of the means we’ve already discussed can be used for our scenarios, like Z-Scores and Benford’s Law, but many of the others that statisticians and researchers routinely utilize for hypothesis testing have limited applicability for our use cases, which normally begin with ferreting out data quality problems and exploratory data mining.
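For small samples, the simulation that the NIST handbook describes can at least be sketched in T-SQL. What follows is only a rough illustration under stated assumptions rather than the handbook's own code: the variable names, the #Draws temp table, the use of the Box-Muller transform to produce standard normal values and the choice of reading off the alpha quantile by row position are all assumptions made for the example. It covers only the two-tailed statistic, and the sample size of 15 and outlier count of 2 are placeholder values.

```sql
-- Hypothetical sketch: Monte Carlo estimate of the lower-tail critical value for the
-- two-tailed statistic. All object and variable names are illustrative assumptions.
DECLARE @N int = 15, @K int = 2, @Alpha decimal(5,4) = 0.05, @SampleCount int = 10000;

-- Store the uniform random numbers first so that each draw is generated exactly once
CREATE TABLE #Draws (SampleID int NOT NULL, U1 float NOT NULL, U2 float NOT NULL);

WITH SampleNumbers (SampleID) AS
(
    SELECT TOP (@SampleCount) ROW_NUMBER() OVER (ORDER BY (SELECT NULL))
    FROM sys.all_columns AS A CROSS JOIN sys.all_columns AS B
)
INSERT INTO #Draws (SampleID, U1, U2)
SELECT S.SampleID,
    (ABS(CHECKSUM(NEWID()) % 1000000) + 1) / 1000001.0, -- uniform value strictly between 0 and 1
    (ABS(CHECKSUM(NEWID()) % 1000000) + 1) / 1000001.0
FROM SampleNumbers AS S
    CROSS JOIN (SELECT TOP (@N) 1 AS Filler FROM sys.all_columns) AS R; -- @N rows per sample

WITH Sampled AS
(   -- the Box-Muller transform turns two uniform draws into one standard normal value
    SELECT SampleID, SQRT(-2.0 * LOG(U1)) * COS(2.0 * PI() * U2) AS Y
    FROM #Draws
),
Centered AS
(
    SELECT SampleID, Y, AVG(Y) OVER (PARTITION BY SampleID) AS SampleMean
    FROM Sampled
),
Ranked AS
(   -- rank each value by its absolute distance from the mean of its own sample
    SELECT SampleID, Y,
        ROW_NUMBER() OVER (PARTITION BY SampleID ORDER BY ABS(Y - SampleMean)) AS RN
    FROM Centered
),
SampleStatistics AS
(   -- VARP times the row count equals the sum of squared deviations from the mean, so this
    -- ratio is the statistic with the @K most extreme values dropped from the numerator
    SELECT SampleID,
        ((@N - @K) * VARP(CASE WHEN RN <= @N - @K THEN Y END)) / (@N * VARP(Y)) AS TestStatistic
    FROM Ranked
    GROUP BY SampleID
)
SELECT TestStatistic AS EstimatedCriticalValueLowerTail
FROM (SELECT TestStatistic, ROW_NUMBER() OVER (ORDER BY TestStatistic) AS StatisticRank
      FROM SampleStatistics) AS T
WHERE StatisticRank = CAST(CEILING(@Alpha * @SampleCount) AS int); -- the alpha quantile

DROP TABLE #Draws;
```

Because the draws are random, the estimate will vary slightly from run to run. The number of generated rows is @N multiplied by @SampleCount, which is trivial for 15 values but quickly becomes prohibitive as @N approaches the size of a typical SQL Server table.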

…………Once I’ve gotten other methods in the same class, like the Modified Thompson Tau Test and Chauvenet’s Criterion, out of the way, I’ll delve into others like Interquartile Range, Peirce’s Criterion, Cook’s Distance and Mahalanobis Distance that are more likely to be of use for our scenarios. The same holds true of the segment I’ll do later on Visual Outlier Detection with Reporting Services, in which I’ll demonstrate how to spot outliers with the naked eye. The hypothesis-test set of outlier detection methods is often quite useful in rigorously confirming that the out-of-place data points spotted using eye candy like histograms and scatter plots are indeed outliers. I have some reservations about hypothesis testing even when applied to its typical use cases, like the arbitrariness of commonly assigned alpha values, the frequency with which such concepts as “confidence” and “statistical significance” are abused and the infrequency with which many researchers apply goodness-of-fit tests. Misunderstandings about randomness and probabilistic methods also abound, even in hard sciences like physics where such math concepts are used every day. I’m hardly well-versed in these matters, but I have the impression that the proofs are not as rigorous and trustworthy as those established by other subdisciplines like computational complexity and measures like Minimum Description Length (MDL) and Kolmogorov Complexity, which I hope to one day be able to code in a future tutorial series, Information Measurement with SQL Server. That series will likely be of practical use to DBAs and data miners, just as the second half of this series on outlier detection is certain to be. For the sake of completeness, however, I’ll first finish off this detour into methods based on hypothesis testing by hurrying through Dixon’s Q-Test, the Modified Thompson Tau Test and Chauvenet’s Criterion. After that, we’ll get back on track with outlier detection methods that are more suited to our use cases.

[1] See National Institute of Standards and Technology, 2014, “1.3.5.17.2. Tietjen-Moore Test for Outliers,” published in the online edition of the Engineering Statistics Handbook. Available at http://www.itl.nist.gov/div898/handbook/eda/section3/eda35h2.htm

[2] Ibid.