By Steve Bolton

…………My last blog series, A Rickety Stairway to SQL Server Data Mining, often epitomized a quip by University of Connecticut statistician Daniel T. Larose, to the effect that “data mining is easy to do badly.”[1] It is clear that today’s sophisticated mining algorithms can still return useful results even when misused by a bumbling amateur like myself, but it is better to understand why they work, which is an area I’m seriously deficient in. In that series of self-tutorials, I was more interested in demonstrating that SSDM could be used by someone who understood it badly, while gleaning what I could about the mechanics and concepts that underpin data mining. I intended to rectify that with a new series tentatively titled Information Measurement with SQL Server, in which I would gradually teach myself how to code dozens of metrics used in information theory and statistics in Transact-SQL (T-SQL), Multidimensional Expressions (MDX) and Visual Basic (VB) Common Language Runtime (CLR) routines. In time, I still intend to publish my misadventures with such sundry metrics as the Kullback-Leibler Divergence, Minimum Description Length, Bayes Factors, the Akaike Information Criterion and Shannon’s Entropy. I will probably also stick to the plan of writing a parallel series on Using Other Data Mining Tools with SQL Server, in which I may pass on my inexperience with accessing such alternative mining platforms as WEKA and RapidMiner from Microsoft’s database server software. Prior to writing up both series, however, I decided to sneak in a short but potentially useful series on myriad means of detecting outliers using SQL Server, in part because the material is lighter and the equations involved less complicated by several orders of magnitude. That will help me bridge the gap in skills required for the Information Measurement series. 
All three series could be of real practical use to database administrators (DBAs) and data miners, but outlier detection is simpler both to implement and discuss. In fact, I’ll dispense in the very first article with one of the more complex and intriguing methods, Benford’s Law, which I will implement with a simple lookup table and some dynamic SQL with windowing functions. In the next eight or nine articles I’ll tackle several other means that can be implemented in SQL Server using Reporting Services, SSDM, T-SQL and possibly some CLR stored procedures written in VB.

The Uses and Abuses of Outliers

…………Before discussing any of these detection methods, it is critical to delineate what an outlier is and more importantly, how to respond to them depending on their causes. One of the greatest pitfalls of statistics (and math in general) is that it is quite easy for the numbers to become detached from their underlying logic and meaning; in such cases, the statistical packages and data mining tools will continue to spit out figures, but they will be meaningless, misleading or otherwise downright false. When math goes wrong, it is often at this juncture with logic and reason, which are terribly neglected in every academic field these days; in fact, one of the most productive things anyone in the Western intelligentsia can do today is to learn about logical fallacies and acquire the skill of recognizing them.[2] As I pointed out in the SSDM series and will reiterate in the future, at a deeper level, it is even more important to acquire the skills of recognizing one’s own fallacies and motivations for holding them. In a nutshell, sometimes people don’t like the truth, so they apply whatever form of intelligence they’re proficient in to find a way around it; this is one of the great banes and most common defects of all human history, one that requires painful self-examination and swallowing of pride. When math, statistics, data mining and kindred topics go wrong, it is often because they have come uncoupled from reason, not because the tools are spitting out bad numbers; the few sentences I’ve spent here are all out of proportion to the importance of keeping our calculations moored in reason and reality, given that the slightest mistake in this regard can render them useless or counterproductive. For these reasons, it is always wise to take a page from the philosophers of ages past and reduce the wiggle room available to fallacies by carefully defining our terms, without going to the opposite extreme of hair-splitting reductionism and getting lost in jargon. 
With all that background out of the way, let’s define an outlier rigidly but plainly so that anyone can understand it: it is a data point that contrasts with the other data points it is grouped with. How we group them is dependent on the questions we want to ask, which amount to a subjective choice; whether or not a particular data point meets the criteria we’ve set down for membership in a set or its outliers is an objective thing beyond our control. This distinction between subjectivity and objectivity is frequently confused and sometimes abused by intellectuals these days, but particularly when it comes to the treatment of outliers. Once we’ve detected them, the potential responses range from doing nothing, to applying alternate outlier-resistant measures like medians, to throwing out the data altogether.[3]

There are two broad classes of hazards involved with outliers, one of which is changing the definition of what an outlier is, or altering the criteria for membership in the larger set they are being contrasted with. Either way, it may amount to moving the goalposts, when not done for a plainly justified reason. The second snare is making assumptions about what caused the aberration in these data points, which is sometimes combined with the first. Outliers can of course be useful in detecting data quality problems, systematic errors in data collection and in the case of Benford’s Law, fraud. It would be a mistake, however, to simply change the definition of outliers or assign those data points to a different population without ascertaining whether erroneous data of some kind is indeed the culprit. This presents researchers with a subtle temptation to simply throw out data they don’t like because it doesn’t fit their preconceived notions, by changing categories. Furthermore, because outlier detection is so useful in ferreting out data quality issues, aberrant data points of any kind are often assumed to be “bad.” Like gold nuggets in the pan of a prospector, they may actually be positive, depending on the context. To a businessman, they may represent an untapped market; to a health care researcher, they may represent a population that turns out to be immune to a particular disease; to a scientist, they may represent a subgroup of materials more resistant to a particular physical strain of some kind. If you find yourself in a disaster or horror film with a high body count, you don’t want to be among the “normal” population, because the norm is to end up dead; only the outliers survive in The Poseidon Adventure and Aliens.

Sometimes outliers have no significance at all, due to luck of the draw. Probability theory only tells us that over time we can normally expect a certain set of events to occur with a given frequency, but it does not guarantee it; I wouldn’t bet on your chances of rolling snake eyes a hundred times in a row or getting three straight loner hands in Euchre, but it is a fallacy to believe that it can’t happen. Theoretically, all of your data points could be outliers in a given trial of any size, simply by random chance, no matter how infinitesimal it might be. Moreover, a certain number of outliers can always be expected, but that number may vary significantly depending on what the underlying distribution of the data is. In these cases, the presence of many outliers indicates that the dataset is highly skewed and comes from a fat-tailed distribution, or from a mixture of two or more distributions.[4] This presents researchers with an even more subtle temptation to continue using popular methods of statistical analysis that are meaningful only with the distribution they have chosen, which more often than not is the Gaussian or “normal” distribution. Researchers in many fields prefer this distribution because there are far fewer statistical tests available for the others, so it is not in their interest to recognize outliers as evidence of some other underlying distribution. In fact, it is frightening to see how rarely goodness-of-fit tests are applied – particularly in medical research, which I had some incomplete training in back around my grad school days. This is part of a larger pattern of unconscionable misuse of statistics that is standard fare in medical research – which is exactly the one field in which they can do the most damage to ordinary people. 
At least half the research published today contains at least one statistical error, and just one is enough to render any study invalid; this figure does not take into account the logical errors that may accompany incorrect use of statistical methods and also render them useless or misleading.[5] When I brought up this figure to a health care professional I know, she told me that it is standard fare in her field to simply not publish negative findings, which is likewise dishonorable, because it amounts to suppressing all but the desired evidence. Outliers in particular are easy game for academics who are at best negligent in learning how to use their statistical tools, and at worst are hell-bent on reaching particular conclusions. This is particularly true when the presence of outliers indicates that the underlying theory is flawed, or that prohibitively expensive data collection methods are necessary. No scholar ever wants to admit that a theory they’ve invested years of thought and personal prestige into is wrong, especially when money is at stake and applying more rigorous statistical techniques would require a lot more brain work. Ethical issues of this kind are not uncommon with outlier detection, although SQL Server DBAs are less likely to encounter them if their main use case scenarios are merely looking for data quality problems or doing exploratory data mining, to ferret out possible relationships between variables for further testing.

The Enigma of Benford’s Law

Both types of DBAs, however, can make use of Benford’s Law, a strikingly different and somewhat mysterious form of outlier detection that can be used by the SQL Server community right off the bat. The gist of it is that the leading digits in many number series often follow a particular distribution, unless there is interference by some non-random process. When this occurs it is often regarded as evidence of intelligent interference, especially when there is something to be gained by it; for this reason, it is most often used to detect fraud, which really amounts to an intentional degradation of data quality. As mathematicians Arno Berger and Theodore P. Hill note, “For instance, the U.S. Internal Revenue Service uses BL to ferret out suspicious tax returns, political scientists use it to identify voter fraud, and engineers to detect altered digital images.”[6] One of my favorite uses to date has been to uncover the kind of academic fraud I discussed in the last section, particularly in health care research and the discipline of economics.[7] West Virginia University’s Mark Nigrini, a noted expert on Benford’s Law, was one of the pioneers in using it to identify financial fraud, which seems to be its most popular use case. Williams College math Prof. Steven J. Miller has published several anecdotes of embezzlement uncovered through Benford’s Law and mentions a few less colorful uses that might be of use to DBAs, like “analyzing round-off errors” and “determining the optimal way to store numbers.”[8] Another application that might be of interest to DBAs is “computer disk space allocation.”[9] It has also been applied to such dry subjects as hydrology.[10] Its more glamorous use in exposing swindlers is what earned Benford’s Law a starring role on primetime television in 2006, when it was used by the good guys to catch a thief in an episode of the crime series NUMB3RS.[11]

Demonstrating that Benford’s Law works is something altogether different than stating definitively why it does. Astronomer Simon Newcomb was the first to notice it when he was flipping through a reference on logarithm tables in 1881 and saw that pages for logs starting with 1 and 2 were far more common than those starting with 8 and 9.[12] Physicist Frank Benford got his name attached instead of Newcomb because he demonstrated in 1938 that it held for “over 20,000 numbers from sources as diverse as Readers’ Digest articles, street addresses of American Men of Science, atomic weights, population sizes, drainage rates of rivers, and physical constants.”[13] It is actually more of an observation, like Moore’s Law, but even more enigmatic in that explanations of the phenomenon to date have fallen short. Some observers link it to scaling laws like Zipf’s Law, which is apparently an instance of the scaling laws generalized by Benoit Mandelbrot (1924–2010)[14] and popularized in chaos theory.[15] Hill is credited with providing the most tenable theory to date, which statistician R.M. Fewster says is a “measure-theoretical proof that data drawn from a random mix of different distributions—rather like Frank Benford’s original 20,000—will ultimately converge to Benford’s law.” [16] Fewster attempts to “provide nothing rigorous, but provide a simple, intuitive explanation of why and when the law applies” that is helpful in finding use cases. Yet even Berger and Hill concurred with Fewster in a recent paper in which they take issue with recent explanations, then conclude with the statement that it “remains mysterious.”[17] Anyone interested in getting to the bottom of that mystery can begin at the Benford Online Bibliography at http://www.benfordonline.net/, which is a plentiful source for all things Benford-related.

Like other data mining techniques, we can still make use of it without understanding why it works – just as bumblebees don’t stop flying merely because scientists can’t explain how they apparently defy the laws of aerodynamics.[18] To put it to use, we’ll first build a lookup table, using the Data Definition Language (DDL) code below. Note that I’m using a bigint identity column for personal reasons and hosting both the table and its associated stored procedure in a Calculations schema, which may not fit your particular use cases. The identity column also starts with a zero base, to represent the digit zero. After the DDL statement, I populate it using constants provided in a couple of articles published by Nigrini.[19]
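For reference, constants like these follow from the logarithmic form of Benford’s Law. What follows is only a sketch of the standard formulas, where d is a digit, D_n is the n-th significant digit and k ranges over the possible leading digit strings; the symbols are labels chosen for this sketch rather than Nigrini’s notation.

```latex
P(D_1 = d) = \log_{10}\left(1 + \frac{1}{d}\right), \quad d = 1, \dots, 9

P(D_2 = d) = \sum_{k=1}^{9} \log_{10}\left(1 + \frac{1}{10k + d}\right), \quad d = 0, \dots, 9

P(D_n = d) = \sum_{k=10^{n-2}}^{10^{n-1}-1} \log_{10}\left(1 + \frac{1}{10k + d}\right), \quad n \ge 2
```

For example, the first formula gives roughly 0.30103 for a leading 1 and 0.17609 for a leading 2, and the distribution flattens toward 0.1 per digit as n grows.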

Figure 1: DDL Code for the BenfordTable

CREATE SCHEMA Calculations AUTHORIZATION dbo;

GO

CREATE TABLE [Calculations].[BenfordTable](

[ID] [bigint] IDENTITY(0,1) NOT NULL,

[ExpectedPercentageDigit1] [decimal](5, 5) NULL,

[ExpectedPercentageDigit2] [decimal](5, 5) NULL,

[ExpectedPercentageDigit3] [decimal](5, 5) NULL,

[ExpectedPercentageDigit4] [decimal](5, 5) NULL,

CONSTRAINT [PK_BenfordTable] PRIMARY KEY CLUSTERED

( [ID] ASC) WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE

= OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY]) ON [PRIMARY]

GO

INSERT INTO Calculations.BenfordTable

(ExpectedPercentageDigit1, ExpectedPercentageDigit2,

ExpectedPercentageDigit3, ExpectedPercentageDigit4)

VALUES (NULL, 0.11968, 0.10178, 0.10018),

(0.30103, 0.11389, 0.10138, 0.10014),

(0.17609, 0.10882, 0.10097, 0.10010),

(0.12494, 0.10433, 0.10057, 0.10006),

(0.09691, 0.10031, 0.10018, 0.10002),

(0.07918, 0.09668, 0.09979, 0.09998),

(0.06695, 0.09337, 0.09940, 0.09994),

(0.05799, 0.09035, 0.09902, 0.09990),

(0.05115, 0.08757, 0.09864, 0.09986),

(0.04576, 0.08500, 0.09827, 0.09982)
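One way to sanity-check the lookup table is to recompute the first- and second-digit proportions from the logarithmic formula and view them next to the stored constants. The query below is a minimal sketch that assumes the Calculations.BenfordTable from Figure 1 already exists and that the server is SQL Server 2008 or later (for the VALUES table constructor); the CTE names and column aliases are made up for illustration.

```sql
-- Sketch: recompute expected first- and second-digit proportions with LOG10
-- and list them beside the constants stored in the lookup table.
WITH Digits (Digit) AS
(
    SELECT Digit
    FROM (VALUES (0),(1),(2),(3),(4),(5),(6),(7),(8),(9)) AS V (Digit)
),
Computed (Digit, ComputedDigit1, ComputedDigit2) AS
(
    SELECT D.Digit,
        -- first digit: log10(1 + 1/d); NULLIF leaves digit 0 as NULL
        MAX(LOG10(1 + 1.0 / NULLIF(D.Digit, 0))),
        -- second digit: sum over the nine possible leading digits K
        SUM(LOG10(1 + 1.0 / (10 * K.Digit + D.Digit)))
    FROM Digits AS D
        CROSS JOIN Digits AS K
    WHERE K.Digit > 0
    GROUP BY D.Digit
)
SELECT C.Digit,
    CAST(C.ComputedDigit1 AS decimal(6, 5)) AS ComputedDigit1,
    T.ExpectedPercentageDigit1,
    CAST(C.ComputedDigit2 AS decimal(6, 5)) AS ComputedDigit2,
    T.ExpectedPercentageDigit2
FROM Computed AS C
    INNER JOIN Calculations.BenfordTable AS T
    ON C.Digit = T.ID
ORDER BY C.Digit;
```

Any row where the computed and stored columns disagree beyond the fifth decimal place points to a typo in the INSERT statement.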

Figure 2: Comparison Procedure with Dynamic SQL and Windowing Functions

CREATE PROCEDURE Calculations.BenfordComparisonSP

@SchemaAndTableName as nvarchar(128) = NULL,@ColumnName AS nvarchar(128) = NULL

AS

DECLARE @SQLString nvarchar(max)

SET @SQLString = '

WITH TempCTE

(RecordCount, Digit1, Digit2, Digit3, Digit4)

AS

(SELECT Count(*) OVER (PARTITION BY 1) AS RecordCount,

(' + @ColumnName + ' / CAST(''1'' + REPLICATE(''0'', LEN(CAST(' + @ColumnName + ' AS VARCHAR(255))) - 1) AS bigint)) %10 as Digit1, (' + @ColumnName + ' / CAST(''1'' + REPLICATE(''0'', LEN(CAST(' + @ColumnName + ' AS VARCHAR(255))) - 2) AS bigint)) %10 as Digit2,

(' + @ColumnName + ' / CAST(''1'' + REPLICATE(''0'', LEN(CAST(' + @ColumnName + ' AS VARCHAR(255))) - 3) AS bigint)) %10 as Digit3,

(' + @ColumnName + ' / CAST(''1'' + REPLICATE(''0'', LEN(CAST(' + @ColumnName + ' AS VARCHAR(255))) - 4) AS bigint)) %10 as Digit4

FROM ' + @SchemaAndTableName + '

WHERE ' + @ColumnName + ' IS NOT NULL

),

SecondTempCTE

(RecordCount, Digit, CountForDigit, DigitNumber)

AS

(

SELECT DISTINCT RecordCount, Digit1, Count(Digit1) OVER (PARTITION BY Digit1) AS CountForDigit1, ''CountForDigit1'' AS DigitNumber

FROM TempCTE

UNION

SELECT DISTINCT RecordCount, Digit2, Count(Digit2) OVER (PARTITION BY Digit2) AS CountForDigit2, ''CountForDigit2'' AS DigitNumber

FROM TempCTE

UNION

SELECT DISTINCT RecordCount, Digit3, Count(Digit3) OVER (PARTITION BY Digit3) AS CountForDigit3, ''CountForDigit3'' AS DigitNumber

FROM TempCTE

UNION

SELECT DISTINCT RecordCount, Digit4, Count(Digit4) OVER (PARTITION BY Digit4) AS CountForDigit4, ''CountForDigit4'' AS DigitNumber

FROM TempCTE

)

-- the reason zeros occur is that there are a handful of zero values

SELECT Digit, CountForDigit1, CountForDigit2, CountForDigit3, CountForDigit4,PercentageDigit1,PercentageDigit2,PercentageDigit3,PercentageDigit4,

PercentageDigit1 - (ExpectedPercentageDigit1 * 100) AS DeviationFromExpectedPercentageDigit1,

PercentageDigit2 - (ExpectedPercentageDigit2 * 100) AS DeviationFromExpectedPercentageDigit2,

PercentageDigit3 - (ExpectedPercentageDigit3 * 100) AS DeviationFromExpectedPercentageDigit3,

PercentageDigit4 - (ExpectedPercentageDigit4 * 100) AS DeviationFromExpectedPercentageDigit4,

-- Z = (|observed - expected| - 1/(2n)) / sqrt(expected * (1 - expected) / n), with both proportions on a 0-1 scale

(ABS(CAST(PercentageDigit1 AS float) / 100 - ExpectedPercentageDigit1) - (1 / (2 * CAST(RecordCount AS float)))) / SQRT((ExpectedPercentageDigit1 * (1 - ExpectedPercentageDigit1)) / RecordCount) AS NigriniZStatisticDigit1,

(ABS(CAST(PercentageDigit2 AS float) / 100 - ExpectedPercentageDigit2) - (1 / (2 * CAST(RecordCount AS float)))) / SQRT((ExpectedPercentageDigit2 * (1 - ExpectedPercentageDigit2)) / RecordCount) AS NigriniZStatisticDigit2,

(ABS(CAST(PercentageDigit3 AS float) / 100 - ExpectedPercentageDigit3) - (1 / (2 * CAST(RecordCount AS float)))) / SQRT((ExpectedPercentageDigit3 * (1 - ExpectedPercentageDigit3)) / RecordCount) AS NigriniZStatisticDigit3,

(ABS(CAST(PercentageDigit4 AS float) / 100 - ExpectedPercentageDigit4) - (1 / (2 * CAST(RecordCount AS float)))) / SQRT((ExpectedPercentageDigit4 * (1 - ExpectedPercentageDigit4)) / RecordCount) AS NigriniZStatisticDigit4

FROM

(SELECT RecordCount, Digit, IsNull(CountForDigit1, 0) AS CountForDigit1, IsNull(CountForDigit2, 0) AS CountForDigit2, IsNull(CountForDigit3, 0) AS CountForDigit3, IsNull(CountForDigit4, 0) AS CountForDigit4,

IsNull(CountForDigit1, 0) * CAST(100 AS decimal(38,19)) / (CAST(RecordCount AS decimal(38,19))) AS PercentageDigit1, IsNull(CountForDigit2, 0) * CAST(100 AS decimal(38,19)) / (CAST(RecordCount AS decimal(38,19))) AS PercentageDigit2,

IsNull(CountForDigit3, 0) * CAST(100 AS decimal(38,19)) / (CAST(RecordCount AS decimal(38,19))) AS PercentageDigit3, IsNull(CountForDigit4, 0) * CAST(100 AS decimal(38,19)) / (CAST(RecordCount AS decimal(38,19))) AS PercentageDigit4

FROM SecondTempCTE AS T1

PIVOT (MAX(CountForDigit) FOR DigitNumber IN (CountForDigit1, CountForDigit2, CountForDigit3, CountForDigit4)) AS pvt) AS T2

LEFT JOIN Calculations.BenfordTable AS T3

ON Digit = T3.ID

WHERE Digit IS NOT NULL'

EXEC (@SQLString)
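The digit-parsing expression inside the procedure can be tried in isolation before running the whole thing. The snippet below is a small sketch of the same trick applied to a single literal; the variable name @SampleValue and the value 4827 are hypothetical and only there for illustration.

```sql
-- Sketch: extract the first four digits of one bigint value by dividing by a
-- power of ten built as a string ('1' followed by the right number of zeros).
DECLARE @SampleValue bigint = 4827;

SELECT
    (@SampleValue / CAST('1' + REPLICATE('0', LEN(CAST(@SampleValue AS varchar(255))) - 1) AS bigint)) % 10 AS Digit1,
    (@SampleValue / CAST('1' + REPLICATE('0', LEN(CAST(@SampleValue AS varchar(255))) - 2) AS bigint)) % 10 AS Digit2,
    (@SampleValue / CAST('1' + REPLICATE('0', LEN(CAST(@SampleValue AS varchar(255))) - 3) AS bigint)) % 10 AS Digit3,
    (@SampleValue / CAST('1' + REPLICATE('0', LEN(CAST(@SampleValue AS varchar(255))) - 4) AS bigint)) % 10 AS Digit4;
-- returns 4, 8, 2, 7
```

When the value has fewer digits than the position requested, REPLICATE receives a negative length and returns NULL, so the whole expression evaluates to NULL rather than raising an error.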

…………There’s a lot going on in Figure 2, including dynamic SQL, a pivot, some windowing functions and a digit parsing trick I heavily adapted from Gordon Linoff’s example in the StackOverflow thread “Parsing an Integer Field in SQL.”[20] At present, it’s only working on tinyint, smallint, int and bigint fields, because handling data types like floats and numerics that allow decimal places will require some extra logic I haven’t implemented yet. I will if I get a request to do so or discover a need to; for now, my purpose is just to demonstrate how Benford’s Law might be applied in a SQL Server database. Some cautions are in order here. First, I don’t allow spaces in my T-SQL object names, so you’ll have to add brackets to the stored procedure manually if you want to run it against tables and columns that have them. Second, I haven’t done any optimizations on the procedure at all, or even checked the execution plan yet, so test this out before running it against large tables and views. Third, there are probably a lot of undiscovered logical errors embedded here, since I haven’t tested it thoroughly. It’s also important to keep in mind that if you’re using it against a column that only has one digit, you will naturally get nulls returned for the calculations performed against the second through fourth columns, which I momentarily forgot (causing a moment of panic and the invention of a few brand new curse words). Also be aware that I may not be calculating Nigrini’s Z-Statistic correctly, since I wasn’t able to find any complete examples online to compare my results against. I derived the code from the equations on the Wikipedia page for Benford’s Law[21] and in Durtschi, et al.’s “The Effective Use of Benford’s Law to Assist in Detecting Fraud in Accounting Data.”[22] The latter has directions on how to interpret it though.
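For comparison, the Z-statistic in Durtschi, et al. takes the following form, as best I can tell from my reading of it. Here p_o is the observed proportion for a digit, p_e is the expected Benford proportion and n is the number of records; these symbols are labels chosen for this sketch, and both proportions are on a 0-to-1 scale rather than percentages.

```latex
Z = \frac{\left| p_o - p_e \right| - \dfrac{1}{2n}}{\sqrt{\dfrac{p_e \left(1 - p_e\right)}{n}}}
```

The 1/(2n) term is a continuity correction that is applied only when it is smaller than the absolute difference in the numerator. A Z value above 1.96 is conventionally read as a significant departure at the 5 percent level.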

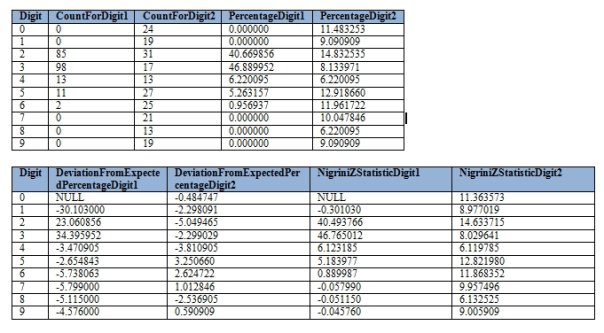

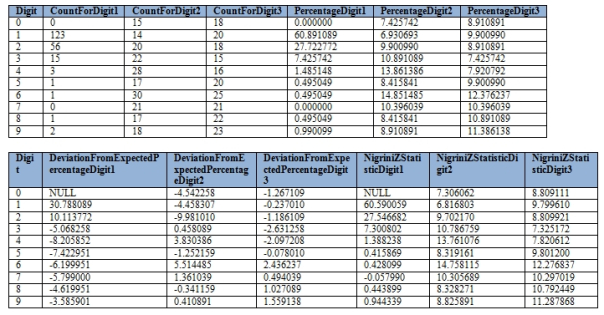

…………If all goes according to plan, however, executing a query like the one in Figure 3 ought to produce a single table with the values in the three tables below, which provide the counts, percentages, deviations and Nigrini Z-stats for the first four digits of that column. Figure 4 does the same for the LactateDehydrogenase column in the same table of the Duchennes database, which is one of three datasets I will be using for practice purposes over the course of my next three or four tutorial series. This tiny nine-kilobyte table of data on a particularly debilitating form of muscular dystrophy is one of many health care research datasets made publicly available through Vanderbilt University’s Department of Biostatistics. In future articles, I will use the much larger Higgs Boson dataset made available through the University of California at Irvine’s Machine Learning Repository and transcriptions of the Voynich Manuscript, an inscrutable medieval tome with an encryption scheme so obscure that even the National Security Agency (NSA) can’t crack it. The problem with using the Voynich Manuscript for this week’s tutorial is that it is the result of an intelligent author rather than a random natural process, one who intentionally kept his words shrouded in mystery and has succeeded for nearly six centuries. This means that it is highly unlikely to follow Benford’s Law. Likewise, the meaning of the seven gigs of float values found in the Higgs Boson dataset is still unclear to me, since I haven’t yet found documentation on its blandly named columns. The significance of the Age column in the Duchennes dataset is self-explanatory though. We can expect significant deviation from Benford’s Law here, given the natural limitations on human longevity and the preponderance of female carriers of childbearing age in the survey population. 
I deleted the results for the third and fourth digits of this column since there were no carriers in the population over the ages of 100 and 1,000, for obvious reasons. The LactateDehydrogenase column likewise had no values with four digits, so I deleted the corresponding results for that digit for the sake of brevity.

Figure 3: Results of the BenfordComparisonSP on the Age Column of the Duchennes Database

EXEC [Calculations].[BenfordComparisonSP] @SchemaAndTableName = N'Health.DuchennesTable', @ColumnName = N'Age'

Figure 4: Results of the BenfordComparisonSP on the LactateDehydrogenase Column

…………Some of the stats for the Digit 0 are of course null, given that it can’t be the first digit of a number. Note that there is an unexpectedly high number of LactateDehydrogenase values that start with 1 and 2, but that the deviations for the rest of the values and digits drop off sharply after that. I knew very little about this enzyme before converting the Duchennes database into a SQL Server database so I figured that it might be a candidate for Benford’s Law, but the data shows that it deviates from it almost as much as the Age column. After the fact, I looked up the normal value range at MedlinePlus and sure enough, the normal values range from 105-333 IU/L (international units per liter).[23] This is why we see such high deviations for 1 and 2 on the first digit, but far smaller deviations on the second and third digits, which are not affected much by the natural range limits. In this case, the unusually high number of values starting with 1 and 2 qualifies as an outlier if we’re analyzing them through the prism of a Benford distribution, but it is easy to pinpoint a highly skewed natural distribution as the cause.

Uses and Misuses of Benford’s Law

…………Like any other statistical tool, Benford’s Law has to be used as carefully as a scalpel, because the slightest slip beyond the proper context and mooring in logic can render it useless or misleading. The literature on Benford’s Law is chock full of conditions that can be used by DBAs and .Net programmers to develop use cases though. The article by Durtschi, et al. states for example that “if the mean of a particular set of numbers is larger than the median and the skewness value is positive, the data set likely follows a Benford distribution. It follows that the larger the ratio of the mean divided by the median, the more closely the set will follow Benford’s law.”[24] They also point out a few common sense examples where Benford’s Law doesn’t apply, such as numbers assigned by humans (like operational codes) and numbers with specific minimum and maximum bounds.[25] The Age column discussed above probably falls under the rubric of the latter. Furthermore, they also warn that in regards to detecting financial fraud, “if there are only a few fraudulent transactions, a significant difference will not be triggered even if the total dollar amount is large. Second, statistically, if the account being tested has a large number of transactions, it will take a smaller proportion of inconsistent numbers to trigger a significant difference from expected than it would take if the account had fewer observations.”[26] It is also apparently possible to jump to conclusions in the opposite direction, given that in a case from 2006, the falsified data used in a fraudulent cancer study was found to have nonetheless followed Benford’s Law.[27] The Wikipedia page also lists some sequences that are likely to fit a Benford distribution, such as Fibonacci numbers, factorials, powers of 2 and “the powers of almost any other number”, and some that are unlikely, like “square roots and reciprocals.”[28]

…………One of the most well-written resources on the topic is accountant Tommie W. Singleton’s “Understanding and Applying Benford’s Law,” which has succinct, convenient lists of use cases, including “credit card transactions, purchase orders, loan data, customer balances, journal entries, stock prices, accounts payable transactions, inventory prices and customer refunds.”[29] He also notes that “examples of data sets that are not likely to be suitable for Benford’s Law include: airline passenger counts per plane; telephone numbers; data sets with 500 or fewer transactions; data generated by formulas (e.g., YYMM#### as an insurance policy number); and Data restricted by a maximum or minimum number (e.g., hourly wage rate).”[30] Large, randomly generated datasets of unassigned numbers without minimum and maximum limits are ideal, particularly those from exponential series of several orders of magnitude.[31] He also writes that “The theory does not hold true for data sets in which digits are predisposed to begin with a limited set of digits. For instance, Benford’s Law will not hold true for data sets of human heights, human weights and intellectual quotient (IQ) scores. Another example would be small insurance claims (e.g., between US $50 and US $100). The theory also does not hold true when a data set covers only one or two orders of magnitude.”
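Several of these rules of thumb can be checked with a quick query before bothering with the full comparison. The following is only a rough sketch that assumes SQL Server 2012 or later (for PERCENTILE_CONT) and a column of positive whole numbers; it uses the Age column of Health.DuchennesTable from Figure 3 as the example, and the column aliases are made up for illustration.

```sql
-- Sketch: quick suitability checks before running a Benford comparison.
-- Assumes SQL Server 2012+ and positive values (zeros and negatives are filtered out).
SELECT DISTINCT
    COUNT(*) OVER () AS RecordCount,    -- Singleton suggests more than 500 records
    AVG(CAST(Age AS float)) OVER () AS Mean,
    PERCENTILE_CONT(0.5) WITHIN GROUP (ORDER BY Age) OVER () AS Median,
    -- Durtschi, et al.: a mean well above the median favors a Benford fit
    AVG(CAST(Age AS float)) OVER ()
        / NULLIF(PERCENTILE_CONT(0.5) WITHIN GROUP (ORDER BY Age) OVER (), 0) AS MeanToMedianRatio,
    -- base-10 log of max/min approximates the orders of magnitude spanned
    LOG10(MAX(CAST(Age AS float)) OVER ()
        / MIN(CAST(Age AS float)) OVER ()) AS OrdersOfMagnitudeSpanned
FROM Health.DuchennesTable
WHERE Age > 0;
```

A small record count, a mean-to-median ratio near or below 1, or a span of only one or two orders of magnitude would each be a hint that deviations from Benford’s Law reflect the nature of the data rather than anything suspicious.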

…………In addition, we need to keep in mind that when applying this to subjects like accounting that are human inventions rather than observations of purely physical phenomena, we can’t necessarily expect them to stay static for deep reasons involving mind-blowing topics like free will and determinism. I suspect that is one of the reasons behind the puzzling increase in zeroes over time in the accounting field, which Jialan Wang discusses in depth in an interesting article on Benford’s Law.[32] Keep in mind that fraud detection is based on the principle that deviation from expected random values is often a sign of purposeful interference by a non-random process, i.e. a human intelligence. In the case of the mysterious increase in zeroes in the accounting field, we may be seeing the accidental results of half-conscious interference by human intelligences, who are unwittingly affecting the outcome of Benford’s Law by such gradual methods as routine changes in accounting practices.

To make a long story short, use your head when applying Benford’s Law. That includes using the code provided here, given that I have no professional experience with this and am learning as I go. If you can grasp Benford’s Law, the rest of the series will be a breeze – at least until the final article on a metric called Mahalanobis Distance. That will probably be the most difficult installment and will help me transition into the Information Measurement with SQL Server series, which will include implementations of various other distances and divergences. Between now and then I’ll probably tackle such simpler means of outlier detection as Z-Scores, Grubbs’ Test, the Tietjen-Moore Test, the Generalized Extreme Studentized Deviate (GESD) Test and Cook’s Distance, perhaps along with Interquartile Range, Dixon’s Q-Test, Peirce’s Criterion, Chauvenet’s Criterion and the Modified Thompson Tau test. I’m not certain of the order I’ll tackle them in yet or whether or not some of the topics might be combined, given that I haven’t coded them yet. The calculations and logic for some of these are a lot easier on the brain than their dry-sounding names, while others like Z-Scores are commonly known statistics. Along the way I will probably also write an article on Visual Outlier Detection with Reporting Services using the various graphs and charts RS provides, as well as touch on the SSDM Clustering algorithm again. As with the Rickety Stairway series on SSDM, I’m bound to make mistakes, but will probably be able to provide some benefit to other DBAs and data miners as I learn through misadventure. Due to my inexperience, I expect many of my articles to qualify as outliers in and of themselves, but by the end of the series, I aim to acquire the skills to recognize them as such, quickly and accurately.

[1] p. xii, LaRose, Daniel T., 2005, Discovering Knowledge in Data: An Introduction to Data Mining. Wiley-Interscience: Hoboken, N.J.

[2] Which I have yet to do.

[3] ASTM International (formerly known as the as the American Society for Testing and Materials), which “publishes voluntary consensus technical standards for a wide range of materials, products, systems, and services” sells a guide called Standard Practice for Dealing With Outlying Observations, if you’ve got $48 to spare for a .pdf copy (the hard cover’s the same price). That isn’t happening on my budget, but there’s the link if you want a more professional guide to the topic. I originally found out about the ASTM publication from the Wikipedia page “Outlier” at http://en.wikipedia.org/wiki/Outlier.

[4] IBID. To make sure I didn’t leave anything out, I consulted the Wikipedia page “Outlier” at http://en.wikipedia.org/wiki/Outlier, which is where I learned that mixed distributions can also be problematic.

[5] For a quick gloss on the topic of the misuse of stats in health care, see the Influential Points.com webpage “Statistical Errors in Research: Use and Misuse of Statistics in Biology,” at http://influentialpoints.com/Training/Statistical_Errors_in_Research_Use_and_misuse_of_statistics_in_biology.htm . Earlier this year another meta-analysis was released showing that half of the journal articles surveyed contained statistical errors, but I wasn’t immediately able to find a reference to that particular one. The webpage above is sufficient to help someone interested in further reading get started.

[6] Berger, Arno and Hill, Theodore P., 2011, “Benford’s Law Strikes Back: No Simple Explanation in Sight for Mathematical Gem,” published by Springer Science+Business Media, LLC, Vol. 33, No. 1. Available online at http://people.math.gatech.edu/~hill/publications/PAPER%20PDFS/BenfordsLawStrikesBack2011.pdf.

[7] Tödter, Karl-Heinz, 2009, “Benford’s Law as an Indicator of Fraud in Economics,” pp. 339-351 in German Economic Review, August 2009. Vol. 10, No. 3. I think I merely read the abstract of this one, or references to it, but the important thing for my point is to know that the article exists.

[8] Miller, Steven J., 2008, “Benford’s Law and Fraud Detection, or Why the IRS Should Care About Number Theory!” Published as an outline at the Bronfman Science Lunch, Oct. 21, 2008, at Williams College. Available online at http://web.williams.edu/Mathematics/sjmiller/public_html/math/talks/Benford_Williams20min.pdf.

[9] See Fewster, R.M., 2009, “A Simple Explanation of Benford’s Law,” pp. 26-32 in The American Statistician, February 2009, Vol. 63, No. 1. Available online at https://www.stat.auckland.ac.nz/~fewster/RFewster_Benford.pdf.

[10] What good is a blog post on stats without a little “dry humor?”

[11] See the webpage “Benford’s Law” at the Wolfram Mathworld website, published by Eric W. Weisstein.

[12] See Bogomolny, Alexander, 2014, “Benford’s Law and Zipf’s Law,” published at the Interactive Mathematics Miscellany and Puzzles website. Available online at http://www.cut-the-knot.org/do_you_know/zipfLaw.shtml. The tale is related in more depth in Fewster, p. 26.

[13] p. 26, Fewster.

[14] See Bogomolny.

[15] For a readable introduction to that topic for non-mathematicians, I highly recommend Gleick, James, 1988, Chaos: Making a New Science. Penguin: New York.

[16] p. 27, Fewster.

[17] p. 90, Berger and Hill.

[18] At least according to a famous quip by Igor Sikorsky (1889–1972), one of the inventors of the helicopter.

[19] I originally used Weisstein’s figures, until I found a more detailed table in Nigrini, Mark J. and Mittermaier, Linda J., 1997, “The Use of Benford’s Law as an Aid in Analytical Procedures,” pp. 52-67 in Auditing: A Journal of Practice and Theory, Fall 1997. Vol. 16, No. 2. Reprinted at the University of North Carolina at Charlotte’s Belk College of Business web address http://belkcollegeofbusiness.uncc.edu/cwiggins/acct6210/Lecture%20Notes/Nigrini%20article%20pdf1302507.pdf. This work in turn cites Nigrini, M. J., 1996, “Taxpayer Compliance Application of Benford’s Law,” pp. 72-92 in Journal of the American Taxation Association. Vol. 18, No. 1.

[20] See Linoff, Gordon, 2013, response to the thread “Parsing an Integer Field in SQL,” published Jan. 14, 2013 at the StackOverflow.com website. Available online at http://stackoverflow.com/questions/14319107/parsing-an-integer-field-in-sql.

[21] See the Wikipedia page “Benford’s Law,” available at http://en.wikipedia.org/wiki/Benford’s_law.

[22] pp. 25-26, Durtschi, Cindy; Hillison, William and Pacini, Carl, 2004, “The Effective Use of Benford’s Law to Assist in Detecting Fraud in Accounting Data,” pp. 17-33 in Journal of Forensic Accounting, Vol. 5. Available online at the University of South Florida St. Petersburg webpage http://faculty.usfsp.edu/gkearns/Articles_Fraud/Benford%20Analysis%20Article.pdf.

[23] See the MedlinePlus webpage “Lactate Dehydrogenase Test,” available at http://www.nlm.nih.gov/medlineplus/ency/article/003471.htm.

[24] p. 24, Durtschi, et al. They cite “Wallace (2002).”

[25] IBID., pp. 23-24.

[26] IBID., p. 26.

[27] Diekmann, Andreas and Jann, Ben, 2010, “Benford’s Law and Fraud Detection: Facts and Legends,” pp. 397–401 in German Economic Review. Vol. 11, No. 3. Available online at http://www.socio.ethz.ch/socio/news/docs/Diekmann_Jann_GER_2010_1.pdf.

[28] See the Wikipedia page “Benford’s Law,” available at http://en.wikipedia.org/wiki/Benford’s_law.

[29] Singleton, Tommie W., “Understanding and Applying Benford’s Law,” undated article published at the ISACA website and retrieved Aug. 11, 2014. Available online at http://www.isaca.org/Journal/Past-Issues/2011/Volume-3/Pages/Understanding-and-Applying-Benfords-Law.aspx.

[30] IBID. I added the semi-colons myself in order to convert the list into a sentence, which I hope is permissible.

[31] IBID.

[32] Wang, Jialan, 2011, “Benford’s Law: A Revised Analysis,” published Oct. 24, 2011 at the Studies in Everyday Life: Investigations and Experiments on Food, Ethics, Economics, and the Environment website. Available online at http://econerdfood.blogspot.com/2011/10/benfords-law-revised-analysis.html