Or “If one index is good, surely many indexes (indexes? indices? indi?) will be better”

Or “If one index is good, surely many indexes (indexes? indices? indi?) will be better”

This is a question that comes up very often on the forums. Something along the lines of:

I have a query with multiple where clause conditions on a table. Should I create one index for each condition, or one index with all the columns in it?

The question basically boils down to this: Which is more optimal and more likely for the optimiser to pick, a single seek operation against a wide index that seeks on all three conditions in one go, or three seek operations against three indexes followed by a join to get back the final set of rows.

One thing to keep in mind is that one of the jobs of an index is to reduce the number of rows in consideration for a query as early as possible in the query’s execution.

So let’s take a made-up example. Let’s say we have a table with a number of columns in it. A query is run against that table with three conditions in the where clause

WHERE ColA = @A AND ColB = @B AND ColC = @C

Let’s further say that 1000 rows qualify for the condition ColA = @A, 15000 rows qualify for ColB = @B and 30000 rows qualify for ColC = @C. The total number of rows that qualify for all three conditions is 25.

Which sounds like it would be more efficient?

- Seek on an index with all three columns and retrieve just 25 rows

- Seek on an index on ColA, retrieve 1000 rows, seek on an index on ColB, retrieve 15000 rows, seek on an index on ColC, retrieve 30000 rows then join the three result-sets together to get the desired 25 rows (called an Index Intersection)

Time for some tests to find out.

CREATE TABLE TestingIndexUsage ( id INT IDENTITY PRIMARY KEY, FilterColumn1 INT, FilterColumn2 INT, FilterColumn3 INT, Filler CHAR(500) DEFAULT ''-- simulate other columns in the table. ) GO INSERT INTO TestingIndexUsage (FilterColumn1, FilterColumn2, FilterColumn3) SELECT TOP ( 1000000 ) ABS(CHECKSUM(NEWID()))%200, ABS(CHECKSUM(NEWID()))%40, ABS(CHECKSUM(NEWID()))%20 FROM msdb.sys.columns a CROSS JOIN msdb.sys.columns b GO

First off, I’m going to create three individual indexes on the three filter columns and see what kind of plan SQL comes up with.

CREATE INDEX idx_Temp_FilterColumn1 ON dbo.TestingIndexUsage (FilterColumn1) CREATE INDEX idx_Temp_FilterColumn2 ON dbo.TestingIndexUsage (FilterColumn2) CREATE INDEX idx_Temp_FilterColumn3 ON dbo.TestingIndexUsage (FilterColumn3)

And the query…

SELECT ID FROM dbo.TestingIndexUsage WHERE FilterColumn1 = 68 -- 4993 matching rows AND FilterColumn2 = 26 -- 24818 matching rows AND FilterColumn3 = 3 -- 49915 matching rows

The comments show how many rows each predicate returns alone. Combined they return 19 rows.

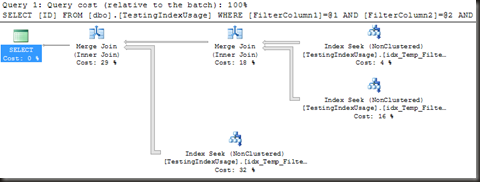

The plan shows the semi-expected index intersection. Seeks on 3 indexes, two merge join operators to join the three resultsets into one.

But what about the performance characteristics?

Table ‘TestingIndexUsage’. Scan count 3, logical reads 150, physical reads 0.

SQL Server Execution Times:

CPU time = 422 ms, elapsed time = 435 ms.

The reads aren’t very high (as the indexes are extremely narrow), but that CPU time is not exactly low. Almost half a second on the CPU to return 19 rows from a 1 million row table? Not good, especially if this is going to run often.

Right, so that’s the three separate indexes. What about the case of a single index with all three columns. In this case, because all three are SARGable equality predicates, the order of the columns isn’t critical for index usage, so I’ll put them in order of selectivity.

DROP INDEX idx_Temp_FilterColumn1 ON dbo.TestingIndexUsage DROP INDEX idx_Temp_FilterColumn2 ON dbo.TestingIndexUsage DROP INDEX idx_Temp_FilterColumn3 ON dbo.TestingIndexUsage CREATE INDEX idx_Temp_FilterColumn123 ON dbo.TestingIndexUsage (FilterColumn1, FilterColumn2, FilterColumn3)

And run the query again.

As kinda expected, the execution plan has a single index seek operation. Exec plan looks cleaner, what do the performance characteristics say?

Table ‘TestingIndexUsage’. Scan count 1, logical reads 3, physical reads 0.

SQL Server Execution Times:

CPU time = 0 ms, elapsed time = 0 ms.

Just about says it all. 147 fewer reads and a 100% reduction in CPU cost. The reduction in reads isn’t going to make a major difference, the reads were low anyway, but the reduction in CPU cost is going to make an impact if this query is frequently run.

So what can we conclude from this?

The optimal index for a query with multiple conditions in the where clause is a single index with all the columns that are used in the where clause in it. The order of these columns may matter, depending on how they are used in the where clause (see Equality predicates and Inequality predicates)

SQL can use multiple indexes on a single table (Index Intersection), but it’s not the most efficient option. It’s worth nothing that SQL won’t always chose to do the index intersection. It may quite well decide that a table/clustered index scan is faster than the multiple seeks and joins that the intersection will do. Or, if one of the conditions is very selective, it may decide to seek on one of the indexes, do key lookups to fetch the rest of the columns and then do secondary filters to evaluate the rest of the predicates.

Now it may not always be possible to create a perfect index for all queries on a table, so in some cases, especially for less important queries, having multiple indexes that SQL can seek and intersect may be adequate, but for the more critical, more frequently run queries you probably want a single index with the appropriate columns.

As an aside, this is why the often-mentioned index ‘strategy’ of a single column index on each column of a table is near-useless and certainly not worth the title ‘strategy’.