Ok, as myths go, its a pretty weak one. In fact, it is true, this whitepaper explicitly states that. But hand in hand with that statement goes another one, “Table variables will always estimate to one row”. This is most definitely false, if there are no statistics then sql server can, at times, default to its ‘guessing’ of distribution of data based upon row counts. This behaviour can even further muddy the water of the old “Which is better, table variables or temp tables” argument.

To demonstrate this, firstly we need to populate a numbers table

create table numbers

(

Num integer primary key

)

go

insert into numbers

Select top(1000) ROW_NUMBER() over (order by (select null))

from sys.columns a cross join sys.columns b

Now we execute the following code

Declare @TableVar Table

(

ID integer not null primary key,

Mod10 integer not null

)

insert into @TableVar(ID,Mod10)

Select top(20) num,num%10

from numbers

order by num

Select tv.Id,num

from @TableVar tv

join numbers

on tv.ID = num

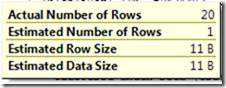

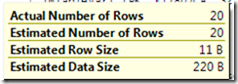

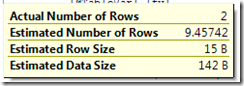

and looking at the execution plan, we see :

drop table IDs

go

create table IDs

(

Id integer primary key,padding char(255)

)

go

insert into IDs(Id,padding)

Select top(1) num,'xxx'

from numbers

order by num

go

drop procedure TableVarTest

go

create procedure TableVarTest

as

declare @TableVar Table

(

ID integer not null,

Mod10 integer not null

)

insert into @TableVar(ID,Mod10)

Select top(20) num,num%10

from numbers

order by num

select COUNT(*)

from AdventureWorks2008r2.dbo.Customer C

join AdventureWorks2008r2.dbo.CustomerOrders CO

on C.CustomerId = CO.CustomerId

Select tv.Id,IDs.id

from @TableVar tv

join IDs

on tv.ID = IDs.Id

where mod10 =0

go

insert into IDs(Id,padding)

Select top(1000) num,'xxx'

from numbers

where not exists(select id from IDs where id = num )

order by num

go

exec sp_recompile ids

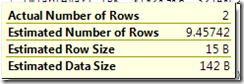

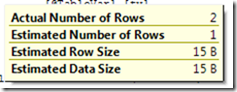

and then re-execute the stored procedure