I recently got a great question from one of our amazing clients about some of the differences in virtual machine disk presentation, and I thought I’d share the answer here because it’s a question I receive often.

Some hypervisors (including some hyper-converged compute platform vendors who shall remain nameless) do not give you much flexibility in the way storage is presented to a VM. You pick the VM and click to add drives. That’s it. No knobs to turn, no mess, no fuss. It’s meant to be easy, and for almost all situations, that’s just fine.

But that might not be the optimal way to configure a VM that is hungry for I/O (such as a large SQL Server) if your platform gives you the option to tune it a bit more closely.

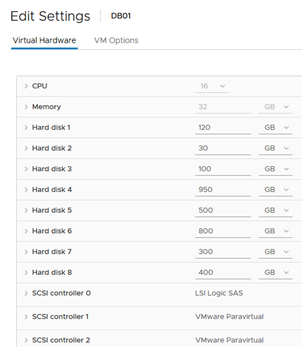

Take this for example from our internal VMware lab. This is one of our big research SQL Servers. At first glance, the configuration looks simple – it’s a straightforward VM with virtual disks connected.

But do you see the line items for the SCSI controllers at the bottom? VMware gives you the ability to define more than one disk controller (up to four). Hyper-V does too. VMware also gives you the unique ability to change the ‘type’ of the controller, because each one emulates a specific hardware device. VMware’s default controller for Windows guests emulates the LSI Logic SAS controller, because that driver is built into the Windows driver store and *just works* without having to do anything fancy.

But… it’s not necessarily there for speed. It’s there for compatibility so that you can boot up a VM without having to deal with extra drivers.

A while back, VMware created the Paravirtual SCSI (PVSCSI) controller, whose driver comes with the VMware Tools package. It gives a 10-30% bump in performance (depending on the speed of the underlying storage) because it’s built for speed from the beginning. The driver just isn’t native to Windows, so I don’t personally feel comfortable using it for the C: drive controller unless required. You can change the controller type on a per-controller basis, so we use PVSCSI by default for the controllers that hold the non-OS SQL Server object drives.

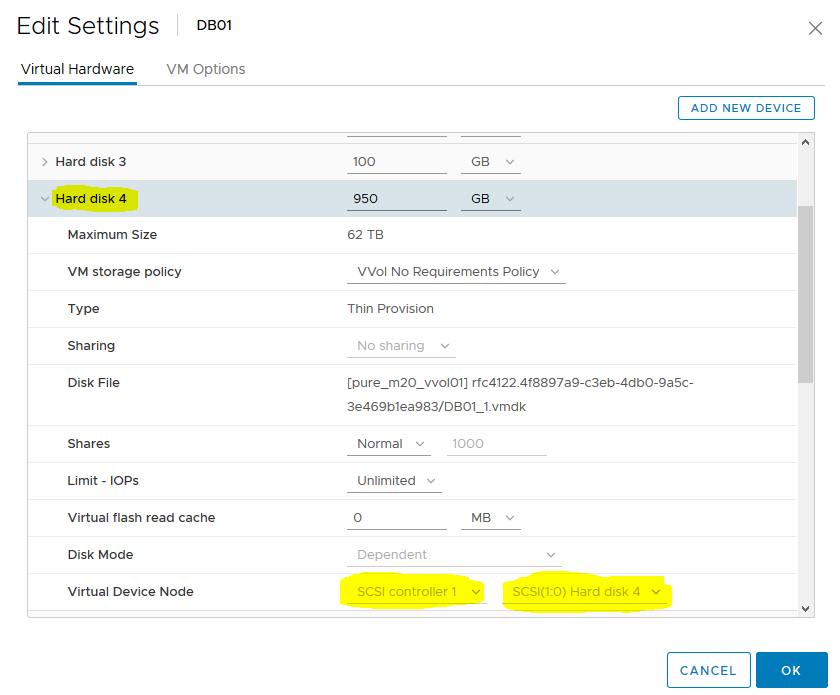

Also, in VMware and Hyper-V, you can choose where each disk sits on the emulated hardware device’s disk chain. It’s a holdover from legacy times, but this slotting mechanism is actually how disks get assigned to controllers: each disk has a controller number and a slot (unit) number on that controller.
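To make the slotting idea concrete, here is a minimal Python sketch of how you might plan a layout before clicking through the VM settings. It is only a planning aid and does not talk to any hypervisor API. The names (`plan_layout`, `Slot`) and the drive labels are made up for illustration, and it assumes classic VMware-style limits: four SCSI controllers per VM, 16 unit numbers per controller, and unit 7 reserved for the controller itself. Check the limits for your own platform and version.

```python
from dataclasses import dataclass

# Assumptions (classic VMware-style limits; verify for your platform):
MAX_CONTROLLERS = 4        # SCSI controllers per VM
UNITS_PER_CONTROLLER = 16  # unit numbers 0..15 on each controller
RESERVED_UNIT = 7          # unit taken by the controller itself


@dataclass(frozen=True)
class Slot:
    """One disk placed at a controller:unit address."""
    disk: str
    controller: int
    unit: int

    @property
    def address(self) -> str:
        return f"SCSI({self.controller}:{self.unit})"


def plan_layout(os_disk, data_disks, data_controllers=3):
    """Keep the OS disk on controller 0 and spread the data disks
    round-robin across controllers 1..data_controllers."""
    if not 1 <= data_controllers <= MAX_CONTROLLERS - 1:
        raise ValueError("data_controllers must be between 1 and 3")

    usable_units = [u for u in range(UNITS_PER_CONTROLLER) if u != RESERVED_UNIT]
    if len(data_disks) > data_controllers * len(usable_units):
        raise ValueError("too many data disks for the available slots")

    layout = [Slot(os_disk, 0, 0)]
    for i, disk in enumerate(data_disks):
        controller = 1 + i % data_controllers     # rotate across controllers
        unit = usable_units[i // data_controllers]  # next free slot on it
        layout.append(Slot(disk, controller, unit))
    return layout


if __name__ == "__main__":
    # Hypothetical drive labels for a SQL Server VM.
    for slot in plan_layout("C: OS", ["D: data", "L: logs", "T: tempdb", "E: data 2"]):
        print(slot.address, slot.disk)
```

In this sketch the OS disk stays alone on controller 0 (the one you would leave as LSI Logic SAS), and each data controller only gets a second disk once every data controller already has one.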

Contrary to popular belief (if you’re a nerd like me), Windows queues I/O by disk controller, not by drive. So do the hypervisors, all of them, KVM included (it’s what sits underneath many of the hypervisors out there that aren’t VMware or Hyper-V). By adding disk controllers to VMs with incredibly heavy I/O demand, we give Windows more I/O queues to use, so it can channel I/O out of the OS independently on each one. The hypervisor works in a similar manner, and with both of these in place, we can reduce the latency induced inside Windows and the hypervisor and improve performance. You can see this on very I/O-hungry VMs by comparing the Windows Perfmon disk latency metrics with the hypervisor-layer metrics. During heavy I/O windows, Windows will probably show greater per-drive disk latency than the hypervisor reports. That gap is the Windows layer queueing up the I/O requests and delaying them slightly.
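If you want to put a number on that gap, one rough approach is to line up latency samples from both layers and look at the difference. The Python sketch below assumes you have already exported time-aligned samples for the same drive, both converted to milliseconds (Perfmon’s `Avg. Disk sec/Transfer` counter reports seconds, for instance). The function name and the sample values in the usage section are hypothetical, not measurements from our lab.

```python
from statistics import mean


def guest_queueing_overhead(guest_ms, hypervisor_ms):
    """Estimate latency added above the hypervisor layer.

    guest_ms      -- per-interval disk latency seen inside Windows (ms)
    hypervisor_ms -- latency the hypervisor reports for the same
                     virtual disk over the same intervals (ms)
    """
    if not guest_ms or len(guest_ms) != len(hypervisor_ms):
        raise ValueError("need two non-empty, equally sized sample lists")

    # Clamp at zero: sampling jitter can make the guest look faster
    # than the hypervisor for a single interval.
    deltas = [max(g - h, 0.0) for g, h in zip(guest_ms, hypervisor_ms)]

    return {
        "avg_guest_ms": mean(guest_ms),
        "avg_hypervisor_ms": mean(hypervisor_ms),
        "avg_overhead_ms": mean(deltas),
        "worst_overhead_ms": max(deltas),
    }


if __name__ == "__main__":
    # Made-up placeholder samples, purely to show the call shape.
    summary = guest_queueing_overhead([4.0, 10.0, 6.0], [3.0, 4.0, 6.0])
    for name, value in summary.items():
        print(f"{name}: {value:.2f}")
```

Running the same comparison before and after adding controllers, under a similar workload, is a simple way to check whether the extra queues are actually helping in your environment.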

So, do your testing and due diligence on your particular environment, and if you find that spreading out your workload among multiple drive controllers improves your performance, make it your standard!