By Steve Bolton

…………When I was about 12 years old, I suddenly discovered football. Many lessons still awaited far in the future – such as the risks of being a Buffalo Bills fan, the explosive sound footballs make when they hit a Saguaro cactus, or the solid reasons for not playing tackle on city streets – but I did make one important finding right away. During the following summer vacation, I fought off boredom by looking up old rosters (in a sort of precursor to fantasy football) and running the previous year’s standings through the NFL’s comprehensive formula for creating schedules. The rules were simple: teams with higher won-loss percentages were the victors whenever they were scheduled against opponents with worse records the previous year, then at the end of each fake season, I ran the new standings through the scheduling formula again. Based on the initial patterns I expected my eccentric amusement to last for a while, but what I ended up getting was a hard lesson in information entropy. No matter what standings I plugged into the formula, they eventually stopped changing, given enough iterations; the best I could get was permanent oscillations of two teams who swapped positions in their division each year, usually with records of 9-7 and 7-9. It occurred to me that I could increase the range of possible patterns by changing the scheduling rules, but that I was merely putting off the inevitable, for in due time they would freeze in some configuration or another. The information system was purely self-contained and was not dependent on any input of physical energy whatsoever; some of my favorite underrated players of the day, like Neil Lomax and Danny White, might have been able to change the course of a real NFL season, but they were powerless to alter this one. This experience helped me to quickly grasp the Law of Conservation of Information[1] when I heard of it as an adult. 
It is analogous to the Second Law of Thermodynamics, but for a different quantity that isn’t really interchangeable. All of the energy of the sun itself could not affect the outcome of that closed information system, which was doomed to run down sooner or later, once set in motion. The only way to change that fate was to input more information into the system, by adding new scheduling rules, random standing changes and new teams. This is almost precisely what occurs in the Third Law of Thermodynamics[2], in which a closed physical system slowly loses its ability to change as it approaches equilibrium of some kind.

…………Both sets of principles are intimately related to entropy, which is the topic of this segment of my amateur self-tutorial series on coding various information metrics in SQL Server. Nevertheless, both are subject to misunderstandings, some of which are innocuous and others of which can be treacherously fallacious, particularly when they are used interchangeably with broad terms like “order.” The most common errors with information theory occur in its interpretations rather than its calculations, since it intersects in complex ways with deep concepts like order, as well as many of the other fundamental quantities I hope to measure later in this series, like redundancy, complexity and the like. As I touched on in my lengthy introduction to Shannon’s Entropy, order is in the eye of the beholder. The choice to search for a particular order is thus entirely subjective, although whether or not a particular empirical observation meets the criteria inherent in the question is entirely objective. Say, for example, that the arrangement of stars was considered “random,” another broad term which intersects with the meaning of “order” but is not synonymous. If we were to discover a tattoo on the arm of some celestial being corresponding to the arrangement of stars in our particular universe, we would have to assume that it was either put there through conscious effort or as the output of some unfathomable natural process. Either way, it would require an enormous amount of energy to derive that highly complex order. It might also require a lot of energy to derive an unnaturally simple order through the destruction of a fault-tolerant, complex one; furthermore, in cryptography, it sometimes requires a greater expenditure of resources to derive deceptively simple information, which is actually generated from a more complex process than the information it is designed to conceal. 
A perfectly uniform distribution is often difficult to derive either by natural processes or intelligent intervention; the first examples that spring to mind are the many machinists I know, who do highly skilled labor day-in, day-out to create metal parts that have the smoothest possible tolerances. If kids build a sand castle, that’s one particular form of order; perhaps a real estate developer would prefer a different order, in which case he might bulldoze the sand castle, grade the beach and put up a hotel. Both require inputs of energy to derive their particular orders, which exhibit entirely different levels of complexity. Neither “disorder” nor “order” is synonymous with entropy, which only measures the capacity of an information, thermodynamic or quantum system to be reordered under its own impetus, without some new input of information or energy from some external source.

Adjusting Hartley and Shannon Entropy by Boltzmann’s Constant

As we shall see, coding the formulas for the original thermodynamic measures isn’t terribly difficult, provided that we’ve been introduced to the corresponding information theory concepts covered in the first four blog posts of this series. Despite the obvious similarities in the underlying equations, measures of entropy are the subject of subtle differences in the way these fields handle them. In the physical sciences, entropic states are often treated as something to be avoided, particularly when cosmic topics like the Heat Death of the Universe are brought up.[3] Entropy is often embraced in data mining and related fields, because it represents incomplete knowledge; paradoxically, the higher the entropy, the higher the potential information gain from adding to our existing knowledge. The more incomplete our understanding is, the more benefit we can derive from “news,” so to speak.

…………It’s not surprising that the measures of entropy in thermodynamics and information theory have similar formulas, given that the former inspired the latter. Information theory repaid this debt to physics by giving birth to quantum information theory and spotlighting the deep principles which give rise to the laws of thermodynamics – which were originally empirical observations rather than inevitable consequences of logic. Both ultimately stem from the same principles of math and logic, like the Law of Large Numbers, but as usual, I’ll skip over the related theorems, proofs and lemmas to get to the meat and potatoes. Nor am I going to explain the principles of thermodynamics any more than I have to; this blog is not intended to be a resource for basic science, especially since that topic is much older and better known than information theory, which means there are many more resources available for readers who want to learn more. This detour in my Information Measurement series is posted mainly for the sake of completeness, as well as the off-chance that some readers may encounter use cases where they have to use T-SQL versions of some of these common thermodynamic formulas; I also hope to illustrate the differences between physical and informational entropies, as well as demonstrate how more concepts of thermodynamics can be assimilated into information theory and put to good use in our databases.

…………On the other hand, I’m going to make another exception to my unwritten rule against posting equations on this blog, in order to point out the obvious similarities between Shannon’s Entropy and its cousin in thermodynamics, the Gibbs Entropy (the names Shannon-Gibbs, Boltzmann-Gibbs, Boltzmann–Gibbs–Shannon, BGS and BG are all used interchangeably for it).[4] As I noted in Information Measurement with SQL Server, Part 2.1: The Uses and Abuses of Shannon’s Entropy, $H = -\sum_i p_i \log_b p_i$ is one of the most famous equations in the history of mathematics. Except for the fact that it multiplies the result by the infamous Boltzmann’s Constant, $k_B$,[5] the Gibbs Entropy is practically identical: $S = -k_B \sum_i p_i \log_b p_i$. The thermodynamic formulas are distinguishable from their information theory kin in large part by the presence of this constant, which is measured in different branches of science via 15 different units[6]; in the code in Figure 1, I used the values corresponding to the three main units used in information theory, which I introduced in the article on Hartley’s Function. Its namesake, Austrian physicist Ludwig Boltzmann (1844-1906), was another one of those unbalanced math whizzes who bequeathed us many other crucial advances in the hard sciences, but who was eccentric to the point of self-destruction; he was in all likelihood a manic depressive, which may have led to his suicide attempts.[7] He also feuded with Ernst Mach (1838-1916), another influential Austrian physicist – yet that may have been to his credit, given that Mach was one of the last holdouts in the field who opposed the existence of atoms.[8]
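For readers who prefer display form, the two formulas just mentioned can be set side by side. This is only a restatement under the conventions used in this article: $p_i$ is the proportion of the $i$-th distinct value, $b$ is the logarithm base and $k_B$ is Boltzmann’s Constant expressed in whatever unit goes with that base. Physics texts normally write the Gibbs version with the natural logarithm and $k_B$ in joules per kelvin, so the $\log_b$ form below is an assumption made to match the sample code.

```latex
\begin{align}
H &= -\sum_i p_i \log_b p_i && \text{(Shannon's Entropy)} \\
S &= -k_B \sum_i p_i \log_b p_i = k_B \, H && \text{(Gibbs Entropy)}
\end{align}
```

Written this way, the only difference between the two is the multiplication by $k_B$, which is why the T-SQL for one can be recycled for the other.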

…………Boltzmann also lent his name to the Boltzmann Entropy, whose formula is obviously similar to the Hartley function. As I pointed out in Information Measurement with SQL Server, Part 1: A Quick Review of the Hartley Function, deciding which count of records to plug into the Hartley measure is not as straightforward as it seems; as expected, the DISTINCT count version returned the same results as a uniform distribution plugged into Shannon’s equation, but counting repeated records (as we would in a multiset) can also tell us useful things about our data. With Boltzmann’s Entropy, we’re more likely to use a really expansive definition of cardinality that incorporates all permissible arrangements in a particular space. This would be equivalent to using the Hartley measure across all permissible states, regardless of their probabilities. This would in turn equal the Shannon’s Entropy on a uniform distribution where all permissible states – not just the ones with nonzero values – are included. Counting all permissible states is usually much easier in physics than determining their probabilities, which is in turn a far cry from determining the number of unique particle types, let alone the exact counts of particles. In modern databases, this relationship is almost completely reversed; in SQL Server, table counts are preaggregated and thus instantly available, while DISTINCT clauses are costly in T-SQL and even more so in Analysis Services. To make matters worse, calculating a factorial on values higher than about 170 is impossible, even using the float data type; this precludes counting all of the permissible values in a decimal(38,0) column, which number on the order of 10^38, and plugging the result into the Boltzmann Entropy, which uses factorials to precalculate the counts plugged into it. In other words, we simply can’t use the Boltzmann Entropy on all permissible values, if the universal set would contain more than 170 members. 
For that reason, I used the old-fashioned SQL Server count, in this case on the first float column of the same Higgs Boson dataset I’ve been using for practice purposes for several tutorial series.[9] Despite the fact that the table takes up 5 gigabytes and consists of 11 million rows, my sample code ran in just 1 second on my clunker of a development machine. The code is actually quite trivial and can be condensed from the deliberately verbose version below:

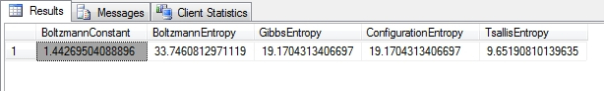

Figure 1: Various Measures of Thermodynamic Entropy

DECLARE @LogarithmBase decimal(38,36)

SET @LogarithmBase = 2 -- alternatives: 2.718281828459045235360287471352662498 (e, rounded to the 36 decimal places the variable holds) or 10

DECLARE @EntropicIndexParameter float = 0.99 -- the Tsallis index, which cannot equal 1

DECLARE @Count bigint, @Mean decimal(38,32), @StDev decimal(38,32), @GibbsEntropy float, @BoltzmannEntropy float, @TsallisEntropy float, @ConfigurationEntropy float, @BoltzmannConstant float

-- Boltzmann's Constant in information units: 1 / ln(2) for bits, 1 / ln(10) for Hartleys and 1 for nats

SELECT @BoltzmannConstant = CASE WHEN @LogarithmBase = 2 THEN 1 / CAST(Log(2) AS float)

WHEN @LogarithmBase = 10 THEN 1 / CAST(Log(10) AS float)

WHEN @LogarithmBase = 2.718281828459045235360287471352662498 THEN 1

ELSE NULL END

-- the plain row count feeds the Boltzmann Entropy and the proportions

SELECT @Count = Count(*)

FROM Physics.HiggsBosonTable

WHERE Column1 IS NOT NULL

DECLARE @EntropyTable table

(Value decimal(33,29),

ValueCount bigint,

Proportion float

)

-- one row per distinct value, with its share of the total count

INSERT INTO @EntropyTable

(Value, ValueCount, Proportion)

SELECT Value, ValueCount, ValueCount / CAST(@Count AS float) AS Proportion

FROM (SELECT Column1 AS Value, Count(*) AS ValueCount

FROM Physics.HiggsBosonTable

WHERE Column1 IS NOT NULL

GROUP BY Column1) AS T1

SELECT @GibbsEntropy = -1 * @BoltzmannConstant * SUM(CASE WHEN Proportion = 0 THEN 0 ELSE Proportion * CAST(Log(Proportion, @LogarithmBase) as float) END),

@TsallisEntropy = (1 / CAST(@EntropicIndexParameter - 1 AS float)) * (1 - SUM(CAST(Power(Proportion, @EntropicIndexParameter) AS float))),

@ConfigurationEntropy = -1 * @BoltzmannConstant * SUM(Proportion * Log(Proportion, @LogarithmBase))

FROM @EntropyTable

SELECT @BoltzmannEntropy = @BoltzmannConstant * Log(@Count, @LogarithmBase)

SELECT @BoltzmannConstant AS BoltzmannConstant, @BoltzmannEntropy AS BoltzmannEntropy, @GibbsEntropy AS GibbsEntropy, @ConfigurationEntropy AS ConfigurationEntropy, @TsallisEntropy AS TsallisEntropy

Figure 2: Results from the Higgs Boson Dataset

…………The value used here for Boltzmann’s Constant with the common Base-2 log is 1 / Log(2), or about 1.4427, which is the constant expressed in bits; it should not be confused with the SI value of 1.380649 × 10^-23 joules per kelvin familiar from physics, which would only be needed if the results had to be expressed in thermodynamic units. Of course, the results in Figure 2 would only make sense if the particular columns measured thermodynamic quantities, and I’m not familiar enough with the semantic meaning of the Higgs Boson data to say if that’s the case. The same is true of the Tsallis Entropy (which may be identical to the Havrda-Charvat Entropy)[10] derived in the first SELECT after the INSERT, which is the thermodynamic counterpart of the Rényi Entropy we covered a few articles ago. The two formulas are almost identical, except that the Tsallis takes an @EntropicIndexParameter similar to the @AlphaParameter used in the Rényi, which also cannot equal 1 because it would lead to a divide-by-zero error. As it approaches this forbidden value, however, it nears the Gibbs Entropy, just as the Rényi Entropy approaches Shannon’s measure. At 0 it is equivalent to the DISTINCT COUNT minus 1. A wide range of other values have proven useful in multifarious physics problems, as well as “sensitivity to initial conditions and entropy production at the edge of chaos,”[11] which could prove useful when I tackle chaos theory at the tail end of this series. The formula for the Configuration Entropy[12] is exactly the same as that for the Gibbs Entropy, except that it measures possible configurations rather than the energy of a system; for our immediate purposes in translating this for DIY data mining purposes, the number of configurations and energy are both equivalent to the information content.
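The behavior of the Tsallis Entropy at different index values is easy to check on a toy example. The following is a minimal sketch rather than a definitive implementation: the three proportions and the list of index values are made up for illustration, the table variables @Proportions and @Indexes are hypothetical names, and the natural log is used so that the comparison column is the Gibbs Entropy in nats with a constant of 1.

```sql
-- Hypothetical three-value distribution; the proportions are illustrative only
DECLARE @Proportions table (Proportion float NOT NULL);
INSERT INTO @Proportions (Proportion) VALUES (0.5), (0.25), (0.25);

-- Candidate entropic index values; 1 itself is left out to avoid dividing by zero
DECLARE @Indexes table (EntropicIndex float NOT NULL);
INSERT INTO @Indexes (EntropicIndex)
VALUES (0), (0.5), (0.9), (0.99), (0.999), (1.001), (2);

-- One Tsallis result per index value, next to the Gibbs Entropy in nats
SELECT I.EntropicIndex,
    (1 / (I.EntropicIndex - 1)) * (1 - SUM(POWER(P.Proportion, I.EntropicIndex))) AS TsallisEntropy,
    -1 * SUM(P.Proportion * LOG(P.Proportion)) AS GibbsEntropyInNats
FROM @Indexes AS I
    CROSS JOIN @Proportions AS P
GROUP BY I.EntropicIndex
ORDER BY I.EntropicIndex;
```

The row for an index of 0 returns 2, i.e. the count of three distinct values minus 1, while the rows on either side of 1 should close in on the Gibbs column as the index gets nearer to the forbidden value.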

…………Although all of the above measures are derived from thermodynamics, they have obvious similarities and uses in information theory and by extension, related fields like data mining. Some additional concepts from thermodynamics might prove good matches for certain SQL Server use cases in the future as well, although I have yet to see any formulas posted that could be translated into T-SQL at this point. The concept of Entropy of Mixing[13] involves measuring the change in entropy from the merger of two closed thermodynamic systems, separated by some sort of impermeable barrier. This might prove incredibly useful if it could be ported to information theory (assuming it hasn’t been already), since it could be used to gauge the change in entropy that occurs from the merger of two datasets or partitions. This might include appending new data in temporal order, partitioning sets by value or topic and incorporating new samples – all of which could be useful in ascertaining whether the possible information gain is worth the performance costs, in advance of the merge operation. In the same vein, a “perfect crystal, at absolute zero” has zero entropy and therefore no more potential for internally-generated change, but it may be in one of several microstates at the point it is frozen in stasis; this is what is measured by Residual Entropy[14], which might be transferable to information theory by quantifying the states an information system can exhibit once its potential for internally-generated change is gone.
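To make the idea of an informational Entropy of Mixing a little more concrete, here is one speculative way it could be sketched in T-SQL. It is not an established formula: it simply assumes that the quantity of interest is the Shannon Entropy of the merged set minus the row-weighted average of the entropies of the separate partitions. The @Partitions table variable, its columns and its eight rows are all hypothetical.

```sql
-- Two hypothetical partitions of one column; the values are illustrative only
DECLARE @Partitions table (PartitionID int NOT NULL, Value int NOT NULL);
INSERT INTO @Partitions (PartitionID, Value)
VALUES (1, 10), (1, 10), (1, 20), (1, 20),
       (2, 30), (2, 30), (2, 30), (2, 40);

DECLARE @TotalCount float = (SELECT COUNT(*) FROM @Partitions);

WITH PartitionCounts AS
(SELECT PartitionID, COUNT(*) AS PartitionCount
 FROM @Partitions
 GROUP BY PartitionID),
ValueCounts AS
(SELECT PartitionID, Value, COUNT(*) AS ValueCount
 FROM @Partitions
 GROUP BY PartitionID, Value),
PartitionEntropies AS -- Shannon Entropy in bits of each partition on its own
(SELECT V.PartitionID, MAX(P.PartitionCount) AS PartitionCount,
    -1 * SUM((V.ValueCount / CAST(P.PartitionCount AS float))
        * LOG(V.ValueCount / CAST(P.PartitionCount AS float), 2)) AS Entropy
 FROM ValueCounts AS V
    INNER JOIN PartitionCounts AS P ON P.PartitionID = V.PartitionID
 GROUP BY V.PartitionID),
MergedEntropy AS -- Shannon Entropy in bits after the barrier is removed
(SELECT -1 * SUM((ValueCount / @TotalCount) * LOG(ValueCount / @TotalCount, 2)) AS Entropy
 FROM (SELECT Value, COUNT(*) AS ValueCount
       FROM @Partitions
       GROUP BY Value) AS T1)
SELECT M.Entropy AS MergedEntropy, W.WeightedPartitionEntropy,
    M.Entropy - W.WeightedPartitionEntropy AS EntropyChangeFromMerge
FROM MergedEntropy AS M
    CROSS JOIN (SELECT SUM((PartitionCount / @TotalCount) * Entropy) AS WeightedPartitionEntropy
                FROM PartitionEntropies) AS W;
```

Because the two sample partitions are the same size and share no values, the change from the merge should come out to roughly 1 bit, which is just the uncertainty about which partition a row came from; partitions with overlapping values would yield a smaller change.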

Adapting Other Thermodynamic Entropies

One of the most promising new information metrics is information enthalpy, which is an analogue of an older thermodynamic concept. The original version performed calculations on measures of energy, pressure and volume, in which the first “term can be interpreted as the energy required to create the system” and the second as the energy that would be required to “make room” for the system if the pressure of the environment remained constant.[15] It measures “the amount of heat content used or released in a system at constant pressure,” but is usually expressed as the change in enthalpy as measured in joules.[16] This could be adapted to quantify the information needed to give rise to a particular data structure, or to assess changes in it as information is added or removed. The thermodynamic version can be further partitioned into the enthalpy due to such specific processes as hydrogenation, combustion, atomization, hydration, vaporization and the like; perhaps information enthalpy can be partitioned as well, except by processes specific to information and data science. Information enthalpy is the subject of at least one patent for a cybersecurity algorithm to prevent data leaks, depending on security rating.[17] At least two other research papers use information enthalpy for data modeling with neural nets, which is a subject nearer to my heart.[18] A more recent journal article uses it in measuring artificial intelligence[19], which is also directly relevant to data mining and information theory.
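For reference, the thermodynamic definition that the two quoted “terms” refer to is short enough to state. The symbols are the standard ones from physics texts rather than anything defined in this series: $U$ is the internal energy, $p$ the pressure and $V$ the volume. Note that the $H$ used for enthalpy here has nothing to do with the $H$ commonly used for Shannon’s Entropy.

```latex
\begin{align}
H &= U + pV \\
\Delta H &= \Delta U + p \, \Delta V && \text{(at constant pressure)}
\end{align}
```

Any informational analogue would need stand-ins for all three quantities, which is presumably where most of the effort in adapting it would go.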

…………Loop Entropy is specific not just to thermodynamics, but to specific materials within it, since it represents “the entropy lost upon bringing together two residues of a polymer within a prescribed distance.”[20] Nevertheless, it might be possible to develop a similar measure in information theory to quantify the entropy lost in the mixing between two probability spaces. Conformational Entropy is even more specific to chemistry, but it might be helpful to develop similar measures to quantify the structures an information system can take on, as this entropy does with molecular arrangements. Incidentally, the formula is identical to that of the Gibbs Entropy, except that the Gas Constant version of the Boltzmann Constant is used.[21] Likewise, Entropic Force is a concept mainly used to quantify phenomena related to Brownian motion, crystallization, gravity and “hydrophobic force.”[22] In recent years, however, it has been linked together with “entropy-like measures of complexity,” intelligence and the knowledge discovery principle of Occam’s Razor, which brings it within the purview of information theory.[23] I surmise that a similar concept could be put to good use in measuring entropic forces in data science, for such constructive purposes as estimating data loss and contamination, or even ascertaining tendencies of data to form clusters of a particular type. It is also possible that a more thorough relationship between thermodynamic free energy, Free Entropy and “free probability” can be fleshed out.[24] These are related to the “internal energy of a system minus the amount of energy that cannot be used to perform work,” but it might be useful outside of the thermodynamic context, if we can further partition measures like Shannon’s Entropy to strain out information that is likewise unavailable for our purposes. 
I cannot think of possible adaptations for more distant thermodynamic concepts like Entropic Explosion and Free Entropy off the top of my head, but that does not mean they are not possible or would not be useful in data science.

Esoteric Quantum Entropies

These measures of physical entropy are of course prerequisites for bleeding-edge topics like quantum mechanics, where the strange properties of matter below the atomic level of ordinary particle physics introduce mind-bending complications like entanglement. This gives rise to a whole host of quantum-specific entropies which I won’t provide code for because they’re too far afield for my intended audience, the SQL Server community, where making Schrödinger’s Cat reappear like a rabbit out of a hat isn’t usually required in C.V.s. These metrics will only prove useful if we can find objects and data that can be modeled like quantum states, which might actually be feasible in the future. Thermodynamics served as a precursor to information theory, which in turn provided a foundation for the newborn field of quantum information theory, so further cross-pollination between these subject areas can be expected. This could arise out of information geometry, another bleeding-edge field that borrows concepts like Riemann manifolds and multidimensional hyperspace and applies them to information theory; I hope that by the end of this wide-ranging series I’ll have acquired the skills to at least explore the topic, but the day when I can write tutorials on it is far off. Another interesting instance of cross-pollination is occurring as we speak between spin glasses, i.e. disordered magnets which are deeply interesting to physicists for their phase transitions, and neural nets, which apparently share analogous properties with them. It is nonetheless far more likely that some of the simpler thermodynamic concepts like enthalpy will be adapted for use in information theory (and by extension, data mining) before any of the quantum information measures I’ll quickly dispense with here.

…………The Von Neumann Entropy is one of the brands of entropy most frequently mentioned in books on quantum mechanics, like Ingemar Bengtsson’s Geometry of Quantum States: An Introduction to Quantum Entanglement[25] and Vlatko Vedral’s Decoding Reality: The Universe as Quantum Information[26], but it merely extends the information theory and thermodynamic concepts we’ve already discussed, using the kind of high-level math employed in quantum physics. For example, “the Gibbs entropy translates over almost unchanged into the world of quantum physics to give the von Neumann entropy”[27] except that we plug in a stochastic density matrix for the probabilities and use a Trace operation on it instead of a summation operator. Moreover, when Von Neumann’s Entropy is calculated via the eigenvalues of that density matrix, it reduces to Shannon’s version.[28] Linear Entropy (or “Impurity”) is likewise in some respects an extension of the concept of entropic mixing to quantum information theory, the field birthed from the union of these two cutting-edge fields.[29]
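Since I won’t be providing code for the quantum measures, the relationships just described are easiest to show as equations. These are the standard textbook definitions rather than anything specific to this series: $\rho$ is the density matrix, $\mathrm{Tr}$ is the Trace operation and $\eta_j$ are the eigenvalues of $\rho$.

```latex
\begin{align}
S(\rho) &= -\mathrm{Tr}(\rho \ln \rho) = -\sum_j \eta_j \ln \eta_j && \text{(Von Neumann Entropy)} \\
S_L(\rho) &= 1 - \mathrm{Tr}(\rho^2) && \text{(Linear Entropy)}
\end{align}
```

The sum over eigenvalues has the same shape as Shannon’s Entropy in nats, with the eigenvalues standing in for the probabilities.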

…………Certain quantum-specific measures, such as the Belavkin-Staszewski Entropy, are obscure enough that it is difficult to find references on them anywhere, even in professional quantum theory and mathematical texts. I became acquainted with these quantum measures a while back while skimming works like Bengtsson’s and Vedral’s, but as you can tell from my citations, I had to rely heavily on Wikipedia to fill in a lot of the blanks (which were quite sizeable, given that I’ve evidently forgotten most of what I learned on the topic as a kid, when I imbibed a lot from my father’s moonlighting as a college physics teacher). Fortunately, I was able to find a Wikipedia article on the Sackur-Tetrode Entropy[30], which was just comprehensible enough to allow me to decipher its purpose. Evidently, it’s used to partition quantum entropies by the types of missing information they quantify, similar to how I used various measures of imprecision, nonspecificity, strife, discord, conflict and the like at the tail of my Implementing Fuzzy Sets in SQL Server series to partition “uncertainty.” In those tutorials I in turn likened uncertainty partitioning to the manner in which variance is partitioned in analysis of variance (ANOVA).

…………It is much more common to find references to measures like Quantum Relative Entropy and Generalized Relative Entropy[31] in works on quantum mechanics, which are just highly specialized, souped-up versions of the Kullback-Leibler Divergence we’ll be tackling later in this series. They’re used to quantify the dissimilarity or indistinguishability of quantum states across Hilbert Spaces, which aren’t exactly everyday use cases for SQL Server DBAs and data miners (yet not entirely irrelevant, given that they’re constructed from inner products). On the other hand, the KL-Divergence they’re derived from is almost as important in information theory as Shannon’s Entropy, so I’ll be writing at length on it once I can set aside room in this series for a really long segment on distance and divergence metrics. It can certainly be put to a wide range of productive uses by SQL Server end users. The same can be said of the conditional and joint information entropies we’ll be tackling in the next article, alongside such ubiquitous measures as information rates. In one sense, they’re a little more complex than the topics we covered so far in this series, since they’re binary relations between probability figures rather than simple unary measures. On the other hand, they’re far simpler and more useful than counterparts like Quantum Mutual Information and Conditional Quantum Entropy. I’m only mentioning these metrics and their quantum kin for the benefit of readers who want to learn more about information theory and don’t want to wade into the literature blind, without any inkling as to what these more esoteric entropies do or how compartmentalized their use cases are. 
When applying information theory outside of quantum mechanics, we don’t need to concern ourselves with such exotic properties as non-separability and oddities like the fact that quantum conditional entropy can be negative, which is equivalent to a measure known as “coherent information.”[32] In contrast, I’ll provide code at the end of this segment of the series for measures like Mutual, Lautum and Shared Information, which indeed have more general-purpose use cases in data mining and knowledge discovery. The same can be said of next week’s article on Conditional and Joint Entropy, which are among the tried-and-true principles of information theory.

[1] This is also justified by such principles as the Data Processing Theorem, which demonstrate that a loss of information occurs at each step in data processing, since no new information can be added. It is actually a more solid proof than its thermodynamic counterpart, since it stems directly from logical and mathematical consistency rather than empirical observations with unknown causes. For a discussion of the Data Processing Theorem, see p. 30, Jones, D.S., 1979, Elementary Information Theory. Oxford University Press: New York.

[2] Boundless Chemistry, 2014, “The Third Law of Thermodynamics and Absolute Energy,” published Nov. 19, 2014 at the Boundless.com web address https://www.boundless.com/chemistry/textbooks/boundless-chemistry-textbook/thermodynamics-17/entropy-124/the-third-law-of-thermodynamics-and-absolute-energy-502-3636/

[3] See the Wikipedia webpage “Heat Death of the Universe” at http://en.wikipedia.org/wiki/Heat_death_of_the_universe

[4] See the Wikipedia page “History of Entropy” at http://en.wikipedia.org/wiki/History_of_entropy

[5] See the Wikipedia article “Boltzmann’s Entropy Formula” at http://en.wikipedia.org/wiki/Boltzmann%27s_entropy_formula

[6] I retrieved this value from the Wikipedia page “Boltzmann’s Constant” at http://en.wikipedia.org/wiki/Boltzmann_constant

[7] See the Wikipedia article “Ludwig Boltzmann” at http://en.wikipedia.org/wiki/Ludwig_Boltzmann

[8] See the Wikipedia page “Ernst Mach” at http://en.wikipedia.org/wiki/Ernst_Mach

[9] I originally downloaded this from the University of California at Irvine’s Machine Learning Repository and converted it into a single table in a sham SQL Server database called DataMiningProjects.

[10] See the Wikipedia article “Talk:Entropy/Archive11” at http://en.wikipedia.org/wiki/Talk%3AEntropy/Archive11

[11] See the Wikipedia webpage “Tsallis Entropy” at http://en.wikipedia.org/wiki/Tsallis_entropy

[12] See the Wikipedia article “Configuration Entropy” at http://en.wikipedia.org/wiki/Configuration_entropy

[13] See the Wikipedia page http://en.wikipedia.org/wiki/Entropy_of_mixing

[14] See the Wikipedia article “Residual Entropy” at http://en.wikipedia.org/wiki/Residual_entropy

[15] See the Wikipedia page “Enthalpy” at http://en.wikipedia.org/wiki/Enthalpy

[16] See the ChemWiki webpage “Enthalpy” at http://chemwiki.ucdavis.edu/Physical_Chemistry/Thermodynamics/State_Functions/Enthalpy

[17] See the Google.com webpage “High Granularity Reactive Measures for Selective Pruning of Information” at http://www.google.com/patents/US8141127

[18] Lin, Jun-Shien and Jang, Shi-Shang, 1998, “Nonlinear Dynamic Artificial Neural Network Modeling Using an Information Theory Based Experimental Design Approach,” pp. 3640-3651 in Industrial and Engineering Chemistry Research, Vol. 37, No. 9. Also see Lin, Jun-Shien; Jang, Shi-Shang; Shieh, Shyan-Shu and Subramaniam, M., 1999, “Generalized Multivariable Dynamic Artificial Neural Network Modeling for Chemical Processes,” pp. 4700-4711 in Industrial and Engineering Chemistry Research, Vol. 38, No. 12.

[19] Benjun, Guo; Peng, Wang; Dongdong, Chen; and Gaoyun, Chen, 2009, “Decide by Information Enthalpy Based on Intelligent Algorithm,” pp. 719-722 in Information Technology and Applications. Vol. 1.

[20] See the Wikipedia article “Loop Entropy” at http://en.wikipedia.org/wiki/Loop_entropy

[21] See the Wikipedia article “Conformational Entropy” at http://en.wikipedia.org/wiki/Conformational_entropy

[22] See the Wikipedia article “Entropic Force” at http://en.wikipedia.org/wiki/Entropic_force

[23] IBID.

[24] See the Wikipedia pages “Free Entropy,” “Thermodynamic Free Energy” and “Free Probability” at http://en.wikipedia.org/wiki/Free_entropy, http://en.wikipedia.org/wiki/Thermodynamic_free_energy and http://en.wikipedia.org/wiki/Free_probability respectively.

[25] Bengtsson, Ingemar, 2008, Geometry of Quantum States: An Introduction to Quantum Entanglement. Cambridge University Press: New York.

[26] Vedral, Vlatko, 2010, Decoding Reality: The Universe as Quantum Information. Oxford University Press: New York

[27] See the Wikipedia article “Entropy (Information Theory)” at http://en.wikipedia.org/wiki/Entropy_(information_theory)

[28] See the Wikipedia article “Von Neumann Entropy” at http://en.wikipedia.org/wiki/Von_Neumann_entropy

[29] See the Wikipedia article “Linear Entropy” at http://en.wikipedia.org/wiki/Linear_entropy

[30] See the Wikipedia webpage “Sackur-Tetrode Equation” at http://en.wikipedia.org/wiki/Sackur%E2%80%93Tetrode_equation

[31] See the Wikipedia articles “Quantum Relative Entropy” and “Generalized Relative Entropy” at http://en.wikipedia.org/wiki/Quantum_relative_entropy and http://en.wikipedia.org/wiki/Generalized_relative_entropy respectively

[32] See the Wikipedia articles “Quantum Mutual Information” and “Conditional Quantum Entropy” at http://en.wikipedia.org/wiki/Quantum_mutual_information and http://en.wikipedia.org/wiki/Conditional_quantum_entropy respectively.