By Steve Bolton

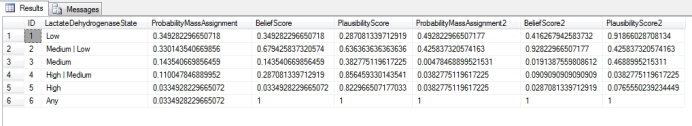

…………To avoid overloading readers with too many concepts at once, I split my discussion of Dempster-Shafer Evidence Theory into two parts, with the bulk of the data modeling aspects and theory occurring in the last article. This time around, I’ll cover how fuzzy measures can be applied to it to quantify such forms of uncertainty as nonspecificity and imprecision (i.e., “fuzziness”) that were introduced in prior articles. Since the Plausibility, Belief and probability mass assignment figures work together to assign degrees of truth, they also introduce the potential for contradictory evidence, which leads to a few other measures of uncertainty: Strife, Discord and Conflict, which aren’t as relevant to possibility distributions and ordinary fuzzy sets. In addition, the probability mass for a universal hypothesis can be interpreted as a form of uncertainty left over after all of the probabilities for the subsets have been partitioned out. For example, in Figure 1, this crude type of uncertainty would be associated with the 0.0334928229665072 value for row 6. For the sake of brevity, I won’t rehash how I derived the ordinal LactateDehydrogenaseState category and the first three fuzzy measures associated with it, since the numbers are identical to those in the last tutorial. For the sake of convenience I added three columns with nearly identical names and calculated some sham data for them (based on the frequencies of some CreatineKinase data in the original table) so that we have some Conflicting data to work with. Ordinarily, such comparisons would be made using joins against an external view or table with its own separate ProbabilityMassAssignment, BeliefScore and PlausibilityScore columns, or a query that calculated them on the fly.

Figure 1: Some Sample Evidence Theory Data from the Last Tutorial

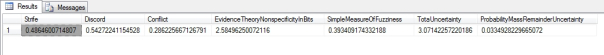

…………In Figure 2, I translated some of the most popular formulas for evidence theory measures into T-SQL, such as Strife, Discord and Conflict.[1] For these, I used a simpler version of the equations that performed calculations on differences in set values rather than fuzzy intersections and unions.[2] Despite the fact that the two measures differ only in the divisor and the order of the difference operation, Discord is apparently not used as often as Strife on the grounds that it does not capture as much information. These subtle differences occur only in the alternate measures of Conflict they’re based on; since the one related to Strife is more important, I only included that one in Figure 3, where it’s represented by a score of 0.286225667126791. Versions of Strife and Discord are available for possibility distributions, but I omitted these because the fact that possibility theory is “almost conflict-free” signifies that they’re of “negligible” benefit.[3] I also coded the evidence theory version of nonspecificity and essentially rehashed the crude fuzziness measure I used in Implementing Fuzzy Sets in SQL Server, Part 2: Measuring Imprecision with Fuzzy Complements, except with the YagerComplement parameter arbitrarily set to 0.55 and the probability mass used in place of the membership function results. Both of these are unary fuzzy measures that apply only to the set defined by the first three float columns, whereas Strife, Discord and Conflict are binary measures that are calculated on the differences between the two sets encoded in the Health.DuchennesEvidenceTheoryTable. We can also add the Strife and nonspecificity figures together to derive a measure of total uncertainty, as the final query in Figure 2 does, plus interpret the height of a fuzzy set (i.e., the count of records with the maximum MembershipScore of 1) as a sort of credibility measure.
Keep in mind that I’m not only a novice at this, but am consulting mathematical resources that generally don’t have the kind of step-by-step examples with sample data used in the literature on statistics. This means I wasn’t able to validate my implementation of these formulas well at all, so it would be wise to recheck them before putting them to use in a production environment where accuracy is an issue. I’m most concerned by the possibility that I may be incorrectly aggregating the individual focal elements for evidentiary fuzziness and nonspecificity, each of which should be weighted by the corresponding probability mass.
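For anyone rechecking the T-SQL in Figure 2 against the math, the set-difference forms of these measures are usually written along the following lines. Treat this as a sketch rather than a transcription: the notation is assumed for illustration, with $m$ standing for the probability mass assignment, $\mathcal{F}$ for its set of focal elements, $|A|$ for the cardinality of a focal element and $w$ for the Yager parameter, and it should be verified against the pages cited in the footnotes.

```latex
% Strife and Discord differ only in the divisor and the order of the set difference
\begin{align*}
S(m) &= -\sum_{A \in \mathcal{F}} m(A) \log_2 \left[ 1 - \sum_{B \in \mathcal{F}} m(B) \frac{|A - B|}{|A|} \right] \\
D(m) &= -\sum_{A \in \mathcal{F}} m(A) \log_2 \left[ 1 - \sum_{B \in \mathcal{F}} m(B) \frac{|B - A|}{|B|} \right] \\
% Nonspecificity: the Hartley function of each focal element, weighted by its mass
N(m) &= \sum_{A \in \mathcal{F}} m(A) \log_2 |A| \\
% Yager complement with parameter w (0.55 in Figure 2)
c_w(a) &= \left( 1 - a^{w} \right)^{1/w}
\end{align*}
```

In both Strife and Discord the inner sum, which plays the role of the Conflict term, depends on the focal element $A$ of the outer sum, and in the nonspecificity formula each focal element contributes the logarithm of its own cardinality. Those are the two places where an aggregation mistake of the kind mentioned above would be most likely to show up.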

Figure 2: Several Evidence Theory Measures Implemented in T-SQL

DECLARE @Conflict float, @ConflictForDiscord float

-- CONFLICT: the version used by Strife divides by BeliefScore; the version used by Discord divides by BeliefScore2

-- NOTE: the first CASE tests BeliefScore2 for zero but divides by BeliefScore, so a zero BeliefScore is left unguarded

SELECT @Conflict = SUM(CASE WHEN BeliefScore2 = 0 THEN ProbabilityMassAssignment2 * ABS(BeliefScore - BeliefScore2)

ELSE ProbabilityMassAssignment2 * ABS(BeliefScore - BeliefScore2) / ABS(CAST(BeliefScore AS float))

END),

@ConflictForDiscord = SUM(CASE WHEN BeliefScore2 = 0 THEN ProbabilityMassAssignment2 * ABS(BeliefScore2 - BeliefScore)

ELSE ProbabilityMassAssignment2 * ABS(BeliefScore2 - BeliefScore) / ABS(CAST(BeliefScore2 AS float))

END)

FROM Health.DuchennesEvidenceTheoryTable

-- FUZZINESS

DECLARE @Count bigint, @SimpleMeasureOfFuzziness float

DECLARE @OmegaParameter float = 0.55 -- arbitrarily chosen Yager complement parameter

SELECT @Count = COUNT(*)

FROM Health.DuchennesEvidenceTheoryTable

-- average absolute distance between each probability mass and its Yager complement

SELECT @SimpleMeasureOfFuzziness = SUM(ABS(ProbabilityMassAssignment - YagerComplement)) / @Count

FROM (SELECT ProbabilityMassAssignment, POWER(1 - POWER(ProbabilityMassAssignment, @OmegaParameter), 1 / CAST(@OmegaParameter AS float)) AS YagerComplement

FROM Health.DuchennesEvidenceTheoryTable) AS T1

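-- NONSPECIFICITY

-- NOTE: LOG(@Count, 2) applies the table's total row count to every row rather than a per-row set cardinality

DECLARE @EvidenceTheoryNonspecificityInBits float

SELECT @EvidenceTheoryNonspecificityInBits = SUM(ProbabilityMassAssignment * LOG(@Count, 2))

FROM Health.DuchennesEvidenceTheoryTable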

-- FINAL RESULTS: total uncertainty is Strife plus nonspecificity; the 'Any' row holds the leftover probability mass

SELECT Strife, Discord, Conflict, EvidenceTheoryNonspecificityInBits, SimpleMeasureOfFuzziness, Strife + EvidenceTheoryNonspecificityInBits

AS TotalUncertainty, (SELECT ProbabilityMassAssignment

FROM Health.DuchennesEvidenceTheoryTable

WHERE LactateDehydrogenaseState = 'Any') AS ProbabilityMassRemainderUncertainty

FROM (SELECT -1 * SUM(ProbabilityMassAssignment * LOG((1 - @Conflict), 2)) AS Strife,

-1 * SUM(ProbabilityMassAssignment * LOG((1 - @ConflictForDiscord), 2)) AS Discord, @Conflict AS Conflict, @EvidenceTheoryNonspecificityInBits AS EvidenceTheoryNonspecificityInBits, @SimpleMeasureOfFuzziness AS SimpleMeasureOfFuzziness

FROM Health.DuchennesEvidenceTheoryTable) AS T1

Figure 3: Sample Results from the Duchennes Evidence Theory Table

…………The nonspecificity measure in evidence theory is merely the Hartley function weighted by the probability mass assignments. On paper, the equation for Strife ought to appear awfully familiar to data miners who have worked with Shannon’s Entropy before. The evidence theory version incorporates some additional terms so that a comparison can be performed over two sets, but the negative summation operator and logarithm operation are immediately reminiscent of its more famous forerunner, which measures probabilistic uncertainty due to a lack of stochastic information. Evidentiary nonspecificity trumps entropy in many situations because it is measured linearly, therefore avoiding computationally difficult nonlinear math (my paraphrase), but sometimes doesn’t produce unique solutions, in which case Klir and Yuan recommend using measures of Strife to quantify uncertainty.[4] Nevertheless, when interpreted correctly and used judiciously, they can be used in conjunction with axioms like the principles of minimum uncertainty, maximum uncertainty[5] and uncertainty invariance[6] to perform ampliative reasoning[7] and draw useful inferences about datasets:

“Once uncertainty (and information) measures become well justified, they can very effectively be utilized for managing uncertainty and the associated information. For example, they can be utilized for extrapolating evidence, assessing the strength of relationship between given groups of variables, assessing the influence of given input variables on given output variables, measuring the loss of information when a system is simplified, and the like. In many problem situations, the relevant measures of uncertainty are applicable only in their conditional or relative terms.”[8]

…………That often requires some really deep thinking in order to avoid various pitfalls in analysis; in essence, they all involve honing the use of pure reason, which I now see the benefits of, but could definitely use a lot more practice in. For example, Dempster-Shafer Theory has well-known issues with counter-intuitive results at the highest and lowest Conflict values, which may require mental discipline to ferret out; perhaps high values of Strife can act as a safeguard against this, by alerting analysts that inspection for these logical conundrums is warranted.[9] Critics like Judea Pearl have apparently elaborated at length on various other fallacies that can arise from “confusing probabilities of truth with probabilities of provability,” all of which need to be taken into account when modeling evidentiary uncertainty.[10] Keep in mind as well that Belief or Plausibility scores of 1 do not necessarily signify total certainty; as we saw a few articles ago, Possibility values of 1 only signify a state of complete surprise when an event does not occur rather than assurance that it will happen.
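The counter-intuitive behavior at high Conflict is usually illustrated with an example attributed to Lotfi Zadeh. The sketch below assumes Dempster's rule of combination in its standard form; the two mass assignments $m_1$ and $m_2$ over the hypotheses $a$, $b$ and $c$ are made-up illustrative values, not figures from the Duchennes data, and $K$ is the normalization constant of the combination rule, which is a different quantity from the Conflict column calculated in Figure 2.

```latex
\begin{align*}
K &= \sum_{B \cap C = \emptyset} m_1(B)\, m_2(C), \qquad
m_{1,2}(A) = \frac{1}{1 - K} \sum_{B \cap C = A} m_1(B)\, m_2(C) \quad (A \neq \emptyset) \\[4pt]
% two sources that almost completely disagree (illustrative values)
m_1(\{a\}) &= 0.99, \quad m_1(\{b\}) = 0.01, \qquad m_2(\{c\}) = 0.99, \quad m_2(\{b\}) = 0.01 \\[4pt]
% mass assigned to pairs of hypotheses with an empty intersection
K &= (0.99)(0.99) + (0.99)(0.01) + (0.01)(0.99) = 0.9999 \\[4pt]
% only the pairing of b with b survives normalization
m_{1,2}(\{b\}) &= \frac{(0.01)(0.01)}{1 - 0.9999} = \frac{0.0001}{0.0001} = 1
\end{align*}
```

Both sources regard $b$ as almost impossible, yet the combined evidence assigns it the full mass of 1, because every other pairing is in total conflict and is normalized away.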

…………The issue with evidence theory is even deeper in a certain sense, especially if those figures are derived from subjective ratings. Nevertheless, even perfectly objective and accurate observations can be quibbled with, for reasons that basically boil down to Bill W.’s adage “Denial ain’t just a river in Egypt.” One of the banes of the human condition is our propensity to squeeze our eyes shut to evidence we don’t like, which can only be overcome by honesty, not education; more schooling may even make things worse, by enabling people to lie to themselves with bigger words than before. In that case, they may end up getting tenure for developing entirely preposterous philosophies, like solipsism, or doubting their own existence. As G.K. Chesterton warned more than a century ago, nothing can stop a man from piling doubt on top of doubt, perhaps by reaching for such desperate excuses as “perhaps all we know is just a dream.” He provided a litmus test for recognizing bad chains of logic, which can indeed go on forever, but can be judged on whether or not they tend to drive men into lunatic asylums. Cutting-edge topics like fuzzy sets, chaos theory and information theory inevitably give birth to extravagant half-baked philosophies, born of precisely the kind of obsession and intellectual intoxication that Chesterton speaks of in his chapter on The Suicide of Thought[11] and his colleague Arnold Lunn addresses in The Flight from Reason.[12] These are powerful techniques, but only when kept within the proper bounds; problems like “definition drift” and subtle, unwitting changes in the meanings assigned to fuzzy measures can easily lead to unwarranted, fallacious or even self-deceptive conclusions. As we shall see in the next series, information theory overlays some of its own interpretability issues on top of this, which means we must tread even more carefully when integrating it with evidence theory.

…………Fuzzy measures and information theory mesh so well together that George J. Klir and Bo Yuan included an entire chapter on the topic of “Uncertainty-Based Information” in my favorite resource for fuzzy formulas, Fuzzy Sets and Fuzzy Logic: Theory and Applications.[13] The field of uncertainty management is still in its infancy, but scholars now recognize that uncertainty is often “the result of some information deficiency. Information…may be incomplete, imprecise, fragmentary, not fully reliable, vague, contradictory, or deficient in some other way. In general, these various information deficiencies may result in different types of uncertainty.”[14] Information in this context is interpreted as uncertainty reduction[15]; the more information we have, the more certain we become. Methods to ascertain how the reduction of fuzziness (i.e., how imprecise the boundaries of fuzzy sets are) contributes to information gain were not fully worked out two decades ago when most of the literature I consulted for this series was written, and I have the impression that still holds today. When we adapt the Hartley function to measure the nonspecificity of evidence, possibility distributions and fuzzy sets, all we’re doing is taking a count of how many states a dataset might take on. With Shannon’s Entropy, we’re performing a related calculation that incorporates the probabilities associated with those states. Given their status as the foundations of information theory, I’ll kick off my long-delayed tutorial series Information Measurement with SQL Server by discussing both from different vantage points. I hope to tackle a whole smorgasbord of various ways in which the amount of information associated with a dataset can be quantified, thereby helping to cut down further on uncertainty.
Algorithmic complexity, the Lyapunov exponent, various measures of order and semantic information metrics can all be used to partition uncertainty and preserve the information content of our data, so that organizations can make more accurate decisions in the tangible world of the here and now.
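The difference between counting states and weighting them by probability can be seen in a small worked example. The symbols $H$ (Hartley function) and $H_S$ (Shannon's Entropy) and the four-state distributions below are assumptions made purely for illustration.

```latex
\begin{align*}
H(A) &= \log_2 |A| \\
H_S(p) &= -\sum_{x} p(x) \log_2 p(x) \\[4pt]
% four possible states, counted without regard to probability
|A| = 4: \quad H(A) &= \log_2 4 = 2 \text{ bits} \\
% four equally likely states
p = (0.25, 0.25, 0.25, 0.25): \quad H_S(p) &= 4 \times 0.25 \times 2 = 2 \text{ bits} \\
% four states with skewed probabilities
p = (0.5, 0.25, 0.125, 0.125): \quad H_S(p) &= 0.5(1) + 0.25(2) + 2 \times 0.125(3) = 1.75 \text{ bits}
\end{align*}
```

With equal probabilities the two measures agree, while the skewed distribution carries less uncertainty than the raw count of states suggests.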

[1] pp. 259, 262-263, 267, 269, Klir, George J. and Yuan, Bo, 1995, Fuzzy Sets and Fuzzy Logic: Theory and Applications. Prentice Hall: Upper Saddle River, N.J. The formulas are widely available, but I adopted this as my go-to resource whenever the math got thick.

[2] IBID., p. 263.

[3] IBID., pp. 262-265.

[4] IBID., p. 274.

[5] IBID., pp. 271-272. Klir and Yuan’s explanation of how to use maximum uncertainty for ampliative reasoning almost sounds like a sort of reverse parsimony: “use all information available, but make sure that no additional information is unwittingly added…the principle requires that conclusions resulting from any ampliative inference maximize the relevant uncertainty within the constraints representing the premises. The principle guarantees that our ignorance be fully recognized when we try to enlarge our claims beyond the given premises and, at the same time, that all information contained in the premises be fully utilized. In other words, the principle guarantees that our conclusions are maximally noncommittal with regard to information not contained in the premises.”

[6] IBID., p. 275.

[7] IBID., p. 271.

[8] IBID., p. 269.

[9] See the Wikipedia webpage “Dempster Shafer Theory” at http://en.wikipedia.org/wiki/Dempster%E2%80%93Shafer_theory

[10] IBID.

[11] See Chesterton, G.K., 2001, Orthodoxy. Image Books: London. Available online at the G. K. Chesterton’s Works on the Web address http://www.cse.dmu.ac.uk/~mward/gkc/books/

[12] Lunn, Arnold, 1931, The Flight from Reason. Longmans, Green and Co.: New York.

[13] pp. 245-276, Klir and Yuan.

[14] IBID.

[15] IBID., p. 245.