At the time of writing, Azure Data Factory (ADF) doesn't provide out-of-the-box functionality to send emails. However, we can use a Web activity in ADF to call a custom Azure Logic App, which then delivers the email notification.

Full credit for this post goes to Joost van Rossum from the Netherlands and his resource http://microsoft-bitools.blogspot.com/2018/03/add-email-notification-in-azure-data.html, which has step-by-step instructions for creating ADF notification Logic Apps in Azure. I have only slightly modified his solution to support the existing ADF pipeline from my previous blog post: http://datanrg.blogspot.com/2018/11/system-variables-in-azure-data-factory.html

Case A - Email Notification for a failed Copy Data task (explicitly on a Failed event)

Case B - Email Notification for a logged event with Failed Status (the logging information contains data for both Failed and Succeeded activities, but I want to generate email notifications only for the Failed events, at the very end of my pipeline).

So, first, I need to create a Logic App in the Azure portal with two steps:

1) Trigger: When an HTTP POST request is received

2) Action: Send Email (Gmail)

The trigger uses this JSON schema for the request body:

    {
      "properties": {
        "DataFactoryName": {
          "type": "string"
        },
        "PipelineName": {
          "type": "string"
        },
        "ErrorMessage": {
          "type": "string"
        },
        "EmailTo": {
          "type": "string"
        }
      },
      "type": "object"
    }

The Gmail Send Email action then uses the elements of this schema to build the message.

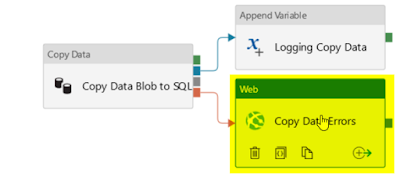
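Before wiring the Logic App into ADF, it can help to sanity-check a request body against the trigger schema. Below is a minimal Python sketch that mirrors the schema's field names and types. The function `check_payload` and the sample values are hypothetical, and the check is deliberately stricter than the schema: it also reports missing fields, which the schema above does not mark as required.

```python
import json

# Field names and types copied from the Logic App trigger schema.
TRIGGER_FIELDS = {
    "DataFactoryName": str,
    "PipelineName": str,
    "ErrorMessage": str,
    "EmailTo": str,
}


def check_payload(raw_body: str) -> list:
    """Return a list of problems found in a notification request body.

    An empty list means the body is a JSON object whose four fields are
    all present and are strings. The trigger schema does not mark any
    field as required; flagging missing fields is an extra, stricter
    check made for this sketch.
    """
    try:
        payload = json.loads(raw_body)
    except json.JSONDecodeError as exc:
        return [f"body is not valid JSON: {exc}"]
    if not isinstance(payload, dict):
        return ["body must be a JSON object"]

    problems = []
    for name, expected_type in TRIGGER_FIELDS.items():
        if name not in payload:
            problems.append(f"missing field: {name}")
        elif not isinstance(payload[name], expected_type):
            problems.append(f"field {name} should be a string")
    return problems


# Hypothetical sample values, for illustration only.
sample = json.dumps({
    "DataFactoryName": "my-data-factory",
    "PipelineName": "Load data into SQL",
    "ErrorMessage": "Invalid object name",
    "EmailTo": "someone@example.com",
})
print(check_payload(sample))  # []
```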

So, to support my "Case A - Email Notification for a failed Copy Data task":

1) I open the "Load data into SQL" container in my pipeline, add a Web activity, and connect it to the "Copy Data Blob to SQL" activity on failure.

2) Then I copy the HTTP POST URL from the Logic App trigger in the Azure portal and paste it into the URL field of the Web activity.

3) I add a new header with the following values:

Name: Content-Type

Value: application/json

4) Finally, I define the body of the POST request, filling in every data element of the JSON schema defined earlier in the Logic App:

    {
      "DataFactoryName": "@{pipeline().DataFactory}",
      "PipelineName": "@{pipeline().Pipeline}",
      "ErrorMessage": "@{activity('Copy Data Blob to SQL').Error.message}",
      "EmailTo": "@{pipeline().parameters.var_email_address}"
    }

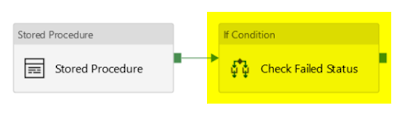
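To exercise the Logic App outside ADF, the request that the Web activity sends in steps 2 to 4 can be approximated with Python's standard library. This is a sketch under assumptions: `build_notification_request`, the example URL, and all argument values are hypothetical, and the function only builds the request rather than reproducing how ADF sends it internally.

```python
import json
import urllib.request


def build_notification_request(logic_app_url: str, data_factory: str,
                               pipeline: str, error_message: str,
                               email_to: str) -> urllib.request.Request:
    """Build (but do not send) a POST request like the Web activity's."""
    body = {
        "DataFactoryName": data_factory,
        "PipelineName": pipeline,
        "ErrorMessage": error_message,
        "EmailTo": email_to,
    }
    return urllib.request.Request(
        url=logic_app_url,
        # json.dumps escapes quotes and newlines inside the error message.
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Hypothetical values; the real URL is the one copied from the Logic App trigger.
req = build_notification_request(
    "https://example.invalid/logic-app-trigger",
    "my-data-factory",
    "Load data into SQL",
    'Invalid object name "dbo.Missing"',
    "someone@example.com",
)
# urllib stores header names via str.capitalize(), hence "Content-type".
print(req.get_method(), req.get_header("Content-type"))  # POST application/json
# Calling urllib.request.urlopen(req) would actually send it.
```

Building the body as a dictionary and serializing it with `json.dumps` keeps the payload valid JSON even when the error text contains quotes.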

To support my "Case B - Email Notification for a logged event with Failed Status":

1) I open the "Logging to SQL" container in my pipeline and add another container, an If Condition activity, that checks whether the logged event has a Failed status using the following expression: @equals(split(item(),'|')[12], 'Failed')

2) Then I add two activities inside this If Condition activity to support email notifications: a Set Variable activity and a Web activity.

3) As in Case A, I copy the HTTP POST URL from the Logic App in the Azure portal, paste it into the Web activity, and add the same Content-Type header.

4) Then I define the body of the POST request, filling in every data element of the JSON schema defined in the Logic App:

    {
      "DataFactoryName": "@{pipeline().DataFactory}",
      "PipelineName": "@{pipeline().Pipeline}",
      "ErrorMessage": "@{variables('var_activity_error_message')}",
      "EmailTo": "@{pipeline().parameters.var_email_address}"
    }

Here, the "var_activity_error_message" variable is set in the "Set Variable" activity with the expression @split(item(),'|')[4].
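The Case B logic boils down to splitting each logged row on '|' and reading two positions. Here is a rough Python analogue, assuming each item() is a pipe-delimited string with at least 13 fields (ADF array indexes are zero-based, as in Python). The function names and the sample row are hypothetical; only positions 4 and 12 come from the expressions above.

```python
# Zero-based positions taken from the ADF expressions; the rest of the
# logged row's layout is not shown in this post.
STATUS_INDEX = 12  # split(item(),'|')[12]
ERROR_INDEX = 4    # split(item(),'|')[4]


def is_failed(logged_row: str) -> bool:
    """Rough analogue of @equals(split(item(),'|')[12], 'Failed')."""
    return logged_row.split("|")[STATUS_INDEX] == "Failed"


def activity_error_message(logged_row: str) -> str:
    """Rough analogue of @split(item(),'|')[4] in the Set Variable activity."""
    return logged_row.split("|")[ERROR_INDEX]


# Hypothetical 13-field row: only positions 4 and 12 matter here.
fields = [f"field{i}" for i in range(13)]
fields[ERROR_INDEX] = "Invalid object name 'dbo.Missing'"
fields[STATUS_INDEX] = "Failed"
row = "|".join(fields)

if is_failed(row):
    print(activity_error_message(row))  # Invalid object name 'dbo.Missing'
```

Because the row is split on '|', an error message that itself contains a pipe character would shift every later field, including the status at position 12; the ADF expressions share that limitation.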

As a result, after running the pipeline, I get the expected results and, if something fails, an email notification. (To test the failure notification, I temporarily changed the name of the table that my Copy Data task uses as its destination.)

It's working!