In my previous post, I showed how we generated the datasets for our Azure Data Factory pipelines. In this post, I’ll show the BimlScript for the pipelines themselves. A pipeline defines the activities, identifies the input and output datasets for those activities, and sets an execution schedule. We created several pipelines with copy activities to copy data to Azure Data Lake Store.

We generated one pipeline per schedule and load type:

- Hourly – Full

- Hourly – Incremental

- Daily – Full

- Daily – Incremental

We also generated some one-time load pipelines for DR/new environment setup.

The first code file below is the template for the pipeline. You can see code nuggets for the data we receive from the generator file and for conditional logic we implemented. The result is one copy activity per source table within the appropriate pipeline.
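To make the shape of that template a little more concrete, here is a rough Python sketch of the same idea (not our actual BimlScript). The function name, the dataset naming convention, and the exact dictionary layout are my assumptions for illustration; the layout is only loosely modeled on an ADF copy activity, so don't treat it as the real schema.

```python
def copy_activity(schema, table, columns, predicate=None):
    """Build one copy activity (as a plain dict) for a single source table.

    All names here are hypothetical; the dict only approximates the
    shape of an ADF copy activity definition.
    """
    query = f"SELECT {columns} FROM [{schema}].[{table}]"
    # Conditional logic: only incremental loads carry a predicate.
    if predicate:
        query += f" WHERE {predicate}"
    return {
        "name": f"Copy_{schema}_{table}",
        "type": "Copy",
        # Assumed dataset naming convention, for illustration only.
        "inputs": [{"name": f"DS_SQL_{schema}_{table}"}],
        "outputs": [{"name": f"DS_ADLS_{schema}_{table}"}],
        "typeProperties": {
            "source": {"type": "SqlSource", "sqlReaderQuery": query},
            "sink": {"type": "AzureDataLakeStoreSink"},
        },
    }


if __name__ == "__main__":
    full = copy_activity("dbo", "Customer", "[Id], [Name]")
    print(full["typeProperties"]["source"]["sqlReaderQuery"])
```

The point is simply that the template takes a handful of values per table and stamps out one activity, with the predicate included only when one is supplied.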

In the second code file below, lines 104 to 119 generate the pipelines. We read in the necessary data from the Excel file:

- Schema name

- Table name

- Columns list

- Incremental predicate

Sidenote: We wrote a quick T-SQL statement (not shown) to generate the columns list. This could have been done in our BimlScript, but it was something we changed after the fact to accommodate the limitations of PolyBase (Dear Microsoft: Please fix). SQL was quicker and easier for us, but if I were to do this again, I would add it to our BimlScript.

We needed to replace new lines and double quotes in our data before we could read it in from the data lake. You can get around this issue by using ORC files rather than delimited text files, but ORC files aren’t human-readable, and we felt readability was important for adoption of the data lake with the client on this project. They were already jumping in with several new technologies, and we didn’t want to add anything else to the stack. So our select statements list out the fields and replace the unwanted characters in the string fields.
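Since the T-SQL isn't shown, here is a small Python sketch of what building such a columns list might look like. It is an illustration under assumptions, not the statement we used: the set of string types, the choice to swap line breaks for a space and drop double quotes, and the function names are all hypothetical.

```python
# Assumed set of SQL Server string types that need cleaning.
STRING_TYPES = {"char", "varchar", "nchar", "nvarchar"}

# (search, replacement) pairs as T-SQL literals. The replacement
# values are an assumption; pick whatever suits your data.
REPLACEMENTS = [
    ("CHAR(13)", "' '"),  # carriage return -> space
    ("CHAR(10)", "' '"),  # line feed -> space
    ("'\"'", "''"),       # double quote -> removed
]


def clean_column(name, data_type):
    """Return the select-list expression for one column."""
    col = f"[{name}]"
    if data_type.lower() not in STRING_TYPES:
        return col  # non-string columns pass through untouched
    expr = col
    for search, replacement in REPLACEMENTS:
        expr = f"REPLACE({expr}, {search}, {replacement})"
    return f"{expr} AS {col}"


def columns_list(columns):
    """Join (name, data_type) pairs into a comma-separated select list."""
    return ", ".join(clean_column(name, dtype) for name, dtype in columns)


if __name__ == "__main__":
    print(columns_list([("Id", "int"), ("Name", "varchar")]))
```

Only string columns get wrapped in the nested `REPLACE` calls; everything else is listed as-is, which keeps the generated select statements as short as possible.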

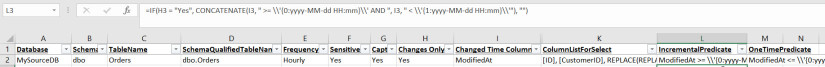

Our Excel file looks like this.

Columns B, C, L, and M are populated by Excel formulas. This is the file that is read in by the BimlScript in the code below.

In our generator file (which is the same file that was used to generate the datasets), we use the CallBimlScript function to call the pipeline template file and pass along the required properties (table, schema, frequency, scope, columns list, predicate).
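If you haven't seen this generator-plus-template pattern before, the following Python sketch mimics the flow: loop over the metadata rows, hand each row's properties to a template function, and collect the results into one pipeline per frequency and scope. This is an analogy only. `render_copy_activity` is a hypothetical stand-in for the template file, `ROWS` stands in for the Excel data, and none of these names come from our BimlScript.

```python
from collections import defaultdict

# Hypothetical rows standing in for the Excel metadata file.
ROWS = [
    {"schema": "dbo", "table": "Customer", "frequency": "Daily",
     "scope": "Full", "columns": "[Id], [Name]", "predicate": ""},
    {"schema": "dbo", "table": "Orders", "frequency": "Hourly",
     "scope": "Incremental", "columns": "[Id], [ModifiedDate]",
     "predicate": "[ModifiedDate] > '2017-01-01'"},
    {"schema": "dbo", "table": "OrderLines", "frequency": "Hourly",
     "scope": "Incremental", "columns": "[Id], [OrderId]",
     "predicate": "[ModifiedDate] > '2017-01-01'"},
]


def render_copy_activity(table, schema, frequency, scope, columns, predicate):
    """Stand-in for the template: returns one activity for one table.

    frequency is accepted only to mirror the property list passed to the
    template; this sketch does not use it inside the activity.
    """
    use_predicate = scope == "Incremental" and predicate
    where = f" WHERE {predicate}" if use_predicate else ""
    return {
        "name": f"Copy_{schema}_{table}",
        "query": f"SELECT {columns} FROM [{schema}].[{table}]{where}",
    }


def generate_pipelines(rows):
    """Group activities into one pipeline per frequency and scope."""
    pipelines = defaultdict(list)
    for row in rows:
        key = f"{row['frequency']}_{row['scope']}"
        pipelines[key].append(render_copy_activity(**row))
    return dict(pipelines)


if __name__ == "__main__":
    for name, activities in generate_pipelines(ROWS).items():
        print(name, [a["name"] for a in activities])
```

Keeping the template separate from the loop is what makes regeneration cheap: change the template once, rerun the generator, and every pipeline picks up the change.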

The great thing about Biml is that I can use it as much or as little as I feel is helpful. That T-SQL statement to get column lists could have been Biml, but it didn’t have to be. The client can maintain and enhance these pipelines with or without Biml as they see fit. There is no vendor lock-in here. Just as with Biml-generated SSIS projects, there is no difference between a hand-written ADF solution and a Biml-generated ADF solution, except that the Biml-generated solution is probably more consistent.

And have I mentioned the time savings? There is a reason why Varigence gives out shirts that say “It’s Monday and I’m done for the week.”

We made changes and regenerated our pipelines a few times, which would have taken hours without Biml. With Biml, it was no big deal.

Thanks to Levi for letting me share some of his code, and for working with me on this project!