Continuation from the previous 114 parts, the whole series can be found at http://www.nikoport.com/columnstore/.

This blog post is dedicated to the Bulk Load API and the sudden changes that might take place and affect it during the loading process into Columnstore Indexes. I have already blogged about the “Magic Number” 102.400 rows, which separates the loading into the compressed Row Groups (fast) and the into the Delta-Stores (slow) at Columnstore Indexes – part 30 (“Bulk Load API Magic Number”), but there is more to add on this topic and this blog post shall focus on this topic.

At the PASS Summit 2017, during my Columnstore Indexes precon, I have shown the demo on this topic and after giving some exclusivity (1 week to be precise), I am starting to blog on some of the new stuff, I was showing.

Memory Pressure

To test the memory pressure and the Bulk Load API, I will use the good old free ContosoRetailDW database, that can be freely downloaded at Microsoft’s page.

On my SQL Server 2016 (will work the same way on 2014 and 2017 versions of SQL Server), I will restore the database from the C:\Install folder and store Data & Log files in the C:\Data folder, plus I shall set up the Clustered Columnstore Index on the FactOnlineSales table with the following script:

USE [master]

if exists (select * from sys.databases where name = 'ContosoRetailDW')

alter database ContosoRetailDW

set SINGLE_USER WITH ROLLBACK IMMEDIATE;

RESTORE DATABASE [ContosoRetailDW]

FROM DISK = N'C:\Install\ContosoRetailDW.bak' WITH FILE = 1,

MOVE N'ContosoRetailDW2.0' TO N'C:\Data\ContosoRetailDW.mdf',

MOVE N'ContosoRetailDW2.0_log' TO N'C:\Data\ContosoRetailDW.ldf',

NOUNLOAD, STATS = 5;

alter database ContosoRetailDW

set MULTI_USER;

GO

use ContosoRetailDW;

EXEC dbo.sp_changedbowner @loginame = N'sa', @map = false

GO

GO

ALTER DATABASE [ContosoRetailDW] SET COMPATIBILITY_LEVEL = 130

GO

ALTER DATABASE [ContosoRetailDW] MODIFY FILE ( NAME = N'ContosoRetailDW2.0', SIZE = 2000000KB , FILEGROWTH = 128000KB )

GO

ALTER DATABASE [ContosoRetailDW] MODIFY FILE ( NAME = N'ContosoRetailDW2.0_log', SIZE = 400000KB , FILEGROWTH = 256000KB )

GO

use ContosoRetailDW;

ALTER TABLE dbo.[FactOnlineSales] DROP CONSTRAINT [PK_FactOnlineSales_SalesKey];

create clustered columnstore Index PK_FactOnlineSales

on dbo.FactOnlineSales;Let us set up 4 staging tables, that will be the copies of the FactOnlineSales table and that will be used for loading 1 Million rows into each one of them, for the demo purposes.

DROP TABLE IF EXISTS [dbo].[FactOnlineSales_Stage1]; DROP TABLE IF EXISTS [dbo].[FactOnlineSales_Stage2]; DROP TABLE IF EXISTS [dbo].[FactOnlineSales_Stage3]; DROP TABLE IF EXISTS [dbo].[FactOnlineSales_Stage4]; GO CREATE TABLE [dbo].[FactOnlineSales_Stage1]( [OnlineSalesKey] [int] NOT NULL, [DateKey] [datetime] NOT NULL, [StoreKey] [int] NOT NULL, [ProductKey] [int] NOT NULL, [PromotionKey] [int] NOT NULL, [CurrencyKey] [int] NOT NULL, [CustomerKey] [int] NOT NULL, [SalesOrderNumber] [nvarchar](20) NOT NULL, [SalesOrderLineNumber] [int] NULL, [SalesQuantity] [int] NOT NULL, [SalesAmount] [money] NOT NULL, [ReturnQuantity] [int] NOT NULL, [ReturnAmount] [money] NULL, [DiscountQuantity] [int] NULL, [DiscountAmount] [money] NULL, [TotalCost] [numeric](18, 3) NOT NULL, [UnitCost] [money] NULL, [UnitPrice] [money] NULL, [ETLLoadID] [int] NULL, [LoadDate] [datetime] NULL, [UpdateDate] [datetime] NULL, INDEX CCI_FactOnlineSales_Stage1 CLUSTERED COLUMNSTORE ) CREATE TABLE [dbo].[FactOnlineSales_Stage2]( [OnlineSalesKey] [int] NOT NULL, [DateKey] [datetime] NOT NULL, [StoreKey] [int] NOT NULL, [ProductKey] [int] NOT NULL, [PromotionKey] [int] NOT NULL, [CurrencyKey] [int] NOT NULL, [CustomerKey] [int] NOT NULL, [SalesOrderNumber] [nvarchar](20) NOT NULL, [SalesOrderLineNumber] [int] NULL, [SalesQuantity] [int] NOT NULL, [SalesAmount] [money] NOT NULL, [ReturnQuantity] [int] NOT NULL, [ReturnAmount] [money] NULL, [DiscountQuantity] [int] NULL, [DiscountAmount] [money] NULL, [TotalCost] [numeric](18, 3) NOT NULL, [UnitCost] [money] NULL, [UnitPrice] [money] NULL, [ETLLoadID] [int] NULL, [LoadDate] [datetime] NULL, [UpdateDate] [datetime] NULL, INDEX CCI_FactOnlineSales_Stage2 CLUSTERED COLUMNSTORE ); CREATE TABLE [dbo].[FactOnlineSales_Stage3]( [OnlineSalesKey] [int] NOT NULL, [DateKey] [datetime] NOT NULL, [StoreKey] [int] NOT NULL, [ProductKey] [int] NOT NULL, [PromotionKey] [int] NOT NULL, [CurrencyKey] [int] NOT NULL, [CustomerKey] [int] NOT NULL, [SalesOrderNumber] [nvarchar](20) NOT NULL, [SalesOrderLineNumber] [int] NULL, [SalesQuantity] [int] NOT NULL, [SalesAmount] [money] NOT NULL, [ReturnQuantity] [int] NOT NULL, [ReturnAmount] [money] NULL, [DiscountQuantity] [int] NULL, [DiscountAmount] [money] NULL, [TotalCost] [numeric](18, 3) NOT NULL, [UnitCost] [money] NULL, [UnitPrice] [money] NULL, [ETLLoadID] [int] NULL, [LoadDate] [datetime] NULL, [UpdateDate] [datetime] NULL, INDEX CCI_FactOnlineSales_Stage3 CLUSTERED COLUMNSTORE ); CREATE TABLE [dbo].[FactOnlineSales_Stage4]( [OnlineSalesKey] [int] NOT NULL, [DateKey] [datetime] NOT NULL, [StoreKey] [int] NOT NULL, [ProductKey] [int] NOT NULL, [PromotionKey] [int] NOT NULL, [CurrencyKey] [int] NOT NULL, [CustomerKey] [int] NOT NULL, [SalesOrderNumber] [nvarchar](20) NOT NULL, [SalesOrderLineNumber] [int] NULL, [SalesQuantity] [int] NOT NULL, [SalesAmount] [money] NOT NULL, [ReturnQuantity] [int] NOT NULL, [ReturnAmount] [money] NULL, [DiscountQuantity] [int] NULL, [DiscountAmount] [money] NULL, [TotalCost] [numeric](18, 3) NOT NULL, [UnitCost] [money] NULL, [UnitPrice] [money] NULL, [ETLLoadID] [int] NULL, [LoadDate] [datetime] NULL, [UpdateDate] [datetime] NULL, INDEX CCI_FactOnlineSales_Stage4 CLUSTERED COLUMNSTORE )

Next step will be to configure the amount of memory that will be used for the initial test, and I will set it to 6 GB of RAM (WARNING: do not forget to reset it back to your original value!!!):

EXEC sys.sp_configure N'max server memory (MB)', N'6000'; GO RECONFIGURE WITH OVERRIDE GO

To monitor the current memory grants, I shall use rather primitive query against the sys.dm_exec_query_memory_grants DMV, getting the necessary basic results:

SELECT session_id, dop, request_time, grant_time, requested_memory_kb, granted_memory_kb, required_memory_kb, used_memory_kb, max_used_memory_kb, query_cost FROM sys.dm_exec_query_memory_grants

For checking on the final results, I shall use the CISL (free and open-source library) function dbo.cstore_GetRowGroupsDetails and notice, that because I want to observe all 4 tables, I am not specifying the @preciseSearch parameter:

EXEC dbo.cstore_GetRowGroupsDetails @tableName = 'FactOnlineSales_Stage';

Now these are the scripts that I shall fire in 4 independent windows from my SQL Server Management Studio:

use ContosoRetailDW; GO SET STATISTICS TIME, IO OFF TRUNCATE TABLE [dbo].[FactOnlineSales_Stage1] ; INSERT INTO [dbo].[FactOnlineSales_Stage1] SELECT TOP 1000000 OnlineSalesKey, DateKey, StoreKey, ProductKey, PromotionKey, CurrencyKey, CustomerKey, SalesOrderNumber, SalesOrderLineNumber, SalesQuantity, SalesAmount, ReturnQuantity, ReturnAmount, DiscountQuantity, DiscountAmount, TotalCost, UnitCost, UnitPrice, ETLLoadID, LoadDate, UpdateDate FROM dbo.FactOnlineSales ORDER BY OnlineSalesKey

use ContosoRetailDW; GO SET STATISTICS TIME, IO OFF TRUNCATE TABLE [dbo].[FactOnlineSales_Stage2]; INSERT INTO [dbo].[FactOnlineSales_Stage2] SELECT TOP 1000000 OnlineSalesKey, DateKey, StoreKey, ProductKey, PromotionKey, CurrencyKey, CustomerKey, SalesOrderNumber, SalesOrderLineNumber, SalesQuantity, SalesAmount, ReturnQuantity, ReturnAmount, DiscountQuantity, DiscountAmount, TotalCost, UnitCost, UnitPrice, ETLLoadID, LoadDate, UpdateDate FROM dbo.FactOnlineSales ORDER BY OnlineSalesKey;

use ContosoRetailDW; GO SET STATISTICS TIME, IO OFF TRUNCATE TABLE [dbo].[FactOnlineSales_Stage3]; INSERT INTO [dbo].[FactOnlineSales_Stage3] SELECT TOP 1000000 OnlineSalesKey, DateKey, StoreKey, ProductKey, PromotionKey, CurrencyKey, CustomerKey, SalesOrderNumber, SalesOrderLineNumber, SalesQuantity, SalesAmount, ReturnQuantity, ReturnAmount, DiscountQuantity, DiscountAmount, TotalCost, UnitCost, UnitPrice, ETLLoadID, LoadDate, UpdateDate FROM dbo.FactOnlineSales ORDER BY OnlineSalesKey

use ContosoRetailDW; GO SET STATISTICS TIME, IO OFF TRUNCATE TABLE [dbo].[FactOnlineSales_Stage4]; INSERT INTO [dbo].[FactOnlineSales_Stage4] SELECT TOP 1000000 OnlineSalesKey, DateKey, StoreKey, ProductKey, PromotionKey, CurrencyKey, CustomerKey, SalesOrderNumber, SalesOrderLineNumber, SalesQuantity, SalesAmount, ReturnQuantity, ReturnAmount, DiscountQuantity, DiscountAmount, TotalCost, UnitCost, UnitPrice, ETLLoadID, LoadDate, UpdateDate FROM dbo.FactOnlineSales ORDER BY OnlineSalesKey

Let’s start the show, and execute the loading scripts, while monitoring the memory grants and checking on the final results of the type of the Row Groups that will land into our Columnstore Tables.

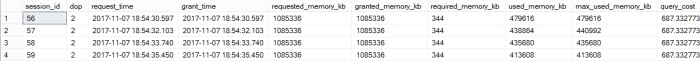

Let’s check on the Memory grants:

SELECT session_id, dop, request_time, grant_time, requested_memory_kb, granted_memory_kb, required_memory_kb, used_memory_kb, max_used_memory_kb, query_cost FROM sys.dm_exec_query_memory_grants

You can observe the memory grants in around 1 GB for each of the 4 processes, without any delay or problems.

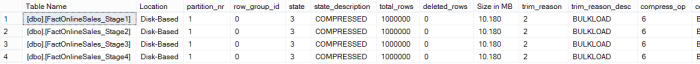

Now, let’s check on the final results, after the processes have finished:

EXEC dbo.cstore_GetRowGroupsDetails @tableName = 'FactOnlineSales_Stage';

It looks gorgeous – all Row Groups are perfect, loaded with Bulk Load API the only type of Row Groups that we have are the compressed Row Groups !

Let’s put some memory pressure on this server, and for that purpose I will lower the total amount of memory to 1.6 GB (WARNING: do not forget to reset it back to your original value!!!):

EXEC sys.sp_configure N'max server memory (MB)', N'1600' GO RECONFIGURE WITH OVERRIDE GO

Now, let us re-execute the 4 threads in a fast way, canceling the first 2 processes, and letting the 3rd one and the 4th threads to finish.

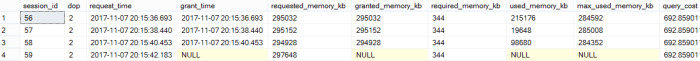

Let’s monitor the execution for the first 30 seconds, invoking the following query against the sys.dm_exec_query_memory_grants a couple of times:

SELECT session_id, dop, request_time, grant_time, requested_memory_kb, granted_memory_kb, required_memory_kb, used_memory_kb, max_used_memory_kb, query_cost FROM sys.dm_exec_query_memory_grants

As you can see on the picture above, the initial grant was not successful, as the 4th process keep on waiting on it, and because there is no free memory available in the system, it will not receive the so much required & desired memory grant to run the insertion process successfully with the Bulk Load API.

After waiting for the 25 seconds (notice the difference between the request_time and grant_time is exactly 25 seconds), the engine decides to grant some minimum amount of memory anyway, allowing the process to carry on, without being cancelled, but the penalisation is very heavy – the inserts will not go into the compressed row groups, but into the Delta-Stores, making this operation not-minimally-logged and in other words, painfully slow and inefficient.

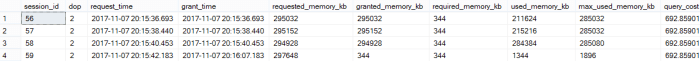

To confirm the final results, let’s check on the Row Groups of our tables, given that we have canceled the inserts into the 2 first tables, we expect 1 row group for the [dbo].[FactOnlineSales_Stage3] table and 1 row group for the [dbo].[FactOnlineSales_Stage4] table, corresponding to the 3rd and 4th threads of data loading:

EXEC dbo.cstore_GetRowGroupsDetails @tableName = 'FactOnlineSales_Stage';

As per the picture above, you can see that the 3rd table has received a compressed Row Group (it had no problems with the memory grant), but the 4th table has an open Delta-Store (because after waiting for 25 seconds for the desired memory grant, it has yielded to the Delta-Store slow data insertion).

That’s beautiful … or is it ?  And if you really want to get impressed, then insert 10 Million Rows under these circumstances, but do it before the lunch, so that you can be back on time before the insertion process into Delta-Store comes to an end.

And if you really want to get impressed, then insert 10 Million Rows under these circumstances, but do it before the lunch, so that you can be back on time before the insertion process into Delta-Store comes to an end.

In the end, do not forget to reset your MAX SERVER MEMORY after all the changes back (in my case to 26GB)

EXEC sys.sp_configure N'max server memory (MB)', N'26000' GO RECONFIGURE WITH OVERRIDE GO

Dictionary Pressure

No matter how ugly the dictionary pressure is, it will not fall back into using the Delta-Store, but it will produce you very small compressed Row Groups if needed, and in my tests I have easily managed to get 6.000 rows per Row Group from the 1 Million Rows insert (yes, it is easy to build an evil dictionary pressure, just by putting a column CHAR(800) and by casting Row_Number as VARCHAR(10) together with REPLICATE(‘C’,600) for the insertion into it.

The Bulk Load API seems to be suffering in worse way from the Memory Pressure, than from the dictionary pressure, though you can solve the memory pressure most of the time, while there are no tools to solve Dictionary Pressure so far.

Final Thoughts

No matter how ugly it may look on the first sight, this is a good solution, in my books. Without canceling your transaction with almost no memory available, the engine finds a way of allowing the data to get into your Columnstore table. In the vast majority of the situations the default functionality is simply brilliant and is exactly what is needed. By lowering the impact on the memory, when the system is already under memory pressure, this operation allows the server to keep on serving smelling transaction while keeping possible Bulk Load attempt alive.

This also should teach everyone a very important lesson – do not start 1000 parallel threads loading data into your tables, unless your have 24 TB of RAM, a blazing fast storage array, and guaranteeing that your threads consume very few memory, and by guaranteeing I mean Resource Governor.

Just because you can, does not mean you should to do it … well, unless you want to push it to the boundaries, and discover interesting stuff.

to be continued with Columnstore Indexes – part 116 (“Partitioning Specifics”)