Chances are you have extra information in the buffer pool for a bad query and it’s dragging down your PLE, causing SQL Server to run slower because it’s reading more from disk. Although this approach is taking a 180 from my post Fixing Page Life Expectancy it has the same effect, with that post focusing on fixing your worst queries and this one focused on fixing your most misused indexes. One approach doesn’t replace the other, it’s more like burning the candle at both ends, except that you end up with a better running database instead of getting burnt out.

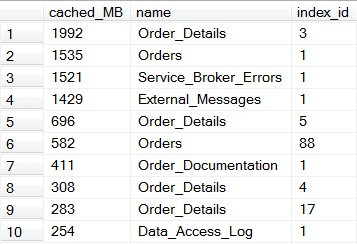

With this approach we start with what’s in the cache. You’ll see the same types of issues in almost any database, and this just happens to be a production database I’m going through today.

The results were found with this query:

SELECT count(1)/128 AS cached_MB , name , index_id FROM sys.dm_os_buffer_descriptors AS bd with (NOLOCK) INNER JOIN ( SELECT name = OBJECT_SCHEMA_NAME(object_id) + '.' + object_name(object_id) --name = 'dbo.' + cast(object_id as varchar(100)) , index_id , allocation_unit_id FROM sys.allocation_units AS au with (NOLOCK) INNER JOIN sys.partitions AS p with (NOLOCK) ON au.container_id = p.hobt_id AND (au.type = 1 OR au.type = 3) UNION ALL SELECT name = OBJECT_SCHEMA_NAME(object_id) + '.' + object_name(object_id) --name = 'dbo.' + cast(object_id as varchar(100)) , index_id , allocation_unit_id FROM sys.allocation_units AS au with (NOLOCK) INNER JOIN sys.partitions AS p with (NOLOCK) ON au.container_id = p.partition_id AND au.type = 2 ) AS obj ON bd.allocation_unit_id = obj.allocation_unit_id WHERE database_id = db_id() GROUP BY name, index_id HAVING Count(*) > 128 ORDER BY 1 DESC;

Service Broker Errors Table

First, there is an errors table here with Service_Broker_Errors, and that should never be in the top 10. What are we doing wrong? The index ID of 1 tells me right away that this is a clustered index, the fact that the table is at least 1.5 GB tells me that we’re probably not purging old data, and 1.5 GB in memory on this table is hinting that we’re probably doing a clustered index scan.

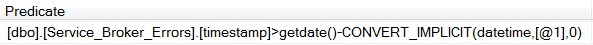

I’ll start by looking at the proc cache to see what’s going on. There’s only one execution plan that used that index and it is, in fact, doing a clustered index scan as I expected.

Predicates or Seek Predicates

In an execution plan you’ll have a seek predicate and just a plain old predicate. The seek predicate is what you were able to do taking advantage of the index being in order, and the predicate is what you had to scan for.

This case is easy because we’re only searching by a single column, but others could have both a seek predicate and a predicate. For instance, if I had an index on my customers table with the key columns Active, First_Name, Last_Name then searched where Active = 1 and Last_Name = ‘Hood’ then it will show up as an index seek with a seek predicate of Active = 1 and a predicate of Last_Name = ‘Hood’. Anyways, lets get back on topic with the issue of my Service_Broker_Errors table…

Now this sounds like a reasonable query looking for errors. I’m looking for the errors that occurred in the last so many days. The CONVERT_IMPLICIT(datetime,[@1],0) shows me that someone typed this the lazy way of GetDate()-1, which isn’t as efficient as DateAdd(Day, -1, GetDate()), but you’re getting me off topic again.

Fixing a useless index

Looking at the indexes on this table I realize there is only one, and it has the single key column of ID. For uniqueness you can’t do much better than an ID column, but you have to ask yourself if you’ll ever use it to query by. In this case the index has never had a seek against it, only scans. Although there table is rarely queried with only 4 uses in the last 2 months (I limited my historical data for this query), it’s still pulling 1.5 GB into cache for every use. After a couple seconds of shaking my head I start to create a change request to make add TimeStamp in as the first key column in the clustered index.

However, I then remembered that this table is 1.5 GB. Is that right? It’s an error table, so if it’s really 1.5 GB then I should either be cleaning up old data or there are so many problems that there is no reason I should be spending my time tuning. Seeing that it has 0 updates in the last 2 months, I already know it’s old data. To double-check on this I run a simple query, keeping in mind the ID is still the clustered index, to find the oldest record, and discover that we haven’t removed anything from this table in years.

SELECT timestamp FROM Service_Broker_Errors WHERE id = (SELECT Min(ID) FROM Service_Broker_Errors)

So I have to talk to people about how old an error can be before we say we just don’t care. It was determined that we probably don’t care about anything more than a month old. I’m paranoid, it comes with the job title, so I made it three months with my change being this:

DECLARE @ID Int SELECT @ID = MAX(ID) FROM Service_Broker_Errors WHERE TimeStamp < GETDATE()-90 WHILE @@ROWCOUNT > 0 BEGIN DELETE TOP (10000) FROM Service_Broker_Errors WHERE ID <= @ID END IF EXISTS (SELECT * FROM sys.indexes WHERE object_id = OBJECT_ID(N'[dbo].[Service_Broker_Errors]') AND name = N'PK_Service_Broker_Errors') ALTER TABLE [dbo].[Service_Broker_Errors] DROP CONSTRAINT [PK_Service_Broker_Errors] GO ALTER TABLE [dbo].[Service_Broker_Errors] ADD CONSTRAINT [PK_Service_Broker_Errors] PRIMARY KEY CLUSTERED ( [TimeStamp] ASC , [id] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON) ON [PRIMARY] GO

The reason I did it like this is because I don’t want to fool around with the junk values as I drop the clustered index (rebuilding the table) then recreate the clustered index (rebuilding the table) with all of that information in there, then delete it all and wreak havoc on the new index. Sure, the fragmentation at the page level would be fine since I’m deleting in order, but that’s a lot of changes to the b-tree.

Finally, I add these lines to my nightly cleanup job to keep things trimmed up from now on:

DECLARE @BatchSize Int SELECT @BatchSize = 10000 WHILE @@ROWCOUNT > 0 BEGIN DELETE TOP (@BatchSize) FROM Service_Broker_Errors WHERE TimeStamp < GETDATE()-90 END

I know, I just got done saying that GetDate()-90 was less efficient than DateAdd(Day, -90, GetDate()), but it’s an implicit conversion that’s done once per call. I admit this is a bad habit of mine, and any time there’s even a chance of this being called once per row I have to write it differently than I normally do. Nobody’s perfect, and the most important thing is that I’m getting rid of all those records using batches, right?

In Conclusion with Service Broker Errors

Now that all of this is done I took a 1.5 GB table with all of it in cache to a 20 MB table with only 1 MB in cache. Cleaning up the data had more of an impact that my index change, but it’s usually not that easy to clean stuff up. Even if I wasn’t able to clean up the data, the index change alone would have allowed for the cache usage to be decreased by at least 1.4 GB.

On to the next one

That’s not the only issue I’m seeing here, in fact I bet I could take half of these off the top 10 list. However, today I’m picking up low-hanging fruit and moving on to the next task on my list. In this case, External_Messages is the next one that doesn’t look right to me. Once again, it’s a clustered index (index_id = 1) of a table that isn’t part of the primary focus of the database, which is handling orders.

Starting with the same steps I look in the proc cache to see what put this here. Once again I see a single query in cache referencing the table, but this one is different. It’s the typical IF X = @X or @X IS NULL that we’ve all written, and it’s being used as the predicate.

I check the indexes on the table and it has the ID as the only key column of the clustered index, so that’s not an issue. Why isn’t it able to use the clustered index with a seek predicate? After all, I’m rather certain that they almost always, if not always, call this with a parameter where it should be filtered down.

Lets look at the execution plan to make sure. The sniffed parameter value from the first time it was called, as found at the bottom of that statement in the XML version of the execution plan, is, in fact, a non-null value.

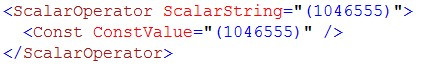

However, SQL Server can’t guarantee that you’re going to pass it a non-null value, and it has to make an execution plan that can account for either possibility. I’ve seen this before, so I know the basic options for a single optional parameter (there are more options, with increasing complexity). I can either add OPTION (RECOMPILE) to the query or I can rewrite it to be two separate queries.

OPTION (RECOMPILE)

Here’s the option I didn’t choose. This will recompile the statement every run, which isn’t too much of a problem because it’s a quick compile on something that only runs a couple times a day. However, it will make it so this doesn’t show up in the proc cache anymore, which I take advantage of quite a bit (for instance, look at the blog post you’re currently reading). Also, it goes against my rule of using hints as a last resort.

That’s not saying you can’t do it this way, just that I didn’t. The reason it works is because SQL Server knows when it makes this execution plan that it will only be used once, so it doesn’t have to account for the possibility of it being a NULL value next run. In fact, it just makes the execution plan with your parameter as a constant value.

Two queries

Here’s the method I decided to go with. Assuming third-normal form doesn’t apply to query text, you should be good to go here. If it’s simple enough then it will be very obvious to anyone editing this at a later point that they need to make any changes in two places.

CREATE PROC NotTheRealProcName @id INT = NULL AS IF @id IS NULL BEGIN SELECT ID , Subject , Message , DateAdded FROM ExternalMessage EM END ELSE BEGIN SELECT ID , Subject , Message , DateAdded FROM ExternalMessage EM WHERE ID = @ID END

This solution isn’t too complex with just a single parameter as it only creates two queries, but if you get just three parameters and try to do this then you’re up to 8 queries. The OPTION (RECOMPILE) method starts to look pretty good as a quick and easy fix before too long. I would still prefer one of the long and drawn out methods, such as getting interim results to a temp table, making it look a little more complex, but getting it to run efficiently with a cached plan.

It worked as I expected, with no one actually getting all of the IDs. I left the code in place to make it possible to get all of them to avoid digging through all of the application code to find where it could return everything then make sure it gets changed there. The result I was looking for was cleaning up the cache, which I got when this dropped from 1.4 GB down to 25 MB in cache.

The End

Overall I was able to drop about 3 GB out of cache, making room for other data while raising the PLE, in two simple changes that were rather easy to track down just by saying “that doesn’t look like it belongs here”. Even the ones that do look like they belong there probably don’t need to have that large of a presence in the cache.

Although I didn’t actually measure the impact that it would have on the end users in these cases because I didn’t start with a performance complaint, I would imagine that SQL Server tends to run faster returning processing 1 row it found quickly instead of reading through a couple million. So, measured or not, I’ll count that as a success as well.

PS. Jonathan Kehayias helped me

My queries looking into the proc cache are slightly modified versions of Jonathan’s work on his blog. Honestly, it didn’t need tweaked or touched for any reason other than the fact that I learn by tinkering. I did help myself by adding in filtering by database because I have a couple servers that have multiple copies of the same database, but, again, it worked great before I touched it, which is something you should expect from Jonathan’s work.

Here’s my altered version:

SET TRANSACTION ISOLATION LEVEL READ UNCOMMITTED; DECLARE @IndexName SYSNAME = '[PK_ExternalMessage]'; DECLARE @DatabaseName SYSNAME; SELECT @DatabaseName = '[' + DB_NAME() + ']'; WITH XMLNAMESPACES (DEFAULT 'http://schemas.microsoft.com/sqlserver/2004/07/showplan') SELECT n.value('(@StatementText)[1]', 'VARCHAR(4000)') AS sql_text, n.query('.'), cp.plan_handle, i.value('(@PhysicalOp)[1]', 'VARCHAR(128)') AS PhysicalOp, i.value('(./IndexScan/@Lookup)[1]', 'VARCHAR(128)') AS IsLookup, i.value('(./IndexScan/Object/@Database)[1]', 'VARCHAR(128)') AS DatabaseName, i.value('(./IndexScan/Object/@Schema)[1]', 'VARCHAR(128)') AS SchemaName, i.value('(./IndexScan/Object/@Table)[1]', 'VARCHAR(128)') AS TableName, i.value('(./IndexScan/Object/@Index)[1]', 'VARCHAR(128)') as IndexName, i.query('.'), STUFF((SELECT DISTINCT ', ' + cg.value('(@Column)[1]', 'VARCHAR(128)') FROM i.nodes('./OutputList/ColumnReference') AS t(cg) FOR XML PATH('')),1,2,'') AS output_columns, STUFF((SELECT DISTINCT ', ' + cg.value('(@Column)[1]', 'VARCHAR(128)') FROM i.nodes('./IndexScan/SeekPredicates/SeekPredicateNew//ColumnReference') AS t(cg) FOR XML PATH('')),1,2,'') AS seek_columns, RIGHT(i.value('(./IndexScan/Predicate/ScalarOperator/@ScalarString)[1]', 'VARCHAR(4000)'), len(i.value('(./IndexScan/Predicate/ScalarOperator/@ScalarString)[1]', 'VARCHAR(4000)')) - charindex('.', i.value('(./IndexScan/Predicate/ScalarOperator/@ScalarString)[1]', 'VARCHAR(4000)'))) as Predicate, cp.usecounts, query_plan FROM ( SELECT plan_handle, query_plan FROM ( SELECT DISTINCT plan_handle FROM sys.dm_exec_query_stats WITH(NOLOCK)) AS qs OUTER APPLY sys.dm_exec_query_plan(qs.plan_handle) tp ) as tab (plan_handle, query_plan) INNER JOIN sys.dm_exec_cached_plans AS cp ON tab.plan_handle = cp.plan_handle CROSS APPLY query_plan.nodes('/ShowPlanXML/BatchSequence/Batch/Statements/*') AS q(n) CROSS APPLY n.nodes('.//RelOp[IndexScan/Object[@Index=sql:variable("@IndexName") and @Database=sql:variable("@DatabaseName")]]' ) as s(i) --WHERE i.value('(./IndexScan/@Lookup)[1]', 'VARCHAR(128)') = 1 OPTION(RECOMPILE, MAXDOP 1);

Related articles

- Fixing Page Life Expectancy (PLE) (simplesqlserver.com)

- Indexing Fundamentals (simplesqlserver.com)

- sys.dm_os_performance_counters Demystified (simplesqlserver.com)

- Indexes – Unused and Duplicates (simplesqlserver.com)

Filed under: Buffer Pool, Indexes, SQL Server, Tuning Tagged: Buffer Pool, cache, Page Life Expectancy, PLE, Query Plan, Tuning

![]()