In my previous post about manually updating statistics, I mentioned that you can specify whether they’re updated using a full scan or by sampling a portion of the data, given either as a percentage of the table size or as a fixed number of rows. You can also leave this unspecified, in which case SQL Server decides for you whether to do a full scan or to sample a certain amount of data.

I thought it would be interesting to look at the sample sizes SQL Server chooses, depending on the amount of data in your table. Note that this applies when you update statistics without specifying how they should be sampled, as below:

    UPDATE STATISTICS dbo.Test _WA_Sys_00000002_3AD6B8E2;

This is also the behaviour you will get when SQL Server updates statistics through the auto-stats mechanism. Because auto-stats may sample at a lower rate than is optimal for a given table and the queries against it, this is another reason you may choose to perform manual statistics updates.
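For comparison, here is a rough sketch of the explicit forms mentioned above, using the same table and statistics name as the example. The 10 percent and 50,000 row figures are arbitrary values picked for illustration, not recommendations.

```sql
-- Full scan: every row in the table is read
UPDATE STATISTICS dbo.Test _WA_Sys_00000002_3AD6B8E2 WITH FULLSCAN;

-- Sample a percentage of the table (10 is an arbitrary illustrative value)
UPDATE STATISTICS dbo.Test _WA_Sys_00000002_3AD6B8E2 WITH SAMPLE 10 PERCENT;

-- Sample a fixed number of rows (50000 is an arbitrary illustrative value)
UPDATE STATISTICS dbo.Test _WA_Sys_00000002_3AD6B8E2 WITH SAMPLE 50000 ROWS;
```

Bear in mind that SQL Server treats the sample figure as approximate, so the number of rows actually sampled may not match exactly what you asked for.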

To test this, I created a table and progressively pumped data in. After inserting each batch of rows, I:

- Ran a stats update, capturing the CPU time taken

- Checked the statistics to see what sample size was used in the update

- Checked the size of the index

Here’s some of the code I used for the test:

    --Drop table if exists
    IF (OBJECT_ID('dbo.Test')) IS NOT NULL
        DROP TABLE dbo.Test;

    --Create table for Testing
    CREATE TABLE dbo.Test(
        Id INT IDENTITY(1,1) CONSTRAINT PK_Test PRIMARY KEY CLUSTERED,
        TextValue VARCHAR(20) NULL
    );

    --Create index on TextValue
    CREATE INDEX IX_Test_TextValue ON dbo.Test(TextValue);

    --Insert a bunch of rows
    INSERT INTO dbo.Test(TextValue)
    SELECT TOP 100000 'blah'
    FROM sys.objects a, sys.objects b, sys.objects c, sys.objects d;

    --Update statistics without specifying how many rows to sample
    SET STATISTICS TIME ON;
    UPDATE STATISTICS dbo.Test IX_Test_TextValue;
    SET STATISTICS TIME OFF;

    --View the statistics
    DBCC SHOW_STATISTICS('dbo.Test', IX_Test_TextValue) WITH STAT_HEADER;

    --Check the size of the index
    SELECT i.name AS IndexName,
        SUM(s.used_page_count) AS Pages
    FROM sys.dm_db_partition_stats AS s
    JOIN sys.indexes AS i
        ON s.[object_id] = i.[object_id]
        AND s.index_id = i.index_id
    WHERE i.name = 'IX_Test_TextValue'
    GROUP BY i.name;
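As an alternative to reading the `DBCC SHOW_STATISTICS` header by eye, the same numbers can be pulled with a query. This is a minimal sketch that assumes your version of SQL Server has the `sys.dm_db_stats_properties` function (it is not present on older builds). The `PercentSampled` column is my own calculation, not something the function returns.

```sql
-- Rows in the table vs rows sampled at the last statistics update
SELECT
    s.name AS StatsName,
    sp.last_updated AS LastUpdated,
    sp.[rows] AS TableRows,
    sp.rows_sampled AS RowsSampled,
    -- Derived value: share of the table that was sampled, as a percentage
    CAST(100.0 * sp.rows_sampled / NULLIF(sp.[rows], 0) AS DECIMAL(5, 2)) AS PercentSampled
FROM sys.stats AS s
CROSS APPLY sys.dm_db_stats_properties(s.[object_id], s.stats_id) AS sp
WHERE s.[object_id] = OBJECT_ID('dbo.Test')
  AND s.name = 'IX_Test_TextValue';
```

Running this after each batch of inserts gives one row per test step, which is easier to collect into a results table than the `DBCC` output.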

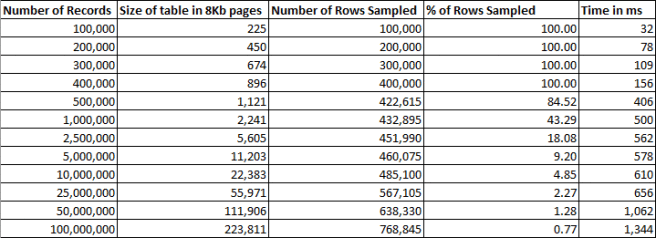

The results of my testing are shown in the below table:

You can see that a full scan is used for the statistics updates up to 400,000 records (896 pages), but that once the table size hits 500,000 records, sampling kicks in. If you look at the number of pages, you will see we now have over 1,000 pages. 1,000 pages is about 8MB of data, which is the threshold at which sampling starts.
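To make the page arithmetic explicit: a SQL Server page is 8KB, so the page count can be converted to a size directly. The sketch below extends the index size query from the test code. The `SizeKB` and `SizeMB` columns are additions of mine for illustration.

```sql
-- Convert the index page count to KB and MB (one page = 8 KB)
-- 896 pages   * 8 KB = 7,168 KB = 7 MB       (full scan in the test above)
-- 1,000 pages * 8 KB = 8,000 KB, about 7.8 MB (roughly where sampling starts)
SELECT
    i.name AS IndexName,
    SUM(s.used_page_count) AS Pages,
    SUM(s.used_page_count) * 8 AS SizeKB,
    CAST(SUM(s.used_page_count) * 8 / 1024.0 AS DECIMAL(10, 2)) AS SizeMB
FROM sys.dm_db_partition_stats AS s
JOIN sys.indexes AS i
    ON s.[object_id] = i.[object_id]
   AND s.index_id = i.index_id
WHERE i.[object_id] = OBJECT_ID('dbo.Test')
  AND i.name = 'IX_Test_TextValue'
GROUP BY i.name;
```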

I wasn’t able to find a nice neat formula to determine the sampling rate based on table size, but there are still some insights to be gained from the above figures. The main one is that even as we double the table size, the number of rows sampled doesn’t go up by much. For instance, going from 500,000 to a million rows, only 10,000 more rows are sampled. This also means that even for pretty large tables, the update isn’t taking long. That’s another reason why it’s worth leaving auto stats updates enabled and running synchronously with queries: they’re generally not going to take that long.
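If you want to confirm how auto stats is configured on a database, something like the following should do it. It is a quick check against `sys.databases` for the current database. A value of 0 in `is_auto_update_stats_async_on` means updates run synchronously with the query that triggers them.

```sql
-- Check the auto-stats settings for the current database
SELECT
    name,
    is_auto_create_stats_on,
    is_auto_update_stats_on,
    is_auto_update_stats_async_on  -- 0 = synchronous, 1 = asynchronous
FROM sys.databases
WHERE name = DB_NAME();
```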

Another insight is that the percentage of rows sampled drops off very quickly. Because the sample size barely increases even when the table size doubles, the percentage sampled almost halves each time.