As a Database Administrator, something that should be part of your database audit is monitoring the growth of files. That means tracking when the log file grows and tracking when the data file(s) grow(s). Many will argue that there is nothing worse than not knowing when a database-related file changed in size except maybe when it rapidly and constantly grows until the disk is filled.

Beyond just trapping when these events occur, the DBA usually also wants to know what caused the file to grow or shrink. This has been a problem that has plagued many a DBA over time. Sure there are plenty of methods to assist in the capture of such things. I even wrote about that quite some time ago here. In that article, I gave the tools to help track when a transaction log grew. Today, I am looking to share a different method on how to trap the executing SQL that causes a file to grow. And unlike that previous method, this method will also help to track when a file decreases in size.

Beyond just trapping when these events occur, the DBA usually also wants to know what caused the file to grow or shrink. This has been a problem that has plagued many a DBA over time. Sure there are plenty of methods to assist in the capture of such things. I even wrote about that quite some time ago here. In that article, I gave the tools to help track when a transaction log grew. Today, I am looking to share a different method on how to trap the executing SQL that causes a file to grow. And unlike that previous method, this method will also help to track when a file decreases in size.

I do want to point out that there is an alternate method to capture the shrink events if you wish to read more. I wrote previously about using the default trace to capture when a shrink occurs within the instance. This can be captured thanks to the default trace. You can read all about that over here. Despite the availability of the information within the default trace, I think the method I share here will be lightweight enough that it won’t hurt to have it running—at least occasionally.

Enter the Database Audit

If you have become accustomed to reading my articles, you will probably surmise that there is some degree of setup that needs to be done before I get to the meat of the matter. That holds true today. It just wouldn’t be right to share a method to do something without a harness to show how to get there. So, let’s get the setup out of the way.

Use master;

Go

IF DB_ID('Sandbox2') IS NULL

BEGIN

EXECUTE ('CREATE DATABASE Sandbox2');

END

GO

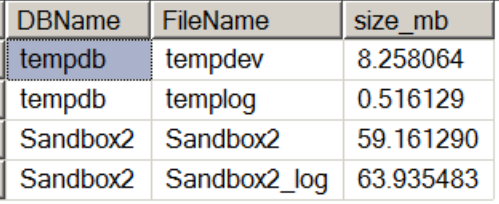

/*We Need The database ID of the newly created Sandbox2 Database - just file it away */SELECT db_id('Sandbox2') as DatabaseIDSince we will be wanting to create something that will track when a file changes in size, we will need to have a baseline of the size of the files for the newly created Sandbox2 database. Let’s capture that with the following query. Small note on this query is that I am including the tempdb related file sizes. You don’t need to include that, but it may be of interest for future testing.

SELECT DB_NAME(database_id) AS DBName,name AS FileName,size/124.0 AS size_mb

FROM master.sys.master_files

WHERE database_id in (2, DB_ID('Sandbox2'));That is all the setup that is needed at this point. We are now ready to implement a tool that can help us monitor these changes. This tool is lightweight and pretty efficient at capturing the info that would be essential for the exceptional DBA to keep on top of the changes occurring in his/her environment. This tool comes to us in the form of an extended event. Let’s do a little checking into what is available before we dive into the actual XE session itself.

First, when you are curious, if there is an extended event that may be sufficient for your needs, you should check the event store to see what is there. We can do that via a query similar to the following.

SELECT c.object_name as EventName,p.name as PackageName,o.description as EventDescription

FROM sys.dm_xe_objects o

INNER JOIN sys.dm_xe_object_columns c

ON o.name = c.object_name

and o.package_guid = c.object_package_guid

INNER JOIN sys.dm_xe_packages p

on o.package_guid = p.guid

WHERE object_type='event'

AND c.name = 'channel'

AND (c.object_name like '{529e71a51265b45c1f7f96357a70e3116ccf61cf0135f67b2aa293699de35170}file_size{529e71a51265b45c1f7f96357a70e3116ccf61cf0135f67b2aa293699de35170}'

or c.object_name like '{529e71a51265b45c1f7f96357a70e3116ccf61cf0135f67b2aa293699de35170}growth{529e71a51265b45c1f7f96357a70e3116ccf61cf0135f67b2aa293699de35170}')

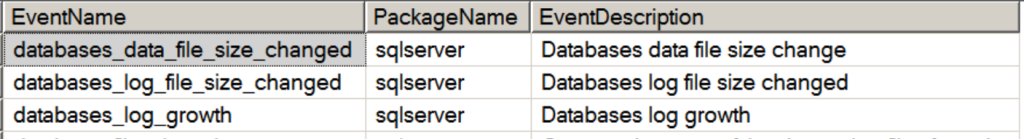

Order By o.package_guid;This query may return something along the lines of what we see in the following image:

From that list, we can immediately see that we have some options to help us try and capture what is happening with our databases when our back is turned. From here, we can query to find what kind of data is captured with each of these events. To do that, we simply need to run a query such as the following query:

/* Let's take a look at the columns available for each of the events picked out */ SELECT * FROM sys.dm_xe_object_columns WHERE object_name = 'databases_data_file_size_changed' AND column_type != 'readonly'; SELECT * FROM sys.dm_xe_object_columns WHERE object_name = 'databases_log_file_size_changed' AND column_type != 'readonly'; SELECT * FROM sys.dm_xe_object_columns WHERE object_name = 'databases_log_growth' AND column_type != 'readonly';

Looking at the available data points in each of these sessions can prove promising. It is indeed promising enough that we can now proceed with the creation of an XE session.

CREATE EVENT SESSION [TrackDBFileChange] ON SERVER

ADD EVENT sqlserver.databases_data_file_size_changed(

ACTION(sqlserver.session_id,sqlserver.database_id,sqlserver.client_hostname,

sqlserver.sql_text)

)

,ADD EVENT sqlserver.databases_log_file_size_changed(

ACTION(sqlserver.session_id,sqlserver.database_id,sqlserver.client_hostname,

sqlserver.sql_text)

)

ADD TARGET package0.asynchronous_file_target(

SET filename='C:\XE\DBFileSizeChange.xel',max_file_size = 5,max_rollover_files = 4

,metadatafile='C:\XE\DBFileSizeChange.xem') --if the path does not exist, a nasty error will be thrown

,

ADD TARGET package0.ring_buffer-- Store events in the ring buffer target

(SET max_memory = 4096)

WITH (MAX_MEMORY = 4MB, EVENT_RETENTION_MODE = ALLOW_SINGLE_EVENT_LOSS, TRACK_CAUSALITY = ON, MAX_DISPATCH_LATENCY = 1 SECONDS,startup_state = ON)

GO

ALTER EVENT SESSION TrackDBFileChange

ON SERVER

STATE = START;

GOI decided to just focus on the two events that included file_size_changed in the name of the event. You can also see that I chose to send this to two targets. You would be fine to just send this to an asynchronous file target. Just bear in mind that you do need to specify a path for the file_target that does exist or an error will be thrown. I have also specified that this session will restart on server startup and then I turned the session to the started state.

Does it work?

That is a really good question. Now that we have a test database and a session to trap events, all we need is to figure out how to test it. We would want to do a few different things to test—like grow and shrink a file in some way. And that is exactly what we are going to do at this point.

First test, since we have a really small empty database, is to try and force the database to grow by inserting some data. We will do that with this next query.

SELECT TOP 100000

SomeID = IDENTITY(INT,1,1),

SomeInt = ABS(CHECKSUM(NEWID())){529e71a51265b45c1f7f96357a70e3116ccf61cf0135f67b2aa293699de35170}50000+1,

SomeLetters2 = CHAR(ABS(CHECKSUM(NEWID())){529e71a51265b45c1f7f96357a70e3116ccf61cf0135f67b2aa293699de35170}26+65)

+ CHAR(ABS(CHECKSUM(NEWID())){529e71a51265b45c1f7f96357a70e3116ccf61cf0135f67b2aa293699de35170}26+65),

SomeMoney = CAST(ABS(CHECKSUM(NEWID())){529e71a51265b45c1f7f96357a70e3116ccf61cf0135f67b2aa293699de35170}10000 /100.0 AS MONEY),

SomeDate = CAST(RAND(CHECKSUM(NEWID()))*3653.0+36524.0 AS DATETIME),

SomeHex12 = RIGHT(NEWID(),12)

,TheBlob= REPLICATE('Hey, Dracula!! Why do you say blah, blah blah?',10)

INTO Sandbox2.dbo.millionrowtest

FROM Master.dbo.SysColumns t1,

Master.dbo.SysColumns t2

--drop table millionrowtest

GO

SELECT DB_NAME(database_id) AS DBName,name AS FileName,size/124.0 AS size_mb

FROM master.sys.master_files

WHERE database_id in (2,DB_ID('Sandbox2'));I didn’t post the numbers earlier for my files for the Sandbox2 database, but they were small. I had a 2MB data file and a 1mb log file. Now, when I look at the results from that last query that included the file size information, I will see a database that has grown a fair amount.

That should be enough of a change to have triggered something in our extended event session. Let’s take a look at the session. To do that, I am going to pull out a query to help parse the XML and see what has happened. Here is that query:

SELECT

event_data.value('(event/@name)[1]', 'varchar(50)') AS event_name

,event_data.value('(event/@timestamp)[1]','varchar(max)') as timestamp

,event_data.value('(event/data[@name="count"]/value)[1]', 'bigint') AS DbSizeChangeTo_KB

,event_data.value('(event/action[@name="sql_text"]/value)[1]', 'varchar(max)') AS sql_text

,db_name(event_data.value('(event/action[@name="database_id"]/value)[1]', 'int')) AS DBQueryExecutedFrom

,db_name(event_data.value('(event/data[@name="database_id"]/value)[1]','int')) as AffectedDB

,event_data.value('(event/action[@name="client_hostname"]/value)[1]', 'varchar(max)') AS ClientHost

,event_data.value('(event/action[@name="session_id"]/value)[1]', 'varchar(max)') AS session_id

FROM(

SELECT CAST(event_data AS xml) AS TargetData

FROM sys.fn_xe_file_target_read_file('C:\XE\DBFileSizeChange*.xel','C:\XE\DBFileSizeChange*.xem',NULL, NULL)

) AS evts(event_data)

WHERE event_data.value('(event/@name)[1]', 'varchar(50)') = 'databases_log_file_size_changed'

or event_data.value('(event/@name)[1]', 'varchar(50)') = 'databases_data_file_size_changed'

--or event_data.value('(event/@name)[1]', 'varchar(50)') = 'databases_log_growth'

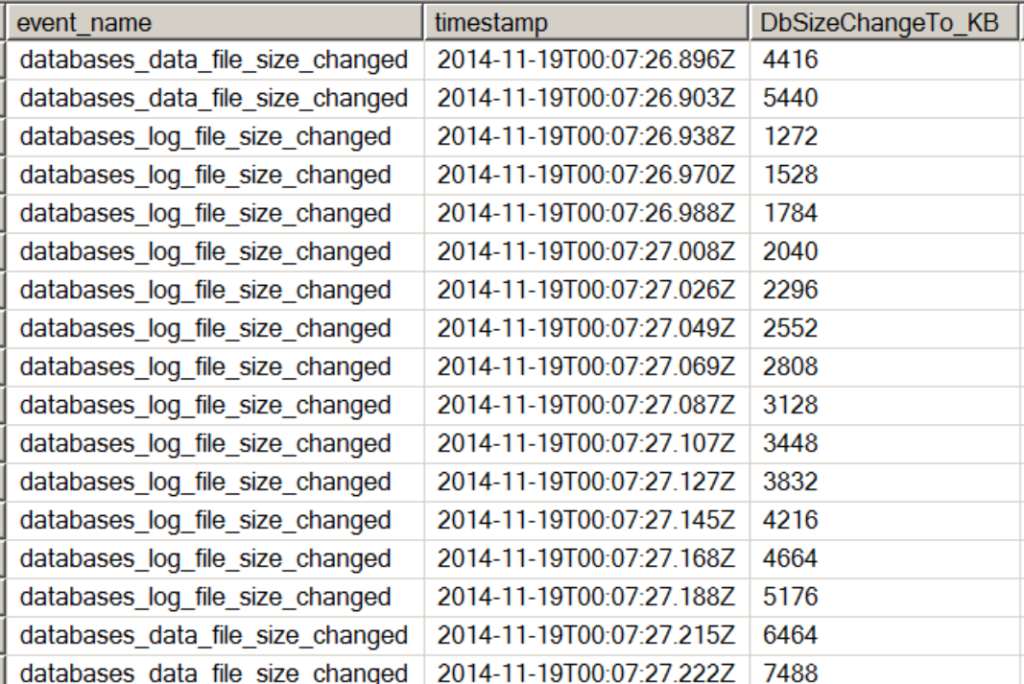

ORDER BY timestamp asc;Despite having two targets in my session, we will only cover the query that helps parse the data from the asynchronous file target. When I run that query against the files that exist for this session I get a result set of 90 records on my system. That probably seems like a ton of results for such a small increase in the database. As it would happen, I left the growth settings at the default growth increments (don’t do that in a production system) and this means I get a ton of growth activities at very small numbers (1mb for the data file and 10% for the log file). Here is a sample of the output:

You can see how this might be helpful for when a process runs hog wild in the environment. What about going in the other direction though? What if we need to know about when the database is shrunk or when a file is shrunk? Well, let’s do that too. Let’s try the following query.

USE [Sandbox2]

GO

DBCC SHRINKFILE (N'Sandbox2' , 1)

GO

USE [Sandbox2]

GO

DBCC SHRINKFILE (N'Sandbox2_log' , 1)

GO

SELECT DB_NAME(database_id) AS DBName,name AS FileName,size/124.0 AS size_mb

FROM master.sys.master_files

WHERE database_id in (2,DB_ID('Sandbox2'));

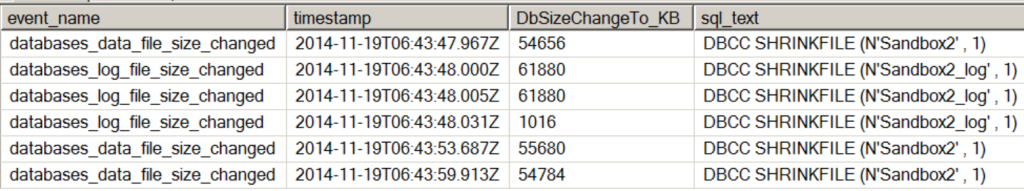

GOAgain, I check for a baseline on the files to see if the file sizes changed. In this case, you can run that final query and compare or just trust me on it. Having shrunk both files in the Sandbox2 database, let’s check the XE session data again:

Check that out! We have captured the shrink events too! There are multiple shrink events in this case only because I ran the shrink statements multiple times. This is excellent news for everybody that is trying to keep an eye on these database size changes. You can see in the XE session that I applied the sql_text() action. I have done this so I will be able to see what query caused the growth or shrink event to occur. Even better news is that this event session is perfect for those of you still on SQL 2008.

If you enjoyed this article, check out some of the follow-up articles: Data Growth Audits or Mysterious Growth.