Suppose you have several folders in your Azure Blob storage container, where daily product inventory data from different stores is saved. You need to transfer those files, which hold daily snapshots of product stock, to a database.

A natural way to do this is to get a list of files from all sourcing folders and then load them all into your database.

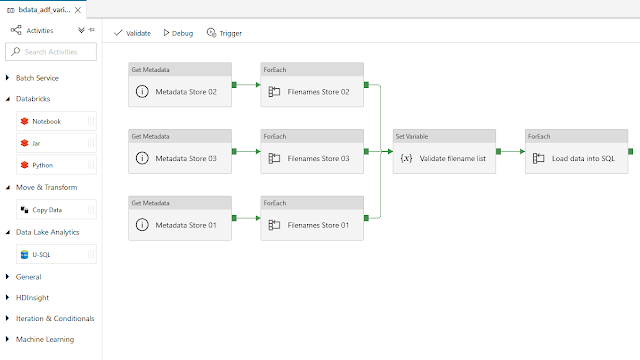

Technically:

1) We can read metadata of our sourcing folders from the Blob storage

2) Then we can extract all the file names and save them in one queue object

3) And finally, use this file list queue to read and transfer data into a SQL database.

Let's recreate this use case in our Azure Data Factory pipeline.

1) To get metadata of our sourcing folders, we need to select "Child Items" for the output of our [Get Metadata] activity task.

This returns the list of sub-folders and files inside the given folder, with the name and type of each child item.

Here is an output of this task using a list of files from my first store:
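The shape of such an output can be sketched in Python. This is only an illustration: the file and folder names are invented, and the helper child_names is hypothetical. What it mirrors is the list of child items, each carrying a name and a type.

```python
# Hypothetical stand-in for the output of the [Get Metadata] activity
# with "Child Items" selected. All names below are invented examples.
metadata_store_01_output = {
    "childItems": [
        {"name": "store01_day1.csv", "type": "File"},
        {"name": "store01_day2.csv", "type": "File"},
        {"name": "archive", "type": "Folder"},
    ]
}


def child_names(output, item_type=None):
    """Return the names of child items, optionally restricted to one type."""
    return [
        item["name"]
        for item in output["childItems"]
        if item_type is None or item["type"] == item_type
    ]


# Every child item, sub-folders included.
all_names = child_names(metadata_store_01_output)

# Only the items whose type is "File".
file_names = child_names(metadata_store_01_output, item_type="File")
```

Because each item carries its type, sub-folders can be told apart from files before anything is queued for loading.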

2) Then I need to extract the file names. To make that happen, I pass the output of the [Get Metadata] activity task to my [ForEach] container, where the Items parameter is set to @activity('Metadata Store 01').output.childitems.

Within this loop container, I use the new [Append Variable] activity task and append only the name of each item, not its type, from the output of the previous task. Please note that this task can only be used with variables of the 'Array' type in your pipeline.

Then all that I have to do is to replicate this logic for other sourcing folders and stream the list of the file names to the very same variable.

In my case it's var_file_list. Don't forget to define all the necessary variables within your pipeline.
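To make the append logic concrete, here is a minimal Python sketch of it. It is a toy model, not Azure Data Factory code: the class PipelineVariables, the var_note variable and the file names are all assumptions made for illustration. It shows two folders feeding the same array variable, and an append being rejected for a variable that is not an array.

```python
class PipelineVariables:
    """Toy model of pipeline variables; not an Azure SDK class."""

    def __init__(self, **declared):
        # Variables have to be declared before they can be used.
        self._values = dict(declared)

    def append(self, name, value):
        # Mimics the rule that appending only works for 'Array' variables.
        if name not in self._values:
            raise KeyError(f"variable {name!r} is not defined")
        if not isinstance(self._values[name], list):
            raise TypeError(f"variable {name!r} is not an Array")
        self._values[name].append(value)

    def get(self, name):
        return self._values[name]


# Invented child items of two sourcing folders.
store_01 = [{"name": "store01_a.csv", "type": "File"}]
store_02 = [
    {"name": "store02_a.csv", "type": "File"},
    {"name": "store02_b.csv", "type": "File"},
]

# var_file_list is an array; var_note is a string added only for contrast.
variables = PipelineVariables(var_file_list=[], var_note="")

# One loop per folder, each appending only the names to the same variable.
for child_items in (store_01, store_02):
    for item in child_items:
        variables.append("var_file_list", item["name"])

# Appending to a non-array variable is refused.
try:
    variables.append("var_note", "store01_a.csv")
    append_rejected = False
except TypeError:
    append_rejected = True
```

The point of the sketch is that every folder writes into one shared list, so later steps only need to read a single variable.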

3) Once all the file names are extracted into my array variable, I can use it as a queue for my data load task. The Items parameter of my next [ForEach] loop container will be set to this value: @variables('var_file_list')

The inner [Copy Data] activity task within this loop container receives each file name from the current item of the loop over my variable, [@item()].

The list of files is appended from each sourcing folder, and then all the files are successfully loaded into my Azure SQL database. To check the final list of file names, I copied the content of my var_file_list variable into another testing variable, var_file_list_check, to validate its content.
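The second loop and the final check can be sketched in Python as well. Again, this is a simplified stand-in under stated assumptions: copy_data is a hypothetical function that only records which file was loaded, the loaded list plays the role of the Azure SQL table, and the file names are invented.

```python
def copy_data(file_name, target_table):
    """Hypothetical stand-in for [Copy Data]: records one loaded file."""
    target_table.append({"source_file": file_name})


# Invented content of the array variable after both folders were processed.
var_file_list = ["store01_a.csv", "store02_a.csv", "store02_b.csv"]

# Stands in for the destination table in the database.
loaded = []

# Each pass of the loop hands one file name to the copy step,
# the way @item() does inside the [ForEach] container.
for item in var_file_list:
    copy_data(item, loaded)

# Copy the variable into a second one to inspect what was queued.
var_file_list_check = list(var_file_list)
```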

Azure Data Factory allows more flexibility with this new [Append Variable] activity task, and I do recommend using it more in your data flow pipelines! 🙂