In a previous article I discussed the various methods available in SQL Server 2016 & 2017 to perform in-database analytics. In the article I used a model that was stored directly inside SQL Server to perform predictions. But what if your models are not stored inside SQL Server but are build inside Azure Machine Learning?

In this article I am going to show you how you could integrate the worlds of SQL Server and Azure Machine learning by performing model scoring on data that is stored in a SQL Server database through an AzureML web service directly in SQL Server!

Scenario

We are aiming to build a solution for the following scenario:

- Machine Learning model is build inside AzureML;

- Our data is stored inside SQL Server;

- We want to perform a prediction on data as soon as it is inserted into a table;

- We want to store the predicted value with the data we just inserted.

What I came up with is the following workflow:

- A new row of data is inserted into a table;

- A trigger grabs the data that is going to be inserted;

- The trigger then pushes the inserted values to a stored procedure;

- The stored procedure calls an in-database R script through the sp_execute_external_script procedure. The R script will take care of pushing the values to the AzureML webservice and returns the predicted value;

- The predicted value is returned to the trigger which will then insert the new row inside the table together with the predicted value.

There are a couple of things to keep in mind when you run this scenario. First of all, your SQL Server Instance should be connected to the internet since that’s the place our model is stored. Second of all, we are using a trigger to call the AzureML webservice, this means that the inserting or rows will have to wait till we have a predicted value returned by the webservice which can lead to blocking issues when many concurrent inserts occur. In situations where you have a high insert workload it would probably be better to move the predictions to a separate process instead of a trigger so the inserts are not slowed down.

The AzureML Predictive Experiment

To show you a working example of the scenario I described above I created an AzureML experiment based on the “Iris” dataset. The Iris dataset can be used to classify a plan species based on four features of a plant. The dataset is directly available in AzureML as an example dataset.

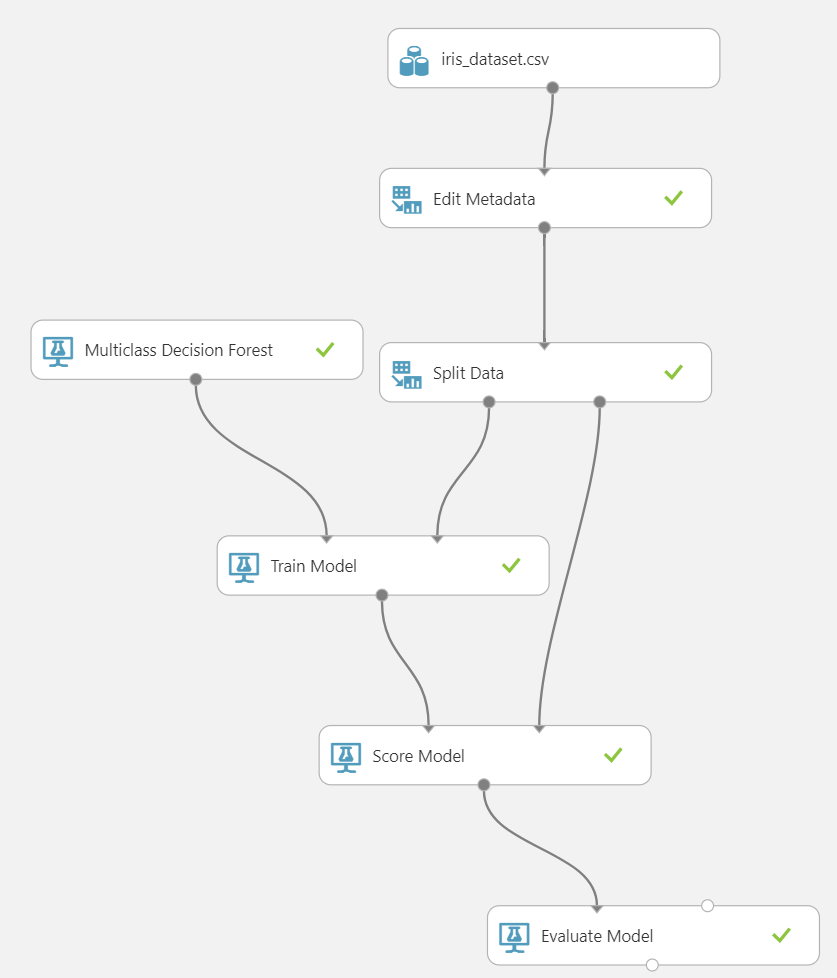

The AzureML training experiment I build is very simple and can be seen in the image below.

In this case I used a .csv file that contains the training data from the Iris dataset. Using the “Edit Metadata” module I convert the “Species” feature to a categorical feature. All the other features are left untouched. I then performed a split on the data, leaving 80% of the data to train the Multiclass Decision Forest and 20% to test the model.

From this training experiment I generated a predictive experiment which can be seen below.

I did move some things around and added two “Select Columns in Dataset” modules to configure what data goes into the model and what data is returned by the trained model. In the first “Select Columns in Dataset” I filtered out the “Species” feature since we will not specify it when we call upon the model to do a prediction. I connected the “Web service input” model directly to the “Score Model” module so it expects the same input features we configured in the “Select Columns in Dataset” module. The second “Select Columns in Dataset” module only selects the “Scored Labels” column. The reason for this is that I only want the predicted species returned by the predictive experiment. If you do not add this “Select Columns in Dataset” module everything, including the features we specified, will be returned by the experiment.

After saving and running the predictive experiment I generated an AzureML webservice that we will use to perform our predictions later on.

I removed the API key. If you plan on rebuilding this example make sure to save the key since we will need it later on.

Now that we have an predictive experiment and a webservice available to us in AzureML, let’s take a look at the configuration we need inside SQL Server to use the AzureML webservice.

SQL Server configuration

The first thing I would like to point out here is that you will need to run SQL Server 2016 or 2017 with in-database R services enabled if you plan to recreate the examples. The reason for this is that we will be using the sp_execute_external_script procedure to execute R code that will access the AzureML webservice.

To start off I am going to create the table that will hold our data. All the Iris information and the predicted species will be written to this table:

CREATE TABLE [dbo].[iris] ( [Sepal_Length] [real] NULL, [Sepal_Width] [real] NULL, [Petal_Length] [real] NULL, [Petal_Width] [real] NULL, [Predicted_Species] [varchar](50) NULL ) GO

Now that we have our table in place to hold our data it’s time to look at the Stored Procedure we will use to perform the webservice request. The Stored Procedure contains the R code needed to call the webservice and will return the predicted species as an output parameter. We will call the Stored Procedure using an Insert trigger we will create later on.

CREATE PROCEDURE [dbo].[sp_Predict_Iris]

@sepal_length REAL,

@sepal_width REAL,

@petal_length REAL,

@petal_width REAL,

@predicted_species VARCHAR(50) OUTPUT

AS

EXEC sp_execute_external_script

@language= N'R',

@script = N'

library("RCurl")

library("rjson")

options(RCurlOptions = list(cainfo = system.file("CurlSSL", "cacert.pem", package = "RCurl")))

h = basicTextGatherer()

hdr = basicHeaderGatherer()

req = list(

Inputs = list(

"input1"= list(

list(

"Sepal_Length" = as.character(sepal_length_R),

"Sepal_Width" = as.character(sepal_width_R),

"Petal_Length" = as.character(petal_length_R),

"Petal_Width" = as.character(petal_width_R)

)

)

),

GlobalParameters = setNames(fromJSON("{}"), character(0))

)

body = enc2utf8(toJSON(req))

# Copy the API key here

api_key = "[API KEY HERE]"

authz_hdr = paste("Bearer", api_key, sep=" ")

h$reset()

# Copy the Request-Response URL here

curlPerform(url = "[Request-Response URL HERE]",

httpheader=c("Content-Type" = "application/json", "Authorization" = authz_hdr),

postfields=body,

writefunction = h$update,

headerfunction = hdr$update,

verbose = FALSE

)

headers = hdr$value()

result = h$value()

scored_results <- as.data.frame((fromJSON(result)))

predicted_species_R <- as.character(scored_results$Scored.Labels)',

@params = N'@sepal_length_R real,

@sepal_width_R real,

@petal_length_R real,

@petal_width_R real,

@predicted_species_R varchar(50) OUTPUT',

@sepal_length_R = @sepal_length,

@sepal_width_R = @sepal_width,

@petal_length_R = @petal_length,

@petal_width_R = @petal_width,

@predicted_species_R = @predicted_species OUTPUT

WITH RESULT SETS NONE;

RETURN

GOThere are a couple things to take note of in the code of the Stored Procedure. First of all, since we are calling sp_execute_external_script you will need to have installed R Services (In-Database) for SQL Server 2016, or Machine Learning Service (In-Database) for SQL Server 2017.

To perform the web-request you will need to install two additional R packages: RCurl and rjson. There are various methods you can use to add additional libraries to the in-database R installation and they are described in this article: https://docs.microsoft.com/en-us/sql/advanced-analytics/r/install-additional-r-packages-on-sql-server.

In the code above you will also need to add two things: your API key, which you received when creating the webservice and the Request-Response URL. The last one can be a bit tricky to find, the easiest way is to open the properties of your webservice through the AzureML Studio page and click the “New Web Service Experience”:

From that page click on the “Consume” button at the top and the Request-Response URL should be in the list together with your primary and secondary API key’s and the Batch Requests URL.

With all the information added to the create Stored Procedure script so it points to the correct AzureML webservice we created earlier, run the script to create the stored procedure.

Consuming the AzureML webservice

With the AzureML webservice in place and the results table and stored procedure created we are ready to call the AzureML webservice to perform predictions!

Let’s start by testing the Stored Procedure we created in the previous section:

DECLARE @prediction_results VARCHAR(50) EXEC sp_Predict_Iris @sepal_length = 5.2, @sepal_width = 2.7, @petal_length = 3.9, @petal_width = 1.4, @predicted_species = @prediction_results OUTPUT SELECT @prediction_results

If everything is working and the connection to the AzureML webservice is functioning you should get a result like this:

If you do not see a result or get an error message, make sure that your API key and the request-response URL are filled in correctly in the Stored Procedure and you have a working internet connection so you can actually access the webservice.

If everything is working so far we can go to the last step and build the trigger that will execute the Stored Procedure and writes the features and predicted species into a table.

CREATE TRIGGER trgPredictSpecies ON dbo.iris

INSTEAD OF Insert

AS

DECLARE @sepal_length_TR varchar(50)

DECLARE @sepal_width_TR varchar(50)

DECLARE @petal_length_TR varchar(50)

DECLARE @petal_width_TR varchar(50)

SELECT @sepal_length_TR = i.Sepal_Length FROM inserted i

SELECT @sepal_width_TR = i.Sepal_Width FROM inserted i

SELECT @petal_length_TR = i.Petal_Length FROM inserted i

SELECT @petal_width_TR = i.Petal_Width FROM inserted i

DECLARE @predicted_output VARCHAR(50)

-- Execute the Stored Procedure

EXEC sp_Predict_Iris

@sepal_length = @sepal_length_TR,

@sepal_width = @sepal_width_TR,

@petal_length = @petal_length_TR,

@petal_width = @petal_width_TR,

@predicted_species = @predicted_output OUTPUT

-- Insert the values we supplied on the INSERT statement together with the

-- predicted species class we retrieved from the AzureML web service

INSERT INTO Iris (Sepal_Length, Sepal_Width, Petal_Length, Petal_Width, Predicted_Species)

VALUES (@sepal_length_TR, @sepal_width_TR, @petal_length_TR, @petal_width_TR, @predicted_output)The trigger will fire as soon as we add a new record to the “iris” table. It will grab the values we supplied in the INSERT statement and pass them to the Stored Procedure that call the AzureML webservice. It will then grab the returned predicted species value and together with the insert values add them to the “iris” table.

Let’s see if it works!

INSERT INTO iris ( Sepal_Length, Sepal_Width, Petal_Length, Petal_Width ) VALUES ( 5.1, 2.6, 3.8, 1.3 )

SELECT * FROM iris

If everything worked correctly the result of the last SELECT query should look like this:

Summary

In this article we used in-database R code call a webservice we created in AzureML. Through the webservice we were able to perform a prediction using the Iris dataset and return that information inside a SQL Server table with the use of a trigger mechanic.

Before we finish this article, there are some important things you might want to keep in the back of your head when building a solution like this.

While the scenario I describes works perfectly, it is far more efficient to build and store your model directly in SQL Server instead of AzureML. The simple reasoning here is that data has to travel to and from the AzureML webservice which can delay the prediction and requires an internet connection on the SQL Server machine.

Another important aspect is the use of a trigger. Using a trigger in situations where there is a big insert workload will probably result in quite some pain. Insert triggers are notorious for causing deadlocking and slowdown, especially now that we have to access a webservice on the internet.

A better alternative might be to not use a trigger to access the AzureML webservice but rather build an automated process that grabs the unscored data inside a table and pushes it to AzureML for scoring. That way insert statements will not be slowed down and you can avoid excessive locking. This does mean scoring does not directly occur when data is inserted inside a table though.