By Steve Bolton

…………In A Rickety Stairway to SQL Server Data Mining, Part 14.3: Debugging and Deployment, we passed the apex of this series of amateur self-tutorials on SQL Server Data Mining (SSDM) and have seen the difficulty level and real-world usefulness of the material decline on a steady slope since then. This week’s topic is a bit more challenging than the material covered in the last article, A Rickety Stairway to SQL Server Data Mining, Part 14.7: Additional Plugin Functionality, but only because it pertains to an SSDM feature that is poorly documented, to the point that it is sometimes difficult to determine whether the lack of results is due to bugs or incorrect usage. In fact, the only way I have been able to get the product’s Predictive Model Markup Language (PMML) support features to work at all is by implementing the relevant methods in SSDM’s extensibility architecture for adding custom algorithms.

…………PMML was developed in the late 1990s by the Data Mining Group (DMG), a consortium of data mining product vendors, in order to facilitate interoperability between their disparate mining tools using XML. The consortium maintains a handy list of vendors that support particular versions of PMML at the DMG.org website, which is also the central repository of other critical information on PMML, particularly schema specifications. The vendors on that list include Weka, Tibco, Angoss, Augustus, BigML, Experian, IBM InfoSphere, Knime, KXen, MicroStrategy, Pervasive DataRush, Predixion Software, RapidMiner, R/Rattle, Salford Systems, Sand CDBMS, SAS Enterprise Miner, Teradata, Clementine and Zementis. The last of these even has a PMML Converter product that can translate older PMML documents to the latest standard, which I have yet to try out. Unfortunately, Microsoft has fallen further behind the current standard than any other vendor on the list, thanks to the pattern of neglect by the company’s top brass that I have spoken about before. It only supports version 2.1, which was released way back in March 2003, according to the helpful Wikipedia page on PMML. The latest release was version 4.1, back in December of 2011, a scant two years ago. Most portability features in the computing field don’t live up to their billing in their earliest incarnations, due to various kinks in the translation processes, lack of strict adherence to interoperability standards and lack of widespread adoption among vendors. PMML is no exception to that rule of thumb and Microsoft’s support for the standard is frozen at a point in time when that rule was much more applicable. Because I am a rookie at this, I cannot yet gauge how common it is for mining models to be exchanged today between different tools using PMML; perhaps Microsoft determined it was one of those pie-in-the-sky standards that didn’t provide much benefit for end users and thus didn’t bother to update its implementation. 
Either way, the bottom line is the same: regardless of what the rest of the industry is doing with PMML, use cases in which mining models can be successfully exchanged in this way with SSDM are going to be quite rare. I’m not going to spend much time on this topic because SSDM is using an obsolete version of PMML that is more than a decade old. Furthermore, it is limited to just two or three (Microsoft’s documentation is unclear) of the nine algorithms included with SSDM out-of-the-box, which we discussed much earlier in this series. Moreover, this stunted feature is also unwieldy and poorly documented, thereby making it even less useful.

…………In theory, it is possible to create a SQL Server mining model using a statement like CREATE MINING MODEL MyPMMLMiningModelName FROM PMML 'My XML String', by pasting the full string of a PMML 2.1 document created by a different mining tool. It should also be possible to create PMML documents from existing SQL Server mining models using a statement like SELECT * FROM MyPMMLMiningModelName.PMML. The DMSCHEMA_MINING_MODEL_CONTENT_PMML and DMSCHEMA_MINING_MODEL_XML schema rowsets return basically the same information in a common set of columns, which will be blank for mining models that are not derived from PMML. The MODEL_PMML column is supposed to display the PMML text associated with a model, while the SIZE column contains the length of the PMML definition in bytes and LOCATION should contain the document’s storage location on disk. Unless a model is created from PMML, however, these schema rowsets are useless. Yet in many cases there is no practical benefit to creating a model that way, because recreating the ported mining model in the Visual Studio GUI may be less of a hassle than dealing with endless XML parsing errors, even for the PMML version and algorithm types that SQL Server can theoretically handle. It is good that Microsoft’s Data Mining Team included nascent features for working with PMML way back in 2005, but the neglect the top brass at Microsoft has displayed towards data mining tools since then has had a greater impact on this stillborn feature than any other component of SSDM. I’m not going to say that it’s useless in its current stunted form, because there are undoubtedly PMML documents out there that SSDM can parse correctly, thereby saving data miners the time of implementing them a second time. Models that will port correctly for the narrow set of algorithms and ancient PMML version SQL Server theoretically supports are not easy to come by though, which diminishes its usefulness further.
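The two DMX statements quoted at the start of this section can be assembled in code before they are sent to the server. What follows is only a minimal sketch: the module and function names are hypothetical, and I am assuming that single quotes inside the PMML text are escaped by doubling them, as in other SQL-style string literals. It builds the statement text and does not execute anything against Analysis Services.

```vbnet
Imports System

Module PmmlDmxSketch

    ' Hypothetical helper: wraps a PMML document in the CREATE MINING MODEL ... FROM PMML statement.
    Function BuildCreateFromPmml(modelName As String, pmmlText As String) As String
        ' Assumption: embedded single quotes are escaped by doubling them.
        Dim escaped As String = pmmlText.Replace("'", "''")
        Return "CREATE MINING MODEL " & modelName & " FROM PMML '" & escaped & "'"
    End Function

    ' Hypothetical helper: builds the query that asks a model for its PMML rendering.
    Function BuildSelectPmml(modelName As String) As String
        Return "SELECT * FROM " & modelName & ".PMML"
    End Function

    Sub Main()
        ' The PMML fragment here is a placeholder, not a valid PMML 2.1 document.
        Console.WriteLine(BuildCreateFromPmml("MyPMMLMiningModelName", "<PMML version='2.1'/>"))
        Console.WriteLine(BuildSelectPmml("MyPMMLMiningModelName"))
    End Sub

End Module
```

Keeping the statement construction in one place makes it easier to log the exact text that was submitted when the server answers with one of its parsing errors.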

…………For example, five of the sample models available at the DMG’s PMML Sample Models Archive are Clustering or Decision Trees algorithms encoded in PMML 2.0 or 2.1, which means SQL Server should theoretically have been able to parse them. Yet all five models produced a variety of error messages when the text was pasted into SQL Server’s CREATE MINING MODEL statement, including “Incorrect document syntax,” “Cannot parse mining model due to malformed PMML” and various “PMML parsing failed” errors. The most ominous of these was a warning that “The model inside the PMML 2.1 document uses an algorithm that is not supported,” after the document was apparently parsed correctly. Whether they were malformed because of some fault in DB2 Intelligent Miner, the IBM product that produced the documents, or could not be parsed due to some error on SQL Server’s part is difficult to determine. The bottom line is that getting this portability feature to actually work between different data mining tools may be a taller order than it seems, one that may involve a lot of unwieldy, time-consuming editing of documents by hand. For relatively small documents under a megabyte in size it might be feasible to fix invalid PMML manually, but it would defeat the purpose of this portability feature if we had to do this kind of menial work to translate all 22 gigabytes of data stored in the mining structures we’ve used as samples in this series. And this is a pittance compared to the terabytes or even petabytes of information required when Big Analysis is performed on Big Data. The latter term is of course a buzzword that obscures the fact that Data has always been getting Bigger, throughout much of the history of the human race; it is a lot like the term “globalization,” which has been occurring steadily for millennia, as I learned while I was studying foreign policy history in grad school. 
One of the best strategies for coping with the Even Bigger Data of the 21st Century is to develop tools that automate the processing of such data, which will become a Big Hassle in and of itself, in tandem with the size of the datasets. PMML is designed to do this by performing the task of porting mining models, but at the point when the 2.1 specification was released, it apparently could not be done sufficiently “under the hood” to make user intervention unnecessary. Until Data Mining Expressions (DMX) statements like these can be made to work without a lot of costly attention on the part of end users, their practical benefits will diminish in tandem with the rise of Big Data, as the costs in terms of time and energy to use them increase with each megabyte of XML that has to be checked manually. Certainly, with sufficient expenditure of resources, PMML documents can be reworked to fit into the existing SSDM architecture, but those costs are already prohibitive and only increasing as time passes.

…………Similarly, if you run a DMX query like SELECT * FROM DMSCHEMA_MINING_MODEL_CONTENT_PMML, it is a good idea to add a WHERE clause, because the statement will return an error message about the first model it fails to process instead of the results. Unfortunately, most of the models I created earlier in this series could not be included in this statement due to various error messages. Books Online says that Naive Bayes, Decision Trees and Clustering are the only ones out of the nine out-of-the-box algorithms we surveyed earlier in this series which support “the use of Predictive Model Markup Language (PMML) to create mining models,” but it is unclear from this wording whether BOL means creating models from PMML or vice-versa. Apparently it is the former, because the ALLOW_PMML_INITIALIZATION column of the DMSCHEMA_MINING_SERVICES schema rowset is only true for Decision Trees and Clustering, whereas queries of DMSCHEMA_MINING_MODEL_CONTENT_PMML against Naive Bayes models always fail. All three of the models we retained in A Rickety Stairway to SQL Server Data Mining, Algorithm 1: Not-So-Naïve Bayes returned error messages when included in queries to this schema rowset, but for different reasons. Limiting the WHERE clause to NBRecordTableViewModel returned a long message including the phrase “persisted file cannot be found” and the path of the SQL Server Analysis Services (SSAS) file containing the model, which usually signifies that the model has not been processed yet. Processing Naive Bayes models won’t lead to successful queries of DMSCHEMA_MINING_MODEL_CONTENT_PMML though, because you’re likely to encounter a message that “the algorithm does not support the functionality requested by the ‘’ model,” which is a tip-off that it does not support deriving PMML documents from model content queries. 
This was the case with NBDenormalized3Model, but NBDenormalizedAllModel returned one of those inscrutable error messages that crop up every so often in Analysis Services: “An unexpected error occurred (file ‘mddiscretizer.cpp’, line 1689, function ‘MDDiscretizer::GetBucketRange’).” Not surprisingly, searches for the term “GetBucketRange” with both “Sql server” and “Discretizer” returned no hits on Google. I suspect that GetBucketRange might someday belong in the invaluable list Jeannine Takaki posted last year in the TechNet thread A Guide to the (Undocumented) System Stored Procedures for Data Mining. Given that it is unlikely that Microsoft’s obsolete implementation of PMML can be used with Naive Bayes in this way, I didn’t make solving this bug a priority.

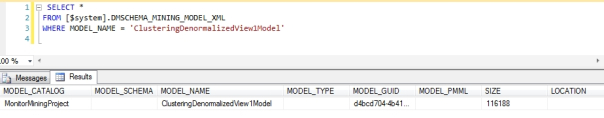

…………Since BOL and ALLOW_PMML_INITIALIZATION both state that Decision Trees is supported by SSDM, I did not expect to have different errors returned for all ten of the mining models we used in A Rickety Stairway to SQL Server Data Mining, Algorithm 3: Decision Trees. DTDenormalizedView3Model, DTDenormalizedView3ModelDiscrete and DTDenormalizedView3ModelDiscrete2 all returned an error to the effect that the “mining model has a column that uses the DATE data type. This data type is not supported by the PMML 2.1 specification. The DATE data type can typically be converted to the DOUBLE data type as a workaround, which is supported by the PMML 2.1 specification.” Because the other seven models all returned the more ominous error that the “mining model contains features that are not supported in the PMML 2.1 specification,” I skipped debugging the date error and altered one model so that it had just one attribute (marked as both an input and output), to see if I could return any Decision Trees PMML at all. Alas, I received the same error and reached the conclusion that Microsoft’s implementation of Decision Trees is not compliant with its implementation of PMML 2.1, but that it didn’t document precisely which of the algorithm features are the root cause. I also reached the conclusion that it was not worth solving the problem by trial and error, since we’re dealing with an implementation of PMML that is not just obsolete, but buggy and undocumented. I also received the date error when running the query against ClusteringDenormalizedView3Model. The other 16 mining models we used in A Rickety Stairway to SQL Server Data Mining, Algorithm 7: Clustering returned without errors, but the MODEL_PMML column was blank in each case. As Figure 1 shows, SSDM returned such basic information as the MODEL_CATALOG, MODEL_NAME and MODEL_GUID, but left the others blank. Note how the second query returns identical information when using a SELECT…PMML statement. 
The result is the same: the MODEL_PMML column that is supposed to return “An XML representation of the model’s content in PMML format,” as BOL states, is still blank.

Figure 1: Sample Query of DMSCHEMA_MINING_MODEL_CONTENT_PMML

…………To date, the only way I have been able to get the MODEL_PMML columns of these statements to return a PMML document is by implementing the logic myself during the development of custom algorithms. The only documents I have yet encountered which the CREATE MINING MODEL statement would accept as valid were likewise produced this way, using several methods built into the plug-in architecture for that purpose. Implementing PMML support in a plug-in algorithm is mainly a matter of adding three optional Overridable methods to your code. The easiest of these is the SupportsPMML member of AlgorithmMetadataBase, which is another one of those methods that behaves like a property and merely returns a Boolean value. The heavy lifting is done in the RenderPMMLContent and LoadPMMLContent methods of AlgorithmBase. First we’ll use RenderPMMLContent to produce a PMML document from the PluginModel we’ve been using in this section of the Rickety series, on building custom algorithms for SQL Server. When you perform a SELECT…PMML query against a plug-in algorithm, SQL Server will call your plug-in’s RenderPMMLContent method first, followed by the HasFeatureSelection and GetAllFeatureSelectionInfo methods we discussed in our last article. I have yet to see msmdsrv.exe call GetAttributeFeatureSelectionInfo during debugging, but these three methods are apparently designed merely to populate PMML models with feature selection information. The PMMLWriter is automatically passed by the msmdsrv process and only needs to be populated with valid PMML attributes. The WriteStartElement and WriteAttributeString statements in Figure 2 assign the values of certain mandatory fields of the algorithm content portion of an XML document. 
WriteAttributeString is one of a family of PMMLWriter object members that perform similar functions, except on different data types, such as WriteAttributes, WriteBinHex, WriteCData, WriteChars, WriteComment, WriteElementString, WriteEndAttribute, WriteFullEndElement, WriteName, WriteNmToken, WriteNode, WriteQualifiedName, WriteRaw, WriteStartAttribute, WriteString, WriteValue and WriteWhitespace. The plug-in documentation mentions that some of the methods will throw an exception if invoked in .Net plug-ins, but be wary, because the list is incomplete.

Figure 2: The RenderPMMLContent Method

    Protected Overrides Sub RenderPMMLContent(PMMLWriter As PMMLWriter)
        ' open the model element; the name must be one that PMML 2.1 recognizes
        PMMLWriter.WriteStartElement("NaiveBayesModel")
        PMMLWriter.WriteAttributeString("modelName", Me.Model.Name)
        PMMLWriter.WriteAttributeString("functionName", "classification")
        PMMLWriter.WriteAttributeString("algorithmName", Me.Model.Metadata.GetServiceName())
        ' attribute values are written as strings
        PMMLWriter.WriteAttributeString("threshold", "1")
        ' let the SDK's built-in methods render the standard sections
        Me.Model.RenderModelCreationFlagsPMML(PMMLWriter)
        Me.Model.RenderMiningSchemaPMML(PMMLWriter)
        Me.Model.RenderModelStatisticsPMML(PMMLWriter)
        PMMLWriter.WriteEndElement()
    End Sub
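To see what the WriteStartElement, WriteAttributeString and WriteEndElement calls in Figure 2 emit without attaching a debugger to msmdsrv, the same pattern can be tried against the standard System.Xml.XmlWriter. This is only a sketch under the assumption that PMMLWriter behaves like XmlWriter, whose member names it mirrors; the module name, function name and the "MyPluginAlgorithm" service name are hypothetical, and the three Render…PMML calls are left out because they belong to the plug-in SDK.

```vbnet
Imports System
Imports System.Text
Imports System.Xml

Module PmmlWriterSketch

    ' Hypothetical helper: writes the same element and attributes as Figure 2 with a plain XmlWriter.
    Function RenderFragment(modelName As String, serviceName As String) As String
        Dim buffer As New StringBuilder()
        Dim settings As New XmlWriterSettings() With {.OmitXmlDeclaration = True}

        Using writer As XmlWriter = XmlWriter.Create(buffer, settings)
            writer.WriteStartElement("NaiveBayesModel")
            writer.WriteAttributeString("modelName", modelName)
            writer.WriteAttributeString("functionName", "classification")
            writer.WriteAttributeString("algorithmName", serviceName)
            ' WriteAttributeString takes string values, so the number is converted explicitly.
            writer.WriteAttributeString("threshold", XmlConvert.ToString(1))
            ' Every WriteStartElement needs a matching WriteEndElement.
            writer.WriteEndElement()
        End Using

        Return buffer.ToString()
    End Function

    Sub Main()
        Console.WriteLine(RenderFragment("PluginModel", "MyPluginAlgorithm"))
    End Sub

End Module
```

Forgetting the closing WriteEndElement leaves the element open until the writer is disposed, which is one of the easier ways to end up with a document the server refuses to parse.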

…………The .chm help file of the plug-in software development kit (SDK) provides somewhat impenetrable explanations of the RenderModelCreationFlagsPMML, RenderMiningSchemaPMML and RenderModelStatisticsPMML methods, which is better than no explanation at all, i.e. the result you’ll get when using a search engine to look them up. The good news is that plug-in developers apparently don’t have to write their own implementations of these methods, because the code in Figure 2 works. As their names suggest, RenderModelCreationFlagsPMML renders PMML extensions, which are the equivalent of algorithm parameters and flags; RenderMiningSchemaPMML renders the MiningSchema section of a PMML document; and RenderModelStatisticsPMML takes care of the global stats section (an equivalent of the MarginalStats object in SSDM). AlgorithmMetadataBase.GetMarginalRequirements must be set to AllStats (as discussed earlier in this segment on plug-in algorithms) in order for the global stats section to be rendered correctly. Just like the OpenScope and CloseScope statements we used to encapsulate the logic of the LoadContent and SaveContent methods of the AlgorithmBase class (as explained in A Rickety Stairway to SQL Server Data Mining, Part 14.2: Writing a Bare Bones Plugin Algorithm), our PMMLWriter object requires WriteStartElement and WriteEndElement statements. The most confusing part of the code in Figure 2 might be the mention of Naïve Bayes in the WriteStartElement. The PluginModel we’ve been working with in this segment on custom algorithms doesn’t match any of the broad classes of mining methods supported by the PMML 2.1 standard, so I simply substituted a value that is compliant with that standard. Other potential values include GeneralRegressionModel, ClusteringModel, SequenceModel, NeuralNetwork, AssociationModel, RegressionModel, TreeModel and possibly also a Taxonomy for hierarchized categories. 
Each of these requires highly specific mandatory XML elements that make them more challenging to render; for the full reference, see the DMG webpages on how to structure a 2.1 document and the XSD for that version. For example, the sample code provided in the plug-in SDK by Bogdan Crivat, one of the original SSDM programmers, requires a modelClass tag that is specific to Clustering algorithms. For General Regression, you would have to fill in a ModelType with values like regression, generalLinear, logLinear, multinomialLogistic and so on. I won’t get into an in-depth discussion of these XML elements, because this is a tutorial on how to use Microsoft’s implementation of PMML, not on PMML syntax itself. Now if we run a DMX query like SELECT * FROM PluginModel.PMML, the MODEL_PMML column ought to be populated with a valid XML document like the one depicted in Figure 3, except crammed into one line. We would also see the SIZE column populated with the size of the document in bytes.

Figure 3: Sample PMML Document Returned in the MODEL_PMML Column

…………If we feed this PMML document to SSDM in a single string, at the end of a statement like CREATE MINING MODEL MyPMMLMiningModelName2 FROM PMML, a mining model of the same name ought to be created on the server, within a mining structure that also uses that name, except terminated with the suffix _Structure. Provided, that is, that we implement the LoadPMMLContent method of AlgorithmBase correctly. The XML string passed to one of these statements will be converted to an XMLElement object that SQL Server automatically passes to the LoadPMMLContent method. The code within it must parse the document and build a new mining model out of its PMML elements. The first order of business is to make sure the name of the model element matches the value we passed to PMMLWriter.WriteStartElement; I used a dummy value of Naïve Bayes even though my plug-in is in no way related to that algorithm, as discussed a few paragraphs ago. Although I do not make practical use of this in Figure 4, Crivat also notes in his SDK sample code that it is wise to “Create a namespace manager with the namespace of the content, and prefix it with ‘pmml.’ This way, all the XPath queries will be qualified with pmml.” The If…End If block immediately after the NamespaceManager code is designed to map the PMML document’s Extensions element to our model’s parameters, which were defined in the last tutorial. Crivat advises to “Look for the Extension element with the MiningAlgorithmFlags name and ‘Microsoft Analysis Services’ as extender,” as I do when declaring the ParameterXPath variable. The rest of the code in this block merely loops through the model parameters and assigns the values to our model. After this If…End If block, we would normally put any code (or call methods in our custom classes) for loading elements specific to the type of model we’re working with. 
For example, in Bogdan’s tutorial, this is the point where you’d retrieve the number of clusters, their distributions and other information specific to the variant Clustering algorithm he demonstrates. For Naive Bayes we’d include information on the inputs, outputs and value pairs; a SequenceModel has many required tags, such as those identifying the number of sequences, items and rules; an AssociationModel would of course require information on the itemsets, rules and so forth; neural nets have a lot of specific functionality such as the activation function, thresholds and the number of layers. Likewise, the definitions of models labeled Trees might include split characteristics, whereas General Regression requires specifications for its predictors and the Regression type takes familiar values such as intercepts and coefficients. These would all have to be retrieved from the PMML string here and plugged into the variables of our plug-in that define the structure of the model. I’ll spare readers the quite lengthy code that would be required to illustrate every type of conversion, especially since that would require the development of multiple sample plug-ins, one for each type of PMML model. The important thing is to grasp the process behind it, which entails parsing the XMLElement supplied to the method, using standard .Net XML functionality, and translating it to your model’s variables.

Figure 4: Sample Implementation of the LoadPMMLContent Method

    Protected Overrides Sub LoadPMMLContent(TempAlgorithmContent As System.Xml.XmlElement)
        ' reject any document whose model element is not the one we render
        If String.Compare(TempAlgorithmContent.Name, "NaiveBayesModel", True) <> 0 Then
            Throw New System.Exception("Invalid PMML node: " & TempAlgorithmContent.Name)
        End If

        ' qualify all XPath queries with the pmml prefix
        Dim NamespaceManager As New System.Xml.XmlNamespaceManager(TempAlgorithmContent.OwnerDocument.NameTable)
        NamespaceManager.AddNamespace("pmml", TempAlgorithmContent.NamespaceURI)

        Dim ParameterXPath As String = "pmml:Extension['MiningAlgorithmFlags'=@name and 'Microsoft Analysis Services'=@extender]"
        Dim ParameterNode As System.Xml.XmlNode = TempAlgorithmContent.SelectSingleNode(ParameterXPath, NamespaceManager)

        If ParameterNode IsNot Nothing Then
            Dim ParameterXMLNodeList As System.Xml.XmlNodeList = ParameterNode.SelectNodes("pmml:key-val", NamespaceManager)

            ' loop through the PMML model parameters and assign them to our model's parameters
            ' (an Integer counter avoids an overflow when the list is empty)
            For I As Integer = 0 To ParameterXMLNodeList.Count - 1
                If ParameterXMLNodeList.Item(I).Attributes("name") IsNot Nothing Then
                    Select Case ParameterXMLNodeList.Item(I).Attributes("name").Value
                        Case "MyFeatureSelectionParameter"
                            MyMiningParameterCollection(ParameterXMLNodeList.Item(I).Attributes("name").Value).Value = System.Convert.ToInt32(ParameterXMLNodeList.Item(I).Attributes("value").Value)
                        Case "MyTrainingParameter"
                            MyMiningParameterCollection(ParameterXMLNodeList.Item(I).Attributes("name").Value).Value = ParameterXMLNodeList.Item(I).Attributes("value").Value
                    End Select
                End If
            Next I
        End If
    End Sub
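The parsing steps in Figure 4 can be exercised outside of Analysis Services with nothing more than System.Xml. The sketch below follows the same sequence (namespace manager, XPath lookup of the Extension element, loop over the key-val children) against a hand-made document. The sample XML, the "urn:example:pmml" namespace URI, and the module and function names are all placeholders of my own invention and are not taken from the PMML 2.1 schema or the plug-in SDK.

```vbnet
Imports System
Imports System.Collections.Generic
Imports System.Xml

Module PmmlParameterSketch

    ' Hypothetical sample document; the namespace URI is a placeholder, not the real PMML one.
    Function BuildSample() As String
        Return "<NaiveBayesModel xmlns='urn:example:pmml' modelName='PluginModel'>" &
               "<Extension name='MiningAlgorithmFlags' extender='Microsoft Analysis Services'>" &
               "<key-val name='MyFeatureSelectionParameter' value='3' />" &
               "<key-val name='MyTrainingParameter' value='abc' />" &
               "</Extension></NaiveBayesModel>"
    End Function

    ' Returns the name/value pairs found under the MiningAlgorithmFlags extension.
    Function ReadParameters(pmmlText As String) As Dictionary(Of String, String)
        Dim document As New XmlDocument()
        document.LoadXml(pmmlText)

        Dim modelNode As XmlElement = document.DocumentElement
        Dim manager As New XmlNamespaceManager(document.NameTable)
        ' Bind the pmml prefix to whatever namespace the document declares.
        manager.AddNamespace("pmml", modelNode.NamespaceURI)

        Dim result As New Dictionary(Of String, String)()
        Dim xpath As String = "pmml:Extension[@name='MiningAlgorithmFlags' and @extender='Microsoft Analysis Services']"
        Dim extensionNode As XmlNode = modelNode.SelectSingleNode(xpath, manager)
        If extensionNode Is Nothing Then Return result

        For Each pair As XmlNode In extensionNode.SelectNodes("pmml:key-val", manager)
            Dim nameAttribute As XmlAttribute = pair.Attributes("name")
            Dim valueAttribute As XmlAttribute = pair.Attributes("value")
            ' Skip entries that lack either attribute instead of throwing.
            If nameAttribute IsNot Nothing AndAlso valueAttribute IsNot Nothing Then
                result(nameAttribute.Value) = valueAttribute.Value
            End If
        Next

        Return result
    End Function

    Sub Main()
        For Each entry As KeyValuePair(Of String, String) In ReadParameters(BuildSample())
            Console.WriteLine(entry.Key & " = " & entry.Value)
        Next
    End Sub

End Module
```

Collecting the pairs into a dictionary first, and only then assigning them to the model’s parameters, keeps the XML handling separate from the plug-in objects, which makes this part testable without deploying the algorithm.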

…………If the plug-in code works correctly, msmdsrv will respond to a CREATE MINING MODEL FROM PMML statement by calling this method, then SaveContent to persist the structure of the newly created model. After this, a new mining model and parent structure will be added to your SSAS database. It might conceivably be possible to add functionality to LoadPMMLContent and RenderPMMLContent to handle documents that are compliant with higher versions of PMML than 2.1, but I have yet to verify this; it is also entirely possible that the SSAS query parser will reject syntax that is not 2.1-compliant, regardless of whether a plug-in algorithm’s internal code could successfully process the string or not. This capability would greatly increase the utility of SSDM’s PMML functionality, which would otherwise be the tool’s least useful feature, in its current stunted form. Regardless of whether or not the plug-in architecture can be used to fix the shortcomings of Microsoft’s PMML implementation, the ability to write custom algorithms is one of the most useful features of SSDM, which is in turn the most underrated but productive component of SQL Server. The same can be said of the ability to extend SSDM with custom data visualization capabilities, as we shall discuss in the final segment of the Rickety series.