By Steve Bolton

…………The pinnacle of difficulty in this series of amateur self-tutorials on SQL Server Data Mining (SSDM) was surmounted in the last installment, when we addressed the debugging and deployment of custom plug-in algorithms. From here on, we will be descending back down the stairway, at least in terms of the exertion required to squeeze practical benefit out of the lessons. In our first steps we’ll have to deal with a few aspects of plug-in development that were deferred in order to divide the tutorials into bite-sized chunks, particularly the topic of node navigation. The construction and navigation of a hierarchy of nodes out of our mining results takes place almost exclusively in the AlgorithmNavigationBase class, which we touched on briefly in A Rickety Stairway to SQL Server Data Mining, Part 14.2: Writing a Bare Bones Plugin Algorithm. In order to display rudimentary results in a single node in the Generic Content Viewer by the end of that article, it was necessary to return trivial or dummy values for many of the 18 methods in that class which were marked Must Override. The first bit of good news is that we’ve already introduced GetStringNodeProperty, GetDoubleNodeProperty and GetNodeDistribution, the methods responsible for assigning the values of familiar columns from SSDM’s common metadata format, like NODE_DESCRIPTION, NODE_RULE, MARGINAL_RULE, NODE_PROBABILITY, MARGINAL_PROBABILITY, NODE_DISTRIBUTION, NODE_SUPPORT, MSOLAP_MODEL_COLUMN, MSOLAP_NODE_SHORT_CAPTION and NODE_CAPTION. The second piece of good news is that debugging AlgorithmNavigationBase is much simpler than debugging AlgorithmBase, where most of the number-crunching occurs. It is several orders of magnitude easier than debugging AlgorithmMetadataBase, where errors can be difficult to track down since they cause SQL Server Analysis Services (SSAS) to skip loading faulty algorithms when the service starts up. 
There are some pitfalls to constructing a node hierarchy out of your mining results, however, which can still be tricky to overcome.

…………Debugging of this class is much easier in part because we simply refresh the results in the Visual Studio GUI or SQL Server Management Studio (SSMS) in order to trigger breakpoints set here, whereas in AlgorithmBase or AlgorithmMetadataBase it is often necessary to reprocess mining models or restart the service. Restarts are still necessary after you deploy changes to the code, but not if you’re simply stepping through the results again. Expanding a closed node in the Generic Content Viewer may also cause various routines in AlgorithmNavigationBase to be called, which will activate any breakpoints you’ve set there in Visual Studio – assuming you’ve set them as prescribed in the last article. One of the more common errors you may see while debugging SSDM navigation may be worded like this: “Execution of the managed stored procedure GetAttributeValues failed with the following error: Exception has been thrown by the target of an invocation. The specified marginal node cannot be found in the mining model ‘PluginModel’.” The sentence indicates that the culprit may be an inappropriate choice of data mining viewer types, which are defined when the service starts by the GetViewerType routine of AlgorithmMetadataBase. I encountered this frequently while debugging this project because I initially returned a value of MiningViewerType.MicrosoftNeuralNetwork in that routine. The plug-in we developed a couple of tutorials ago merely returns a few global statistics like skewness, kurtosis and excess kurtosis, so it is not surprising that the neural net viewer would be a poor choice. Curiously, any attempt on my part to rectify it since that rookie mistake by substituting a different MiningViewerType enumeration value in GetViewerType has produced a more serious GetAttributeValues error. 
The text is the same except for the final line, which instead mentions the name of our practice plug-in algorithm at the end: “The targeted stored procedure does not support ‘Internal_Name_For_My_Algorithm’.” It apparently takes a little more work to change the viewer type of an algorithm once it’s been set, at least in some cases; it’s not a problem I’ve solved yet, but one plug-in developers should be wary of. It proved to be merely an inconvenience for the purposes of this series, since selecting Generic Content Viewer from the dropdown list in the Visual Studio GUI or SSMS works without errors whenever I return the original value of MicrosoftNeuralNetwork. Whenever I returned results in a new window in one of those tools I would receive the first GetAttributeValues error, then simply selected the Generic Content Viewer, which is the only one we need to worry about in a bare bones plug-in like this.

…………Developers might notice that there is no GetAttributeValues member in the object model or the DMPluginWrapper class it is derived from, via the means outlined in A Rickety Stairway to SQL Server Data Mining, Part 14.1: An Introduction to Plug-In Algorithms. Nor does either one expose the GetAttributeScores routine, which is mentioned in some of the arcane error messages SQL Server occasionally returns when processing the nine algorithms included with the product, which we covered much earlier in this series. These are internal routines within the SSAS process, msmdsrv.exe, which we can’t assign breakpoints to in Visual Studio, let alone debug and recompile. By good fortune, I stumbled across a list of 20 such undocumented internal routines in Jeannine Takaki’s invaluable TechNet post A Guide to the (Undocumented) System Stored Procedures for Data Mining, at the last minute while writing up this article. She explains the uses of each in detail and how to use the CALL syntax on them in Data Mining Expressions (DMX) code. GetAttributeValues and GetModelAttributes are the only two undocumented routines on the list which can be used with any one of the nine out-of-the-box algorithms. GetAttributeScores applies specifically to neural nets and Linear Regression. Some of the routines are specific only to the two Microsoft-supplied clustering algorithms, such as GetClusterCharacteristics, GetClusterDiscrimination, GetClusterProfiles and GetClusters, whereas ARGetNodes, GetItemsets, GetRules and GetStatistics only apply to the Association Rules algorithm. Linear Regression and Decision Trees likewise are the only data mining methods which make use of CalculateTreeDepth, DTAddNodes, DTGetNodeGraph, DTGetNodes and GetTreeScores, while GetAttributeCharacteristics, GetAttributeDiscrimination, GetAttributeHistogram and GetPredictableAttributes can only be used with Naïve Bayes mining models. 
I have yet to experiment with them myself, but they all seem to be related to the retrieval of mining model results, which means you may encounter them when developing plug-ins that use the corresponding mining viewer types.

…………It is imperative to be conscious of the order in which SSDM triggers the 18 mandatory methods in AlgorithmNavigationBase when you retrieve the mining results in the GUI. The sequence begins in the GetNavigator routine of AlgorithmBase, which instantiates a new instance of AlgorithmNavigationBase, which in turn calls any code you’ve included in the constructor. In my case, all the New method does is set a reference to a class-scoped instance of the algorithm and a Boolean which indicates whether or not the navigation operation is being performed on a data mining dimension. I cannot verify that the rest of the sequence is the same for all mining models or all algorithms, but to date, I have seen SSDM invariably trigger the GetCurrentNodeID routine next. The next seven calls are to routines which set either the type or the name of the node: GetNodeType, GetNodeUniqueName, GetUniqueNameFromNodeID, GetNodeType again, a single call to GetStringNodeProperty to assign the NODE_CAPTION value, then GetNodeUniqueName and GetUniqueNameFromNodeID once more. I’m not sure what the purpose of repeating these calls to GetNodeType, GetNodeUniqueName and GetUniqueNameFromNodeID is; I only know that it occurs. The code for these routines is fairly trivial. GetNodeUniqueName has no parameters and simply calls GetUniqueNameFromNodeID, which converts the NodeID integer parameter supplied by SSDM into a string value. GetNodeIdFromUniqueName performs the same function as GetUniqueNameFromNodeID, except in reverse; in my example, I took the NodeUniqueName supplied by SSDM as a parameter to the function and converted it to a 32-bit integer. I suppose other plug-in developers might have a genuine need to develop a much more complex naming scheme than in my trifling example of this class (which can be downloaded here), but for our purposes, there’s no real reason to complicate things. 
GetCurrentNodeID is likewise called frequently during the retrieval of mining results, but only returns the value of the CurrentNode, which is a class-scoped integer that identifies the node the navigation class is currently assigning the values for. In the implementations I’ve seen so far, such as the tutorial that founding SSDM developer Bogdan Crivat included in the plug-in software development kit (SDK) and Microsoft Business Intelligence with Numerical Libraries: A White Paper by Visual Numerics, Inc., the value of the CurrentNode is not set in this routine, merely returned. So how do we direct SSDM to operate on a different node – or for that matter, determine the number and order of the nodes? That doesn’t occur in GetNodeType, which merely returns a value of the NodeType enumeration to assign the NODE_TYPE value we’ve seen returned throughout this series in SSDM’s common metadata format. You might need to know the number of the node in order to assign the proper value, which can be any one of the following: AssociationRule; Cluster; CustomBase; Distribution; InputAttribute; InputAttributeState; Interior; ItemSet; Model; NaiveBayesMarginalStatNode; NNetHiddenLayer; NNetHiddenNode; NNetInputLayer; NNetInputNode; NNetMarginalNode; NNetOutputLayer; NNetOutputNode; NNetSubNetwork; None; PredictableAttribute; RegressionTreeRoot; Sequence; TimeSeries; Transition; Tree; TSTree; and Unknown. In other words, we’d assign None, Unknown or one of the 25 NODE_TYPE values we’ve seen often throughout this series. In my implementation, I performed a Select Case on the value of CurrentNode and set a type of Root when it equaled zero and InputAttribute in all other cases.
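To make the naming and typing routines concrete, here is a minimal standalone sketch of the logic described above. It does not reference DMPluginWrapper at all: the SketchNodeType enumeration is a hypothetical stand-in for the real NodeType enumeration (its Root member simply follows the wording used above), and the function signatures are assumptions chosen for illustration rather than the actual overrides.

```vbnet
Imports System
Imports System.Globalization

Module NodeNamingSketch
    ' Hypothetical stand-in for the real NodeType enumeration; only the
    ' two values this sketch needs are declared here.
    Enum SketchNodeType
        Root
        InputAttribute
    End Enum

    ' Convert the integer node ID into the string used as the unique name.
    Function GetUniqueNameFromNodeId(ByVal nodeId As Integer) As String
        Return nodeId.ToString(CultureInfo.InvariantCulture)
    End Function

    ' The reverse conversion: parse the unique name back into a 32-bit integer.
    Function GetNodeIdFromUniqueName(ByVal uniqueName As String) As Integer
        Return Integer.Parse(uniqueName, CultureInfo.InvariantCulture)
    End Function

    ' Node 0 is treated as the root; every other node is an input attribute.
    Function GetNodeType(ByVal currentNode As Integer) As SketchNodeType
        Select Case currentNode
            Case 0
                Return SketchNodeType.Root
            Case Else
                Return SketchNodeType.InputAttribute
        End Select
    End Function

    Sub Main()
        ' Walk a root plus two children and show the round trip for each.
        For nodeId As Integer = 0 To 2
            Dim name As String = GetUniqueNameFromNodeId(nodeId)
            Console.WriteLine("{0} -> {1} -> {2}", name, GetNodeIdFromUniqueName(name), GetNodeType(nodeId))
        Next
    End Sub
End Module
```

A more elaborate naming scheme would only need to keep the two conversion functions as exact inverses of each other.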

…………Decisions already have to be made based on the CurrentNode value, yet we have not yet assigned it in code. As mentioned in the last couple of tutorials, one of the trickiest aspects of debugging AlgorithmBase and AlgorithmMetadataBase is that calls to methods appear to come out of thin air, because they originate within msmdsrv.exe. It is a different expression of the same problem embodied in the hidden calls to undocumented methods, like GetAttributeValues and GetAttributeScores. In AlgorithmNavigationBase, this is manifested in apparently uncontrollable calls to the 18 mandatory methods, directed by msmdsrv.exe, which seem to leave developers without any well-defined place to specify the very node structure these routines operate on. That is not to say that these method calls are random; msmdsrv seems to follow a fairly consistent pattern, in which the calls listed above are followed by an invocation of GetParentCount, then GetNodeAttributes. After this, GetNodeUniqueName is usually followed by another call to GetUniqueNameFromNodeId, then both are repeated again, followed by yet another call to GetNodeType. The NODE_CAPTION is typically set again in GetStringNodeProperty for some reason, followed by further calls to GetNodeUniqueName and GetUniqueNameFromNodeId. SQL Server then invokes GetChildrenCount and GetParentCount, followed by three calls to GetStringNodeProperty in which the NODE_DESCRIPTION, NODE_RULE and MARGINAL_RULE are set; NODE_PROBABILITY and MARGINAL_PROBABILITY are then set in two separate calls to GetDoubleNodeProperty; the structure of the NODE_DISTRIBUTION table is then set in a single call to GetNodeDistribution, followed by alternating calls to GetDoubleNodeProperty and GetStringNodeProperty to set the NODE_SUPPORT, MSOLAP_MODEL_COLUMN, MSOLAP_NODE_SCORE and MSOLAP_NODE_SHORT_CAPTION. It was trivial to set the values of these in Post 14.2, when we were only dealing with a single node. 
If we’re dealing with multiple nodes, we’d need to insert Select Cases on the CurrentNode value to assign the correct values of these columns to the appropriate nodes, but it wouldn’t normally be suitable to change the value of CurrentNode itself here. After this, SSDM usually calls LocateNode twice, which merely invokes ValidateNodeID, i.e. the routine where you’d embed any custom code to authenticate your node structure; in my algorithm, I simply added code to throw an error if the CurrentNode value exceeded the number of attributes. Somewhere in this vicinity, SSDM will call GetChildrenCount and sometimes MoveToChild, as well as GetParentNodeID and MoveToParent in some cases. The key to constructing your node network is to correctly specify the cases in which those particular routines are hit; there is no method that simply declares the size and shape of the whole network explicitly. After this, SSDM will usually call GetCurrentNodeID and repeat this whole sequence over again until it reaches the final node – which it may never do, if you don’t specify the node structure correctly.

…………In a typical program, you’d expect to set a fixed number of nodes, perhaps in a class-scoped multi-dimensional array or collection that also determined the structure of the relationships between them. In SSDM plug-ins, however, the structure is specified at almost primordial levels, through calls to methods that determine the counts of each node’s parents and children. The numbers returned by GetParentCount and GetChildrenCount in turn determine how many times SSDM will iterate through MoveToParent and MoveToChild respectively. This group of methods also includes GetChildNodeId and GetParentNodeID, in which you merely return the parameter value supplied by SSDM, as far as I can tell. In my implementation, I merely wanted to return a root node with global statistics, followed by two child nodes representing two columns of input data I supplied to my mining model. Since the root node has no parents, I returned zero for GetParentCount when the CurrentNode was equal to zero, i.e. the unique index for the single root node; since the two input attributes have just one parent, I returned a value of one in all other cases. In GetChildrenCount, however, I returned a value equal to the number of input attributes when the CurrentNode matched the root node. Since the root node is the only parent, I returned zero when the CurrentNode is equal to any other value, since these are input attributes, which have no children in this case. The CurrentNode value is only changed in one place in this trifling plug-in, in the MoveToChild routine, where it is increased by one in each iteration. The number of iterations will be equal to the value assigned to the single root node in GetChildrenCount. In my plug-in, MoveToParent is never invoked because the only parent specified in GetParentCount has already been passed once, but it may be triggered in structures that require more complex implementations of GetParentCount and GetChildrenCount. 
This means of specifying the node structure is so basic that simple errors in your logic can cause this sequence of method calls to repeat itself indefinitely, until you receive a warning from SSMS that the XMLA query timed out. If you plan to develop plug-ins, expect to see a lot of these. Also expect to set aside more time than usual for the code that specifies your node structure, since it is done in an unconventional way that is not easy to control. I would prefer to deal with explicit definitions of those structures in old-fashioned class-scoped arrays or collections, which might be easier to manage when dealing with particularly complex node setups; yet perhaps there are sound reasons for the way Microsoft implemented this, maybe as a performance enhancement of some kind. Just keep in mind that most of the time you spend in AlgorithmNavigationBase will be devoted to specifying your node setup. Most of its methods are trivial, sometimes to the point of merely returning the values SSDM supplies in function parameters. Likewise, even the most complex node setups may not require many lines of code, but that doesn’t mean they won’t be a challenge to arrange properly. It is a good idea to have a clear picture of the structure you want beforehand, then to keep a close eye on the conditional logic in GetChildrenCount and GetParentCount, as well as on the way in which you increase the value of CurrentNode or any other index placeholder variable you might use. Expect to iterate through the sequence of method calls outlined above many times during debugging before you get it right; it is not a bad idea to set breakpoints in many of them keyed to the Hit Count or Condition (which can be set in Visual Studio by right-clicking on the breakpoints).
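The root-plus-children arrangement described above can be simulated outside of SSAS. The following is only a sketch under stated assumptions: SketchNavigator is a hypothetical class that imitates a handful of the navigation methods without inheriting from AlgorithmNavigationBase, and the Traverse driver is my own guess at a simplified caller, not a reproduction of what msmdsrv.exe actually does.

```vbnet
Imports System
Imports System.Collections.Generic

Module NodeStructureSketch
    ' Hypothetical navigator: one root (node 0) plus one child per input attribute.
    Class SketchNavigator
        Private ReadOnly InputCount As Integer
        Private CurrentNode As Integer = 0

        Sub New(ByVal inputAttributeCount As Integer)
            InputCount = inputAttributeCount
        End Sub

        ' The current index is only returned here, never changed.
        Function GetCurrentNodeId() As Integer
            Return CurrentNode
        End Function

        ' The root has no parent; every input attribute has exactly one.
        Function GetParentCount() As Integer
            If CurrentNode = 0 Then Return 0
            Return 1
        End Function

        ' Only the root has children, one per input attribute.
        Function GetChildrenCount() As Integer
            If CurrentNode = 0 Then Return InputCount
            Return 0
        End Function

        ' The single place where the node index advances.
        Sub MoveToChild()
            CurrentNode += 1
        End Sub

        ' Reject any index that falls outside the root plus its children.
        Sub ValidateNodeId(ByVal nodeId As Integer)
            If nodeId < 0 OrElse nodeId > InputCount Then
                Throw New ArgumentOutOfRangeException("nodeId")
            End If
        End Sub
    End Class

    ' Assumed, simplified caller: visit the root, then step to each child once.
    Function Traverse(ByVal inputCount As Integer) As List(Of Integer)
        Dim nav As New SketchNavigator(inputCount)
        Dim visited As New List(Of Integer)
        visited.Add(nav.GetCurrentNodeId())
        Dim children As Integer = nav.GetChildrenCount()
        For i As Integer = 1 To children
            nav.MoveToChild()
            nav.ValidateNodeId(nav.GetCurrentNodeId())
            visited.Add(nav.GetCurrentNodeId())
        Next
        Return visited
    End Function

    Sub Main()
        ' Prints the visited node IDs for a root with two input attributes.
        Console.WriteLine(String.Join(", ", Traverse(2)))
    End Sub
End Module
```

Because the child count is read once at the root and bounds the loop, this caller always terminates; a mistake such as returning a nonzero child count for every node is the kind of logic error that would keep a real traversal cycling until the XMLA timeout.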

…………As Crivat points out in the tutorial he wrote for the SDK, there may be cases in which a single node may have multiple parents, such as tree structures that represent graphs, but these instances are too extraordinary to warrant a full discussion. I should at least mention MoveToNextTree, a mandatory method Microsoft included to enable unusual structures with more than one root node. As the help file included with the SDK states, “Positions the navigator to the next content tree, if your algorithm’s content is laid out as multiple trees…Usually, all the content can be represented as a single tree and this function should return false. It is useful to implement this function when your content graph is disjoint, to ensure that all nodes can be traversed in a content query.” As I’ve said many times in this series and in others like An Informal Compendium of SSAS Errors, troubleshooting Analysis Services is sometimes like exploring deep space with the crew of the Enterprise, since you may be boldly going where no man has gone before. The MoveToNextTree method is another one of those features of SSAS for which there is apparently no documentation anywhere on the planet aside from the single line of code in sample files Crivat includes with his tutorials, and a single brief C++ example at the MSDN webpage A Tutorial for Constructing a Plug-in Algorithm. The latter doesn’t do much, but says “This function sets _iIDNode to be the root of the next tree in the sequence. Note that if we are in browser mode, there will never be a next tree.” The only useful documentation I could find is the sentence quoted above from the .chm file. Undaunted, I decided to experiment by simply returning a value of True in all cases, which will create an infinite loop that eventually triggers the XMLA timeout warning. The code in Figure 1 worked because it included a limit on the number of times the routine returns True. 
Each time MoveToNextTree returns that value, it not only iterates through the sequence of method calls we discussed earlier, but does so for each node in that structure. Intrepid programmers with a need for disjoint graphs (i.e. sets with no common members) could add conditional logic to the other methods we’ve already discussed to return different values, depending on which of the separate tree structures is being iterated through. In my case, the code below merely added three nodes under the root, on the same level as the other two inputs, although I suppose I could have included conditional logic in other routines to create secondary roots or the like.

Figure 1: Some Sample MoveToNextTree Code

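' The Static counter keeps its value between calls to MoveToNextTree

Static TreeCount As Int16

' report up to three additional trees, then stop so the traversal can end

If TreeCount < 3 Then

TreeCount = TreeCount + 1

Return True

Else

Return False

End If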
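To see why the limit in Figure 1 matters, the bounded counter can be exercised in isolation. This is a minimal sketch, assuming a hypothetical TreeCursor class and a simple calling loop of my own devising; it uses an instance field in place of the Static local so that each cursor starts counting from zero, and it makes no claim about how SSAS itself drives the method.

```vbnet
Imports System

Module NextTreeSketch
    ' Hypothetical cursor that reports a limited number of additional trees.
    Class TreeCursor
        Private ReadOnly MaxExtraTrees As Integer
        Private TreeCount As Integer = 0

        Sub New(ByVal extraTreeLimit As Integer)
            MaxExtraTrees = extraTreeLimit
        End Sub

        ' Returns True until the limit is reached, then False from then on.
        Function MoveToNextTree() As Boolean
            If TreeCount < MaxExtraTrees Then
                TreeCount += 1
                Return True
            Else
                Return False
            End If
        End Function
    End Class

    ' Assumed caller: keeps asking for another tree until told there are none.
    Function CountExtraTrees(ByVal extraTreeLimit As Integer) As Integer
        Dim cursor As New TreeCursor(extraTreeLimit)
        Dim extraTrees As Integer = 0
        While cursor.MoveToNextTree()
            extraTrees += 1
        End While
        Return extraTrees
    End Function

    Sub Main()
        ' With a limit of three, the loop ends after three extra trees.
        Console.WriteLine(CountExtraTrees(3))
    End Sub
End Module
```

If MoveToNextTree returned True unconditionally, the While loop above would never exit, which is the standalone equivalent of the XMLA timeout described earlier.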

…………The remaining functionality in AlgorithmNavigationBase all pertains to means we have yet to discuss for supplying values for columns in SSDM’s common metadata format for a particular node. For the sake of brevity, I left a discussion of GetNodeAttributes out of Post 14.2, even though it’s relatively inconsequential. It merely specifies the values assigned to the ATTRIBUTE_NAME column by supplying the names already assigned to your attributes, which in my case correspond to mining model columns labeled “InputStat” and “SecondInputStat.” All you need to do is supply the index of the attribute in your AttributeSet to the return variable, which is an array of unsigned integers. To create a comma-separated list of attributes associated with a particular node, return more than one value in the array. This week’s tutorial also features a more complex implementation of the NODE_DISTRIBUTION table than the one returned for a single node a couple of posts ago. The Select Case returns the marginal stats for the whole model when the CurrentNode is equal to the index of the root, but numbers specific to the InputStat or SecondInputStat column for the two child nodes. First I add the marginal stats for the appropriate column to a collection of AttributeStatistics objects, which in turn specifies the values for the first few rows of its NODE_DISTRIBUTION table. The real difference between this and the code we used a few weeks ago is that we’re also including three custom NODE_DISTRIBUTION rows, by adding three extra AttributeStatistics objects whose StateStatistics objects have custom values. First I instantiate the new AttributeStatistics objects, then the three StateStatistics objects which are added to them at the end of the Case statement. The VALUETYPE for each custom NODE_DISTRIBUTION row is set using the MiningValueType enumeration, as depicted in Figure 2. 
Immediately after that, I assign the ATTRIBUTE_VALUE to either the skewness, kurtosis or excess kurtosis value we associated with that attribute in previous tutorials. I’ve left the NODE_SUPPORT, NODE_PROBABILITY and VARIANCE blank in these cases, but set the ATTRIBUTE_NAME (which should not be confused with the column of the same name which is one level higher in the hierarchy of SSDM’s common metadata format) using the NodeID property. Ordinarily it might also be a good idea to add logic to this routine to do conditional processing based on the NODE_TYPE, but I didn’t want to complicate this tutorial further by nesting Select Cases and other such statements inside each other.
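Since GetNodeAttributes only returns an array of attribute indexes, its logic is easy to sketch on its own. The function below is a hypothetical standalone version with an assumed signature, not the real override: it presumes that child node N maps to attribute index N minus one, and the choice to list every attribute on the root node is purely my own illustration of returning more than one value.

```vbnet
Imports System

Module NodeAttributesSketch
    ' Hypothetical: returns the attribute indexes associated with a node.
    ' Node 0 (the root) lists every attribute; each child lists only its own.
    Function GetNodeAttributes(ByVal currentNode As Integer, ByVal attributeCount As Integer) As UInteger()
        If currentNode = 0 Then
            ' More than one value in the array yields a list of attributes.
            Dim indexes(attributeCount - 1) As UInteger
            For i As Integer = 0 To attributeCount - 1
                indexes(i) = CUInt(i)
            Next
            Return indexes
        End If
        ' Assumed mapping: child node N corresponds to attribute index N - 1.
        Return New UInteger() {CUInt(currentNode - 1)}
    End Function

    Sub Main()
        ' Show the index list for the root and for each of two children.
        For nodeId As Integer = 0 To 2
            Console.WriteLine("Node {0}: {1}", nodeId, String.Join(", ", GetNodeAttributes(nodeId, 2)))
        Next
    End Sub
End Module
```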

Figure 2: Adding Custom Rows to the NODE_DISTRIBUTION Table

Case Else

Dim TempStats As AttributeStatistics() = New AttributeStatistics(3) {}

TempStats(0) = CurrentAlgorithm.MarginalStats.GetAttributeStats(CurrentNode - 1)

TempStats(1) = New AttributeStatistics

TempStats(2) = New AttributeStatistics

TempStats(3) = New AttributeStatistics

Dim SkewnessState, KurtosisState, ExcessKurtosisState As New StateStatistics()

SkewnessState.ValueType = MiningValueType.Other

KurtosisState.ValueType = MiningValueType.Coefficient

ExcessKurtosisState.ValueType = MiningValueType.Intercept

SkewnessState.Value.SetDouble(CurrentAlgorithm.SkewnessKurtosisClassArray(CurrentNode - 1).Skewness)

KurtosisState.Value.SetDouble(CurrentAlgorithm.SkewnessKurtosisClassArray(CurrentNode - 1).Kurtosis)

ExcessKurtosisState.Value.SetDouble(CurrentAlgorithm.SkewnessKurtosisClassArray(CurrentNode - 1).ExcessKurtosis)

TempStats(1).StateStatistics.Add(SkewnessState)

TempStats(2).StateStatistics.Add(KurtosisState)

TempStats(3).StateStatistics.Add(ExcessKurtosisState)

' we have to set the ATTRIBUTE_NAME string that appears next to these stats in the parent AttributeStats object, for whatever reason

TempStats(1).Attribute = AttributeSet.Unspecified

TempStats(1).NodeId = "Skewness"

TempStats(2).Attribute = AttributeSet.Unspecified

TempStats(2).NodeId = "Kurtosis"

TempStats(3).Attribute = AttributeSet.Unspecified

TempStats(3).NodeId = "Excess Kurtosis"

Return TempStats

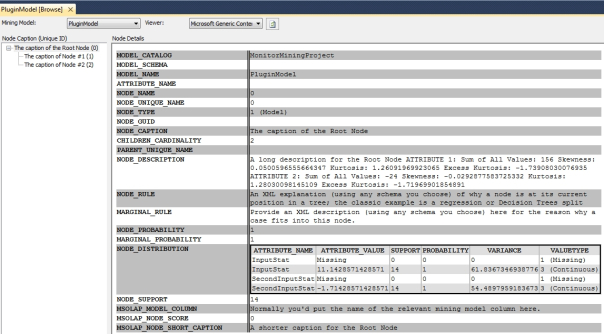

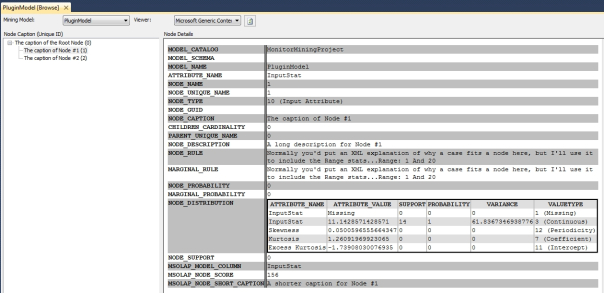

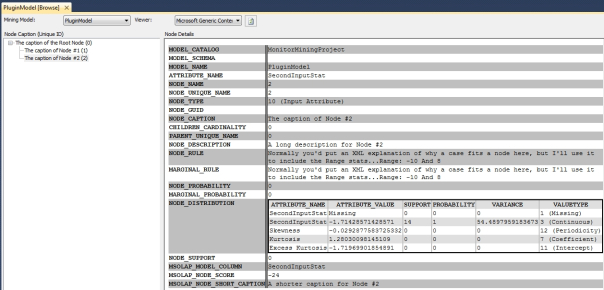

…………The updated code I’ve made available here produced the three nodes depicted in Figures 3 through 5. The first NODE_DISTRIBUTION table includes the marginal stats for the whole model, plus some values for NODE_CAPTION, NODE_DESCRIPTION and other such columns that we assigned in GetDoubleNodeProperty and GetStringNodeProperty a couple of tutorials ago. Note how the NODE_DISTRIBUTION tables for the second and third figures include custom rows featuring the values for the skewness, kurtosis and excess kurtosis associated with the column mentioned in the ATTRIBUTE_NAME column (the one at the top of each figure, not in the NODE_DISTRIBUTION tables). I have yet to solve the mystery of why the VALUETYPE for the skewness rows is set to Periodicity, when the value supplied in code is MiningValueType.Other, but that is insignificant at this point. The mystery of how the Generic Content Viewer columns are populated in plug-in algorithms is essentially solved; would-be plug-in writers need only decide what they want to appear in them, rather than worrying about how to get the values in there. Only a few enigmas remain in the attic at the top of this Rickety Stairway, such as how to use the Predict method, advanced functionality like custom mining functions and Predictive Model Markup Language (PMML). After those four plug-in tutorials are finished, we’ll unravel one final mystery to end the series, how to write custom data mining viewers. Discussion of GetNodeIDsForCase, a specialized AlgorithmNavigationBase method, will be deferred until then because it implements drillthrough to mining model cases, which can’t be done in the Generic Content Viewer. 
Since the more useful and attractive mining viewers that ship with the product depend on the same values as the Generic Content Viewer, it would be fairly easy to upgrade this sample AlgorithmNavigationBase code to create structures that are compatible with them, such as hierarchies that can be displayed by the Decision Trees Viewer. It wouldn’t be child’s play and the code would be lengthy enough to unduly clutter this series, but once you grasp the basic principles of how to navigate through the appropriate structure, upgrading this skeleton code into custom algorithms that can be displayed in the out-of-the-box mining viewers becomes feasible. The last tutorial in this series will address scenarios where those viewers don’t meet the needs of end users, which calls for custom means of displaying data in the Visual Studio GUI or SSMS.