by Steve Bolton

In last week’s edition of this amateur series of self-tutorials on SQL Server Data Mining (SSDM), we covered Clustering, an algorithm with an exceptionally wide variety of uses in comparison to the other eight Microsoft includes with the product. Sequence Clustering may be derived from that data mining method, but it contrasts sharply with ordinary Clustering in that its range of uses and the kind of data it can operate on are greatly constrained. It makes use of the Expectation Maximization (EM) type of Clustering discussed in A Rickety Stairway to SQL Server Data Mining, Algorithm 7: Clustering but includes another processing step to link the groups it creates together by paths. One of the most common uses for it cited in the literature are protein sequencing of DNA[ii], which has a physical but not a temporal order, yet most of the other popular applications of Sequence Clustering imply some kind of ordering by time. It differs from Time Series, the last algorithm we will cover in this series, in that it is well-suited for analyzing data that is separated by undefined, unranked measures of time, whereas Time Series deals with fixed intervals. One of the reasons I have written this series as I learn the ropes is to demonstrate to other SQL Server professionals just how useful this terribly neglected tool can be for those with little statistical training, even when applied to purely relational or OLTP databases. One of the most practical uses that non-OLAP DBAs can put this specific SSDM algorithm to is harvesting the database server and Windows Application, System and Security logs, in order to identify events that might predict a particular adverse event, such as an eventual server failure. It is ideal for tracking events of this sort which often do not occur at predictable, fixed intervals. Another popular use for Sequence Clustering is clickstream analysis, to discover the paths users are most likely to take; faulty webpages can even be identified by looking for paths users are least apt to traverse on a website. Just as Clustering can be used for anomaly detection (which DBAs may find quite useful to identify bad data), Sequence Clustering can point out anomalous, uncommon paths. It is not used for prediction as frequently as Time Series, but it can be directed to predict the next path an object is likely to take.

It can also be used in the market basket scenarios that Association Rules is designed to solve, with the added benefit of preserving ordering of the transaction items. As discussed in A Rickety Stairway to SQL Server Data Mining, Algorithm 6: Association Rules, that particular mining tool is limited to certain specific mining scenarios, requires a quite steep learning curve and is quite limited in the range of data it can accept. Sequence Clustering is not only useful for some of the same scenarios, but shares similar limitations, albeit for different reasons. Technically, Sequence Clustering accepts a larger range of the Content types offered by SSDM than any other algorithm, which represent a crucial distinction in the meaning of each mining model column. For a refresher on that topic, or how to perform basic tasks like setting up a mining project and its structures and models, see post 0.0, post 0.1 and post 0.2. It is the only one of the nine algorithms that accepts the Cyclical and Ordered types without a warning in Books Online (BOL) that the values will simply be treated as Discrete, without any special processing, which is pointless. In this week’s trials on the IO data we’ve been using for examples throughout this series, I was able to assign the Cyclical type to the Minute, Hour and DayOfWeek columns, all of which fit the bill since they repeat themselves at precise intervals. The number of clusters for that mining model increased to six, all with case support over 100, and processing time went up about 20 percent, but the results weren’t as good. There was a marked effect on the clusters the algorithm produced, but it seemed to treat the values for these columns as Discrete; I ended up with results which were cluttered with 60 separate attribute-value pairs for Minute and another 60 for Hour, which told me nothing useful. The documentation on the Cyclical and Ordered types is so thin in BOL and the other mining literature that it is difficult to ascertain exactly how they are processed with Sequence Clustering; even Data Mining with Microsoft SQL Server 2008, the best reference ever published on SSDM, mentions it only once at a glance.[iii] Unlike Association Rules and several other SSDM algorithms, Sequence Clustering can at least make use of the two leading Content types, Continuous and Discrete.

Its major drawback (which almost amounts to a fatal flaw) is that it requires a single input table with a column set to the Key Sequence type, which uniquely identifies each row. As discussed in A Rickety Stairway to SQL Server Data Mining, Part 0.2: How to Dig Out of a Data Mining Cave-In, I’ve had my share of troubles with SSDM’s nested tables, which can be difficult to work with. I solved a lot of those problems in the tutorial on Association Rules, which depends heavily on nested tables, but the topic becomes murkier to the uninitiated with Sequence Clustering, thanks to the special way it handles them. Association Rules has a case key, i.e. a relationship defined in the Data Source View (DSV) a mining structure comes from, which is practically identical to a foreign key in the relational database world. Yet it also has a nested key to identify one measure in a nested table that you want to analyze. This is not the same as the case key, which you don’t even need to include in your mining models. If you learn Association Rules first, then the way Sequence Clustering handles nested tables may be a bit confusing. There is no nested key, only a mandatory Key Sequence column that uniquely defines each row in the nested table. It is the only algorithm which makes use of this Content type, which is like a case key in Association Rules, except it is mandatory. Association Rules allows multiple nested tables with multiple columns in addition to the nested keys, but with Sequence Clustering you’re limited to a single nested table with a mandatory Key Sequence column and a single column for the measure you want to analyze. I’ll refer to the latter as the state column, for lack of a better term. To make matters worse, that measure must use the Discrete Content type. The parent of the nested table can have other measures, but its nested table can only have one, so unless you artificially aggregate all of your other dependent table measures somehow and include them in the parent – which will inevitably decrease the granularity of its information content – then you’re limited to analyzing a gutted shell of your original dataset.

Throughout this series, we’ve been experimenting on data taken from about three days of polling dm_exec_query_stats, dm_io_pending_io_requests, dm_io_virtual_file_stats, dm_os_performance_counters, dm_os_wait_stats and sp_spaceused each minute, in the hopes I could simultaneously learn more about IO bottlenecks while using measures working DBAs might be more at ease with. I later cut back to three denormalized views that left joined dm_io_pending_io_requests, dm_io_virtual_file_stats, dm_os_performance_counters and a parent RecordTable of time measures to sp_spaceused, dm_os_wait_stats and dm_exec_query_stats respectively. Because of the requirement of a single nested table with a single non-key attribute, comparing the performance of Sequence Clustering against the results of other algorithms as we’ve done in the past would be pointless. Since these strictures make Sequence Clustering a world unto itself, the best we will be able to do is compare its parameter settings against each other. This would be an opportune time to take a closer look at certain Discrete measures in our dataset, such as the WaitTypeID column of dm_os_wait_stats and the five columns in dm_exec_query_stats that can be used to uniquely identify particular queries, to see if they are associated with particular bottleneck stats. Our parent RecordTable, however, had mostly time measures, with all of the other measures of IO pressure being included in other nested tables we can’t include; the only measure in the parent which would be of particular interest without them would be MinuteGap, which tracked a mysterious increase in the intervals between runs of the SQL Server Agent job that took care of the data collection process. Furthermore, some of the Discrete measures in the dependent tables are of little use to us, like the wait figures, which occurred at predictable intervals but were accompanied with numerical values – which are not only Continuous, but can’t be included because we’re limited to just one column aside from the case key. The best we would be able to do is to treat each record where a single WaitTypeID passed a particular threshold as a distinct event to track, but we wouldn’t be able to compare it against other waits, or even against other values for the same wait type. This leaves us with the five columns in dm_exec_query_stats that uniquely identify queries: QueryTextID, SQLHandleID, PlanHandleID, QueryHandleID and PlanHashID. I picked the first of these for this week’s trials because I saved the text of the queries during the data collection process, but forgot to collect the particular query plans the other measures point to; theoretically though, if not for that oversight, we could have easily correlated performance bottlenecks with particular query plans. Instead, we will have to look for correlations with particular query texts. Even after all this, we still face another limitation, in that each of these Discrete values must have a 1:1 relationship with the Key Sequence column; sometimes the same query was run multiple times in a single polling interval, so I had to reduce the granularity of the data further by adding a T-SQL query in my DMV that asked a Boolean Yes-No question of whether or not a particular query was run in a polling interval.[iv] The count of how many times it occurred in each interval was probably significant, but we had to discard all of that information. We could just skip the whole mess and run Sequence Clustering without a sequence column, but in that case all we end up doing is creating EM clusters, without all of the extra parameters discussed in the Clustering article.

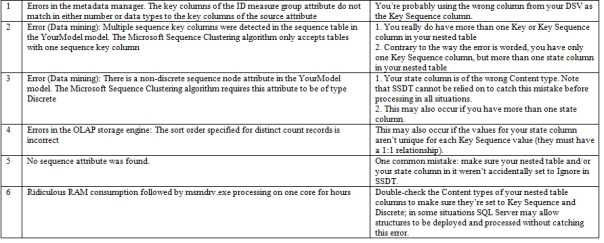

Furthermore, certain errors are common with Sequence Clustering, which can be frustrating to deal with because of their unintuitive behavior and lack of documentation. More than a year after I posted the first installment, my series of posts labeled An Informal Compendium of SSAS Errors remains one of the only centralized sources of information for certain SQL Server Analysis Services (SSAS) errors, if not the only one on the Web for some of them. There are no hits at all on Google, for example, for the third error listed in Figure 1, which mentions the most common errors I have encountered over the course of the last couple years of struggling here and there with Sequence Clustering. I’ve counted at least two situations in which this can occur: first, you may really be using the wrong Content type, which SSDT will catch only part of the time, or you have more than one state column in your structure. The latter mistake can also lead to the second error in the list, even though it is worded to suggest that the problem stems from having more than one Key Sequence column. Some of these errors are badly worded, such as the fourth one, which can occur if the values for your state column aren’t unique for each case key; that can throw off the sort orders of the internal queries SSDM uses, but the ordinary users wouldn’t know that unless they dug deep into the Profiler data or logs. The fifth error occurred to me repeatedly because I was in the habit of copying and pasting old mining structures when creating new ones, just to save the time of entering all of the same information again, but SQL Server Data Tools (SSDT) sets your nested table and its columns to Ignore rather than Predict, PredictOnly or Input when you do this. Another potential glitch is that SSDT sometimes allows projects to be deployed and processed in which the state column is set to some disallowed value, like Discretized or Continuous. This can lead to outrageous performance bottlenecks; for example, I caught this error after some trials I ran using SQLHandleID as my state column crashed msmdrv.exe after consuming 5.4 gigs of RAM and processing endlessly on one core for hours. Somehow, SQL Server had failed to catch the fact that the SQLHandleID column had been set to Continuous rather than the mandatory Discrete value, then deployed and processed the project many times. After I set the attribute to Discrete, it processed within minutes with very little load on the server; once I tried to change it back to Continuous, or to other values like Discretized, SQL Server raised the errors it should have in the first place. The same error also occurred when the Content type of one of my structures was set to Key rather than Key Sequence, which SQL Server should have theoretically disallowed. If you get massive memory consumption while running on one core with this algorithm, check the Content types of your nested table columns to make sure they’re set to Key Sequence and Discrete, because SQL Server may not have caught these mistakes. Some of these are actually glitches in the program itself, which I probably really ought to report to TechNet, maybe after this series is done.

Figure 1: Common Error Messages with Sequence Clustering and How to Fix Them

These errors and other difficulties mean that Sequence Clustering has one of the highest learning curves among the nine algorithms, behind Association Rules. These are the only two mining methods that I have yet to return useful results from immediately at the default values for their parameters. On the other hand, Association Rules is a bit simpler in certain respects, because its inner workings are much less complicated to understand – so if an explicit understanding of how SSDM arrived at a particular conclusion is critical to you, that would be the ideal choice for market basket analysis. The innards of Sequence Clustering are much more difficult to interpret, although they are nowhere near as inscrutable as those of neural nets, as I describe a little more colorfully in A Rickety Stairway to SQL Server Data Mining, Algorithm 5: The Neural Network Algorithm. I find it is easier to explain what little I know about stats in terms of the building blocks divided by their functions, which are brought together in particular combinations suited to the mining problem at hand. In this case, the first major building block is the EM version of Clustering, which we covered in depth last week. Sequence Clustering builds upon it by adding a second phase, in which a transition matrix is created full of probabilities that one cluster will be followed by the next.[v] One of the purposes of this whole series is to show that you don’t need a doctorate in statistics in order to gain valuable information from SSDM with a minimal investment of time, energy, training and server resources, just as you don’t need to write a dissertation on combustion engineering to drive a car. Microsoft is essentially failing to capitalize on a vast market for its data mining tools among moderate and low-skill information workers because of the incorrect assumption that you need to know how to write equations to use it; the indispensable elements are the ability to interpret the results, manipulate the parameters and set up mining models that return useful results with minimal performance impact. For this reason, I won’t get into a discussion of how transition matrices are formed, although I would really like to learn matrix math, since it is highly useful with neural nets, the area of data mining I’m most interested in. I also won’t get into the math of Markov chains, which form the backbone of the sequencing side of the algorithm. I don’t want to put readers to sleep any more than I already have to by including the equations behind transition matrices and Markov models, not when clusters are so easy to visualize using the tools Microsoft has provided, as we shall see.

The inventor of this ubiquitous statistical tool was Andrey Markov (1865-1922), the most notable member of a family of Russian mathematicians. As I’ve noted before, some of the early history of math was speckled with bad judgment, junk science and even bad ethics on occasion, but in Markov’s character was exemplary – just like several other Eastern European mathematicians we’ve mentioned in the last few weeks, who went unsung because they toiled behind the Iron Curtain.. He may have succumbed to the dry allure of that nasty brand of atheism which was an intellectual fad of the European intelligentsia in the late Victorian Age, but he demonstrated bravery by refusing to cooperate with the tsarist government’s investigations of student dissidents following the failed 1908 revolution. He was removed from his office as a result of his principled stand for a good cause.[vi] Among his contributions before this persecution were Markov chains, which are widely used for many statistical purposes today beyond the narrow world of Sequence Clustering, especially in bioinformatics. In the simplest Markov models, the probability of one state leading to another is calculated independently of the states that came before it, but SSDM’s application of it in Sequence Clustering uses nth-order Markov chains, in which Bayesian probabilities (which I introduced in a typically slapdash fashion in A Rickety Stairway to SQL Server Data Mining, Algorithm 1: Not-So-Naïve Bayes) are applied that take into account prior states.[vii] In other words, SQL Server will take into account the possibility that Cluster X will lead to Clusters Y or Z depending on whether it arose from Clusters A or B. As the number of potential prior and future states the algorithm must take into account multiplies, the number of calculations SQL Server must perform grows exponentially rather than linearly; furthermore, the transitions matrices become proportionally sparser and therefore lose significance, thanks to the dreaded “curse of dimensionality” we discussed in the article on neural nets.[viii] This quickly can lead to the bogeyman feared by all data miners: overfitting, i.e. decreased performance in return for cluttered, nonsensical or misleading results.

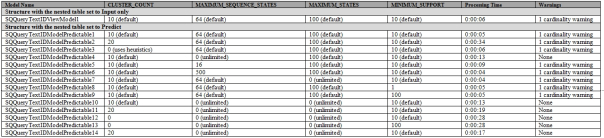

The Data Mining Team’s aforementioned book says that an excessive number of potential states can lead to matrices that are cluttered with too many transitions of low probability, which can be controlled by “storing only those probabilities that are above a certain threshold” but I am uncertain if this is possible using the four parameters provided with Sequence Clustering.[ix] It is certainly possible to control the growth of the transition matrices with them, but they seem to cap its growth by the number of clusters, cluster cases and states that the probabilities are generated from, not by eliminating low probability states after the fact, which is the way I read that comment. CLUSTER_COUNT works just like with the ordinary Clustering algorithm we discussed last week, by capping the number SQL Server generates during the clustering phase of processing. Likewise, the MINIMUM_SUPPORT parameter we’ve seen numerous times in this series sets the minimum number of cases (i.e. rows) needed to create a cluster. Both of these parameters default to 10 as usual, whereas MAXIMUM_STATES defaults to 100, as is typically the case with other algorithms. We’ve already discussed that parameter many times, but there is one slight difference in behavior here: to get rid of cardinality warnings, you must set MAXIMUM_SEQUENCE_STATES to 0 rather than MAXIMUM_STATES to 0, which would normally be the case. This parameter defaults to 64 and is unique to Sequence Clustering because it “Specifies the maximum number of states that a sequence can have,” as BOL puts it so succinctly. It also warns that setting it to more than 100 can lead to cluttered results, i.e. one of the twin horns of overfitting. For whatever reason, Microsoft doesn’t provide any of the other parameters available with ordinary Clustering, which would be helpful in controlling the first phase of processing. The usual MAXIMUM_INPUT_ATTRIBUTES and MAXIMUM_OUTPUT_ATTRIBUTES found with most other SSDM algorithms aren’t available either, perhaps because the sequencing phase doesn’t use feature selection and the clustering exclusively uses the Interestingness Score, which is not affected by these parameters. BOL says with the former that “Feature selection is not invoked; however, you can control the behavior of the algorithm by setting the value of the parameters MINIMUM_SUPPORT and MINIMUM_PROBABILIITY,” but there is no MINIMUM_PROBABILIITY parameter, nor can you add one manually in SSDT without receiving an error during deployment. As usual, we will avoid setting the MODEL_EXISTENCE_ONLY and NOT NULL mining model flags in this week’s trials, since our data doesn’t have any nulls and we don’t want to reduce our data to a dichotomous choice between Missing or Existing states.

As you can see from Figure 2, all of the processing times were quite fast at a wide variety of parameter settings. The most noteworthy change was the elimination of cardinality warnings by setting MAXIMUM_SEQUENCE_STATES to 0. Keep in mind that these results are in no way comparable to the trials we’ve done in prior weeks. We’re only evaluating the 2,586 cases in the master RecordTable and just one state column and the key from the nested table dm_exec_query_stats, not all of its 40 columns. Moreover, the DISTINCT clause I used to make the values for the state column QueryTextID unique undoubtedly eliminated all of the rows where the same query occurred more than once during a polling interval, so we’re not even dealing with the full 1,105,650 rows in that dependent table either. The numerous strictures on Sequence Clustering structures mean we really can’t compare its performance with other algorithms at all, unless we were to edit most of our previous mining models to match the structure used here. It is interesting to note, however, that the twelfth model in the list took longer to retrieve in the GUI than it did to process it, which is unusual. It produced six clusters at first, two of which had just one case apiece, which is where MINIMUM_SUPPORT comes in handy. Theoretically this shouldn’t have happened since the default is supposed to be 10, but setting it to 100 had the expected effect of eliminating the two extra clusters, bring the total to four in the next model.

Figure 2: Performance Comparison by Sequence Clustering Parameters (click to enlarge)

Comparing the usefulness of the results is also difficult, since almost all of our key measures of IO were in other dependent tables we can’t include in our structure. In retrospect, I could have gleaned much more useful information by doing some proper aggregates on our IO measures and included them in the parent RecordTable, but I’m usually reluctant to do that, since reducing the granularity of data directly empties it of information content; furthermore, since we didn’t aggregate our data in previous weeks, we still wouldn’t be able to compare our results to those of other algorithms. Almost all of the measures in RecordTable were related to time, except MinuteGap, which reflected the growth of a strange gap between runs of the Agent job that performed the data collection. Initially it ran every minute as directed, but over the course of three days it slowly crept up to two minutes, then three, then four, without any corresponding warnings or job failures in the server logs. The most useful correlations we could look for with our stripped-down mining structure would be between MinuteGap and specific queries, especially ones that followed each other in a particular order.

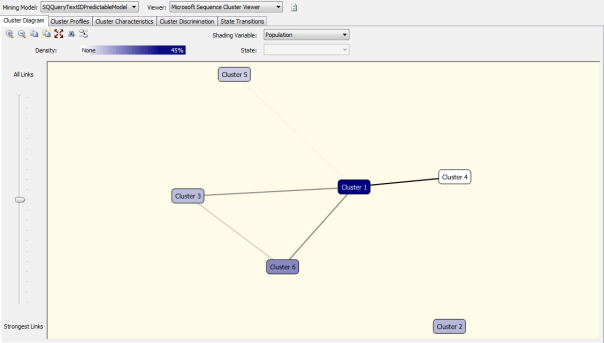

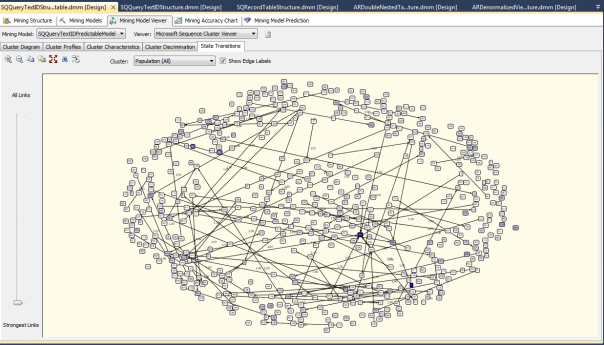

This is exactly what I found, particularly in the thirteenth model. It was cleanly divided into six clusters, four of which had high values for MinuteGap. The relationships between them are depicted in Figure 3, which is a simple Cluster Diagram of the exact same kind as the one introduced in last week’s article. The Cluster Discrimination, Cluster Profiles and Cluster Characteristics tabs all work the same as described there, except that they may have additional values for Start and End to indicate that a particular value leads to the beginning or end of the transition matrix. This week we also have an additional tab, State Transitions. It’s actually quite easy to understand, despite the clutter in Figure 4, which stems merely from the fact that there were so many different queries in our dataset. The number in each rectangle represents the numerical QueryTextID I assigned to each distinct query in order to normalize dm_exec_query_stats at the beginning of these trials. Those with the highest case support are shaded in blue, those in the middle in grey and the least common ones in white. The lines signify a transition from one state to the next, i.e. from one query to the next in the case of our data, while the numbers next to them indicate the probability of the link. The probabilities can be hidden using the ShowEdgeLabels checkbox. The Strongest Links slider can be used to add or remove links from the Sequence Clustering Viewer based on their likelihood, while the Cluster dropdown can limit the clusters depicted.

Figures 3 and 4: Cluster Diagram and State Transitions for Model 13

This model had the clearest and most useful relationships, but the gist with the same with its brethren, all of which seemed to divide clusters along two different lines: low vs. high values for MinuteGap, or transitions that began or ended with different query types. After looking up the texts of the most frequently cited queries, I found that almost all were on msdb or otherwise involved SQL Agent job steps, which seemed to confirm my initial suspicion: the MinuteGap mystery was probably a result of the performance bottleneck caused by the Agent jobs themselves. Furthermore, the Agent-related jobs often divided into two separate categories, one for background Agent processes that I’m usually blissfully unaware of, plus the data collection queries I wrote myself. The first group included queries on dbo.sp_verify_subsystems and sp_help_targetserver, plus regularly polled sys.configurations to see if Agent XPs were enabled in sp_configure, for example. To my surprise, when I checked some of the clusters with the lowest case support that seemed unconnected to these major groupings, I found the text of queries that I had run during the data collection process for activities that had nothing to do with this project at all. SSDM had segregated these queries off in a corner, just as it should have, without any conscious intervention on my part. On top of that, certain stored procedures like sp_help_jobhistory_full and sp_sqlagent_log_jobhistory were grouped together in a way that suggested the many checks I made of the progress of the Agent job in the server logs were segmented as well. I didn’t find the transitions that the algorithm returned as useful, but I think that is mainly because certain repetitive jobs, like the polling job I ran every minute, tended to overshadow other meaningful connections. In all of these models, the most common end point was to the main query in that job, but many of the links other queries had to it almost certainly had artificially high probabilities simply because that query ran every single minute, while they did not. Nevertheless, I did spot some vaguely defined transitions between queries that you might find on a real server, such as one particular Agent background process being followed by another with a fair amount of consistency. I don’t know enough about Agent’s inner workings to state with any certainty that the background queries I saw ought to follow each other, but the data gave me an excellent starting point with which to check into the matter further. This is where much of the untapped potential in SSDM resides, especially for DBAs. It’s not going to perform magic or take DBAs out of the loop, but it can greatly speed up the process of identifying things like possible bottlenecks or relationships between queries that a DBA might not have time to spot, regardless of how seasoned they are. There are simply too many variables out there for any DBA to name, let alone track in their heads, but SSDM can do this kind of grunt work. It can’t make decisions for you, but it can alert people with domain knowledge to items of interest they might not otherwise have spotted. When properly designed, sooner or later SSDM is bound to call attention to an important relationship even an expert in any field would have missed.

Figure 5: Metadata for Sequence Clustering (adapted from Books Online as usual)

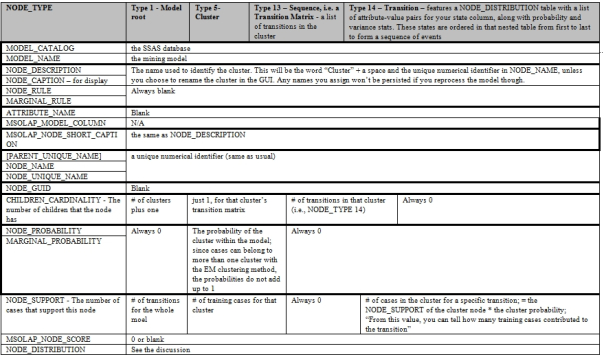

As always, if the SSDT visualization tools do not provide enough detail for you, it is always possible to dig deeper into the raw data the visualizations are built from, using the Generic Content Tree Viewer. As discussed in each previous article, SSDM uses a common metadata format that is flexible enough to represent the disparate output of each of the nine algorithms, which is like comparing apples and oranges; I look at the format like a produce stand, which is capable of holding both apples and oranges side by side, so to speak. The price to be paid for this, however, is a degree of denormalization that relational DBAs probably aren’t accustomed to. The same column names are always used, but their meaning changes in subtle ways between algorithms. They even vary in meaning from one row to the next within each algorithm, depending on the NODE_TYPE value. In the case of Sequence Clustering, we have four of these to take into account, the first of which is the single root of the model at the top of the Generic Content Tree Viewer, as usual. Below that we will find a single NODE_TYPE 5 node for each cluster in our model, plus an additional NODE_TYPE 13 node labeled “Sequence Level for Cluster n,” where n is the maximum number of clusters we have plus one. Each cluster also has one of these sequence nodes beneath it, which represent transition matrices. Each of these transition matrices in turn has a long list of the transitions for the state column, which are represented by type 14 nodes. As usual, the most important information for all of these node types is found in the NODE_DISTRIBUTION table, which is not only nested but varies in meaning from one row to the next depending on its VALUETYPE code. Fortunately, in the case of Sequence Clustering, we’re only dealing with two different values for this flag, 1 for Missing and 4 to indicate a Discrete column, which should be easy to understand for readers who have followed the rest of this series. We must also take into account the ordering of the rows when our NODE_DISTRIBUTION table is within a NODE_TYPE 14 transition node, because the first row will represent the starting state and the following ones successive states, according to BOL. I assume that this means that all of the following rows are ordered from first to last, but I have yet to verify this empirically. In practice, what you’re likely to see when you check inside the Generic Content Tree Viewer are attribute-value pairs for your state column in both the transition matrices and their individual transitions, alongside figures for the probability, variance and count of cases for that particular attribute-value pair. The stats for the columns in your parent table will only be found in the cluster nodes and the model root. To date I have not yet used Data Mining Extensions (DMX) queries to retrieve this information directly from the mining models, but after perusing the Generic Content Tree Viewer, I can see how it might easily turn up useful information on particular transitions. It is easier to use this Viewer than with other algorithms, but the drawback is that there are hundreds of transitions in each cluster’s transition matrix, which would make it difficult to find the specific information you’re looking for. Using a DMX query, however, we would be able to return all of the most likely transitions for a particular QueryTextID.

DMX queries are an advanced topic that we will not delve into unto after we’ve finished surveying all nine algorithms, which we will wrap up next week with Time Series. One of the reasons I have yet to use DMX to find specific transitions in Sequence Clustering models is that there are far too many constraints on the data you can input, which severely crimps the algorithm’s utility. My wishlist for future editions of SSDM now includes upgrades to Sequence Clustering so that users can input more than one nested table, plus analyze more than one state column within each. It would also be much more fruitful if we could at least use the Discretized Content type for other types of columns, or maybe even full-fledged Continuous columns. All of these limitations mean that more often than not, Sequence Clustering ought to be left for the end of a data mining workflow, once you already have quite specific ideas of what kind of events you want to search for in your data. Time Series thankfully has fewer such limitations, although it is limited only to Continuous attributes. Sequence Clustering is typically used for temporal data, but this is apparently not always the case, given that one of the problems it is most commonly applied to is protein sequencing. Time Series is designed specifically with temporal data in mind, although it deals with specific intervals of time rather than events with undefined gaps between them, as Sequence Clustering does. Time Series may have a wider range of uses and fewer limitations than Sequence Clustering, but it would be a mistake to see it is a “better” algorithm, because it is meant to address a complementary set of data mining tasks. The object in data mining is to select the right tool for the job. When investigating data at specific temporal intervals, that tool is usually Time Series, especially if you want to perform the highly valuable but equally risky task of predicting the future, based on the past.

For a decent professional tutorial on this subject, see the AdventureWorks exercise on Sequence Clustering at Microsoft’s Technet website, titled “Lesson 4: Building a Sequence Clustering Scenario” at http://technet.microsoft.com/en-us/library/ms167594.aspx. It is difficult to find a comprehensible lesson on the subject, but this is one of the best available on the Internet.

[ii] A specific example is the National Institutes of Health (NIH) project at Indiana University’s Cloud Computing for Health Research website titled “Sequence Clustering: Pipeling Applications to Classify Biological Sequences,” which can be found at http://salsahpc.indiana.edu/nih/index.php/Sequence_Clustering I ran across a lot of sources that cited protein sequencing as an example of how it can be applied bioinformatics, which is just a fancy buzz word for developing computerized methods for biological analysis. Quite often, it just means applying computers to problems in the health field.

[iii] MacLennan, Jamie; Tang, ZhaoHui and Crivat, Bogdan, 2009, Data Mining with Microsoft SQL Server 2008. Wiley Publishing: Indianapolis.

[iv] In case the exact syntax might illustrate the problem, here’s the full query. The DISTINCT clause is the most important part to keep in mind:

CREATE VIEW [Monitoring].[QueryTextIDView]

AS

SELECT DISTINCT TOP 9999999 ROW_NUMBER () OVER (PARTITION BY 1 ORDER BY RecordID) AS ID, RecordID, QueryTextID

FROM [Monitoring].[dm_exec_query_stats]

ORDER BY ID, RecordID, QueryTextID

[v] IBID., pp. 334-335.

[vi] See the Wikipedia page“Andrey Markov”at http://en.wikipedia.org/wiki/Andrey_Markov

[vii] See the documentation from Books Online, which is available at the MSDN webpage “Microsoft Sequence Clustering Algorithm Technical Reference” at http://msdn.microsoft.com/en-us/library/cc645866.aspx

Also see the Wikipedia page “Markov Chain” at http://en.wikipedia.org/wiki/Markov_chain I am not familiar with Hidden Markov Models or their differences with visible Markov models, but they are apparently pervasive enough that MacLennan, et al. mentioned on pp. 336-337 that SQL Server doesn’t use them.

[viii] Books Online makes the comment about matrix sparsity, but I added the rest. See the Wikipedia webpage “Curse of Dimensionality” at http://en.wikipedia.org/wiki/Curse_of_dimensionality

[ix] p. 336, MacLennan, et al.