by Steve Bolton

In last week’s installment of this series of self-tutorials on SQL Server Data Mining (SSDM), we covered my favorite algorithm, Neural Networks, which is also among the most intricate but productive of the nine data mining methods Microsoft provides. The major drawback is that its inner workings are almost impossible to interpret; it is about as difficult to figure out why a mining model reached a particular conclusion from examining its weighted artificial neurons as it is read a man’s thoughts from a coroner’s report on his cerebellum. This week we’re going to whipsaw into a completely unrelated algorithm, Association Rules, which has characteristics that are almost a mirror image of the properties of Neural Networks. Its inner workings are perhaps easier to understand than any of the other nine algorithms, because it is so simple. Despite its inner simplicity, it is paradoxically more difficult to extract useful information from it because of the steep learning curve required to manipulate its parameters properly. As I have made clear in prior posts, this series is meant to show that an amateur like me can still garner a wealth of useful information from the most under-utilized tool in SQL Server with a minimum of investment in time, energy, server resources and training. In every other algorithm we’ve tested to date (and in my prior experience with the three we have yet to discuss), interesting results have immediately popped out even when the parameters controlling each one were left at their default values, but Association Rules takes a lot of coaxing to extract useful results from. At least in my limited experience, it seems to be more prone to the scourge of data mining, overfitting, i.e. decreased performance in return for cluttered, meaningless or misleading results. For these reasons and others, it is my least favorite among Microsoft’s nine algorithms.

That is not to say that it does not have its uses. It was first developed by chemical engineer Rakesh Agrawal and computer scientists Tomasz Imielinski and Arun Swami in a landmark research paper in 1993, which built on the study of GTE labs researcher Gregory Piatetsky-Shapiro. It may have been foreshadowed by Petr Hájek and Ivan Havel and Metodej Chytil, three Czech researchers working behind the Iron Curtain, who published a similar paper in 1966. In past articles I have written about some sordid episodes in the history of statistics, math and the hard sciences, but in this case, we have people of seemingly sterling character, like Hajek, who was known for resisting the Communist occupiers of Czechoslovakia, and Havel, who was the brother of Vaclav Havel, the famed leader of the nation’s “Velvet Revolution” in 1989.[ii] Either way, it was not popularized until the 1993 article, which makes it “one of the most cited papers in the data mining field.”[iii] There’s apparently quite a bit of ongoing research on this particular mining method, including the development of more advanced refinements like Eclat, FP-growth, GUHA and OPUS that allow for hierarchical categorization, sequence ordering and other uses that the original A Priori method doesn’t handle well. Alternative ways of gauging the interestingness of data have also been invented, including measures of collective strength, conviction and leverage. I can’t comment directly on any of these new variations, because my experience with Association Rules is limited to the version that Microsoft ships with SSDM, which Books Online (BOL) says “is a straightforward implementation of the well-known Apriori algorithm.” My gut feeling, however, is that the algorithm is a hot topic only because it is well-suited for a couple of specific, narrow implementations, like market basket analysis and recommendation engines, which are in high demand right now in the limited field of E-commerce. In fact, this specific scenario was spelled out in the very first sentence in Agrawal, et al.’s article. Like any other tool, it has its uses, but several drawbacks that severely limit its utility in comparison to other algorithms. It is not well-suited to scenarios where the order of items in a particular case of transactions (think of a row in a dependent table joined to a parent table) is important. Association Rules can be used for predictions, but only in a crude way, because it has no ordering and therefore can only refer to specific points in time. Even in data mining there are no crystal balls, so I can’t peek into the future and see if researchers will continue to devote as much attention to this algorithm. My gut feeling, however, is that we’re probably already nearing the top of a bell curve, after which the advantages of refining this particular algorithm will begin to level out. It may continue to be popular for many reasons, such as the fact that its inner workings are very simple to explain, but the very simplicity of this data mining method is what limits its utility and possible refinements so drastically. The shovel was one of the great inventions in human history, but since that time, it has undergone only slight modifications of limited utility. Research into building better shovels has not stopped by any means, but it has topped off – for example, anyone with more than a hundred bucks can purchase a high-tech “crovel” like the one featured on a recent episode of the National Geographic Channel show Doomsday Preppers. That souped-up shovel is a great tool, but its refinements are even more highly specialized, to the point that we now have one geared specifically for end-of-the-world scenarios.[iv] In the same way, research into Association Rules will probably continue but follow the law of diminishing returns, whereas we’ve barely scratched the surface of the potential of other data mining methods like neural nets and Clustering.

Just like a shovel, the basic A Priori version of Association Rules is simple to explain. SQL Server counts all of the cases in your data mining model where certain columns values are found together, which can be a time-consuming process, then creates an itemset for each combination with a count above a certain threshold. Basically, it just counts the different states of your columns to see how frequently they occur in combination with the states of other columns, then tosses out the ones that are sufficiently numerous. You can certain properties to specify how many columns can appear in your itemsets; for example, you can tell SQL Server to only create itemsets with three or four items, i.e. column-value pairs. For example, using the IO data we’ve been working with throughout this series (see post 0.0, post 0.1 and post 0.2 for refreshers on the details of our schema, as well other basics like how to create a project), one of the itemsets returned was labeled “IO Stall = 4439-10428, IOPendingMsTicks = 1-28″ which was supported by 9,053 cases and had an itemset length of two columns, IOStall and IOPendingMsTicks. Throughout this series we have referred to the number of rows processed by SSDM as supporting cases, but in the lingo of Association Rules this simple measure is also sometimes referred to as frequency. Once the itemsets are generated, the algorithm creates a rule by simply calculating the probability (also referred to as confidence in the context of Association Rules) that one will be found with another. One of the more important rules I found, for example, was labeled “IO Stall Read Ms = 15273-78862008 -> Num Of Bytes Read > = 3006464.” Several itemsets can appear on the left-hand side of a rule, but only one in the predictable column listed in the right of the -> arrow figure. The multiplicity of greater than, less than and equals signs in the presence of that arrow can be initially confusing, especially once we find itemsets with more than two members and rules with more than two itemsets on the left side, but users can get acclimated fairly quickly with little training. The only operation that might be a little beyond the ordinary math skills of the Average Joe might be the lift assigned for each itemset and rule. For itemsets, the count of cases is divided by the total number of cases, then the probability is normalized ( i.e. a fancy way of saying that they’re put on a single comparable scale, as you might need to do if you one were comparing ranges of prices for euros, dollars and yen, or disparate rating systems for movies, etc.). With rules, the calculation is a bit more complex, in that a ratio is built by comparing the left-hand itemsets with the predictable result on the right on one hand, against the results on the right-hand when they’re not found in the presence of those itemsets. The comparisons of rules are then normalized, this time using a logarithm. The essential thing for those of us without doctorates in statistics to remember is that the point is to build a way for us to measure the interestingness of the results. This is actually simpler to calculate and easier for laymen to grasp with Association Rules, since it is the only algorithm which doesn’t use the four methods of feature selection discussed in prior posts to compute interestingness. This measure is sometimes referred to as importance in this context. As BOL points out, the simpler way in which Association Rules calculates it means has a drawback in that the associations it makes are less likely to provide relevant new information than the splits produced by Decision Trees, which we discussed a few weeks ago, or for any of the other algorithms for that matter. The structures produced by these calculations are also basic as a scoop or a spade. As BOL puts it, “An association model has a simple structure. Each model has a single parent node that represents the model and its metadata, and each parent node has a flat list of itemsets and rules. The itemsets and rules are not organized in trees, they are ordered with itemsets first and rules next.”

The drawbacks of Association Rules, however, also seem to stem from the advantages of its simplicity. A shovel is a simple, basic tool, but it’s only economical to use it when the holes you want to dig are shallow, or when more advanced tools like back hoes and drills can’t be applied. You could theoretically use a shovel to excavate a gold mine two miles deep into the ground, like South Africa’s TauTona mine, but that would be an exceptionally painful and inefficient way of going about it. Likewise, I seriously doubt Association Rules can be used more efficiently than the other eight SSDM algorithms to unearth interesting relationships buried deep in any big dataset. It might be an appropriate choice when you need quick insight into a small dataset without many unique variables, in which case you don’t have to quarry very deep below the surface of your data, which means that the outlines of the relationships you seek may already be visible. It may also be appropriate when you need to explicitly understand how SSDM arrived at a particular answer, which is the only area in which beats the Neural Networks algorithm hands down. In this sense, Association Rules is so simple even a caveman can do it. Any caveman can also excavate a mineshaft by beating the ground with a stick, but they’re going to exhaust all of their brute strength long before they turn up their first precious gem or ounce of gold. To put it bluntly, that’s essentially what we’re doing with Association Rules: brute force data mining. The process is easy to grasp because it’s so basic, but it also has the drawbacks of any simple, clumsy tool: it takes an awful lot of effort to get any work done. A first-time user might expect that in return for the shallow view of data that Association Rules provides, we would have to expend correspondingly fewer resources, but this is not the case. In fact, you are much more likely to encounter immediate performance problems with this algorithm than any other, in return for a much greater risk of information glut, which together constitutes the twin horns of overfitting, the bogeyman of all data miners. For these reasons, it might be wise to limit Association Rules to preliminary examinations of data, but even for this narrow class of uses, Naïve Bayes does a better job by consuming few server resources in order to return its relatively shallow results. It also has the same innate Content type limitation which hobbles Naïve Bayes, in that it accepts only Discrete and Discretized data. Association Rules is in some ways even simpler to understand than Naïve Bayes, because we don’t have to worry about feature selection, nor is there a statistical mystery about why it returns the results it does. Yet even the simplicity of its inner workings is misleading, because a really steep learning curve is required to master the parameters that control Association Rules. I’ve seen all of the other algorithms immediately return back interesting results with their parameters set to their defaults, but have yet to see this occur with Association Rules. Its simplicity is deceptive in this sense. Excavating a mine with a simple tool like a spade, for example, can quickly turn into a maddeningly complex job. As I’ve said before, I’m an amateur at this, but in the last few years I’ve experimented with it, Association Rules has consistently returned data of far less quality in return for much higher consumption of server resources, on top of a much greater investment in time and energy merely to complete processing at all. I’m sure an expert can point out some specific scenarios where Association Rules might be the preferred tool, but I can’t think of any off the top of my head which are much different than those it was developed for: market basket analysis and recommendation engines, which we’ll explain shortly, after demonstrating how to misapply it to other scenarios. Perhaps it works better on certain data distributions, as Naïve Bayes does. These limitations, however, make it a much more specific but simple digging tool, analogous to a pick instead of an all-purpose shovel for light digging.

One of the points I hope this series of self-tutorials gets across is that any SQL Server professional can make practical use of SSDM without much investment in time, energy, server resources and training. Association Rules is an exception to that rule, because you are likely to experience a baptism by fire that forces you to delve right into the parameters that control the algorithm. Regardless of your skill level you will have to get through it, otherwise you may not get any results back at all. Thankfully, we can skip over the mining flags MODEL_EXISTENCE_ONLY and NOT NULL as usual, since our data has no nulls and we don’t want to reduce our data to just two values, Missing or Existing. There are also two parameters we won’t worry about for now since they’re not relevant to this week’s trials. One of these is AUTODETECT_MINIMUM_SUPPORT, which former members of the Microsoft’s Data Mining Team say “represents the sensitivity of the algorithm used to autodetect minimum support. Setting this value to 1.0 will cause the algorithm to automatically detect the smallest appropriate value of minimum support. Setting this value to 0 turns off autodetection, and the algorithm operates on the actual value of minimum support. This parameter is used only when MINIMUM_SUPPORT is set to 0.0.”[v] I have set MINIMUM_SUPPORT to this value but have yet to see any place to set this particular parameter in SQL Server Data Tools (SSDT). We also won’t use OPTIMIZED_PREDICTION_COUNT, which is a brute force method of limiting the results returned by DMX prediction queries, a topic that will be discussed in this series once we’ve gotten through all nine algorithms. That leaves us seven parameters to control the behavior of Association Rules, the first of which is also a brute force method of limiting the results returned by the algorithm. MAXIMUM_ITEMSET_COUNT essentially takes a hatchet to your data mining results and hacks off any that might appear after your model has finished processing this number of itemsets, regardless of how important they might be. Unfortunately, at its default setting of 200,000, I continually ran into the String Store error described in the Decision Trees post: “File system error: A string store or binary store with a compatibility level of ’1050′ is at the maximum file size of 4 gigabytes. To store additional strings, you can change the StringStoresCompatibilityLevel property of the associated dimension or distinct count measure to ’1100′ and reprocess. This option is only available on databases with a compatibility level of ’1100′ or higher. Physical file: . Logical file: .” I have already recounted the sad story of how Microsoft added a new property in 2012 that allows SQL Server Analysis Services (SSAS) users to circumvent this serious limitation with cubes, but apparently forgot to add the functionality to SSDM. It is also unclear if SSDM is actually breaching this limit, or if some undocumented problem is causing this error message for unrelated reasons, as I explained in that post; in this instance, however, it seems more probably that the limit is actually being hit, given that one of my smaller Association Rules mining structures suddenly grew by 504 megabytes once processing finally succeeded. That occurred after I set the limit as low as 500, which finally rid me of that particular error.

In a previous project, I saw error 7with the phrases “the job completed with failure” and “readdata” repeat themselves ad infinitum, ad nauseum in a Profiler trace until my server crashed during an Association Rules test, but thankfully that didn’t occur during this week’s trials. On one occasion in this project I also ran into an error that informed me “The Association Rules algorithm found no frequent itemsets matching the algorithm parameters for model ARDenormalizedView1Model.” A more persistent and trickier problem to solve was a third error, which sometimes occurred simultaneously with the String Store fault: “The Association Rules algorithm found no rules matching the algorithm parameters for model ARDenormalizedView1Model.” It took a lot of playing with the remaining six parameters to get my models to return any rules at all, which has been par for the course with other projects I’ve tested on SSDM. The one I played with the least was MAXIMUM_SUPPORT, which sets an absolute limit on the case support for any particular itemset when set to more than 1, or as a percentage of all cases when set to less than its default of 1. As BOL says, “This parameter can be used to eliminate items that appear frequently and therefore potentially have little meaning.” This of course depends highly on what you’re digging for in your data mine. If you want to spot frequent relationships, it would be better to leave this parameter alone, but if you want to spot previously unknown ones then tweaking MAXIMUM_SUPPORT may help, assuming you haven’t spotted these relationships yet because they’re relatively infrequent. Eliminating this rule-generating error required a difficult balancing act between two parameters we’ve encountered before in this series, MINIMUM_PROBABILITY and MINIMUM_SUPPORT, and three we have not, MINIMUM_ITEMSET_SIZE, MAXIMUM_ITEMSET_SIZE and MINIMUM_IMPORTANCE. As usual, relationships where the confidence level is below the MINIMUM_PROBABILITY setting or the case count is below MINIMUM_SUPPORT are discarded. The documentation says that the default for MINIMUM_SUPPORT with Association Rules is 0.03, i.e. that an itemset must be included in 3 percent of all cases – yet when you go to set the parameter in SSDT, you will find a text label there saying that the default is actually 0.0. I have yet to verify which one of these conflicting statements is accurate.

The itemset size parameters limit the size of the data returned in a horizontal way, by shrinking the number of columns that can appear in any itemset. For example, if we set MINIMUM_ITEMSET_SIZE.to 2 and MAXIMUM_ITEMSET_SIZE to 3, in the dataset used throughout this series we could theoretically encounter an itemset that contains the three columns MinuteGap, IOStallReadMs and IndexSize, because that is three columns wide. We couldn’t have one that combined Rows, DayOfWeek, NumOfReads and NumOfBytesWritten, since that would be four columns wide, which would be beyond the setting in MAXIMUM_ITEMSET_SIZE. Nor could we have one that combined IOOffset and CounterID alone, since that would be two columns, one less than the setting of MINIMUM_ITEMSET_SIZE. Take care not to set MINIMUM_ITEMSET_SIZE higher than MAXIMUM_ITEMSET_SIZE, as I did once, because no results will be returned. BOL also warns that, “You cannot reduce model processing time by increasing the minimum value, because Analysis Services must calculate probabilities for single items anyway as part of processing. However, by setting this value higher you can filter out smaller itemsets.” From my experience, this is accurate.

Frequently, working with SSAS is a lot like exploring outer space, because you’re bolding venturing out where few men have gone before. For a few examples, see my series on some of the errors you’ll encounter in SSAS for which there is apparently no documentation anywhere on the planet. Documentation for MINIMUM_IMPORTANCE exists, but it is so thin that you can read find it all within a few minutes. There are two sentences referring to it in the last edition of the Data Mining with Microsoft SQL Server 2008, which was written by former members of Microsoft’s Data Mining Team: “Minimum_Importance is a threshold parameter for association rules. Rules with importance less than Minimum_Importance are filtered out.”[vi] A search on Google turns up just 84 hits, of which only maybe four or five are relevant posts which aren’t repeats of each other. One of these is a post in which Jamie MacLennan of the DM Team says that a bug was found in the parameter back in 2006 and that a fix was being prepared for the next service pack in SQL Server 2005, but I haven’t yet found any reference to whether it was fixed or not since then.[vii] The only explanation I found was in another post by MacLennan, who said that “Minimum_Importance is a calculation that further filters rules based on the amount of lift they provide – the purpose is to filter out tautologies, e.g. “Everybody buys milk, so Cookies->Milk is true with 100%”. This rule is not important, since <anything>->Milk would also be 100%.”[viii] I also found some other good explanations of the meaning of the statistical term importance in Association Rules at the Data Mining Forum, but without any direct mention of the parameter.[ix] They say that a little knowledge is a dangerous thing, yet I had no choice but to charge into the fray with this minimal understanding of the parameter, because it quickly became of maximum importance to the success of this week’s trials to get my processing times down using MINIMUM_IMPORTANCE.

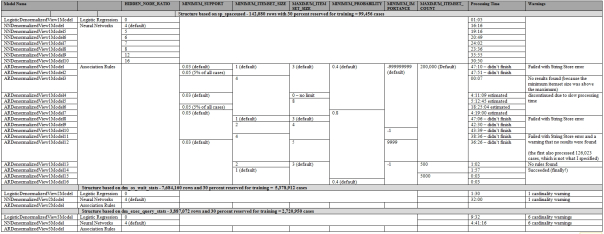

What followed was a process of trial and error, with a lot of the latter. Figure 1 is a summary of this week’s trials on the three views I have built my mining structures from throughout the series, which are taken from about three days of polling dm_exec_query_stats, dm_io_pending_io_requests, dm_io_virtual_file_stats, dm_os_performance_counters, dm_os_wait_stats and sp_spaceused every minute, in order to intentionally cause IO pressure. I had hoped that this would kill two birds with one stone, by familiarizing myself more with a SQL Server topic I don’ t yet know enough about, while providing a familiar reference point for those DBAs who already understand it. As discussed in previous posts, I cut back to three denormalized views that left joined dm_io_pending_io_requests, dm_io_virtual_file_stats, dm_os_performance_counters and a parent RecordTable (which tracked time) to sp_spaceused, dm_os_wait_stats and dm_exec_query_stats respectively. Ideally, Association Rules calls for market basket analysis and nested tables, but I’ll defer discussion of that till the end of this post. For the meantime we’ll stick with mining structures like those we’ve worked with in previous weeks, so that we don’t make apples vs. oranges comparisons. I’ve included some figures from our Logistic Regression and Neural Networks trials to show how processing times vary, although they use different parameters. As you can see, the results of this pioneering public test of these parameters were not positive. Not only did many of the jobs fail to complete, but the processing times were often simply awful whether they completed or not. It took a full day of playing with these parameters over the course of 14 trials to successfully return any results at all. That time would have stretched out across an entire week, if I hadn’t learned a really valuable tip the hard way: when processing an unfamiliar model, always keep a Profiler trace going and calculate how much time it will take in advance, by taking samples of the interval between messages that look like this, “Reading cases: n cases read” and multiplying by the number of cases in your model. You’ll need to click on Figure 1 to enlarge it, because the table contains a gold mine of information on the mining process and the parameters it took to finally get the job done. It doesn’t tell the whole story though. IO pressure was next to nil throughout all 16 trials on the Association Rules models, but when I finally got usable results back on the 14th model, 504 megs of data were suddenly added to the mining structure all at once, which is probably why I was hitting the 4 gigabyte string store limit when MAXIMUM_ITEMSET_COUNT was set much higher than a few hundred or a few thousand. I was recently fortunate enough to get a new 8 gig stick of RAM, otherwise I would have been in serious trouble in this week’s trials, in which the msmdrv process routinely gobbled up between 3 and 5 gigs at a time. My processor continually ran on just one of its six cores during this set of trials, which may indicate that SSDM could benefit from better multi-threading in certain phases of model processing. Perhaps one of the “forbidden parameters” in SSAS (i.e. those that can only be changed by editing the msmdsrv.ini file) could help, but I’m not familiar with them enough yet to try. All in all, this brute force method of data mining was brutal on my poor machine. Strangely, once I finally got results back from the 14th model, the final two processed in just a few tenths of a seconds, with just a couple of megs being added to the database size. I used different parameters in both cases, including multiplying the MAXIMUM_ITEMSET_COUNT by a factor of ten to 5,000, but I suspect that SQL Server simply reused some of the data stored during the processing of the 14th model.

Figure 1: Performance of Association Rules at Various Parameter Settings, Compared to Other Algorithms (click to enlarge)

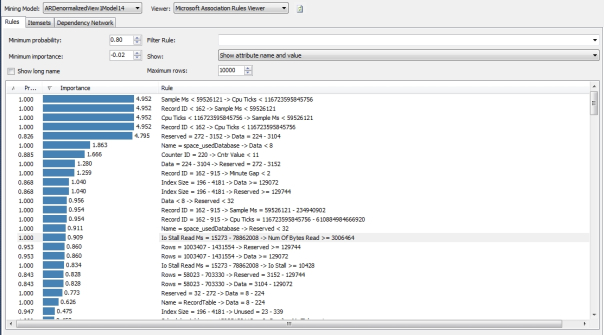

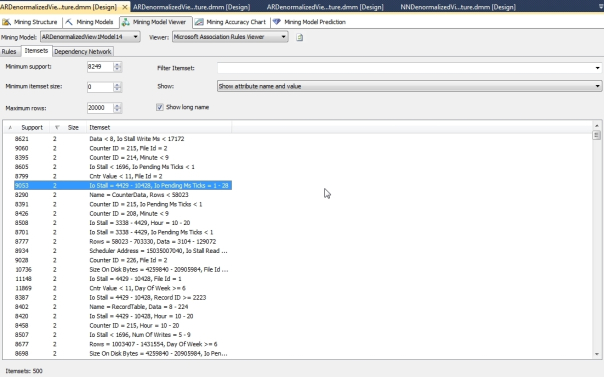

What did we get in return for this large of investment of training, time, server resources and energy? Not much. In Figures 2 and 3 we see the output of the Rules and Itemsets tabs in the Microsoft Association Viewer. It also has a Dependency Network tab, which I found to be a useful starting point in the workflow for sifting through mining results, but we’ve already discussed that in depth in past posts, such as those on Naïve Bayes and Linear Regression. The Minimum Probability and Minimum Importance dropdowns and Maximum Rows control can be used to limit the results in the Rules tab, which can be sorted by Probability or Importance by clicking on the appropriate column header. The Minimum Support, Minimum Itemset Size and Maximum Rows controls serve basically the same purposes in the Itemsets tab, which can be sorted by the Support by number of cases or the Size, by the number of columns represented in each itemset. The Filter Rule, Filter Itemset, Show and Show Long Name are used to limit the rules or itemsets by the names contained in them, or to alter the way they are displayed. Luckily these controls aren’t hard to use or understand, because I had to really sift through the results to retrieve any relationships that were relevant to me. I noticed two relationships between IOStallReadMs and two other measures in the Rules tab and the one highlighted relationship between IOStall and IOPendingMsTicks in the Itemsets tab, but that was it. I suppose I could have found more relationships by sifting through the thousands of results on both tabs, but that would have taken far more time than with any of the other algorithms we’ve surveyed to data, where useful relationships were immediately apparent in the results. Every time I have used Association Rules in the past, I have had the same unpleasant sensation of having to mine the mining results themselves by hand, so to speak. I suppose this shouldn’t be that surprising, given that we can’t dig that deep with such a blunt instrument, which forces us to continue sifting through the results with the equivalent of our bare hands.

Figures 2 and 3: The Rules and Itemsets Tabs (click to enlarge)

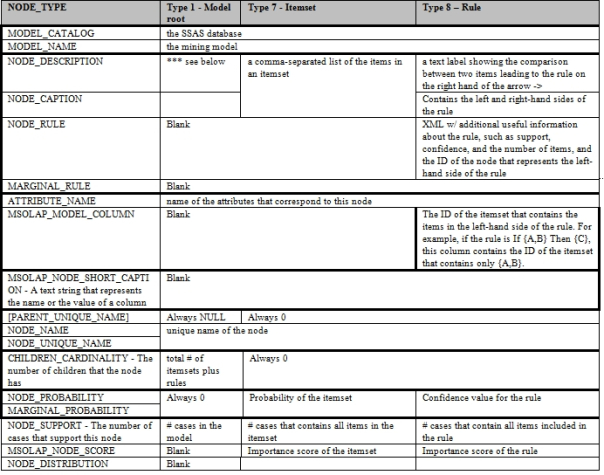

I will spare you the chore of having to sift through the long, unordered list of itemsets and rules that we can see in the Generic Content Viewer. It’s not torture to understand, like interpreting the innards of a neural net, but it doesn’t really tell us much more than we’ve already seen in the Rules and Itemsets tabs. There are only three types of nodes, two of which specify items or rules. The key node to look for is the model root at the top, since it has stats for the whole model, including ITEMSET_COUNT, RULE_COUNT, MIN_SUPPORT, MAX_SUPPORT, MIN_ITEMSET_SIZE, MAX_ITEMSET_SIZE, MIN_PROBABILITY, MAX_PROBABILITY, MIN_LIFT and MAX_LIFT. All of the minimums and maximums are those found for any itemset or rule in the whole model. For the sake of consistency I’ll provide a quick reference to the metadata returned by Association Rules as I have in past posts, although this would be most useful if we were parsing the results ourselves with some custom DMX queries. The nested NODE_DISTRIBUTION table isn’t difficult to interpret with this algorithm, because each row refers to a different item in an itemset or rule. VARIANCE is always 0, the ATTRIBUTE_NAME simply tells us the column name, ATTRIBUTE_VALUE gives us a label representing the state of the column and PROBABILITY is always equal to the NODE_PROBABILITY and MARGINAL_PROBABILITY. VALUETYPE tells us the Content type of the column, such as Discrete or Discretized.

Figure 4: Metadata for Association Rules (adapted from Books Online)

Overfitting is a big problem with Association Rules, as I found out the hard way long before I ever started this series of tutorials. These results are about par for the course. Of course, that begs the question of whether or not I’m using the algorithm the way it was designed to be used. The answer is that I haven’t, simply because I wanted to illustrate how much more clumsy the algorithm is when processing datasets of sizes similar to those we’ve already used. The documentation warns that large datasets with many distinct items or low thresholds for minimum itemset in the model parameters can be time-consuming and they’re not kidding. It also advises that “To minimize processing time and reduce the complexity of the itemsets, you might try grouping related items by categories before you analyze the data,” which might work. Yet in a sense, if we’re comparing it against other algorithms, that’s cheating, because we’re drastically reducing the granularity of our data in that way. Furthermore, if we already know enough our data to group them this way, then why run an algorithm that is designed to group items together? Yes, we can get more detail about specific items in this way, in order to link items together more accurately, but it’s a bit like digging a hole with your bare hands, then finishing the job with a spade. I’m not disputing that it can be useful to do this, only pointing out that the range of scenarios where this will be useful is quite limited.

That brings us to market basket analysis and recommendation engines, which are all the rage now in the E-commerce world (which, it should be remembered to keep our priorities in proportoin, represents just a tiny corner of the wide range of human problems that can be addressed by data mining). The two are basically flip sides of the same coin; one notices that customers tend to buy certain things together, while the other recommends products to customers because they are frequently found in the purchases of other customers. Long before I ever used SSDM, an apocryphal story had already seeped across the Internet to me of how data miners had discovered a curious market basket relationship: men who buy beer also tend to buy diapers with them. I don’t know if it’s true, but I’ve seen it repeatedly referenced in the origins of Association Rules now. I crack a smile every time I see recommendation engines on websites offer me products, because I have an inkling of what’s going on under the hood at those web servers. I used to snicker because the recommendations were sometimes patently ridiculous, but the technology must be improving, because certain websites now actually point out things I might buy or want that I previously didn’t know about. It’s entirely possible that they’re using some other algorithm, like neural nets or Sequence Clustering, to do jobs like this – I can make a good case that they might do a more efficient job of recommending better products, if designed correctly. Or they may be using one of the newer enhancements to Association Rules. Chance are, however, that they are using the original A Priori version of it, which was designed with one thing in mind: transaction analysis.

For that, you basically need two things: a parent table to uniquely identify transactions, plus a dependent table with a list of items for each. I now know that the major mistake I made in A Rickety Stairway to SQL Server Data Mining, Part 0.2: How to Dig Out of a Data Mining Cave-In was a common one: I assumed that nested tables in SSDM were exactly equivalent to relational tables related by a foreign key, but this isn’t precisely true. I missed a subtle difference which counts for a lot in the end. Two keys are actually required: a case key, which SSDM retrieves automatically from the relationships in your data source views (DSVs), and a nested key, which identifies a column in the dependent table that you want to analyze. In fact, it quite often makes sense to eliminate the case key from your model, if it has no information content other than to link the two tables together.[x] Since the data mining cave-in I mentioned in that post, I have had to resort to three denormalized views in order to reduce the outrageous processing times I encountered with my original DSV, which featured the tables corresponding to my six DMVs linked to a single RecordTable. I made the mistake of using RecordID, the case key in all six tables, as my nested key, which is probably what led to the mother of all performance bottlenecks. There were simply multiple RecordIDs for each unique row in the dependent tables, which the DM Team’s aforementioned book says can lead to “undefined” behavior. When you use nested tables, each column within them is stored on disk as a separate dimension, which may have been what caused the massive IO pressure I encountered, followed by massive page file usage and memory consumption. After this week’s trials on the three denormalized structures we’ve been using as substitutes, I was finally able to return to my original DSV schema and nest all six dependent tables the way I had planned at the beginning. At times, I departed slightly from the classic market basket setting because the data I collected wasn’t exactly suited to it. In many scenarios, we would have to do a lot of artificial aggregation of our data, if there were more than one distinct value for our nested key for each unique case key. I’m assuming, however, that it is safe to skip aggregation if our nested key has a 1:1 relationship with the case key. This is precisely the case with certain crucial measures in our data that I want to investigate further, such as WaitTypeID and CounterID. The same few hundred wait types and dozen or so performance counters I collected occurred in exactly the same proportion with each data collection job, so we don’t need to perform any smoothing of our data to use them. In a normal market basket analysis, a person may buy more than one of the same item in a single transaction, or not buy any at all, so I also experimented with aggregating on measures that didn’t have a 1:1 relationship with the case key.

Now that I was using the algorithm and the nested tables feature as directed, SSDM behaved better. Processing time took only a dozen minutes or so on a couple of occasions and the single resulting mining structure took up just 27 megs of disk space. Furthermore, contrary to the previous trials, SSDM began making use of multiple processor cores, possibly because there were multiple dimensions to process; the algorithm still could have made better use of multi-threading in certain phases (such as the “Started training algorithm phase,” where it went back to one core to stay). Yet not all of the problems with Association Rules were due to my own inexperience, because as I go to press with this post several weeks after writing it, I’m back to playing with the parameters in the hopes of finally generating some rules out of these nested tables. Forget the “training algorithm phase”; I’m still stuck on the “training amateur data miner phase.” The learning curve for this data mining method seems to be prohibitively high in comparison to the other eight SSDM algorithms, on top of the unavoidable performance and lack of utility in the results. This algorithm is indeed a simple, blunt instrument, but has more in common with a pick than a shovel, because its utility is further limited to a few narrow chores. I occasionally find uses for picks when digging, but not as often as shovels.[xi] For this reason, I would almost always choose Naïve Bayes or Linear Regression as a preliminary algorithm in any workflow over Association Rules, unless there was some specific matching need like market basket analysis. Don’t get me wrong, I’m not knocking Association Rules. I’m not knocking picks either. I just wouldn’t want to do any serious digging with either one. The analogy breaks down there, however, because a pick doesn’t require much of an investment in training the way Association Rules does. Due to its inner simplicity, I would have put it at the beginning of this tutorial series, but I wanted to stick with the concept of statistical building blocks, to make the series a little more comprehensible. If I wanted to go in order of the complexity of the parameters and learning curve, this algorithm would have come last. This tool’s a little bit of an oddball; it’s not even used as a building block for any of the other eight algorithms, nor is it founded on any of them. I’ve only included it at this point in the series because it’s vaguely similar to Clustering, which will be the topic of the next two tutorials. Clustering isn’t often compared to Association Rules, but there is some overlap in their functionality, in that one groups items by the commonality with which they are found together, while the other groups them by common properties. Clustering also implies a measure of distance between groups of items, which can be useful. Its more advanced cousin, Sequence Clustering, can also perform many of the same functions as Association Rules, while taking temporal ordering into account. This is merely one more function that this week’s algorithm can’t perform well. Association Rules is a one-dimensional tool in the world of multidimensional databases, but there are times when one dimension is all we need.

Piatetsky-Shapiro, Gregory, 1991, “Discovery, Analysis, and Presentation of Strong Rules,” pp. 229-248 in Piatetsky-Shapiro, Gregory and Frawley, William J. eds., Knowledge Discovery in Databases, MIT Press: Cambridge, Mass. Also see Agrawal, Rakesh; Imielinbski, Tomasz and Swami, Arun, 1993, “Mining Association Rules Between Sets of Items in Large Databases,” pp. 207-216 in Proceedings of the 1993 ACM SIGMOD International Conference on Management of Data. No publisher or city listed. Available online through the Penn State CiteSeerX website at http://citeseerx.ist.psu.edu/viewdoc/summary?doi=10.1.1.217.4132

[ii] Hájek, Petr; Havel, Ivan and Chytil, Metodej, 1966,”The GUHA Method of Automatic Hypotheses Determination,” pp. 293-308 in Computing 1. No volume or number given. Also see the Wikipedia article “Petr Hájek” at http://en.wikipedia.org/wiki/Petr_H%C3%A1jek

[iii] See the Wikipedia article “Association Rule Learning” at http://en.wikipedia.org/wiki/Association_rule_learning

[iv] I was originally going to post the link to the site where you can buy a crovel, just because I like to cite everything as much as possible out of habit, but thought that might be construed as a commercial endorsement. I wonder how many of them were returned after the Mayan Apocalypse fizzled in December…and how many of the buyers thought that purchasing a really expensive shovel might actually save them from Armageddon.

[v] p. 369, MacLennan, Jamie; Tang, ZhaoHui and Crivat, Bogdan, 2009, Data Mining with Microsoft SQL Server 2008. Wiley Publishing: Indianapolis

[vi] IBID., p. 368.

[vii] MacLennan, Jamie, 2006, “Usage of Association Rules,” posted Aug. 18, 2006 at the MSDN Data Mining Forum at http://social.msdn.microsoft.com/Forums/en/sqldatamining/thread/c90a1c08-bf97-41a0-ac7d-4e4450423e0a

[viii] MacLennan, Jamie, 2007, “The Mean of Using Association with Importance and Probability,” posted April 17, 2007 at the MSDN Data Mining Forum at http://social.msdn.microsoft.com/forums/en-US/sqldatamining/thread/c7616723-9f98-4ea5-9d7e-a20f7c528a4a/

[ix] See the MSDN Data Mining Forum discussions “Association algorithm – Importance of a rule,” started March 6, 2006 and “Association Rules – Importance,” started Feb. 14, 2008, at

[x] pp. 89-90 in MacLennan, et al. contains a much better explanation of how nested tables work than that found in Books Online.

[xi] Since I was in high school, I’ve done my share of digging shale out of a particular mountain in Western New York to keep it from falling on a relative’s rooftop, and haven’t gotten very far yet. Every so often, however, I drop my shovel and reach for a pick, which helps me shrink the pile of fallen shale a little bit faster. When I use Association Rules, I get the same sensation of straining at a mountainside, wishing I had heavier equipment.