NOTE: The reporting solution described in this article relies on use of two Red Gate tools, SQL Backup and SQL Multi Script.

**

As a SQL Sever DBA for a large company, I am a big proponent of acquiring or building applications that automate several of my team's daily tasks. To that end, I have spent laborious months building a DBA repository and reporting solution that combines SQL Server Reporting Services and SQL Server Integration Services to deliver daily reports about the status of the 80+ SQL Servers in my care. The solution pulls together information about SQL Agent jobs, backup successes and failures, user and role permissions, and myriad other types of reporting data that a DBA must analyze and react to, if necessary.

This solution has worked well for me in the past and continues to do so. However, it does have some limitations:

- It is reliant upon a successful execution of a growing SSIS package that now takes nearly 15 minutes to complete

- The package only executes three times a day. Data that is even 15 minutes old can be stale and lead to inaccuracies that could in turn cause bad decisions.

What I needed to complement my existing solution was the ability to fire off an ad-hoc query against multiple SQL Servers, simultaneously, and have one result set to review on the fly.

My company uses Red Gate's SQL Backup for their SQL Server backup solution, and I learned recently of a new Red Gate tool, SQL Multi Script, that appeared to offer the solution I needed to the problem stated in the previous paragraph. Being one to put new applications to the test and creatively combine technologies, I set about using these two tools together to provide me with data that would otherwise be unavailable.

Getting status information from SQL Backup

To begin with I should state what I know about SQL Backup version 5.0 and beyond. Much of this information was gleaned from Red Gate knowledgebase searches and some, well, let's just call it investigative research.

SQL Backup stores information about the status of backups in a SQL Compact Edition database as well as in log files on the file system of each SQL Server. The database, however, is not readily visible without the use of a stored procedure that Red Gate installs with SQL Backup as part of its server components. The stored procedure in question is:

MASTER..sqbdata

This handy stored procedure takes a SQL statement as a parameter and returns results about backup history, among other things. Figuring this out was not difficult. I simply opened the SQL Backup GUI, ran a report to show failed backups, and captured the TextData via SQL Profiler.

NOTE: You can view the Red Gate SQL Compact Edition database SDF file using SQL Server Management Studio by opening a connection to the Data.SDF file, typically located at: "Documents and Settings\All Users\Application Data\Red Gate\SQL Backup\Data\(LOCAL)".

The Red Gate SQL Backup 5.0 GUI does offer a central repository solution to provide much of the same information on failed jobs, via a limited set of reports. However, I realized that this required use of linked servers. I can only speak for myself, but I do not like linked servers, for the same reason that I do not like temp tables or cursors. Not that I do not use them all shamefully, but I try to limit their use whenever I can.

So, enter SQL Multi Script.

Getting backup information from multiple servers

My goal was to define a query that collected all the backup information I required, pass that query as a parameter to the sqbdata stored procedure, and then run it across multiple Servers using SQL Multi Script, getting back a single result set of data for review.

Let's examine the query that I put together that shows all Red Gate backup failures for a date range (the dates can obviously be modified manually, however the goal in this case is to view the previous day's backup history):

DECLARE @sqlstatement VARCHAR(1000)

SELECT @sqlstatement = 'SELECT backup_id,

backup_type,

backuphistory.dbname AS DatabaseName,

backuphistory.backup_start AS StartDate,

backuplog.sqlerrorcode AS SQLErrorCode,

backuplog.code AS BackupErrorCode,

backuplog.message AS Message

FROM backuplog

LEFT JOIN backuphistory ON backuplog.backup_id = backuphistory.id

WHERE backuplog.entry_type = 1

AND backuphistory.backup_start >= ''12/11/07''

AND backuphistory.backup_start <= ''12/12/07''

order by backup_id Desc

' ;

EXECUTE MASTER..sqbdata @sqlstatement

In a perfect world, of course, the DBA team would be notified instantly, via e-mail or page, if any backup failure occurs. SQL Backup has a built-in mail client that will send mail either on success or failure, but what if the e-mail address was setup incorrectly and notifications could not be sent? Yes, this does happen, especially when large configuration changes are occurring, or new servers have come online and, for whatever reason (mainly human error), the server's e-mail option or e-mail address is incorrectly set up.

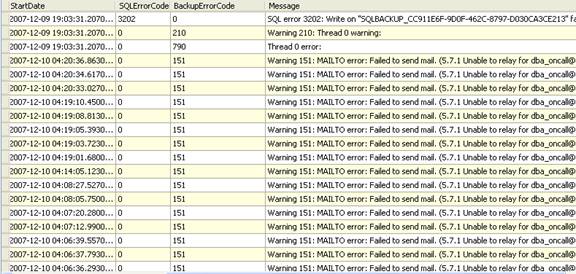

The following result from the above query demonstrates a real life scenario of an e-mail address that was not correctly configured. The result: no mail was sent. Unless scrupulously followed up by other means, such as manual discovery, this may have gone unnoticed.

Figure 1

I have, of course, omitted server names and legitimate e-mail addresses. However, the issue for this particular server was that the e-mail distribution list was setup without a fully qualified name. This query was run against 20 SQL Servers and the response time was under 5 seconds.

As you can see in Figure 1, other failures that may have occurred are all included, along with the SQLErrorCode and BackupErrorCode(the SQL Backup-specific error code), along with a description of the error so that the DBAs can do further analysis to determine the cause of the failure. Was it because of insufficient space? Was it due to network latency or I/O performance? All of this can be gleaned from the output, as the following error shows.

![]()

Figure 2

It is my responsibility to respond to the above scenarios to insure that notifications are being sent and that there is enough space to perform successful backups. Further, at a higher level, it provides information that I can use to justify purchasing more SAN space, for example, or inform my team that there is an issue with improperly configured e-mail addresses. It may also direct me to look at disk fragmentation, a known culprit for backup failures, especially over a LAN to a network share.

Speaking of Space

DBAs are often put in the hot seat when asked for specifics about their SQL infrastructure. Generally, an IT director will ask such questions as "how much space do we need to backup all of our SQL Server instances?" The question is answered either with a definitive response, based on assumptions that may be inaccurate, or with a request for more time to analyze. That analysis might involve manually interrogating the space used by each database, on each SQL Server, and calculating the levels of compression. This analysis will still result in estimation, not hard cold facts because some databases, like ones that store images for example, simply do not afford the same percentage of compression as others that might store OLTP data with lots of white space.

Alternatively, we can write another query that, combining SQL Backup and Multi Script, can in one execution tell you precisely how much space is required for each SQL Server, including compression. In this case, we want to know how much size is being consumed by each backup job. The date range of the job is important as we could have multiple backups of different type (full database or transaction logs) occurring multiple times per day. The query, again executed via SQL Backup's extended stored procedure, master..sqbdata, returns the required data in seconds:

DECLARE @sqlstatement VARCHAR(1000)

SELECT @sqlstatement = 'SELECT

backup_type,

count(dbname)as Database_Count,

sum(backuphistory.size) / 1000000 as Sum_Size_MB,

sum(backuphistory.compressed_size) /1000000 as Sum_Compressed_Size_MB

FROM backuplog

LEFT JOIN backuphistory ON backuplog.backup_id = backuphistory.id

LEFT JOIN backupfiles on backuphistory.id = backupfiles.backup_id

WHERE

backuplog.sqlerrorcode = 0 and backuplog.code = 0 AND

backuphistory.backup_start >= ''12/02/07'' AND

backuphistory.backup_start <= ''12/03/07'' GROUP BY backup_type

;'

--print @sqlstatement

EXECUTE MASTER..sqbdata @sqlstatement

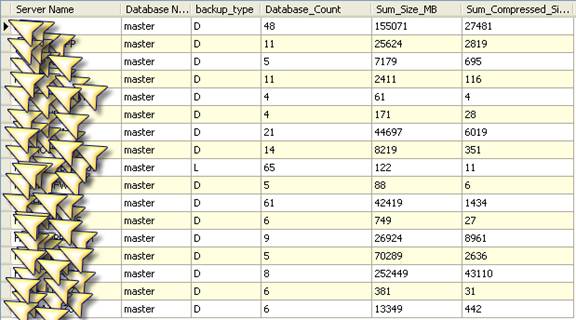

The results of the query, run against all of the servers that are backing up to a single UNC path, can be seen in Figure 3:

Figure 3

Those large shark-tooth arrows over the server names are my handiwork rather than Multi Script's, but suffice it to say that underneath are legitimate servers. The server names are not part of the query but are included in Multi Script's result set…which is a very beneficial feature. The result at this point can be exported to a CSV file and incorporated into other FFA applications (FFA is my very own acronym and means For Further Analysis).

For example, I could import the result into Excel and get a total of the compressed sizes for each database backup. This would tell me exactly how much room we need, nightly, for backups for all of the queried servers. Just like that you have your answer. There is no need to query each server individually and figure out what compression you expect to have. This is what you actually had and you can be confident in answering the question as follows:

"We need 94171 (94G) per night or 282513 (282G) for a three day rotation on our central backup disk".

I will say at this point that Multi Script is a new product and in the future I expect to see the ability to store the results to a table FFA. This would facilitate using CTEs, another of my favorite acronyms, FYI (another one).

The extra steps of selecting all and copying to Excel (no need to export to CSV this way) is only marginally inconvenient.

When are those damn backups running anyway?

Another useful piece of information is the time that the backups are occurring during the wee hours of the night, when DBAs pretend to sleep. Failures and application time outs can often occur because there is too much disk activity on the backup location. This is an unfortunate fact that can only be cured by adding additional resources, which is like pulling teeth in many cases. So, to at least know when the backups are happening, so that it is possible to alter the schedule of conflicting backup jobs, I put together the following query:

DECLARE @sqlstatement VARCHAR(1000)

SELECT @sqlstatement = 'SELECT

backuphistory.dbname,

convert(nvarchar(19),backup_start,100) as Backup_Start,

duration,

backuphistory.size,

speed,

threads,

backupfiles.name

FROM backuplog LEFT JOIN backuphistory ON backuplog.backup_id = backuphistory.id

LEFT JOIN backupfiles on backuphistory.id = backupfiles.backup_id

WHERE backuplog.sqlerrorcode = 0 and backuplog.code = 0 AND

backuphistory.backup_start >= ''12/02/07'' AND

backuphistory.backup_start <= ''12/03/07''

order by backuphistory.id Desc;'

--print @sqlstatement

EXECUTE MASTER..sqbdata @sqlstatement

There are a few things to note with SQL Compact Edition, which SQL Backup 5.0 and beyond uses. To convert the backup_start to text, so as to avoid getting the time in milliseconds, I had to use the nvarchar data type of 19 to get results in the proper date format, like:

"Dec 2 2007 11:08PM".

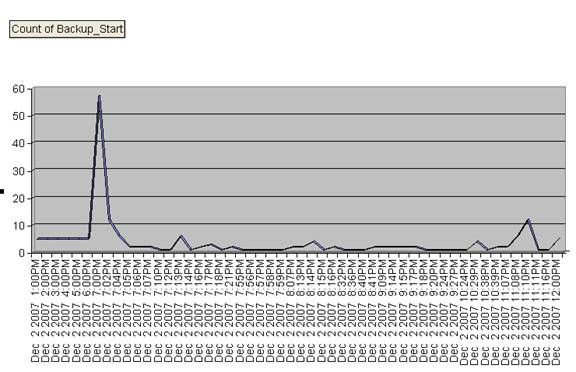

When the results are copied to Excel, in order to use the Pivot Table and Pivot Chart features, there was no issue with grouping. After all, as Figure 4 demonstrates, not all backups occur at the same time in milliseconds, but several occur at the same minute.

Figure 4

In this way, I was able to determine that most of the backups during the evening occur between 6:00PM and 7:00PM. Of course, it would be possible to do other interesting analysis in Excel, based on the result set of the query, like duration and file name. However, for this report, I only wanted a quick glance at the previous day's backups.

Summary

I've shown three ways to use Red Gate's SQL Backup stored procedure in conjunction with the new product, SQL Multi Script, in order to obtain useful, real-time information regarding database backups. Together they can open up a window that was formerly closed to DBAs, or at least clouded with uncertainty or speculation. As we all know, when questions are asked, direct answers are expected, and the faster you can deliver the answers the sooner you can get on to the other important tasks in your DBA life, like how do I kill all these users who are preventing log shipping from occurring on my Standby server? But that is a question I will investigate and report on at another time.

**

A note from Red Gate: If you would like to learn more about SQL Backup and SQL Multi Script, you can download your free trials and take part in a January special offer, receiving a free unlimited-user license for Multi Script when you buy a SQL Backup license.